
I’m sure that most of us have already come across deepfakes, whether in the form of viral memes or cleverly altered news clips. Whether we like it or not, they have become a common part of our online landscape.

Deepfakes are synthetic media such as videos, images, or audio that are created or modified using artificial intelligence to convincingly imitate real people or events. As AI advances, distinguishing fact from fiction will get harder, making deepfakes both a creative tool and a threat to truth and privacy.

In this article, I will cover:

- What deepfakes are

- The technology behind them

- Possible applications in different areas

- Ethical challenges surrounding deepfakes

- Possible ways to detect them

What Are Deepfakes?

Deepfakes are artificially generated or manipulated media created using deep learning models to produce highly realistic but synthetic representations of people, objects, or events.

While they are often used to mimic human faces, voices, or actions, deepfake techniques can also generate or alter objects, scenes, animals, or even entire environments in videos and images to create realistic but fake content, blurring the line between reality and fabrication across a wide range of contexts.

The origins of deepfakes can be traced back to major academic breakthroughs. In 2014, Ian Goodfellow and his colleagues published a pivotal paper that introduced Generative Adversarial Networks (GANs). GANs, which we will investigate in the next section, provide the foundation for generating synthetic content.

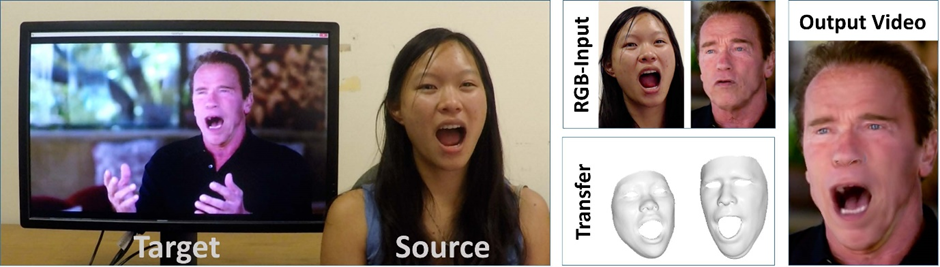

A further milestone came with the 2016 Face2Face paper by Justus Thies and his team, which focused on techniques for real-time face capture and reenactment. An example of its usage is demonstrated in the picture below, where a facial expression is transferred to the famous actor and politician Arnold Schwarzenegger.

Example of the combination of source and target pictures to transfer facial expressions using Face2Face. Source: Face2Face paper

The phenomenon gained widespread public attention at the end of 2017, when manipulated videos (often celebrity face swaps, especially in adult content) began circulating on Reddit, eventually leading social media platforms to ban harmful uses of deepfake techniques.

One of the most recognized examples emerged in 2018: a viral video featuring a digitally altered former President Barack Obama, created by BuzzFeed in collaboration with Jordan Peele, which highlighted the potential of the technology to reshape public discourse.

In 2020, South Park creators Trey Parker and Matt Stone created another notable piece of deepfake art: the pilot of the series "Sassy Justice" featured fictional characters who were “played by” deepfakes of Donald Trump and Mark Zuckerberg, among others.

How Do Deepfakes Work?

When we talk about deepfakes, we often focus on the end product. Let’s dig a bit deeper and try to understand how they are created—I will make sure you understand how this works even if you don’t have a technical background.

Discriminative and generative models

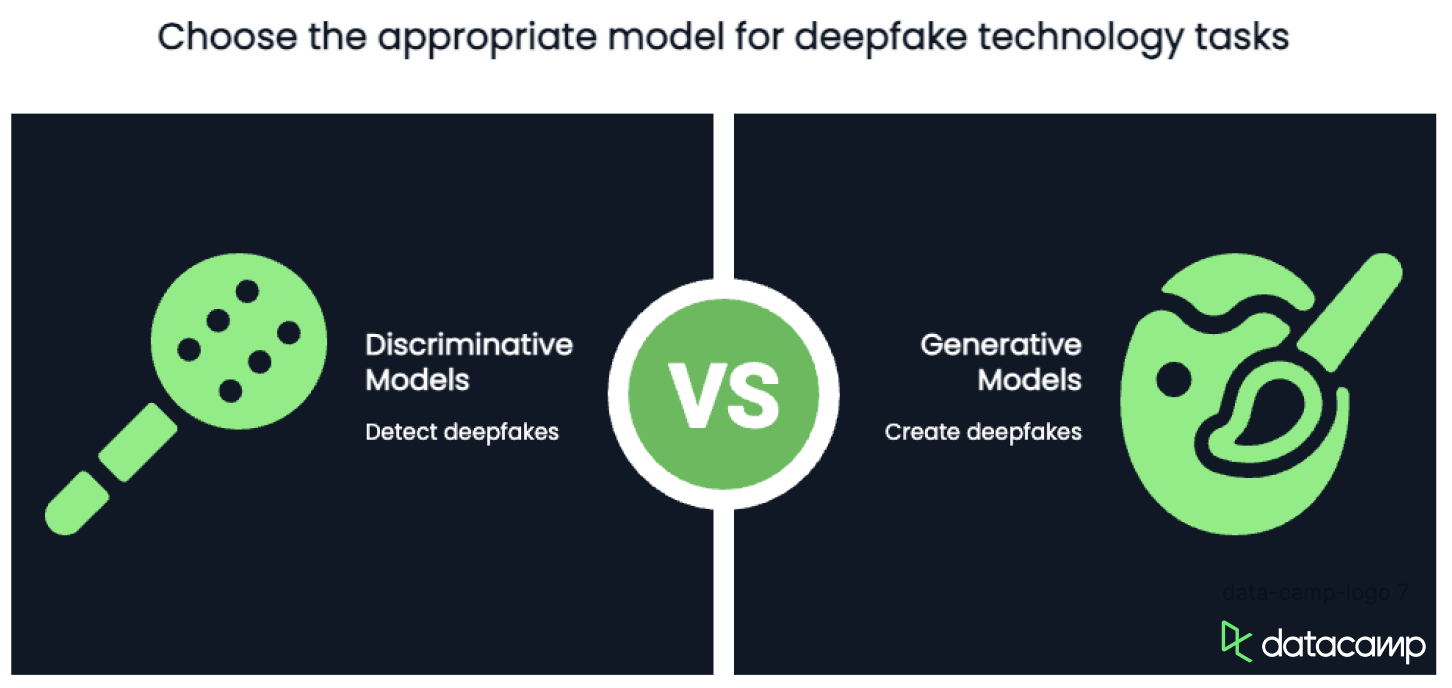

Discriminative models are used to tell the difference between things—for example, whether an image is real or fake. They look at the data and try to predict the correct label (like “real” or “deepfake”). Common model examples include logistic regression, decision trees, neural networks, and support vector machines. These models are great for tasks like detecting deepfakes, because they’re trained to spot signs that something has been changed or manipulated.

Generative models, on the other hand, try to understand how real data works so they can create new, similar data. They learn from lots of real examples—like pictures of real faces or clips of real voices—and then use that knowledge to generate fake but realistic-looking media. This is how deepfakes are made.

The main difference is what they’re used for: discriminative models detect, and generative models create. In deepfake technology, both are important—one to build convincing fakes, and the other to catch them.
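To make the contrast concrete, here is a minimal sketch in plain Python. It is a toy under stated assumptions, not how production deepfake systems work: each image is reduced to a single hypothetical measurement, the generative side is a simple Gaussian fit, and the discriminative side is a one-feature logistic regression. All function names and numbers are made up for illustration.

```python
import math
import random
import statistics

def fit_generative(samples):
    """Generative view: estimate how the data itself is distributed."""
    return statistics.mean(samples), statistics.stdev(samples)

def generate(mean, std, n, rng):
    """Draw new, synthetic values that resemble the training samples."""
    return [rng.gauss(mean, std) for _ in range(n)]

def fit_discriminative(xs, labels, lr=0.1, epochs=5000):
    """Discriminative view: learn only the boundary between two labels
    (1-D logistic regression trained with plain gradient descent)."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(xs, labels):
            p = 1.0 / (1.0 + math.exp(-(w * x + b)))
            grad_w += (p - y) * x
            grad_b += p - y
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b

def predict_fake(w, b, x):
    """Return True if the model rates x as more likely fake than real."""
    return 1.0 / (1.0 + math.exp(-(w * x + b))) > 0.5

# Hypothetical one-number measurement per image (assumed, not real data).
real_values = [0.9, 1.1, 1.0, 1.2, 0.8]
fake_values = [2.9, 3.1, 3.0, 3.2, 2.8]

# Generative: model the real values, then create new ones that look similar.
mean, std = fit_generative(real_values)
synthetic = generate(mean, std, 3, random.Random(0))

# Discriminative: learn to label a value as real (0) or fake (1).
w, b = fit_discriminative(real_values + fake_values, [0] * 5 + [1] * 5)
print(predict_fake(w, b, 1.0), predict_fake(w, b, 3.0))
```

The generative half never sees a label and still produces new data, while the discriminative half produces nothing new and only answers "real or fake?".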

To learn more about the difference, check out this blog on generative vs. discriminative models.

Generative adversarial networks

GANs are a type of generative AI that trains a generative and a discriminative model together. The relationship between both models is best described as friendly competition: while the generator creates synthetic content, the discriminator works to distinguish real media from fakes. This rivalry pushes the generator to improve its work until its outputs become nearly indistinguishable from authentic media.

For deepfakes, the role of the generative model is that of an art forger painting counterfeit artworks, while the discriminative model acts as an art critic scrutinizing each piece to spot the fakes. As they gain experience through exposure to more and more data, both the forger and the critic become better at their jobs, resulting in increasingly convincing reproductions and more accurate evaluations.

The process involves training models on extensive datasets to capture a subject’s unique features, like tone of voice and facial characteristics. By analyzing this data, the system gradually builds an accurate representation of the target, which it uses to mimic them convincingly.
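The rivalry between forger and critic can be expressed as two opposing scores. The sketch below is a simplified illustration, assuming the critic outputs a probability between 0 and 1 that a sample is real. The generator score uses the commonly used "non-saturating" variant of the GAN objective, and the function names are my own rather than taken from any library.

```python
import math

def discriminator_loss(real_probs, fake_probs):
    """Low when the critic rates real samples near 1 and fakes near 0.
    All probabilities must lie strictly between 0 and 1."""
    real_term = sum(-math.log(p) for p in real_probs) / len(real_probs)
    fake_term = sum(-math.log(1.0 - p) for p in fake_probs) / len(fake_probs)
    return real_term + fake_term

def generator_loss(fake_probs):
    """Low when the critic is fooled into rating fakes near 1."""
    return sum(-math.log(p) for p in fake_probs) / len(fake_probs)

# Early in training: the critic easily spots the forgeries.
early = generator_loss([0.05, 0.10])

# Later: the critic can no longer tell and outputs about 0.5 for everything.
late = generator_loss([0.5, 0.5])

print(early > late)  # the forger's loss shrinks as its fakes improve
```

During training, each model is updated to lower its own loss, which pushes the other model's loss up. That tug-of-war is what drives both sides to improve.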

Deepfake techniques

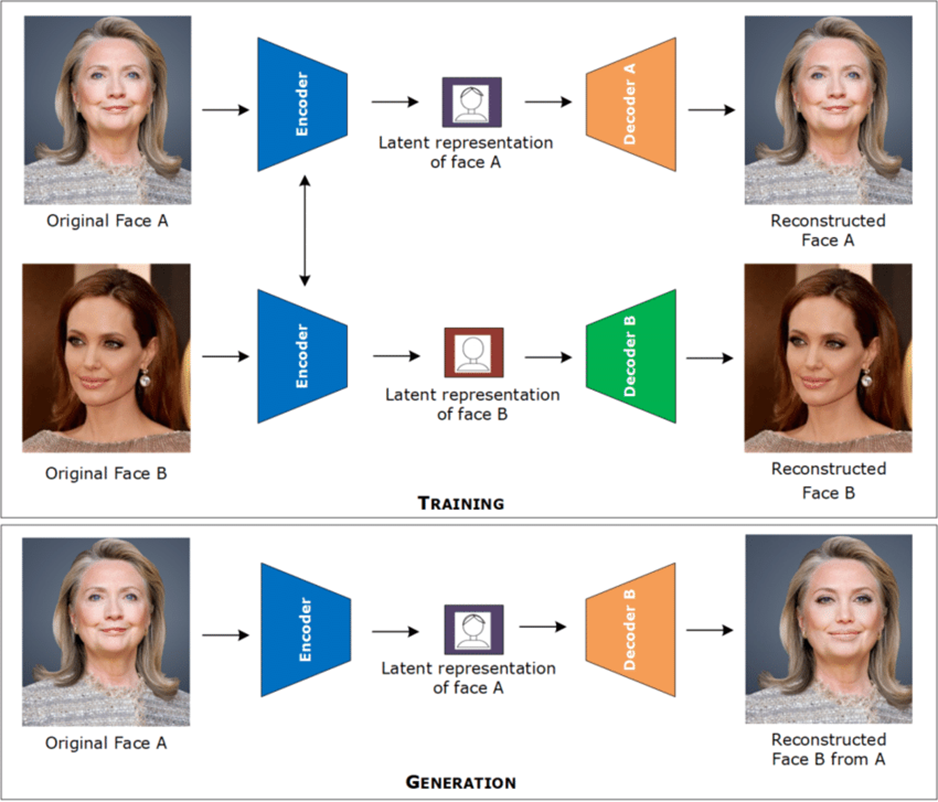

Common techniques for face-swapping often involve autoencoders and convolutional neural networks. These tools are trained on lots of images or video frames of a person to learn what their face looks like—its shape, expressions, and key features. The AI turns this information into a format it can understand, kind of like a digital summary of the person’s face.

Then, it uses that summary to copy facial expressions from one person and apply them to another, making the face-swap look realistic.

For lip syncing, neural networks analyze not only mouth movements but also audio inputs to align the video output accurately with the spoken words.

They are trained using labeled datasets, where each video frame is paired with an audio label indicating the exact spoken phoneme, allowing the model to learn the precise relationship between sound and visual articulation.

By analyzing these paired frames and labels together, the network learns to generate video in which the mouth movements match the sounds being spoken.
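The frame-and-phoneme pairing described above can be sketched as a small data-preparation step. This is only an illustration: the interval format, the `label_frames` helper, and the `"sil"` silence label are assumptions I made for the example, not the format of any particular lip-sync dataset.

```python
def label_frames(phoneme_intervals, fps, n_frames, silence="sil"):
    """Assign each video frame the phoneme spoken at the frame's midpoint.

    phoneme_intervals: list of (start_sec, end_sec, phoneme) tuples,
    assumed to be non-overlapping.
    """
    labels = []
    for i in range(n_frames):
        t = (i + 0.5) / fps  # timestamp at the middle of frame i
        label = silence
        for start, end, phoneme in phoneme_intervals:
            if start <= t < end:
                label = phoneme
                break
        labels.append(label)
    return labels

# Hypothetical alignment for the word "map" in a 10 fps clip.
alignment = [(0.0, 0.2, "m"), (0.2, 0.4, "ae"), (0.4, 0.5, "p")]
frame_labels = label_frames(alignment, fps=10, n_frames=6)
print(frame_labels)  # ['m', 'm', 'ae', 'ae', 'p', 'sil']
```

Each resulting (frame, label) pair is one training example from which a model can learn what the mouth looks like for a given sound.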

Finally, voice cloning can be achieved by training speech synthesis models using extensive audio datasets paired with corresponding transcripts. These datasets capture the unique characteristics of an individual's voice (such as pitch, tone, and cadence) in a structured format that the models can analyze.

By learning from these data and label pairs, the models generate synthetic speech that closely replicates the target's natural vocal qualities, convincingly imitating their voice.

Deepfake Applications

I am pretty sure most of us have seen synthetic videos featuring famous actors or public figures in humorous scenarios. But deepfakes can serve many other purposes, such as supporting education or enhancing accessibility.

Entertainment

Deepfakes offer filmmakers innovative tools, enabling them to make actors look younger or create dubbed versions of movies that closely match original performances.

One notable example of the former is Martin Scorsese’s “The Irishman” (2019), in which Robert De Niro, Al Pacino, and Joe Pesci appear decades younger for flashback scenes. This way, the story was able to flow naturally without the need for other performers to play the characters' younger selves. See an explanation of this process in this video.

Deepfakes also revolutionize the creation of digital characters by generating realistic avatars for video games and virtual reality. Being able to design lifelike characters that interact naturally with users in immersive digital environments paves the way for more interactive and personalized entertainment experiences. For instance, Epic Games' MetaHuman Creator enables developers to build photorealistic avatars that move and respond convincingly in real time.

The potential of deepfakes for producing satirical content is huge as well. While traditional imitations have long been part of a comedian's repertoire, deepfakes introduce a new level of detail and realism to impersonations. Artists like Snicklink and Kyle Dunnigan are already exploring this innovative approach, pushing the boundaries of digital satire.

Education and accessibility

One recent use of deepfake technology comes from BBC Maestro, which launched a writing course featuring a recreated Agatha Christie (nearly 50 years after her death).

Using AI-generated visuals and voice modeling based on her letters, interviews, and manuscripts, the course offers a version of Christie delivering lessons in her own words. It’s a clear example of how generative media can be used to bring historical figures into contemporary educational settings.

Deepfakes also promise to improve accessibility, especially for individuals with speech impairments. Developing synthetic voices that capture a person’s vocal characteristics from recordings can enable more natural communication.

A noteworthy example is “Project Revoice,” which helps individuals with Motor Neurone Disease (which often results in the loss of speech) preserve and recreate their natural voice by capturing voice recordings early on.

Ethical and Social Challenges of Deepfakes

Any discussion about deepfakes would be incomplete without addressing the challenges they pose. Among the most pressing concerns are the following:

- Trust erosion

- Fraudulent activity

- Privacy violations

Deepfakes have the potential to erode public trust by making it increasingly difficult to distinguish between real and manipulated content. When false information spreads, it can shape public opinion, damage reputations, or create confusion in critical situations.

In the worst cases, malicious actors can weaponize deepfakes to destabilize societies, fueling political divisions or even influencing elections by spreading fabricated statements or actions attributed to public figures.

The ability to convincingly impersonate someone through deepfake technology opens doors for fraudulent activities, such as financial scams or identity theft. Cybercriminals can use cloned voices to deceive individuals into transferring money or granting access to sensitive data. This raises concerns for industries like banking and cybersecurity, where traditional verification methods may no longer be sufficient.

One of the most disturbing applications of deepfakes is the creation of non-consensual content, often used to exploit or intimidate individuals. Victims may find themselves inserted into fabricated media that damages their reputation or invades their privacy. This highlights a major ethical issue, as the misuse of personal likenesses without consent can have long-term psychological and professional consequences for those affected.

Despite the growing risks, laws and regulations surrounding deepfake creation and distribution remain unclear in many countries. While some jurisdictions have introduced targeted legislation, enforcement remains difficult due to the rapid advancement of the technology. The lack of a clear legal framework makes it challenging to hold perpetrators accountable and raises questions about how to balance freedom of expression with the need to prevent harm.


How to Detect Deepfakes

As we have seen, distinguishing original from manipulated content is becoming a crucial skill in the era of AI. Let’s take a look at different approaches to tell them apart.

Human observation

Although manipulation is becoming increasingly difficult to recognize, there are telltale signs that the human eye can still catch. Most of us have probably heard of AI-generated pictures in which humans suddenly had four or six fingers, but other signs are more subtle than that.

For pictures, one hint of manipulation is unnatural blurring or inconsistent pixelation along the edges of faces or objects. This implies that the model did not cleanly separate the pixels that belong to the face from those that belong to the background, leaving a more or less obvious seam.

Another clue can be lighting or shadows that don’t match the surrounding environment, making the image appear artificially composed.

AI-generated picture illustrating a mismatch between the light source and shadows.

In videos, lighting issues can catch the eye even more easily if the direction of the light source changes abruptly between frames. We might also spot unusual blinking patterns or stiff facial expressions that appear mismatched to the surrounding context, resulting in a subtle “uncanny” feeling. In some cases, edges around the head or hair flicker, revealing that the video might have been manipulated.

Technical methods

Detecting deepfakes often requires a combination of AI-driven detection methods that analyze inconsistencies in facial features, motion, and other artifacts. Some examples of software specializing in the detection of manipulated media content include:

- Google SynthID

- Truepic

- DuckDuckGoose

- Intel FakeCatcher

- Reality Defender

- Sensity AI

- Deepware Scanner

Each tool takes its own approach, tailored to its primary use case. While the other tools listed focus on validating content that already exists, Google SynthID and Truepic can be considered preventive measures for identifying AI-generated content.

Google SynthID embeds imperceptible digital watermarks directly into AI-generated images to mark their origin, while Truepic uses cryptographic hashing and blockchain-based verification to authenticate media at the moment of capture.
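To give a feel for the hashing idea, here is a minimal sketch using Python's standard `hashlib` module. It is not Truepic's actual implementation, which I am not reproducing here. It only shows the general principle that a fingerprint recorded at capture time stops matching as soon as the file changes. The function names and the placeholder bytes are made up for illustration.

```python
import hashlib

def fingerprint(media_bytes):
    """Return a SHA-256 fingerprint (64 hex characters) of the media file."""
    return hashlib.sha256(media_bytes).hexdigest()

def matches_capture(media_bytes, recorded_fingerprint):
    """Check whether the media is byte-for-byte what was originally captured."""
    return fingerprint(media_bytes) == recorded_fingerprint

# Placeholder bytes standing in for a freshly captured image (assumption).
original = b"\x89PNG placeholder image bytes"
recorded = fingerprint(original)  # stored at the moment of capture

# Even a one-character edit produces a completely different fingerprint.
tampered = original.replace(b"image", b"imagE")

print(matches_capture(original, recorded))
print(matches_capture(tampered, recorded))
```

In a real system, the recorded fingerprint would also need to be protected, for example by signing it or storing it somewhere tamper-resistant. Otherwise an attacker could simply replace the fingerprint along with the file.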

DuckDuckGoose, which mainly analyzes facial inconsistencies, and Intel FakeCatcher, whose strength lies in the detection of biological signals like blood flow patterns, both target forensic and research applications.

The difference between Reality Defender, Sensity AI, and Deepware Scanner, which all use deep learning models to differentiate between real and fake content, lies in the target group: while the former two offer integration with enterprise security tools and are suited for large-scale corporate detection, Deepware Scanner is a more lightweight, consumer-focused tool for casual users.

Conclusion

Deepfakes represent both a technological advancement and a significant ethical challenge. While they open up exciting possibilities in entertainment, education, and accessibility, their misuse can lead to misinformation, fraud, and privacy violations.

In the future, we can expect deepfakes to become even more realistic, especially in virtual reality applications, while detection tools will try to keep pace with increasingly sophisticated manipulation techniques.

