The emergence of innovative AI models is reshaping the technology sector, with DeepSeek-R1—an open-source model from China that I’m sure you’ve heard something about—presenting a compelling challenge to established players like OpenAI's o1 series. This competition is driving remarkable advances in performance, cost efficiency, and accessibility of AI technologies.

It’s important to understand these models' capabilities and differences. Whether you're a newcomer exploring AI fundamentals through courses like Understanding Artificial Intelligence or a more experienced practitioner ready to go deeper with LLM Concepts, the choice between DeepSeek-R1 and OpenAI's o1 can significantly impact your projects.

This article provides a detailed comparison of these two leading models, examining their performance metrics, cost structures, safety protocols, and ideal use cases. Our analysis draws from extensive benchmarking data and practical applications, complemented by insights from our DeepSeek vs. ChatGPT guide and Fine-Tuning DeepSeek R1 tutorial.

Overview of the AI Models

Let’s start by reviewing what DeepSeek-R1 and OpenAI o1 are.

What is OpenAI's o1 Series?

The o1 series represents OpenAI's latest advancement, building upon the success of their previous models like ChatGPT and GPT-4. This new lineup features multiple variants—standard, mini, and pro—each designed to address different application requirements and use cases. The series employs a sophisticated combination of traditional supervised fine-tuning (SFT) with reinforcement learning, resulting in exceptional capabilities in complex problem-solving.

A distinctive feature of the o1 series is its advanced user interface options, which allow access to powerful AI capabilities. These interfaces provide intuitive tools for model fine-tuning, making the model accessible not only to experienced developers but also to non-technical users who need to adapt it for specific tasks. This approach significantly reduces the barrier to entry for organizations looking to implement AI solutions.

The o1 series also excels in its cross-platform compatibility. Whether deployed on cloud services or local infrastructure, the model maintains consistent performance. This versatility makes it particularly valuable in enterprise environments where diverse technology stacks are the norm and interoperability is important.

What is DeepSeek-R1?

DeepSeek-R1 represents a significant breakthrough in AI technology, developed by a Chinese AI company founded in 2023. The model uses an innovative training approach called R1-Zero, which sets it apart by relying solely on reinforcement learning combined with a sophisticated chain-of-thought reasoning process. This unique architecture enables remarkable self-correcting behavior and delivers a significant cost advantage. In fact, it is said to operate at about 5% of the cost of traditional models.

What makes DeepSeek-R1 particularly noteworthy is its open-source foundation, which creates unique opportunities for both developers and organizations. The model can be integrated into local ecosystems, allowing developers to customize and adapt it according to specific regional requirements or regulations.

Furthermore, DeepSeek-R1's open-source nature fosters a collaborative development environment. The model benefits from continuous community contributions, leading to rapid improvements and refinements based on real-world user feedback. This democratic approach to AI development not only accelerates innovation but also ensures the model remains responsive to evolving user needs and technical requirements.

Performance Comparison

Now, let’s compare the models across several key benchmarks.

General reasoning

The GPQA Diamond benchmark pushes the boundaries of AI reasoning capabilities by presenting complex, multi-step problems that require sophisticated understanding and contextual awareness. This benchmark is particularly valuable as it assesses an AI model's ability to handle challenging reasoning tasks that span multiple domains and knowledge areas.

- DeepSeek-R1: 71.5%

- OpenAI o1: 75.7%

- Key Insight: OpenAI's o1 maintains a notable advantage in this category, showcasing the effectiveness of its hybrid approach combining supervised fine-tuning with reinforcement learning. This architecture appears particularly well-suited for tasks requiring broader contextual understanding and cross-domain knowledge application.

Math ability

The MATH-500 benchmark sets a high bar for AI models, presenting complex mathematical problems that require sophisticated logical deduction and mathematical insight. This benchmark effectively simulates the type of advanced problem-solving typically associated with human mathematical experts, making it a useful metric for evaluating AI capabilities in quantitative reasoning.

- DeepSeek-R1: 97.3%

- OpenAI o1: 96.4%

- Key Insight: Both models demonstrate near human-expert level performance, with DeepSeek-R1 maintaining a slight edge. This advantage likely stems from its reinforcement learning architecture, which appears particularly capable at adapting to novel mathematical concepts and abstract problem-solving scenarios.

Coding skills

Codeforces represents one of the most rigorous assessments of programming capability in the AI space. As a competitive programming platform, it challenges models to produce efficient, accurate code under constraints that mirror real-world software development scenarios, making it particularly relevant for evaluating practical coding abilities.

- DeepSeek-R1: 96.3rd percentile (rating 2029)

- OpenAI o1: 96.6th percentile (rating 2061)

- Key Insight: OpenAI's o1 demonstrates marginally stronger performance in programming-related challenges. This advantage can be attributed to its extensive training across diverse programming tasks and coding scenarios, enabling better generalization across different programming challenges.
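
The three head-to-head results above can be collected into one small comparison. The following is a minimal sketch that only restates the scores quoted in this section; the names `SCORES` and `leader_and_margin` are illustrative and not part of any benchmark tooling.

```python
# Scores quoted in this section, in percent (the Codeforces figure is a percentile).
SCORES = {
    "GPQA Diamond": {"DeepSeek-R1": 71.5, "OpenAI o1": 75.7},
    "MATH-500": {"DeepSeek-R1": 97.3, "OpenAI o1": 96.4},
    "Codeforces": {"DeepSeek-R1": 96.3, "OpenAI o1": 96.6},
}


def leader_and_margin(benchmark):
    """Return the higher-scoring model and its lead in percentage points."""
    results = SCORES[benchmark]
    leader = max(results, key=results.get)
    trailer = min(results, key=results.get)
    # Round to one decimal to hide floating-point noise in the subtraction.
    margin = round(results[leader] - results[trailer], 1)
    return leader, margin


for benchmark in SCORES:
    leader, margin = leader_and_margin(benchmark)
    print(f"{benchmark}: {leader} leads by {margin} points")
```

Seen side by side, the gaps are small on MATH-500 and Codeforces (under one point each) and largest on GPQA Diamond.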

Additional benchmarks

Recent evaluations have introduced more sophisticated testing frameworks that probe the limits of AI capabilities. Two notable benchmarks in this category are AlpacaEval, which assesses conversational quality and coherence, and ArenaHard, which focuses on complex strategic problem-solving scenarios.

DeepSeek demonstrates notable improvements over GPT-4 Turbo in both AlpacaEval and ArenaHard evaluations, showcasing enhanced conversational coherence and strong capabilities in strategic thinking. While direct comparisons with o1 remain pending in these areas, DeepSeek-R1's performance reveals particular strengths in handling dynamic, unstructured problems that require high adaptability. This suggests the model could excel in situations where problem structures are fluid and conventional solutions may not apply.

DeepSeek vs. OpenAI. Source: DeepSeek API docs

Cost Comparison

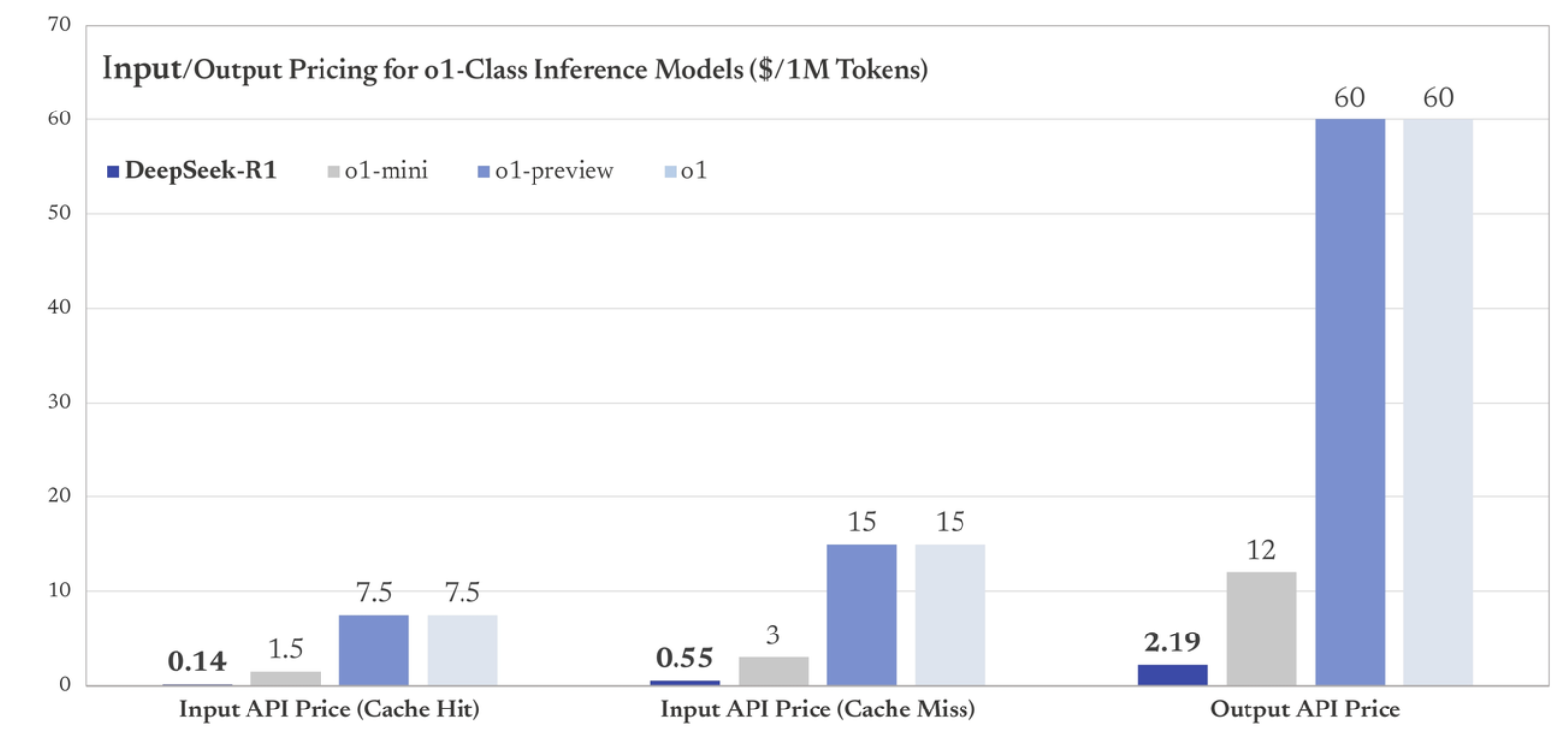

When evaluating AI models for deployment, understanding the different cost components is essential for budgeting and resource planning. Let's break down each pricing metric and compare the costs between DeepSeek-R1 and OpenAI's o1.

Cached input costs

Cached input refers to repeated or previously processed text that the model has already encountered, allowing for more efficient processing and lower costs. This is particularly beneficial for applications that frequently process similar content or maintain conversation history.

- DeepSeek-R1: $0.14 per 1M tokens

- OpenAI o1: $7.50 per 1M tokens

Input costs

Input costs cover the processing of new, unique text sent to the model for analysis or response generation. This includes user queries, documents for analysis, or any fresh content that requires the model's attention.

- DeepSeek-R1: $0.55 per 1M tokens

- OpenAI o1: $15.00 per 1M tokens

Output costs

Output costs apply to the text generated by the model in response to inputs. This includes everything from simple answers to complex analyses, code generation, or creative content production.

- DeepSeek-R1: $2.19 per 1M tokens

- OpenAI o1: $60.00 per 1M tokens
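
To see how the three price components combine, here is a minimal sketch that estimates the bill for a single workload using the prices listed above. The function name `estimate_cost` and the token volumes in the example are hypothetical, chosen only for illustration; real bills depend on actual usage and current pricing.

```python
# Listed prices in USD per 1M tokens, copied from the figures above.
PRICES = {
    "DeepSeek-R1": {"cached_input": 0.14, "input": 0.55, "output": 2.19},
    "OpenAI o1": {"cached_input": 7.50, "input": 15.00, "output": 60.00},
}


def estimate_cost(model, cached_input_tokens, input_tokens, output_tokens):
    """Return the estimated cost in USD for one workload."""
    price = PRICES[model]
    total = (
        cached_input_tokens * price["cached_input"]
        + input_tokens * price["input"]
        + output_tokens * price["output"]
    )
    # Prices are quoted per 1M tokens, so scale the total down.
    return total / 1_000_000


# Hypothetical workload: 20M cached input, 10M fresh input, 5M output tokens.
for model in PRICES:
    cost = estimate_cost(model, 20_000_000, 10_000_000, 5_000_000)
    print(f"{model}: ${cost:,.2f}")
```

For this assumed workload, the estimate comes to about $19.25 for DeepSeek-R1 and $600.00 for OpenAI o1.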

Cost analysis

The pricing comparison reveals a significant cost advantage for DeepSeek-R1 across all metrics. At the listed prices, DeepSeek-R1 costs roughly 2% of OpenAI o1's rate for cached input and just under 4% for fresh input and output, which makes it a compelling option for large-scale deployments and cost-sensitive projects. This dramatic price difference could be particularly impactful for organizations running extensive AI operations or startups working with limited budgets.
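
The size of the gap can be checked directly from the listed prices, since the exact ratio differs by token type. The snippet below is a quick sketch of that arithmetic; `price_ratio_percent` is an illustrative helper name, not part of either provider's tooling.

```python
# Listed prices in USD per 1M tokens, copied from the sections above.
PRICES = {
    "DeepSeek-R1": {"cached_input": 0.14, "input": 0.55, "output": 2.19},
    "OpenAI o1": {"cached_input": 7.50, "input": 15.00, "output": 60.00},
}


def price_ratio_percent(category):
    """Return DeepSeek-R1's price as a percentage of OpenAI o1's price."""
    return 100 * PRICES["DeepSeek-R1"][category] / PRICES["OpenAI o1"][category]


for category in ("cached_input", "input", "output"):
    print(f"{category}: {price_ratio_percent(category):.2f}% of o1's price")
```

All three ratios land between roughly 2% and 4%, with cached input showing the widest gap.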

Safety and Security Considerations

OpenAI's o1 and DeepSeek-R1 approach safety and security considerations through distinct frameworks, each with its own advantages.

OpenAI’s protective architecture and controls

OpenAI has established a comprehensive security infrastructure for its o1 series, built on three key pillars. The first is its safety protocol system, which incorporates external red-teaming: independent security experts actively test the model for vulnerabilities. The second is a set of jailbreak resistance mechanisms that guard against attempts to manipulate the model into bypassing its safeguards. The third consists of bias mitigation strategies that help ensure fair and balanced model outputs.

Beyond these technical measures, OpenAI has fortified its commitment to security through formal partnerships with international AI safety institutes. These collaborations facilitate continuous monitoring and improvement of safety standards, while also contributing to the development of industry-wide best practices for AI security.

DeepSeek’s open-source security and compliance

DeepSeek-R1 takes a distinctively transparent approach to security, leveraging its open-source nature as a primary security feature. This transparency enables global developer communities to actively participate in security verification, creating a collaborative environment for identifying and addressing vulnerabilities.

The model's security framework is built around three core elements:

- Community-driven verification processes that leverage worldwide developer expertise

- Self-correcting mechanisms powered by reinforcement learning, which help align the model's behavior with human preferences

- Strict content guidelines that comply with Chinese regulations, providing clear frameworks for deployment and operation

Ongoing security development

Both models continue to evolve their security measures through different but effective approaches. OpenAI maintains its security edge through systematic updates based on user feedback and partner insights, while DeepSeek benefits from rapid community-driven security improvements. I'm sure that both models will continue to strengthen their security profiles, albeit through different mechanisms.

Choosing the Right Model

Selecting the appropriate AI model for your project requires careful consideration of various factors, including technical requirements, budget constraints, and operational needs. Let's examine specific use cases where each model excels.

DeepSeek-R1: Optimal use cases

DeepSeek-R1 emerges as the preferred choice for several specific scenarios. First, it offers exceptional value for budget-constrained projects. Its significantly lower cost structure (again, it's operating at roughly 5% of traditional model costs) makes it particularly attractive for startups and research projects.

The model's open-source foundation provides unique advantages for teams requiring customization flexibility. Organizations can modify and adapt the model to meet specific requirements, integrate it with existing systems, or optimize it for particular cases. This flexibility is especially valuable for companies with unique technical requirements or those operating in specialized domains.

I was particularly impressed with DeepSeek-R1's performance in math (97.3% on MATH-500), which makes it an excellent choice for applications involving complex calculations, statistical analysis, or mathematical modeling. This strength can be particularly valuable in fields such as financial modeling, scientific research, or engineering applications.

OpenAI's o1: Best-fit scenarios

OpenAI's o1 series is particularly well-suited for enterprise environments where reliability and security are top priorities. Its comprehensive safety protocols and compliance measures make it ideal for organizations operating in regulated industries or handling sensitive information.

The model excels in programming tasks and complex reasoning scenarios, as evidenced by its strong performance on Codeforces (2061 rating) and GPQA Diamond (75.7%). This makes it particularly valuable for software development teams, especially those working on complex applications.

For organizations where proven track records and extensive testing are non-negotiable requirements, o1 provides the assurance of rigorous validation and testing protocols. This makes it especially suitable for mission-critical applications where reliability and predictable performance are essential.

The Broader Implications and Future Trends

The AI race

The emergence of models like DeepSeek-R1 and OpenAI's o1 signals a transformative shift in how AI capabilities are being delivered to users. The convergence of open-source flexibility with enterprise-grade performance is creating new possibilities for AI deployment and democratizing access to advanced AI capabilities.

This technological convergence is reshaping how organizations approach AI implementation. While traditional enterprise solutions focused primarily on performance and security, the new generation of models enables organizations to optimize for specific needs—whether that's cost efficiency, customization flexibility, or specialized performance in areas like mathematical reasoning.

The industry impact extends beyond just technical capabilities. These developments are fostering new approaches to AI deployment, where organizations can mix and match different models based on specific use cases. For instance, a company might use o1 for sensitive enterprise applications while leveraging DeepSeek-R1's cost advantages for large-scale data processing tasks. This hybrid approach represents a mature evolution in how organizations can practically implement AI solutions.
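
The mix-and-match idea can be sketched as a simple routing rule. The following is a hypothetical illustration only: the `Task` fields, the `LARGE_BATCH_TOKENS` threshold, and the default choice are assumptions made for this example, and the returned strings are plain labels rather than API model identifiers.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    handles_sensitive_data: bool
    expected_tokens: int


# Hypothetical cutoff above which per-token cost dominates the decision.
LARGE_BATCH_TOKENS = 50_000_000


def choose_model(task, default="OpenAI o1"):
    """Pick a model label for a task using the two rules described above."""
    if task.handles_sensitive_data:
        # Sensitive enterprise applications go to o1.
        return "OpenAI o1"
    if task.expected_tokens >= LARGE_BATCH_TOKENS:
        # Large-scale processing goes to DeepSeek-R1 for its lower cost.
        return "DeepSeek-R1"
    return default


tasks = [
    Task("contract review", handles_sensitive_data=True, expected_tokens=2_000_000),
    Task("log summarization", handles_sensitive_data=False, expected_tokens=400_000_000),
]
for task in tasks:
    print(f"{task.name} -> {choose_model(task)}")
```

In practice, a team would replace these two rules with its own criteria, such as compliance requirements, latency targets, or benchmark results on its own data.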

Implications for AI professionals

The current state of AI development creates unique opportunities and challenges. Success increasingly requires expertise in both open-source and proprietary systems, as organizations often use a mix of both.

Beyond technical skills, professionals are finding value in developing cross-disciplinary competencies. There’s a big need for people who understand the intersection of AI with business strategy, who can, in other words, integrate AI solutions into business contexts and industries.

The synthesis of technical expertise with business acumen will likely define the next generation of AI professionals. The key will be your ability to bridge the gap between knowing the latest cutting-edge technology and making it actionable in real-world applications. That ability will position you to drive innovation and value creation wherever you work.

Conclusion

We've explored how DeepSeek-R1 and OpenAI's o1 models represent different approaches to advancing AI capabilities. DeepSeek-R1's innovative R1-Zero training method, combined with its reinforcement learning approach, delivers cost efficiency and self-correcting behavior.

OpenAI's o1 series, on the other hand, builds upon its established ecosystem, integrating supervised fine-tuning with reinforcement learning to deliver great performance. Its variants—standard, mini, and pro—provide flexibility for different use cases.

My belief is that both models offer advantages. DeepSeek's community-driven development approach and alignment with Chinese regulatory standards open new possibilities for customization and regional deployment. Meanwhile, OpenAI's network of global safety collaborations and proven track record provide enterprise-grade reliability.

To stay current with these advancing technologies, consider exploring resources such as our Generative AI Concepts for foundational knowledge, Working with the OpenAI API to learn implementation skills, and AI Ethics for understanding the important aspects of responsible AI development. Also, if you are interested in the coding aspects, our Introduction to Deep Learning with PyTorch offers hands-on experience with neural networks and model development.

As the AI field continues to evolve, success will depend on maintaining a balanced understanding of both open-source and proprietary solutions. It also depends on staying current, which is why I recommend signing up for DataCamp’s flagship (and free) Radar: Skills Edition conference on March 27, 2025. Make sure to register today.

As an adept professional in Data Science, Machine Learning, and Generative AI, Vinod dedicates himself to sharing knowledge and empowering aspiring data scientists to succeed in this dynamic field.

DeepSeek vs. OpenAI FAQs

What's the main difference between DeepSeek-R1 and OpenAI's o1 in terms of cost?

DeepSeek-R1 costs a small fraction of what OpenAI o1 does: output tokens are priced at $2.19 vs. $60.00 per 1M tokens, respectively, which puts DeepSeek-R1 at under 4% of o1's rate.

Which model should I choose for enterprise-level applications requiring strict security compliance?

OpenAI's o1 is better suited due to its robust safety protocols, external red-teaming, and formal agreements with international AI safety institutes.

How do their mathematical reasoning capabilities compare?

Both models demonstrate near human-expert level performance, with DeepSeek-R1 scoring slightly higher at 97.3% on MATH-500 compared to OpenAI o1's 96.4%.

Can I customize DeepSeek-R1 for my specific needs?

Yes, DeepSeek-R1's open-source nature allows for extensive customization and adaptation to specific requirements or regional regulations.

Which model performs better for coding tasks?

OpenAI's o1 has a slight edge in programming with a Codeforces rating of 2061 compared to DeepSeek-R1's 2029.

What's the R1-Zero training method used by DeepSeek?

R1-Zero is DeepSeek's innovative training approach that relies solely on reinforcement learning combined with chain-of-thought reasoning, enabling strong self-correcting behavior.