
The release of DeepSeek-R1 shook the AI industry, causing significant stock drops for NVIDIA and major American AI companies.

DeepSeek has now introduced Janus-Pro, its latest multimodal model designed for text and image generation. Like R1, Janus-Pro is open source and delivers strong benchmark results. Simply put, it’s a serious competitor to OpenAI’s DALL-E 3 and Stability AI’s Stable Diffusion in the multimodal AI space.

In this blog, I’ll explain what Janus-Pro is, what multimodal AI means, how Janus-Pro works, and how to access it. I’ll also compare it with DALL-E 3 on a few prompts.

What Is Janus-Pro?

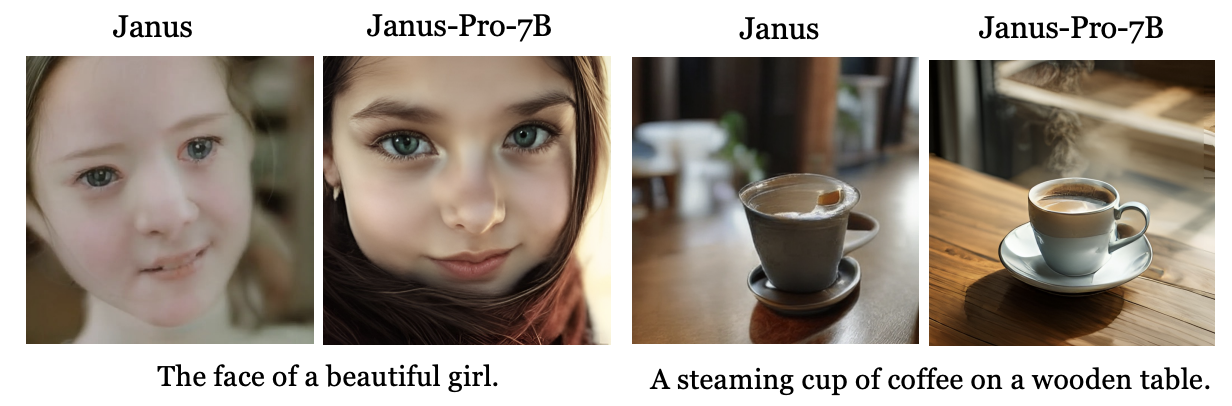

Janus-Pro is DeepSeek’s newest multimodal AI model, designed to handle tasks involving both text and images. It introduces several improvements over the original Janus model, including better training strategies, larger datasets, and scaled model sizes (available in 1B and 7B parameter versions).

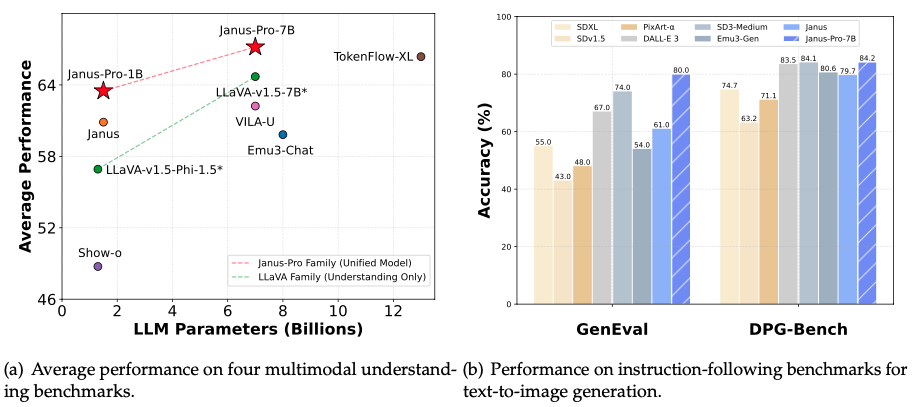

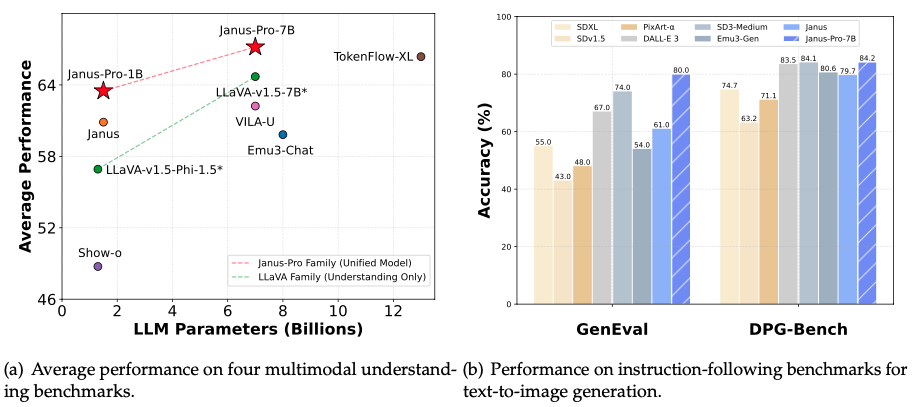

Janus vs. Janus-Pro-7B. Source: Janus-Pro’s release paper.

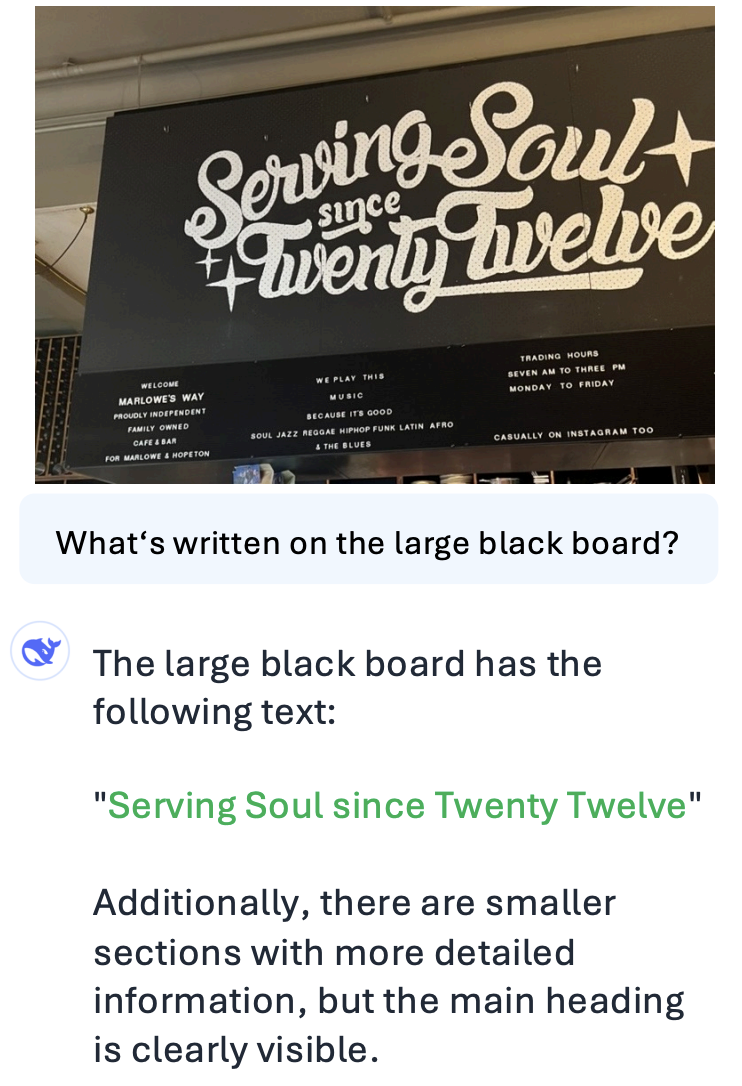

Unlike AI models that specialize in a single type of input, multimodal AI models like Janus-Pro are built to understand and connect text and images. For example, you can upload an image and ask a text-based question about it, such as identifying objects in the scene, interpreting text within the image, or analyzing its context.

Text recognition with Janus-Pro. Source: Janus-Pro’s release paper.

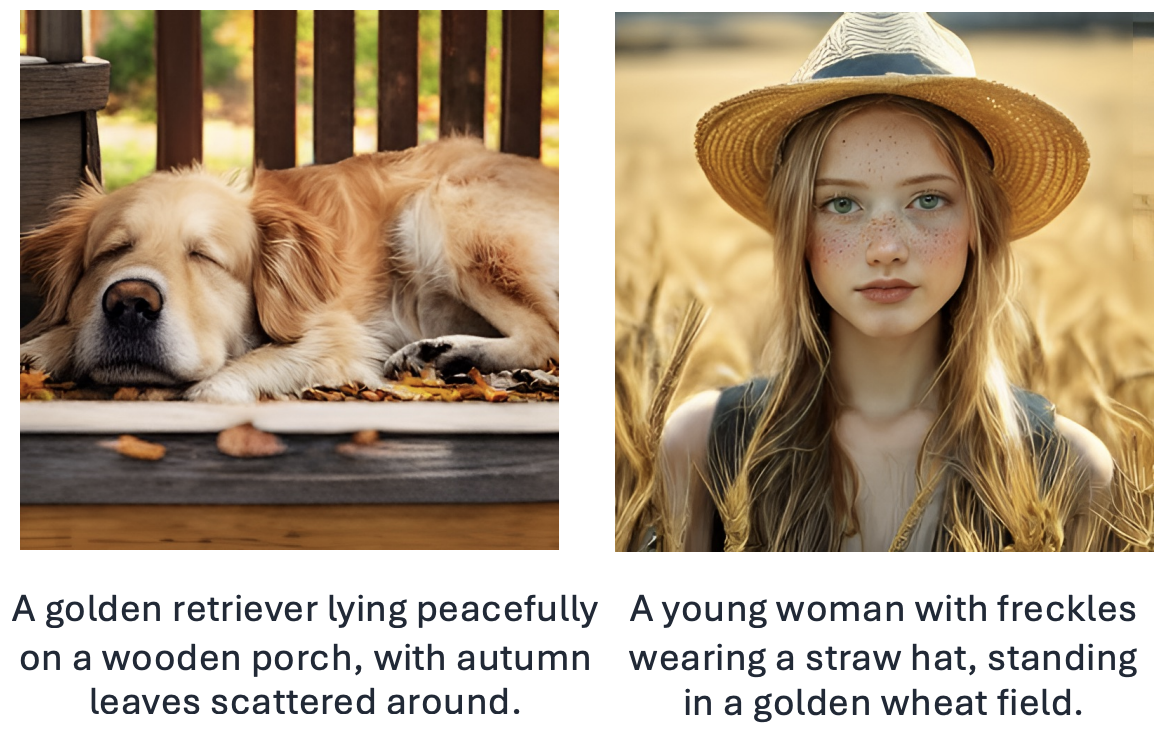

Janus-Pro can generate high-quality images from text prompts, such as creating detailed artwork, product designs, or realistic visualizations based on specific instructions. It can also analyze visual inputs, like identifying objects in a photo, reading and interpreting text within an image, or answering questions about a chart or diagram.

Text-to-image generation with Janus-Pro. Source: Janus-Pro’s release paper.

Janus-Pro comes in two sizes, 1B and 7B parameters, so you can choose the one that fits your hardware.

How Does Janus-Pro Work?

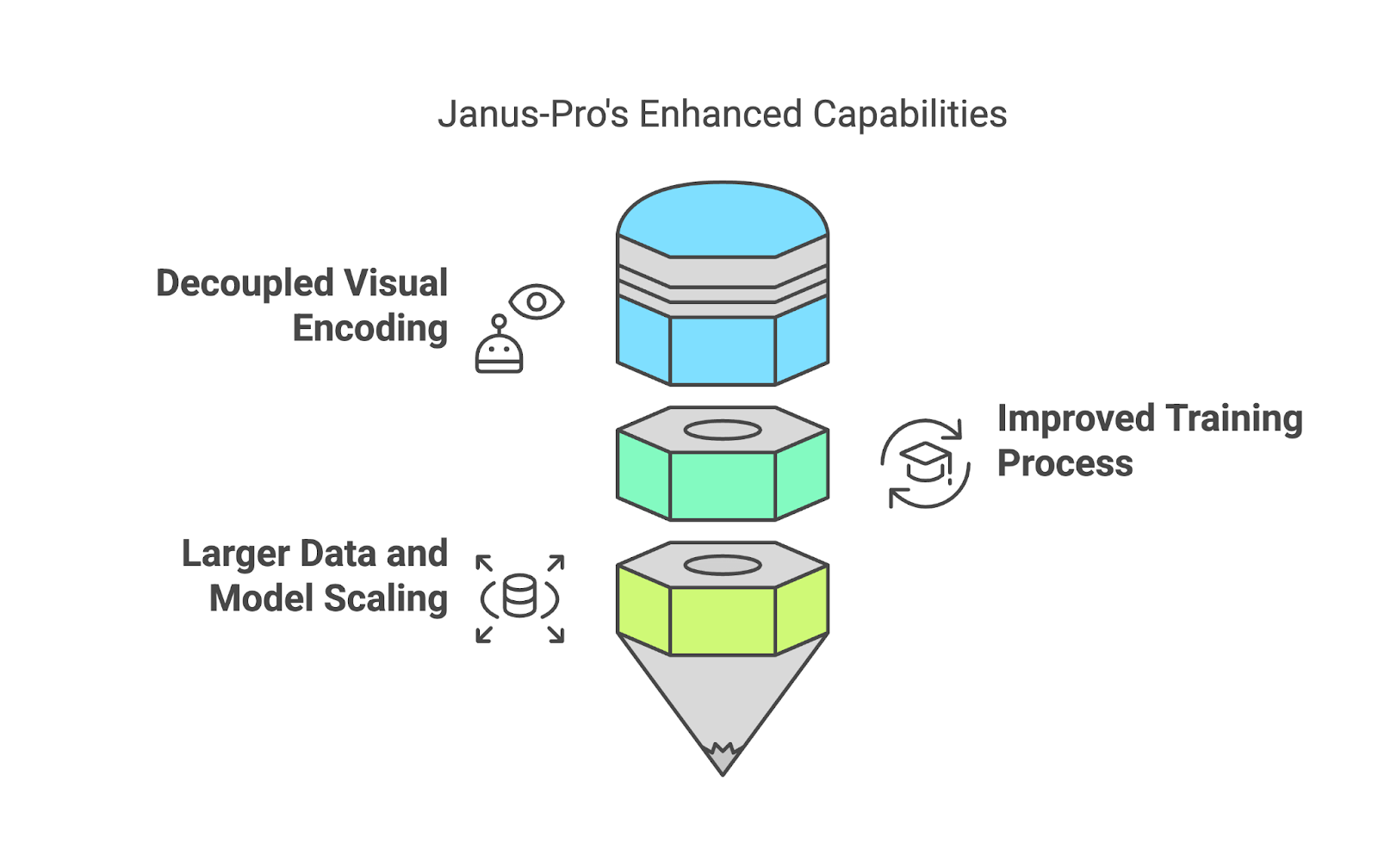

Janus-Pro is designed both to understand and to generate text and images, and it gets there through several targeted improvements over its predecessor. Let me walk through its key components in a way that’s easy to digest.

Decoupled visual encoding

One of the standout features of Janus-Pro is that it doesn’t use a single system to handle both interpreting and creating visuals. Instead, it separates these processes (decoupled visual encoding).

For example, when you upload an image and ask a question about it, Janus-Pro uses a specialized encoder to “read” the image and extract what’s important. When you ask it to create an image from a text description, it switches to a separate pathway built for generating visuals. Both pathways feed the same underlying model, but keeping them apart lets each one specialize, avoiding the compromises that arise when a single encoder has to serve both tasks.
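To make the routing idea concrete, here is a toy sketch. It is not DeepSeek’s implementation: the class and function names are hypothetical, and the “encoders” just return labeled placeholder strings. The only thing it mirrors is the structure: understanding and generation go through separate visual pathways that feed one shared model. (The Janus papers describe a SigLIP encoder on the understanding side and a VQ image tokenizer on the generation side.)

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Request:
    """Hypothetical request: a question about an image, or an image prompt."""
    task: str            # "understand" or "generate"
    text: str
    image: bytes = b""   # only used on the understanding path


def understanding_encoder(image: bytes) -> List[str]:
    # Placeholder for the semantic encoder (SigLIP in the Janus papers)
    # that turns an input image into features the model can "read".
    return [f"semantic_feature_{i}" for i in range(3)]


def generation_prefix() -> List[str]:
    # Placeholder for the generation pathway (a VQ tokenizer in the Janus papers):
    # after this marker, the model would emit discrete image tokens.
    return ["<begin_image_generation>"]


def shared_transformer(sequence: List[str]) -> str:
    # Stand-in for the single autoregressive transformer both paths share.
    return " | ".join(sequence)


def route(request: Request) -> str:
    if request.task == "understand":
        # Understanding path: encode the image first, then append the question.
        seq = understanding_encoder(request.image) + [request.text]
    elif request.task == "generate":
        # Generation path: the prompt comes first, then image tokens would follow.
        seq = [request.text] + generation_prefix()
    else:
        raise ValueError(f"unknown task: {request.task}")
    return shared_transformer(seq)


print(route(Request("understand", "What is in this chart?", b"...")))
# semantic_feature_0 | semantic_feature_1 | semantic_feature_2 | What is in this chart?
print(route(Request("generate", "A modern office space")))
# A modern office space | <begin_image_generation>
```

The point of the split is that neither pathway has to compromise: the understanding encoder can focus on semantics, while the generation side can focus on producing tokens that decode into pixels.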

Improved training process

Janus-Pro’s training is divided into three stages, each designed to refine its capabilities:

- Learning visual basics: The model begins by training on datasets like ImageNet, focusing on recognizing objects, text, and visual patterns. This stage was extended in Janus-Pro, giving the model more time to model pixel dependencies and process visual data effectively.

- Connecting visuals and text: In this phase, Janus-Pro integrates text and visuals by training on high-quality, descriptive text-to-image datasets. Unlike the original Janus, which spent part of this stage on ImageNet data, Janus-Pro drops ImageNet here and trains directly on text-to-image data with dense descriptions, which the authors found more efficient.

- Final fine-tuning: In this supervised fine-tuning stage, Janus-Pro changes the ratio of multimodal understanding data, text-only data, and text-to-image data from 7:3:10 (used in the original Janus) to 5:1:4.
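Normalizing each ratio by its total makes the shift easier to read. The short calculation below uses only the two ratios quoted above: multimodal understanding data goes from 35% to 50% of the fine-tuning mix, text-only data from 15% to 10%, and text-to-image data from 50% to 40%.

```python
from fractions import Fraction


def ratio_to_shares(ratio):
    """Convert a data-mixing ratio like (7, 3, 10) into exact fractional shares."""
    total = sum(ratio)
    return [Fraction(part, total) for part in ratio]


labels = ("multimodal", "text-only", "text-to-image")
janus = (7, 3, 10)     # fine-tuning ratio in the original Janus
janus_pro = (5, 1, 4)  # fine-tuning ratio in Janus-Pro

for name, ratio in (("Janus", janus), ("Janus-Pro", janus_pro)):
    shares = ratio_to_shares(ratio)
    summary = ", ".join(f"{label} {float(s):.0%}" for label, s in zip(labels, shares))
    print(f"{name}: {summary}")
# Janus: multimodal 35%, text-only 15%, text-to-image 50%
# Janus-Pro: multimodal 50%, text-only 10%, text-to-image 40%
```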

Larger data and model scaling

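Janus-Pro also scales up its training data. For text-to-image generation, it uses real-world and synthetic data in equal parts, and the authors report that the synthetic data improves both the visual quality of generated images and the stability of generation. On the model side, it scales up to 7B parameters in its largest version.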
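One simple way to picture an equal mix of real and synthetic data is at the batch level. The sketch below is hypothetical: the helper name and sample names are placeholders, and the article does not describe how DeepSeek actually interleaves the two sources. It only shows what a 1:1 mix means in practice.

```python
import random
from typing import List, Sequence


def mix_equal_parts(real: Sequence[str], synthetic: Sequence[str],
                    batch_size: int, seed: int = 0) -> List[str]:
    """Hypothetical helper: draw a batch that is half real, half synthetic.

    For odd batch sizes, the extra sample comes from the synthetic pool.
    """
    rng = random.Random(seed)
    n_real = batch_size // 2
    n_synth = batch_size - n_real
    batch = rng.sample(list(real), n_real) + rng.sample(list(synthetic), n_synth)
    rng.shuffle(batch)  # avoid a fixed real-then-synthetic order within the batch
    return batch


real_pool = [f"real_{i}" for i in range(100)]
synthetic_pool = [f"synthetic_{i}" for i in range(100)]
batch = mix_equal_parts(real_pool, synthetic_pool, batch_size=8)
print(sum(x.startswith("real_") for x in batch), "real /",
      sum(x.startswith("synthetic_") for x in batch), "synthetic")
# 4 real / 4 synthetic
```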

Janus-Pro vs. DALL-E 3

In this section, I’ll compare Janus-Pro-7B and DALL-E 3 on multimodal understanding and text-to-image generation. The goal is to give you a practical sense of these models in action; a handful of prompts is no substitute for systematic benchmarks.

Multimodal understanding

I uploaded the image below and asked both Janus-Pro-7B and DALL-E 3:

“In one sentence, what's the main takeaway of this image?”

Source: Janus-Pro’s GitHub page.

Here are the responses:

Janus-Pro: The main takeaway of this image is that the Janus model achieves the highest average performance on multimodal understanding tasks, while also demonstrating high accuracy on instruction-following benchmarks for text-to-image generation.

DALL-E 3: The Janus-Pro models, particularly Janus-Pro-7B, outperform other multimodal models in both understanding and instruction-following benchmarks, demonstrating strong capabilities across text and image tasks.

While both models summarize the graph well, Janus-Pro incorrectly refers to “the Janus model,” failing to distinguish it from Janus-Pro. DALL-E 3, however, specifically identifies “Janus-Pro models, particularly Janus-Pro-7B,” showing better contextual understanding.

This is just an isolated example, so we can’t draw any general conclusions.

Text-to-image generation

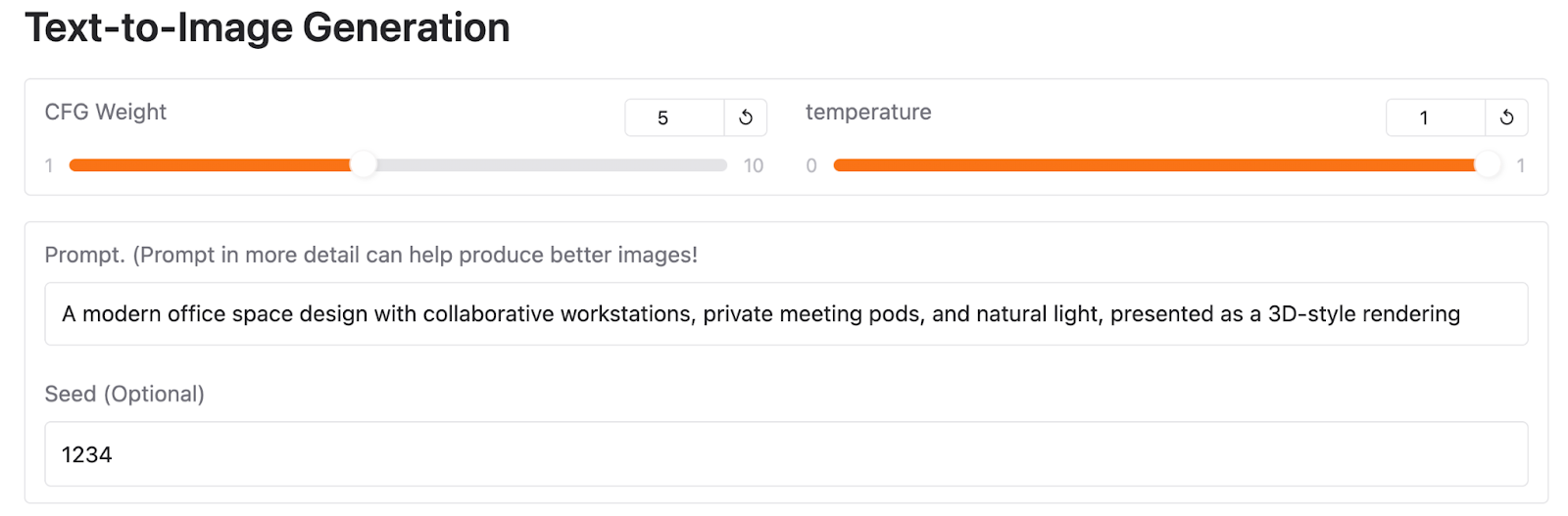

I chose this prompt because it reflects a realistic, practical use case:

“A modern office space design with collaborative workstations, private meeting pods, and natural light, presented as a 3D-style rendering”

DALL-E 3 generated the image below, which includes every element in the prompt: a modern office space, collaborative workstations, private meeting pods, natural light, and a 3D-style rendering. However, if you look closely, you’ll notice quite a few artifacts:

- The reflections in the upper-left glass panels appear slightly warped, especially the circular light fixture.

- Some of the desk items (lamps, papers, and computers) have blurred or unnatural edges, indicating potential AI merging errors.

- The office chairs, especially the one in the middle, seem slightly distorted, particularly the legs and how they interact with the floor (the armrest pads are also not positioned properly).

I prompted Janus-Pro-7B on Hugging Face. The model generated five images, and all of them look pretty bad:

Looking at the first image, we can spot a few major artifacts without much effort:

- The ceiling has an unnatural warping effect, with duplicated and misaligned lighting fixtures that appear stretched or floating.

- Some desks are oddly shaped, with inconsistent angles and unnatural overlaps. Certain chairs appear partially melted or fused with the floor.

- The booth structure on the right has an unnatural, melted appearance, with the chair inside it looking deformed and disconnected.

You’ll be able to reproduce this result on Hugging Face by using the same prompt and the following parameters and seed:

Despite experimenting with different parameters and seeds, I couldn’t produce better outputs with Janus-Pro-7B. Again, this is just one example and doesn’t provide enough evidence to make broad conclusions about either model.
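Why should the same seed reproduce the same images? Janus-Pro generates an image by sampling discrete image tokens one after another, so each run depends on random draws. The toy below is not Janus-Pro code: the four-token vocabulary and fixed probabilities are made up and stand in for the model’s predictions. It only illustrates that a generator seeded with the same value repeats the same draws.

```python
import random
from typing import List

# Hypothetical vocabulary and fixed next-token probabilities; a real model
# would recompute these at every step from the prompt and previous tokens.
VOCAB = ["tok_a", "tok_b", "tok_c", "tok_d"]
PROBS = [0.4, 0.3, 0.2, 0.1]


def sample_tokens(seed: int, length: int = 8) -> List[str]:
    """Sample a token sequence using a dedicated, seeded random generator."""
    rng = random.Random(seed)
    return rng.choices(VOCAB, weights=PROBS, k=length)


run_1 = sample_tokens(seed=42)
run_2 = sample_tokens(seed=42)
print(run_1 == run_2)  # True: same seed, same draws
```

Using a dedicated `random.Random` instance, rather than the global generator, keeps the result independent of any other code that happens to consume random numbers.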

Janus-Pro Benchmarks

Janus-Pro has been tested across multiple benchmarks to measure its performance in both multimodal understanding and text-to-image generation. The results show improvements over its predecessor, Janus, and place it among the top-performing models in its category.

Source: Janus-Pro’s GitHub page.

The left chart in the image above shows how Janus-Pro performs on multimodal understanding: the DeepSeek team averaged its results on four benchmarks (POPE, MME-Perception, GQA, and MMMU). The key takeaway is that Janus-Pro-7B outperforms its smaller counterpart, Janus-Pro-1B, as well as other multimodal models such as LLaVA-v1.5-7B and VILA-U.

The right chart compares Janus-Pro-7B with other leading models in instruction-following benchmarks for text-to-image generation, specifically GenEval and DPG-Bench:

- On GenEval, which evaluates how well a model follows text prompts to generate images, Janus-Pro-7B scores 80.0%, outperforming DALL-E 3 (67%) and SD3-Medium (74%).

- On DPG-Bench, which tests how accurately a model executes detailed prompts, Janus-Pro-7B scores 84.2%, the highest of all models in the comparison.
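To put the GenEval numbers in perspective, the snippet below computes the gaps both in percentage points and in relative terms, using only the scores quoted above. The distinction matters: a 13-point lead over DALL-E 3 is about a 19% relative improvement.

```python
# Overall GenEval scores as quoted above (in %).
geneval = {"Janus-Pro-7B": 80.0, "SD3-Medium": 74.0, "DALL-E 3": 67.0}

ours = geneval["Janus-Pro-7B"]
for model, score in geneval.items():
    if model == "Janus-Pro-7B":
        continue
    gap = ours - score      # absolute gap in percentage points
    relative = gap / score  # gap relative to the other model's score
    print(f"vs {model}: +{gap:.1f} points ({relative:.1%} relative)")
# vs SD3-Medium: +6.0 points (8.1% relative)
# vs DALL-E 3: +13.0 points (19.4% relative)
```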

How to Access Janus-Pro

You can try Janus-Pro in two ways, neither of which requires a complex setup.

Online demo on Hugging Face

The fastest way to test Janus-Pro is through its Hugging Face Spaces demo, where you can enter prompts and generate text or images directly in your browser. This requires no installation or setup.

Local GUI with Gradio

If you prefer a local setup with a user-friendly interface, DeepSeek provides a Gradio-based demo that lets you interact with Janus-Pro through a web-based GUI on your own machine. To use it, follow the instructions in DeepSeek’s official Janus GitHub repository.

Conclusion

Janus-Pro is DeepSeek’s latest move in the multimodal AI space, offering an open-source alternative to models like DALL-E 3. It improves upon its predecessor with better training, larger datasets, and a decoupled architecture for handling text and images more effectively.

In my head-to-head comparison with DALL-E 3, Janus-Pro showed clear weaknesses in text-to-image generation, producing noticeable artifacts and inconsistencies. In multimodal understanding, it summarized the benchmark chart well, although it failed to distinguish Janus-Pro from the original Janus. That said, this was a limited test and doesn’t provide enough evidence to draw general conclusions about the model’s overall capabilities.

FAQs

What hardware is required to run Janus-Pro locally?

The 1B model can run on consumer-grade GPUs. The 7B model needs a GPU with considerably more VRAM, such as an NVIDIA A100 or a similar high-end card.
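A quick back-of-the-envelope check shows why the two sizes have such different requirements. This sketch assumes 16-bit weights (bfloat16 or float16, 2 bytes per parameter) and uses the nominal 1B and 7B counts from the model names; the exact parameter counts differ somewhat.

```python
def weight_memory_gb(num_params: float, bytes_per_param: float = 2.0) -> float:
    """Rough memory needed just to hold the model weights, in GB (1e9 bytes).

    bytes_per_param=2.0 assumes 16-bit weights; use 4.0 for float32.
    Activations, the KV cache, and framework overhead are NOT included,
    so real usage is higher than this estimate.
    """
    return num_params * bytes_per_param / 1e9


for label, params in (("1B", 1e9), ("7B", 7e9)):
    print(f"{label}: ~{weight_memory_gb(params):.0f} GB in 16-bit, "
          f"~{weight_memory_gb(params, 4.0):.0f} GB in float32")
# 1B: ~2 GB in 16-bit, ~4 GB in float32
# 7B: ~14 GB in 16-bit, ~28 GB in float32
```

Treat these numbers as lower bounds: the runtime needs extra memory on top of the weights, especially during image generation.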

Is Janus-Pro suitable for real-time applications?

While Janus-Pro is powerful, its performance depends on the hardware it’s running on. Real-time applications may require significant computational resources, especially for the 7B model.

Does Janus-Pro support languages other than English?

Yes. Janus-Pro’s training data includes datasets aimed at multilingual capabilities, such as Chinese conversational data, so it can handle tasks in more than one language.

Can Janus-Pro generate high-resolution images?

Not at the moment. Janus-Pro generates images at a resolution of 384×384 pixels.
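The resolution limit ties into how generation works. Assuming the 16× downsampling of the VQ image tokenizer described in the Janus papers, a 384×384 image corresponds to a 24×24 grid of discrete tokens that the model produces one at a time. The 1024×1024 case below is a hypothetical comparison, not a size Janus-Pro supports; it shows how quickly the number of generation steps grows with resolution.

```python
def image_token_count(image_size: int, patch_size: int = 16) -> int:
    """Number of discrete tokens for a square image: one per patch_size x patch_size patch."""
    if image_size % patch_size != 0:
        raise ValueError("image_size must be divisible by patch_size")
    side = image_size // patch_size
    return side * side


print(image_token_count(384))   # 576 tokens (a 24 x 24 grid)
print(image_token_count(1024))  # 4096 tokens (hypothetical), about 7x as many steps
```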

Can Janus-Pro be fine-tuned for specific applications?

Yes, as an open-source model, Janus-Pro can be fine-tuned using domain-specific datasets for customized applications.

I’m an editor and writer covering AI blogs, tutorials, and news, ensuring everything fits a strong content strategy and SEO best practices. I’ve written data science courses on Python, statistics, probability, and data visualization. I’ve also published an award-winning novel and spend my free time on screenwriting and film directing.