
On November 6, 2024, Google appears to have accidentally revealed Jarvis AI by publishing an early version of this AI agent as an extension on the Chrome Web Store. In this article, I’ll explore what Jarvis AI could be and how it might fundamentally change the way we browse the web.

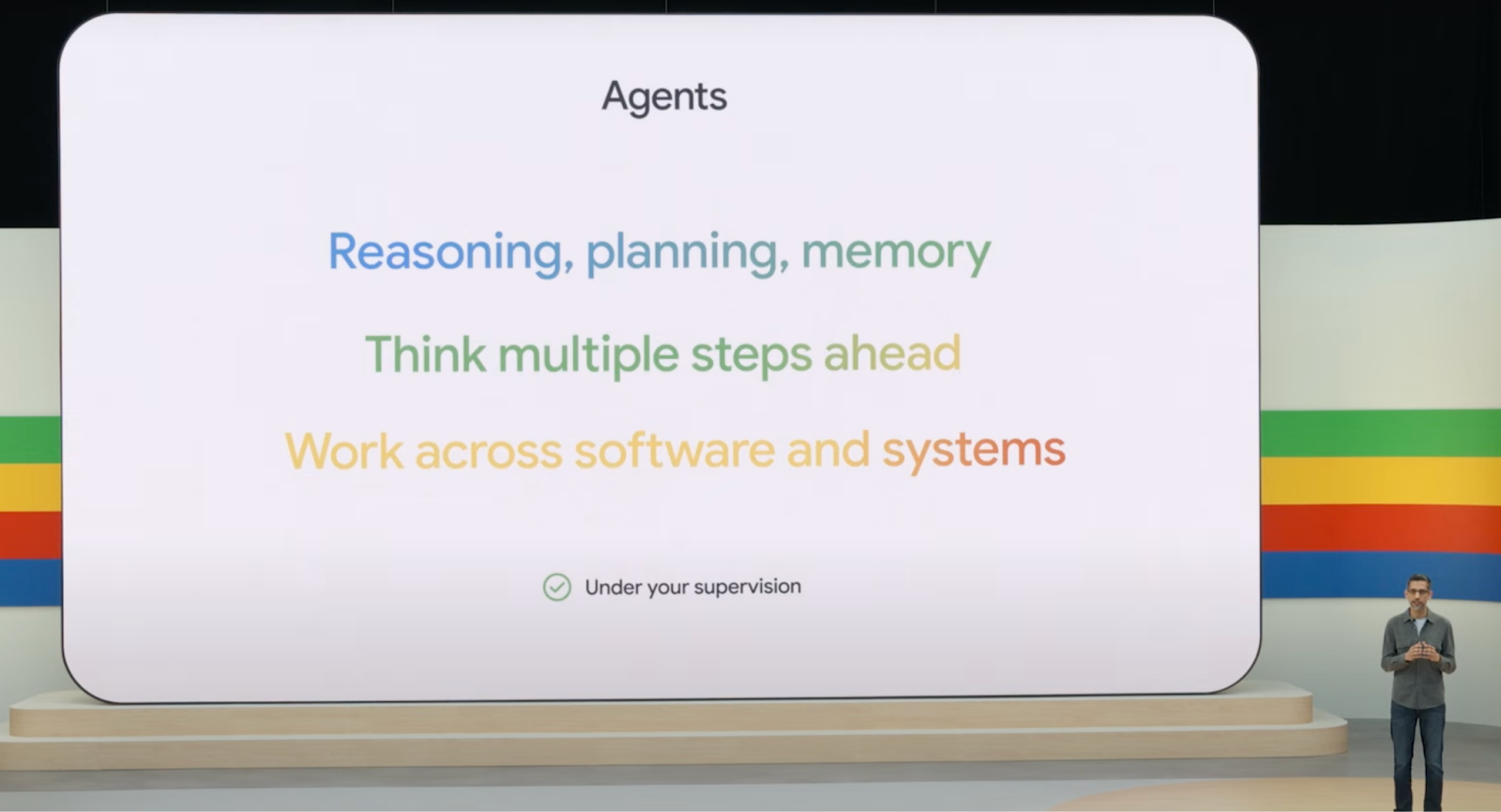


What Is Jarvis AI?

Almost everything we know about Jarvis AI comes from a Chrome extension named Jarvis that was briefly available earlier this November. During that short window, its store page described it as "a helpful companion that surfs the web for you," which matches what we would expect from an AI agent designed to automate web-based tasks.

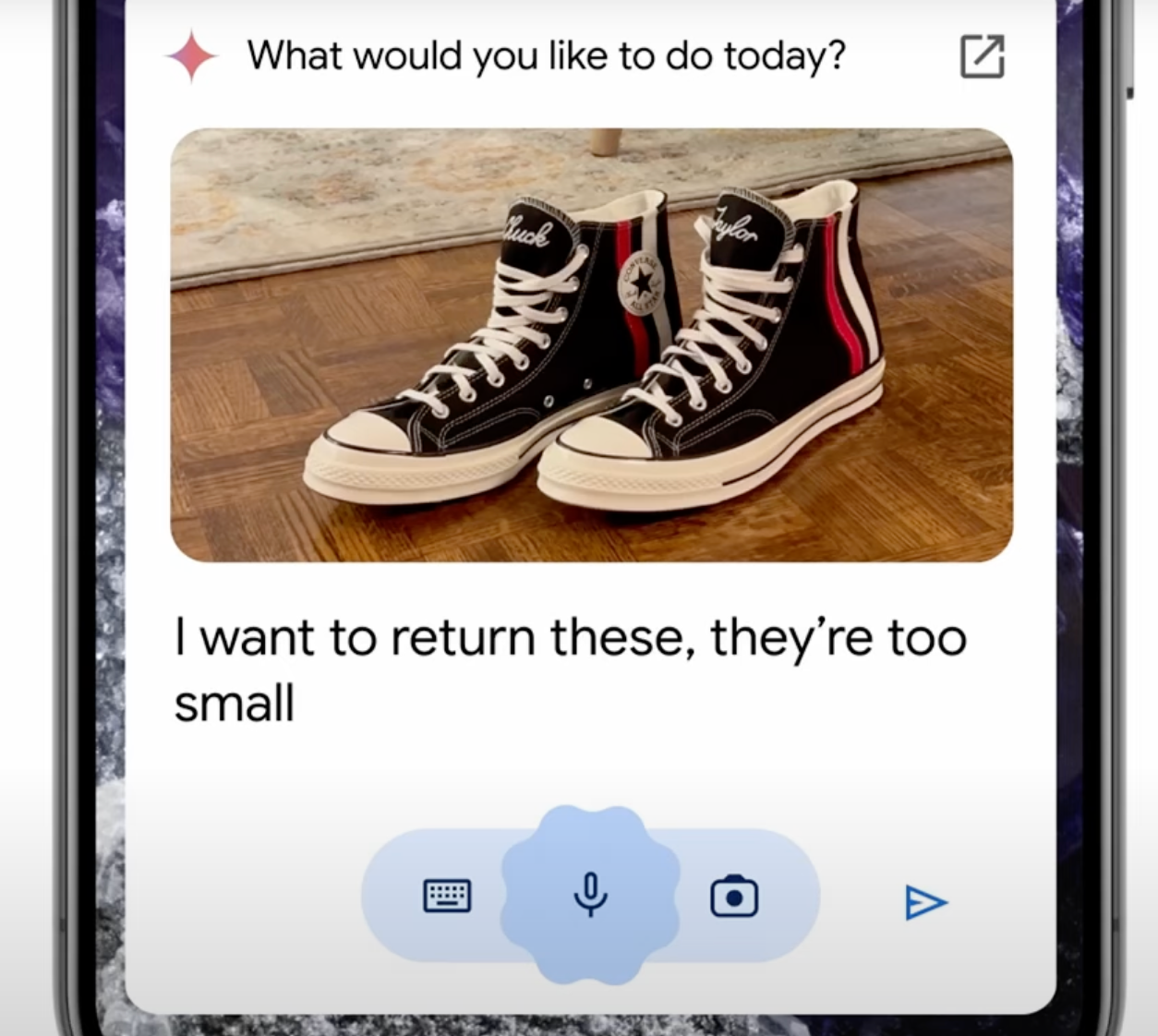

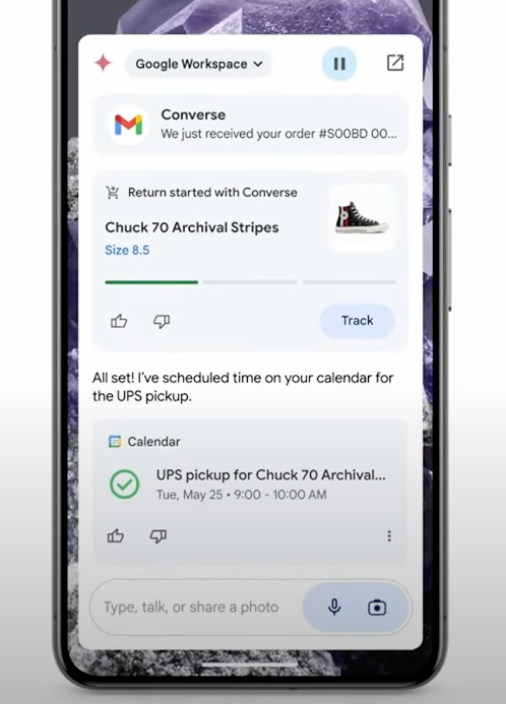

In its Google I/O keynote earlier this year, Google showcased unreleased AI agents that could control the browser to perform a wide variety of tasks. I suspect that Jarvis AI may be the actual product behind that presentation.

One example from the presentation was returning a pair of shoes. With an AI agent, all we'd need to do is say that we want to return the shoes, and the agent would carry out all the steps on its own.

Integrated with the browser and other Google products, such as Gmail, the agent could complete the return process by following these steps:

- Search the inbox for the receipt.

- Locate the order number in the email.

- Fill out the return form.

- Schedule a pickup.
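To make the four steps above concrete, here is a toy Python sketch that runs them as a fixed sequence over in-memory data. The inbox contents, the `Order #` format, and every class and function name are hypothetical; a real agent would plan these steps with a language model and carry them out in a live browser rather than follow a hard-coded script.

```python
import re
from dataclasses import dataclass, field
from typing import List, Optional

# Everything below is hypothetical: the inbox, the return form, and the
# pickup scheduler are in-memory stand-ins, not Gmail or Google APIs.

@dataclass
class Email:
    subject: str
    body: str

@dataclass
class ReturnTask:
    receipt: Optional[Email] = None
    order_number: Optional[str] = None
    form_submitted: bool = False
    pickup_slot: Optional[str] = None
    log: List[str] = field(default_factory=list)

def search_inbox(inbox: List[Email], query: str, task: ReturnTask) -> None:
    # Step 1: find the first email whose subject mentions the query.
    for email in inbox:
        if query.lower() in email.subject.lower():
            task.receipt = email
            task.log.append(f"found receipt: {email.subject}")
            return
    raise LookupError(f"no email matching {query!r}")

def locate_order_number(task: ReturnTask) -> None:
    # Step 2: extract an order number written as "Order #<digits>".
    match = re.search(r"Order #(\d+)", task.receipt.body)
    if match is None:
        raise ValueError("receipt contains no order number")
    task.order_number = match.group(1)
    task.log.append(f"order number: {task.order_number}")

def fill_return_form(task: ReturnTask) -> None:
    # Step 3: a real agent would type into the retailer's web form here.
    task.form_submitted = True
    task.log.append(f"return form submitted for order {task.order_number}")

def schedule_pickup(task: ReturnTask, slot: str) -> None:
    # Step 4: book a courier pickup in the requested time slot.
    task.pickup_slot = slot
    task.log.append(f"pickup scheduled: {slot}")

def return_shoes(inbox: List[Email], slot: str) -> ReturnTask:
    """Run the four steps in order; any exception stops the whole task."""
    task = ReturnTask()
    search_inbox(inbox, "shoes", task)
    locate_order_number(task)
    fill_return_form(task)
    schedule_pickup(task, slot)
    return task

inbox = [
    Email("Your weekly newsletter", "Top stories this week..."),
    Email("Receipt: running shoes", "Thanks for shopping! Order #48213"),
]
task = return_shoes(inbox, "Tuesday 10:00-12:00")
for entry in task.log:
    print(entry)
```

The sketch mainly shows the dependency chain: each step consumes what the previous one produced (the receipt, then the order number), so an early mistake, such as picking the wrong email, carries through to every later step.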

How Does Jarvis AI Work?

Jarvis AI is expected to automate everyday web-based tasks for users, such as conducting research, making online purchases, and booking flights or reservations.

Jarvis will likely run on a specialized version of Google's Gemini family of large language models. If it is built on a "thinking" or reasoning variant, that could help it handle complex, multistep tasks and provide more personalized responses. It is also expected to integrate deeply with Google's existing services, such as Gmail, Maps, and Search.

This integration could enhance Jarvis’s capabilities by providing access to user data and streamlining various processes, such as retrieving receipts from Gmail for online returns or using location data from Maps for travel planning.

The Gemini models are multimodal and can process various types of data (text, images, video, audio, etc.), finding connections between them. It is likely that the agent will have access to the browser's content, enabling it to view, interpret, and interact with the elements displayed on the screen.

Note that everything in this section about Jarvis's internal workings and the specific technologies it relies on is speculation.

Jarvis AI vs. Other AI Agents

As I’ve mentioned, I believe that Jarvis is designed to automate everyday web-based tasks, and its tight integration with the Chrome browser would set it apart. However, Jarvis is not alone in this area.

Anthropic’s computer use

Anthropic, the company behind Claude, has released its own agent capability, called computer use, which lets Claude interact with applications beyond the web browser. Computer use entered public beta in October 2024 with the upgraded Claude 3.5 Sonnet and can move the cursor, click buttons, and type text, much like a human user.

Despite their differences in maturity and scope, Jarvis and Anthropic's computer use share a common goal: to automate tasks by mimicking how humans interact with computers. Both likely work by capturing the screen, interpreting what is on it in the context of the user's request, and then choosing the next action to execute.
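Screen-controlling agents of this kind are generally described as running a loop: observe the screen, decide on an action, act, and observe again. The toy Python sketch below shows that observe-decide-act loop under heavy assumptions: the "screenshot" is a small dictionary, and `decide` is a hard-coded rule standing in for a multimodal model. `FakeBrowser`, `Action`, `decide`, and `run_agent` are hypothetical names, not part of Jarvis, computer use, or any other real agent API.

```python
from dataclasses import dataclass
from typing import Dict, List

@dataclass
class Action:
    kind: str         # "type", "click", or "done"
    target: str = ""  # which on-screen element to act on
    text: str = ""    # text to type, if any

class FakeBrowser:
    """Hypothetical in-memory page with one search box and one button."""

    def __init__(self) -> None:
        self.fields: Dict[str, str] = {"search_box": ""}
        self.page = "home"

    def screenshot(self) -> dict:
        # A real agent would capture pixels; this returns structured state.
        return {"page": self.page, "fields": dict(self.fields)}

    def execute(self, action: Action) -> None:
        if action.kind == "type":
            self.fields[action.target] = action.text
        elif action.kind == "click" and action.target == "search_button":
            self.page = f"results for {self.fields['search_box']!r}"

def decide(goal: str, observation: dict) -> Action:
    """Hypothetical rule standing in for a call to a multimodal model."""
    if observation["page"].startswith("results"):
        return Action("done")
    if observation["fields"]["search_box"] != goal:
        return Action("type", target="search_box", text=goal)
    return Action("click", target="search_button")

def run_agent(goal: str, browser: FakeBrowser, max_steps: int = 10) -> List[str]:
    history: List[str] = []
    for _ in range(max_steps):
        observation = browser.screenshot()   # observe
        action = decide(goal, observation)   # decide
        history.append(action.kind)
        if action.kind == "done":
            break
        browser.execute(action)              # act
    return history

browser = FakeBrowser()
steps = run_agent("flights to Lisbon", browser)
print(steps)         # ['type', 'click', 'done']
print(browser.page)  # results for 'flights to Lisbon'
```

The `max_steps` cap is a deliberate choice in this sketch: without it, a model that never decides it is finished would keep acting indefinitely.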

OpenAI’s Operator

OpenAI is set to introduce “Operator,” an autonomous AI agent designed to perform tasks on behalf of users, such as writing code and booking travel arrangements. According to Bloomberg, Operator is scheduled for a research preview release in January 2025.

(Image: Sam Altman answering a Reddit AMA)

Meta’s Toolformer

Meta AI Research introduced Toolformer, a language model capable of autonomously utilizing external tools to enhance its performance on various tasks. Detailed in the paper “Toolformer: Language Models Can Teach Themselves to Use Tools,” the model is trained to determine which APIs to call, when to call them, what arguments to pass, and how to incorporate the results into future token predictions.

This self-supervised approach requires only a handful of demonstrations for each API, enabling the model to effectively use tools such as calculators, question-answering systems, search engines, translation systems, and calendars.
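In Toolformer, API calls appear inline in the generated text, in a form like `[Calculator(400 / 1400) → 0.29]`: the model emits the call, the tool is run, and its result is inserted so later tokens can use it. The Python sketch below shows only that execution step, with a single hypothetical calculator tool. It does not reproduce how Toolformer learns where to place calls (sampling candidate calls and keeping those that help the model predict the following tokens), and `TOOLS`, `calculator`, and `execute_api_calls` are illustrative names, not code from the paper.

```python
import ast
import operator
import re

# Arithmetic operators the toy calculator tool supports.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}

def calculator(expression: str) -> str:
    """Evaluate + - * / arithmetic by walking the AST (no eval())."""
    def evaluate(node):
        if isinstance(node, ast.Constant) and type(node.value) in (int, float):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](evaluate(node.left), evaluate(node.right))
        raise ValueError(f"unsupported expression: {expression!r}")
    return str(round(evaluate(ast.parse(expression, mode="eval").body), 2))

# Hypothetical tool registry: tool name -> function taking the raw argument text.
TOOLS = {"Calculator": calculator}

# Matches a pending call such as "[Calculator(400 / 1400)]".
# Limitation: the arguments themselves may not contain ")".
PENDING_CALL = re.compile(r"\[(\w+)\(([^)]*)\)\]")

def execute_api_calls(text: str) -> str:
    """Rewrite each known '[Tool(args)]' as '[Tool(args) → result]'."""
    def run(match) -> str:
        name, args = match.group(1), match.group(2)
        if name not in TOOLS:
            return match.group(0)  # leave calls to unknown tools untouched
        return f"[{name}({args}) → {TOOLS[name](args)}]"
    return PENDING_CALL.sub(run, text)

text = "Out of 1400 participants, 400 (or [Calculator(400 / 1400)] 29%) passed the test."
print(execute_api_calls(text))
# Out of 1400 participants, 400 (or [Calculator(400 / 1400) → 0.29] 29%) passed the test.
```

Walking the parsed expression instead of calling `eval()` keeps the toy tool from executing arbitrary code that a model might generate.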

Challenges of AI Agents

In general, AI agents introduce a range of challenges and considerations, particularly concerning privacy, accuracy, and ethical implications.

Privacy concerns

While it sounds appealing to automate boring and time-consuming tasks, this convenience comes with a sense of discomfort. Do I want Google or any other company to have full access to my computer? Something about that idea makes me deeply uneasy.

Privacy is therefore a central concern. Google will need to assure users that their data will be handled securely and responsibly, backing that promise with robust security measures and transparent data-handling practices that reduce the risk of breaches or misuse. Clear guidelines on data access, storage, and usage, along with granular user control over data-sharing preferences, will be crucial for building trust and driving adoption.
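As one illustration of what granular control over data sharing could look like, the Python sketch below gives each service its own access level, denies anything the user has not explicitly granted, and records every access attempt in a log the user could review. The service names, access levels, and classes are hypothetical; they do not describe how Google or any other vendor actually implements agent permissions.

```python
from dataclasses import dataclass, field
from enum import IntEnum
from typing import Dict, List, Tuple

class Access(IntEnum):
    NONE = 0
    READ = 1
    READ_WRITE = 2

class PermissionDenied(Exception):
    pass

@dataclass
class PrivacySettings:
    """Hypothetical per-service grants a user could give an agent."""
    grants: Dict[str, Access] = field(default_factory=dict)
    audit_log: List[Tuple[str, str, str]] = field(default_factory=list)

    def allow(self, service: str, level: Access) -> None:
        self.grants[service] = level

    def require(self, service: str, needed: Access) -> None:
        # Deny by default: services never granted count as Access.NONE.
        granted = self.grants.get(service, Access.NONE)
        allowed = granted >= needed
        self.audit_log.append((service, needed.name, "allowed" if allowed else "denied"))
        if not allowed:
            raise PermissionDenied(f"{service}: {needed.name} access not granted")

settings = PrivacySettings()
settings.allow("gmail", Access.READ)        # the agent may read receipts...
settings.require("gmail", Access.READ)      # ...so this check passes
try:
    settings.require("gmail", Access.READ_WRITE)  # ...but may not modify anything
except PermissionDenied as err:
    print(err)
try:
    settings.require("maps", Access.READ)   # never granted at all
except PermissionDenied as err:
    print(err)
print(settings.audit_log)
```

Denying by default means a forgotten setting fails safe, and the audit log gives the user a transparent record of what the agent tried to access.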

Accuracy and reliability

What happens when my AI agent makes a mistake? When an AI chatbot makes a mistake, it may provide incorrect information, but no action is taken directly. If I ask an AI chatbot to help me plan a trip, it offers a text-based plan, but I'm still responsible for making reservations, handling payments, and so forth. There's a human layer to prevent unwanted outcomes. However, when AI agents take actions in the real world, these actions can have real unwanted consequences.

Who is responsible when the AI agent books the wrong flights or returns the wrong shoes? I imagine these agents will prompt the user before taking each action, but I'm unsure if that alone is enough to prevent mistakes in more complex scenarios.
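The safeguard of prompting the user before acting can be sketched as a gate that lets low-risk steps run automatically but pauses for explicit approval before anything irreversible, such as a payment. The Python sketch below is a minimal illustration under that assumption; `ProposedAction`, `run_with_confirmation`, and the flight details are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List

@dataclass
class ProposedAction:
    description: str
    irreversible: bool  # e.g. paying, booking, or submitting a return

def run_with_confirmation(actions: List[ProposedAction],
                          confirm: Callable[[ProposedAction], bool]) -> List[str]:
    """Execute actions in order, asking for approval before irreversible ones.

    Stops at the first rejected action, so nothing after it runs.
    """
    executed: List[str] = []
    for action in actions:
        if action.irreversible and not confirm(action):
            executed.append(f"stopped before: {action.description}")
            break
        executed.append(f"done: {action.description}")
    return executed

plan = [
    ProposedAction("search flights LIS -> BER on 12 March", irreversible=False),
    ProposedAction("select 07:15 flight, 189 EUR", irreversible=False),
    ProposedAction("pay 189 EUR with saved card", irreversible=True),
]

# In a real UI, `confirm` would show a dialog; here the user rejects the payment.
result = run_with_confirmation(plan, confirm=lambda action: False)
for line in result:
    print(line)
```

Gating only irreversible steps is a choice of this sketch; prompting before every step would simply mean marking every action as irreversible. Either way, the gate is only as good as the summary the user sees: if the agent picked the wrong flight earlier, the user still has to notice it in the description before approving.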

Companies like Google must prioritize rigorous testing and validation processes to minimize these inaccuracies. Implementing mechanisms for fact-checking, cross-referencing information, and providing users with clear disclaimers about the potential for errors will be essential.

Ethical implications

The ethical implications of Jarvis, and of AI agents in general, extend beyond privacy and accuracy. Companies must also consider the broader societal impact of these agents, including potential job displacement and the creation of new dependencies on AI systems.


Conclusion

Everything we've said about Jarvis AI is speculative. Until its release, we won’t know for sure what it does. However, one thing is certain: AI agents are coming, and they represent the next step in the AI revolution.

Although I acknowledge that automating tasks is incredibly useful and has the potential to save significant amounts of time, I am uncomfortable with the idea of giving control of my computer to an AI (or anyone, for that matter).

In recent years, there has been much discussion about the potential dangers of AI. I believe that as long as AIs are limited to chatbots and cannot perform actions in the real world, the risks are minimal. Yes, an AI might instruct someone on how to make something harmful, but that person still needs to act on it, and anyone determined to learn that could acquire the knowledge anyway, given enough time. With AI agents, we lose that layer of protection, which sounds genuinely dangerous as we give these agents more and more capabilities.

I feel that the move towards AI agents is inevitable, but it must be approached with great caution.