
Day 1 of Google I/O 2025 is over. In this article, I’ll walk you through the announcements that matter most for the AI industry.

I’ll focus on the updates that are either ready to use or expected to be released soon. Along the way, I’ll share quick impressions and, where relevant, put things in context with the competition.


Veo 3

What stood out to me about Veo 3 is that it offers native audio output. You can now generate videos that include sound directly, no extra editing steps required. Let’s see an example:

Native audio output is something I haven’t seen yet in Runway or Sora. At this point, I’d say Veo 3 is one step ahead.

While the demo looks good, I’ve learned that demo videos rarely reflect how these models behave in practice. As soon as the prompt strays outside the shape of the training data—an unfamiliar scene, a weird character, or an idea with too much subtlety—the model tends to collapse. I’m looking forward to taking Veo 3 for a spin to see how well it does.

Access to Veo 3 requires an AI Ultra subscription, which costs $250/month. Even if you’re willing to pay, availability is limited. Right now, it’s only accessible in the U.S., and only within Google’s new AI-powered video editor called Flow (which we’ll cover next).

If you’re curious about the technical details or want to explore more examples, take a look at Veo’s official page here.

Flow

Flow is an AI filmmaking tool that lets you generate individual shots using a combination of Veo, Imagen, and Gemini.

One thing I find useful is that you can create separate elements (called “ingredients”) and then group them together into a single scene. This gives you modular control and can be especially helpful when you want to reuse the same elements across multiple prompts or shots.

Let’s see an example:

There are also tools for camera control and transitions inside Flow, which help give the clips a more cinematic feel. These are useful, but not new—Sora and Runway already offer similar features, so I wouldn’t say there’s anything groundbreaking here.

Still, it’s worth paying attention to how tools like these evolve. Flow feels like an early version of an AI-first video editor, and it’s not hard to imagine a future where this kind of workflow becomes standard. Just as we now take Premiere Pro or DaVinci Resolve for granted, something like Flow might become the norm in a few years.

Flow is currently only available in the U.S., and you can access it through Google’s AI Pro and AI Ultra subscriptions.

Imagen 4

Another important announcement was Imagen 4, Google’s newest image generation model. You can use it directly in Gemini or inside Whisk, Google’s design tool.

Google claims improvements across the board—better photorealism, cleaner details on close-ups, more variety in art styles. That’s all fine, but the part that caught my attention was the promise of advanced spelling and typography. If you’ve used any image generator recently, you’ve probably seen that most of them still mess up words or deform letters entirely.

Let’s see an image that Imagen 4 generated:

Source: Google

Right now, I’d say GPT-4o’s image generation is the strongest on the market. Even so, it still sometimes struggles with text and prompt adherence. If Imagen 4 actually gets spelling right and holds onto prompt intent, I think it has a chance to take the lead in image generation.

Gemma 3n

Gemma 3n is Google’s latest and most capable on-device model. If you’re not familiar with the term, an on-device model is one that runs directly on your phone, tablet, or laptop—without needing to send data to the cloud. This matters for a few reasons: lower latency, better privacy, and offline availability.

But for that to work, the model needs to be small enough to fit in limited memory, yet powerful enough to handle real tasks. That’s the challenge Gemma 3n is trying to meet.

It’s built on a new architecture shared with Gemini Nano—and in fact, the “n” in “3n” stands for “nano.” This architecture is optimized for low memory usage, fast response times, and support for multiple input types like text, audio, and images.

Gemma 3n comes in two variants, with parameter sizes of 5B and 8B. Both are designed to run efficiently, with memory requirements closer to 2B and 4B models thanks to a few optimizations under the hood.
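To see why the effective size matters more than the raw parameter count on a phone, here is a back-of-envelope sketch of weight memory. It assumes memory is dominated by the weights (parameters × bytes per parameter) and ignores activations, caches, and runtime overhead. The precisions listed are common choices, not a statement about how Gemma 3n is actually shipped, and the sketch does not model Google’s optimizations at all.

```python
# Back-of-envelope estimate of the memory needed just to hold model weights.
# Assumption: weights dominate; activations, caches, and runtime overhead are
# ignored, so real-world usage will be higher.

BYTES_PER_PARAM = {
    "float32": 4.0,
    "bfloat16": 2.0,
    "int8": 1.0,
    "int4": 0.5,
}


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Approximate weight memory in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    # Raw parameter counts from the article vs. the smaller "effective" sizes
    # whose memory footprint Gemma 3n is said to approach.
    sizes = {"5B raw": 5e9, "8B raw": 8e9, "2B effective": 2e9, "4B effective": 4e9}
    for label, n in sizes.items():
        row = ", ".join(
            f"{dt}: {weight_memory_gb(n, dt):.1f} GB" for dt in ("bfloat16", "int4")
        )
        print(f"{label:>13} -> {row}")
```

Under these assumptions, behaving like a 4B model instead of an 8B one roughly halves the weight footprint at any given precision, which is the kind of saving that decides whether a model fits on a device at all.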

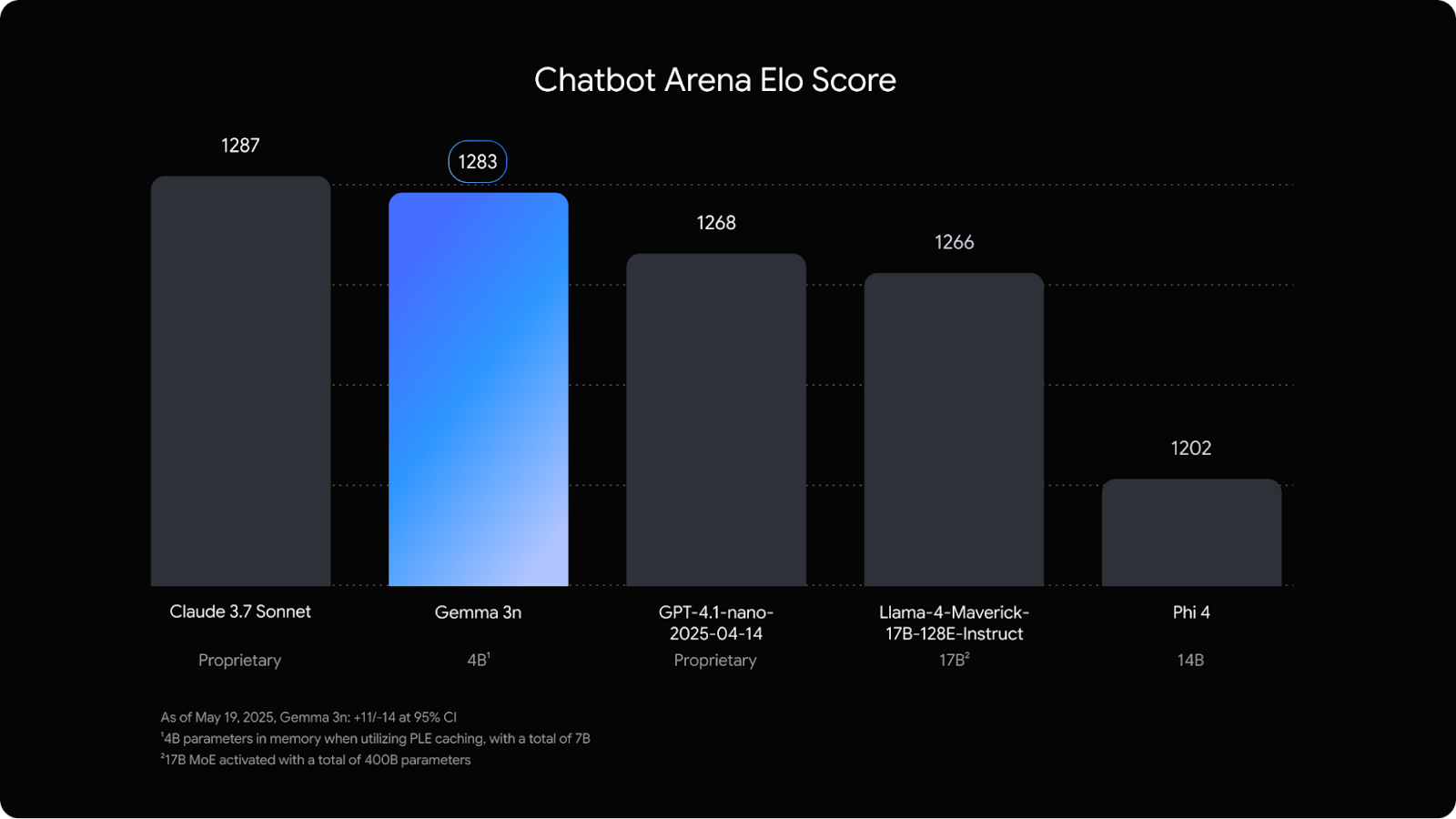

What I find really striking is that Gemma 3n scores almost on par with Claude 3.7 Sonnet, a much larger model, on Chatbot Arena.

Source: Google
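Chatbot Arena ranks models from pairwise human votes and reports ratings on an Elo-style scale. Assuming the standard Elo convention (base 10, 400-point scale), a rating gap translates into an expected head-to-head win rate, which is one way to read “almost on par.” The ratings in this sketch are hypothetical, not actual leaderboard values.

```python
def expected_win_rate(rating_a: float, rating_b: float) -> float:
    """Standard Elo expected score of A against B (a tie counts as half a win)."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


if __name__ == "__main__":
    # Hypothetical rating gaps; no real leaderboard numbers are used here.
    for gap in (0, 10, 25, 50, 100):
        p = expected_win_rate(1300 + gap, 1300)
        print(f"gap {gap:>3} points -> higher-rated model expected to win {p:.1%} of votes")
```

Under this convention, a gap of a few dozen points still means the higher-rated model is expected to win only slightly more than half of head-to-head comparisons.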

This release is mainly aimed at developers, especially those building mobile or embedded applications that can benefit from local AI. While our team at DataCamp is working on fresh Gemma 3n tutorials, I recommend starting with these Gemma 3 blogs:

Gemini Diffusion

The technology that I’m most curious about is Gemini Diffusion.

Gemini Diffusion is a new experimental model architecture designed to improve speed and coherence in text generation. Unlike traditional language models that generate tokens one by one in a fixed sequence, diffusion models work by refining noise through multiple steps, a method borrowed from image generation.

Instead of predicting the next word directly, Gemini Diffusion starts with a rough approximation and iteratively improves it, which makes it better at tasks that benefit from refinement and error correction—like math, code, and editing.
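Google hasn’t published how Gemini Diffusion works internally, so what follows is only a toy sketch of the general idea. It is written in the style of masked diffusion, one common way to apply diffusion to discrete text, where the “noise” is masked or wrong tokens. `toy_denoiser` is hypothetical: it stands in for a trained network by peeking at a fixed `TARGET` sequence and guessing wrong more often when the noise level is high. What the sketch does show is the control flow. Every step predicts all positions in parallel, keeps the most confident tokens, and re-masks the rest so they can be revised later.

```python
import math
import random

# Toy setup: TARGET plays the role of what a trained model "knows".
TARGET = "def add ( a , b ) : return a + b".split()
VOCAB = sorted(set(TARGET)) + ["x", "y", "-", "*"]
MASK = "_"


def toy_denoiser(draft, noise, rng):
    """Hypothetical stand-in for ONE forward pass of a diffusion language model.

    Predicts every position in parallel and returns (token, confidence) pairs.
    Higher `noise` means more wrong guesses; at noise 0 every guess is right.
    """
    preds = []
    for i, tok in enumerate(draft):
        if tok == TARGET[i]:
            preds.append((tok, 1.0))  # already consistent with context: keep it
        elif rng.random() >= noise:
            preds.append((TARGET[i], 0.5 + 0.5 * rng.random()))  # good guess
        else:
            preds.append((rng.choice(VOCAB), 0.5 * rng.random()))  # likely wrong
    return preds


def diffusion_decode(length, steps, seed=0):
    """Start fully masked; each step re-predicts ALL positions, keeps the most
    confident ones, and re-masks the rest so earlier mistakes can be corrected."""
    rng = random.Random(seed)
    draft = [MASK] * length
    for t in range(1, steps + 1):
        noise = 1.0 - t / steps                  # noise schedule goes from high to 0
        preds = toy_denoiser(draft, noise, rng)
        keep = math.ceil(length * t / steps)     # commit more positions each step
        ranked = sorted(range(length), key=lambda i: preds[i][1], reverse=True)
        kept = set(ranked[:keep])
        draft = [preds[i][0] if i in kept else MASK for i in range(length)]
        print(f"step {t}: {' '.join(draft)}")
    return draft, steps  # final tokens and number of model calls


def autoregressive_decode(length):
    """Strictly left to right: each loop iteration stands for one model call."""
    out = []
    for i in range(length):
        out.append(TARGET[i])  # toy: the next-token prediction is always right
    return out, length


if __name__ == "__main__":
    n = len(TARGET)
    tokens, calls = diffusion_decode(n, steps=4)
    print("diffusion:     ", " ".join(tokens), f"({calls} model calls)")
    tokens, calls = autoregressive_decode(n)
    print("autoregressive:", " ".join(tokens), f"({calls} model calls)")
```

With 12 tokens and 4 refinement steps, the diffusion loop makes 4 model calls while the autoregressive loop makes 12. Fewer sequential calls is one plausible intuition for the reported speedup, but each parallel call does more work, so this toy says nothing about real-world speed ratios.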

In early benchmarks, it’s been reported to generate tokens up to five times faster than standard autoregressive models like Gemini 2.0 Flash-Lite, while delivering similar or better performance on structured reasoning tasks.

Right now, access is limited to select testers. No public release date has been announced. Join the waitlist on this page.

Project Mariner

Project Mariner is Google’s take on an AI agent that can operate directly in the browser. It’s designed to help with complex, multi-step tasks—things like trip planning, product research, or summarizing dense content across multiple tabs.

In that sense, it’s similar to Manus AI or OpenAI’s Operator, which is already available through ChatGPT. Let’s see an example of Mariner in action:

Right now, Mariner isn’t publicly available, but Google says broader access is coming. Developer access is expected soon via the Gemini API.

See more examples here:

Project Astra

Project Astra is Google DeepMind’s prototype for a more general-purpose AI assistant—something that doesn’t just respond to prompts, but sees, listens, remembers, and reacts. It was first introduced last year as a research project, and at I/O 2025 we got a better look at what it can do.

Let’s see it in action:

This isn’t available to the public yet, but parts of Astra are already making their way into Gemini Live, and broader rollout is expected later. Whether it becomes a real product or stays in the research phase remains to be seen, but the direction is clear: this is what Google sees as the foundation for a universal AI assistant.

AI Mode in Search

AI Mode is Google’s new search experience that feels more like a chatbot than a search engine. It’s different from AI Overviews, which are short AI summaries placed on top of the traditional Google results page we’re all familiar with. AI Mode, by contrast, takes over the full interface—it’s a new tab where you can ask complex questions and follow up conversationally.

Let’s see an example:

The interface looks a lot like ChatGPT or Perplexity. You enter a prompt, and AI Mode responds with longer, more structured answers, including links, citations, charts, and sometimes even a full research breakdown. It uses a “query fan-out” approach to split your question into subtopics and pull from across the web, all in one pass.
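Google hasn’t published how query fan-out is implemented, so here’s a minimal sketch of the general pattern under stated assumptions: split a question into subqueries, issue them concurrently, then merge and deduplicate the sources. `fake_search` and `split_into_subqueries` are hypothetical placeholders. In a real system the subtopics would presumably come from a language model and the results from an actual search backend.

```python
import asyncio


async def fake_search(query: str) -> list[str]:
    """Hypothetical stand-in for a web search call; returns fake result URLs."""
    await asyncio.sleep(0.1)  # simulate network latency
    slug = query.lower().replace(" ", "-")
    return [
        f"https://example.com/{slug}/1",
        f"https://example.com/{slug}/2",
        "https://example.com/overview",  # a source shared across subqueries
    ]


def split_into_subqueries(question: str, facets: list[str]) -> list[str]:
    """Hypothetical fan-out step. A real system would likely let a model pick
    the subtopics; here we simply combine the question with given facets."""
    return [f"{question} {facet}" for facet in facets]


async def fan_out(question: str, facets: list[str]) -> dict[str, list[str]]:
    subqueries = split_into_subqueries(question, facets)
    # Issue all subqueries concurrently instead of one after another.
    results = await asyncio.gather(*(fake_search(q) for q in subqueries))
    return dict(zip(subqueries, results))


def merge(results: dict[str, list[str]]) -> list[str]:
    """Deduplicate sources while preserving first-seen order."""
    seen: dict[str, None] = {}
    for urls in results.values():
        for url in urls:
            seen.setdefault(url, None)
    return list(seen)


if __name__ == "__main__":
    facets = ["battery life", "camera quality", "price"]
    results = asyncio.run(fan_out("best phone 2025", facets))
    for q, urls in results.items():
        print(q, "->", len(urls), "results")
    print("merged sources:", len(merge(results)))
```

Running the subqueries with `asyncio.gather` means the total wait is roughly that of the slowest search rather than the sum of all of them, which is one way to get broad coverage “in one pass.”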

One notable feature is that AI Mode integrates Project Mariner’s agentic capabilities. This can save you time on tasks like buying event tickets, making reservations, or finding availability across multiple websites.

It’s clear that search is undergoing a fundamental shift. We’re moving from a model built around lists of links to one centered on direct answers, summaries, and task completion. This has major implications for how information is surfaced and consumed. The SEO and content marketing industries are already seeing the impact.

AI Mode is rolling out in the U.S. now, with more features coming to Labs in the next few weeks. You can enable the experiment in Google Labs if you want to try it early.

Conclusion

That wraps up the most important AI announcements from Day 1 of I/O 2025. As always, there’s a lot of ambition on display—but the real test is how these tools perform in the hands of everyday users and developers.

Some of this tech is still early or gated behind expensive subscriptions, but a few features are already rolling out to all users.

I’m an editor and writer covering AI blogs, tutorials, and news, ensuring everything fits a strong content strategy and SEO best practices. I’ve written data science courses on Python, statistics, probability, and data visualization. I’ve also published an award-winning novel and spend my free time on screenwriting and film directing.