
If you work with big data processing, you know that managing and analyzing unorganized data files can be challenging and prone to errors. The risk of losing valuable data due to a simple mistake, such as an accidental delete command, is a constant concern.

Apache Iceberg addresses these challenges by providing a robust and reliable table format that supports rollbacks, letting you restore previous states of your data. This helps ensure data integrity and consistency, making data management more efficient and less error-prone.

In this tutorial, we’ll explain what Apache Iceberg is, why it's used, and how it works. You’ll also learn how to get started with it through hands-on, step-by-step instructions, empowering you to manage and analyze your data more effectively.

What Is Apache Iceberg?

Apache Iceberg is an open table format designed to handle huge analytic datasets efficiently. It provides a high-performance table structure that brings the benefits of traditional databases, such as SQL querying, ACID compliance, and partitioning, to your data files.

Essentially, Apache Iceberg acts as a lens, allowing you to view and manage a collection of data files as if they were a single, cohesive table.

The main advantage of Apache Iceberg is its ability to handle large-scale data in a highly optimized manner. It supports features like schema evolution, hidden partitioning, and time travel, making it a robust solution for managing complex data workflows.

By abstracting the complexities of underlying storage formats, Iceberg enables efficient data management and querying, making it an ideal choice for big data processing.

Apache Iceberg history

Netflix developed Apache Iceberg in 2017 to address limitations with Hive, particularly in handling incremental processing and streaming data. In 2018, Netflix donated Iceberg to the Apache Software Foundation, making it an open-source project.

Since then, Apache Iceberg has become a cornerstone for modern data lake architectures, with widespread adoption across various industries.

The project has continuously evolved, incorporating features like hidden partitioning and schema evolution to meet the demands of large-scale data environments.

In 2024, Databricks announced its agreement to acquire Tabular, a data management company founded by the original creators of Apache Iceberg. This acquisition aims to unify Apache Iceberg and Delta Lake, enhancing data compatibility and driving the evolution toward a single, open interoperability standard.

Apache Iceberg evolution timeline

| Year | Event |
|---|---|
| 2017 | Netflix develops Apache Iceberg to address limitations with Hive, especially for incremental processing and streaming data. |
| 2018 | Netflix donates Apache Iceberg to the Apache Software Foundation, making it an open-source project. |
| 2019 | Apache Iceberg is adopted by various industries for its scalable and efficient data management capabilities. Features like schema evolution and hidden partitioning are introduced. |
| 2020 | Community contributions and adoption continue to grow, with improvements in performance and new features like time travel and metadata management. |
| 2021 | Apache Iceberg gains significant traction as a preferred table format for data lakes, integrating with major big data tools and platforms. |
| 2022 | New features and optimizations are added, enhancing Iceberg's support for complex data workflows and large-scale data environments. |
| 2023 | Apache Iceberg continues to evolve with a focus on improving data compatibility and interoperability with other data formats and systems. |
| 2024 | Databricks announced its agreement to acquire Tabular. This acquisition aims to unify Iceberg and Delta Lake. |

What Is Apache Iceberg Used For?

As we saw before, Apache Iceberg is a powerful tool for data management and analytics in large-scale environments.

Here’s a more specific overview of what Apache Iceberg is used for.

Analyzing data

Storing data as ORC or Parquet files is easy to implement, but running analytics directly over a large collection of such files is inefficient. Iceberg adds a table format on top of these files, with metadata that query engines use to optimize queries.

For example, raw data files don't record which table they belong to, but Iceberg's metadata files track which data files make up a table, along with statistics about each file. This allows query engines to decide which files to read and which to skip, significantly improving query efficiency.

Instead of listing and opening every file, a query engine first consults the metadata to find the relevant files and then reads only those, reducing unnecessary data reads.
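To make the idea concrete, here is a minimal sketch in plain Python, not Iceberg's API. It assumes a simplified, hypothetical manifest entry that stores each data file's minimum and maximum value for a column, similar in spirit to the lower and upper bounds Iceberg keeps in its manifests. A file is skipped when its value range cannot overlap the query's filter.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataFileEntry:
    """Hypothetical, simplified stand-in for one manifest entry."""
    path: str
    lower: dict  # column name -> smallest value in the file
    upper: dict  # column name -> largest value in the file


def files_to_scan(entries, column, lo, hi):
    """Return the paths of files that may contain rows with lo <= column <= hi.

    Files whose [lower, upper] range does not overlap [lo, hi] are skipped
    without being opened.
    """
    return [
        e.path
        for e in entries
        if e.upper[column] >= lo and e.lower[column] <= hi
    ]


entries = [
    DataFileEntry("data/f1.parquet", {"order_id": 1}, {"order_id": 1000}),
    DataFileEntry("data/f2.parquet", {"order_id": 1001}, {"order_id": 2000}),
    DataFileEntry("data/f3.parquet", {"order_id": 2001}, {"order_id": 3000}),
]

# Equivalent of: WHERE order_id BETWEEN 1500 AND 1600
print(files_to_scan(entries, "order_id", 1500, 1600))  # ['data/f2.parquet']
```

Only one of the three files is read; the other two are ruled out from metadata alone.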

Partition pruning

Partition pruning is a technique that skips irrelevant data and performs operations only on the necessary data.

For example, if your table is partitioned by a date column and your query filters on a date range, the engine reads only the partitions that fall within that range. This reduces the amount of data read from disk, making queries on Iceberg tables faster and more efficient.
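The following sketch illustrates partition pruning with a date-based partition, in plain Python rather than Iceberg's API. The partition value is derived from each row's timestamp, loosely mirroring Iceberg's day transform (which actually stores the number of days since the Unix epoch; a date object is used here for readability). The row layout and function names are hypothetical.

```python
from datetime import date, datetime


def day_partition(ts: datetime) -> date:
    """Derive a partition value from a timestamp (day granularity)."""
    return ts.date()


def write_partitioned(rows):
    """Group rows into partitions keyed by the derived day value."""
    partitions = {}
    for row in rows:
        partitions.setdefault(day_partition(row["event_ts"]), []).append(row)
    return partitions


def scan(partitions, start: date, end: date):
    """Read only the partitions whose key falls in [start, end]."""
    read = sorted(k for k in partitions if start <= k <= end)
    rows = [r for k in read for r in partitions[k]]
    return read, rows


rows = [
    {"id": 1, "event_ts": datetime(2024, 5, 1, 9, 30)},
    {"id": 2, "event_ts": datetime(2024, 5, 2, 14, 0)},
    {"id": 3, "event_ts": datetime(2024, 5, 2, 18, 45)},
    {"id": 4, "event_ts": datetime(2024, 5, 3, 8, 15)},
]
parts = write_partitioned(rows)

read, matched = scan(parts, date(2024, 5, 2), date(2024, 5, 2))
print(f"read {len(read)} of {len(parts)} partitions")  # read 1 of 3 partitions
print([r["id"] for r in matched])  # [2, 3]
```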

Time travel

Time travel is a feature that allows you to access older versions of your data by fetching snapshots from a specific point in time.

A snapshot is a complete set of data files at a particular moment.

Metadata files track snapshot IDs, timestamps, and history details, enabling access to each snapshot by its ID or timestamp.
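Both lookups, by snapshot ID and by timestamp, can be sketched with a toy snapshot history in plain Python. The Snapshot structure and function names below are hypothetical simplifications, not Iceberg classes; the timestamp lookup returns the latest snapshot committed at or before the requested time, which is the snapshot that was current at that moment.

```python
from bisect import bisect_right
from dataclasses import dataclass


@dataclass(frozen=True)
class Snapshot:
    """Simplified snapshot record (hypothetical structure)."""
    snapshot_id: int
    timestamp_ms: int  # commit time in milliseconds since the epoch
    data_files: tuple  # data files visible in this snapshot


def snapshot_by_id(history, snapshot_id):
    """Time travel by snapshot ID."""
    for snap in history:
        if snap.snapshot_id == snapshot_id:
            return snap
    raise KeyError(f"unknown snapshot {snapshot_id}")


def snapshot_as_of(history, timestamp_ms):
    """Time travel by timestamp: the latest snapshot committed at or before
    `timestamp_ms`. `history` must be ordered by commit time."""
    times = [snap.timestamp_ms for snap in history]
    i = bisect_right(times, timestamp_ms)
    if i == 0:
        raise ValueError("the table had no snapshot at that time")
    return history[i - 1]


history = [
    Snapshot(101, 1_000, ("a.parquet",)),
    Snapshot(102, 2_000, ("a.parquet", "b.parquet")),
    Snapshot(103, 3_000, ("b.parquet",)),  # rows in a.parquet were deleted
]

print(snapshot_as_of(history, 2_500).data_files)  # ('a.parquet', 'b.parquet')
print(snapshot_by_id(history, 101).data_files)    # ('a.parquet',)
```

In Spark 3.3 and later, Iceberg exposes the same two lookups in SQL through VERSION AS OF and TIMESTAMP AS OF clauses on SELECT queries.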

Integrations

Iceberg tables can be stored on popular cloud platforms such as Google Cloud, AWS, and Microsoft Azure. You can keep data files in their object storage services and use an external or built-in catalog service to point to the table metadata.

Once the catalog service is configured, you can read and write the tables with big data processing frameworks like Apache Spark or Apache Flink.

Iceberg also works with query engines such as Trino and Presto, so you can query tables with SQL and integrate them into existing data workflows.

Community

Apache Iceberg has an active community and an online presence on collaboration platforms like Twitter and GitHub. It also has a dedicated Slack workspace for those who want to participate in the latest development discussions.

Open-source contributors and Iceberg developers are accessible to the learning community through social platforms. This makes it easy to get a solution when you encounter issues while implementing Iceberg features.

Apache Iceberg Core Concepts

Apache Iceberg introduces a set of core concepts that enable efficient data management and querying. In this section, we will review them.

Metadata management

Iceberg manages table schemas, partitions, file locations, and more through its metadata layer, which consists of metadata files (stored as JSON) and manifest lists and manifest files (stored as Avro).

- Metadata file: tracks the table’s schema, partition spec, and snapshots.

- Manifest list: lists the manifest files that make up a snapshot.

- Manifest files: contain file-level information, such as the location, size, partition, and row and column stats of a data file.

Iceberg supports versioning through snapshot metadata. Each snapshot records its ID, its timestamp, and the manifest list that identifies its data files. A snapshot is a view of your entire table at a specific point in time.

Schema evolution

Schema evolution is the process of modifying a table schema to accommodate new data elements or changing needs. Apache Iceberg supports native schema evolution, allowing schema updates without costly data rewrites or migrations.

For example, if you’re maintaining employee data and want to track performance metrics, you can add an “employee_performance” column. Iceberg records the change in its metadata without rewriting existing data files. Rows written before the change return null for the new column, while new records can populate it.
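One reason Iceberg can add or rename columns without rewriting files is that it identifies columns by unique field IDs rather than by name. The toy model below uses plain Python with hypothetical data structures, not Iceberg's API. It shows how projecting old rows onto a newer schema yields null (None) for a column they never stored, and how a rename leaves old data readable.

```python
# Each schema version maps field IDs to column names (simplified).
schema_v1 = {1: "id", 2: "name"}
schema_v2 = {1: "id", 2: "name", 3: "employee_performance"}       # column added
schema_v3 = {1: "id", 2: "full_name", 3: "employee_performance"}  # column renamed

# Rows as stored in data files, keyed by field ID (hypothetical layout).
old_file_rows = [{1: 7, 2: "james"}]         # written under schema_v1
new_file_rows = [{1: 8, 2: "john", 3: 4.5}]  # written under schema_v2


def read_rows(rows, schema):
    """Project stored rows onto `schema` by field ID.

    Fields a file never stored come back as None, so old files need no rewrite.
    """
    return [{name: row.get(field_id) for field_id, name in schema.items()} for row in rows]


print(read_rows(old_file_rows, schema_v2))
# [{'id': 7, 'name': 'james', 'employee_performance': None}]
print(read_rows(old_file_rows + new_file_rows, schema_v3))
```

Because the rename only changes the name attached to field ID 2, the second read returns the old value "james" under the new column name full_name.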

Partitioning

Partitioning divides data into smaller subsets, allowing you to access only the data needed for a query instead of reading the entire dataset.

Iceberg supports several partitioning strategies, which it expresses as transforms applied to a source column, for example:

- Range partitioning: Divides data based on ranges of values in the partition column, such as dates or numeric values. In Iceberg, the year, month, day, and hour transforms group timestamps into time ranges.

- Hash partitioning: Applies a hash function to the partition key to divide the data into a fixed number of buckets (Iceberg's bucket transform).

- Truncate partitioning: Truncates the values of a partition column and groups the data. For example, truncating the zip codes 533405, 533404, 533689, 533098, 535209, and 535678 to 3 digits groups the data into ‘533’ and ‘535’.

- List partitioning: Matches partition key values against values in a list, dividing the data accordingly. This is suitable for categorical values in the partition column, for example, grouping laptops by manufacturer into “Lenovo,” “Apple,” and “HP.” In Iceberg, this corresponds to the identity transform, which creates one partition per distinct value.
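The sketch below writes truncate and hash (bucket) partitioning as plain Python functions and reproduces the zip-code example above. The truncate logic follows the Iceberg spec's rules for strings and integers. The bucket function is only an approximation: Iceberg's bucket transform uses a 32-bit Murmur3 hash, and zlib.crc32 is swapped in here, so the bucket numbers will not match a real Iceberg table.

```python
import zlib
from collections import defaultdict


def truncate_str(value: str, width: int) -> str:
    """Truncate a string partition value to its first `width` characters."""
    return value[:width]


def truncate_int(value: int, width: int) -> int:
    """Round an integer down to a multiple of `width`.
    Python's % returns a non-negative remainder for width > 0."""
    return value - (value % width)


def bucket(value, num_buckets: int) -> int:
    """Hash partitioning sketch: map a value to one of `num_buckets` buckets.
    Uses crc32 as a stand-in for the Murmur3 hash real Iceberg uses."""
    return (zlib.crc32(str(value).encode("utf-8")) & 0x7FFFFFFF) % num_buckets


zip_codes = ["533405", "533404", "533689", "533098", "535209", "535678"]
groups = defaultdict(list)
for z in zip_codes:
    groups[truncate_str(z, 3)].append(z)

print(dict(groups))
# {'533': ['533405', '533404', '533689', '533098'], '535': ['535209', '535678']}
print(truncate_int(533405, 1000))  # 533000
print(bucket("533405", 16))        # some bucket number in 0..15
```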

Snapshots

A snapshot is a set of manifest files valid at a specific point in time. Every change you make to the data creates a new snapshot with updated manifest files and metadata.

Iceberg uses snapshot-based querying, meaning you can read the table as it existed at a specific point in time by referencing a snapshot ID or timestamp. This allows you to access historical data and roll back to a previous version in case of accidental changes or data loss.

Apache Iceberg Technical Architecture

Apache Iceberg is not a storage engine that keeps data inside its own tables. Instead, it tracks ordinary data files with metadata so that engines can present them as a single table.

Let’s review the architecture that makes that possible.

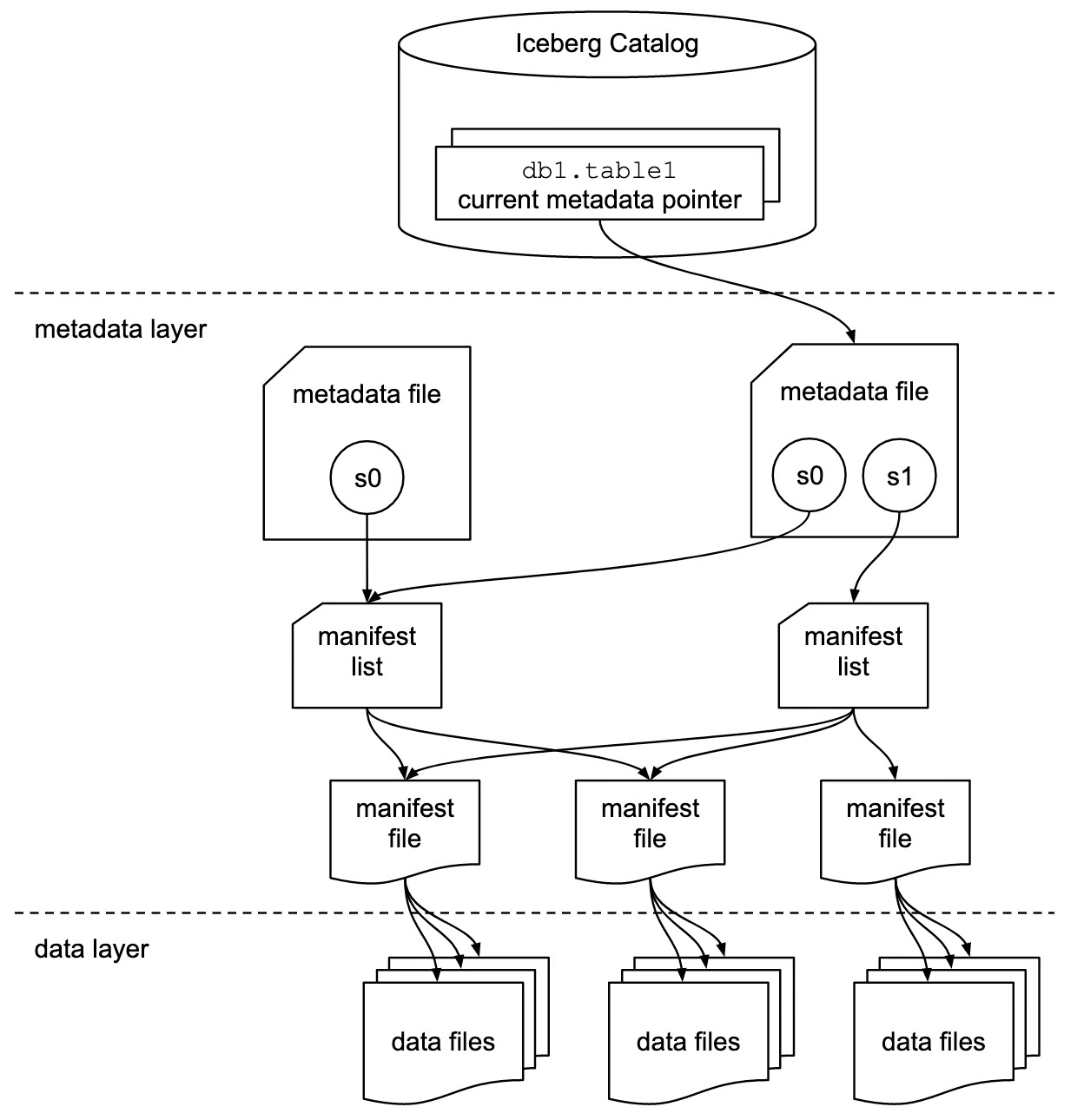


Iceberg catalog

The catalog layer holds a reference, or pointer, to each table's current metadata file. Every commit writes a new metadata file, and the catalog atomically updates the pointer to that new file.

This layer makes ACID transactions possible within Iceberg tables. For example, in-progress changes are not visible to other readers until they are committed to the table. Until then, the pointer continues to refer to the previous metadata file.

Overall, catalogs simplify ACID compliance management in Iceberg tables by pointing to specific versions of metadata files.
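The pointer swap is what makes commits atomic. The minimal sketch below models a catalog in plain Python with a lock-protected compare-and-swap; the class, function, and file names are hypothetical, not part of Iceberg. A writer that loses the race sees its swap rejected and retries on top of the newer metadata file. A real Iceberg writer would also check that its changes don't conflict with what the other writer committed before retrying.

```python
import threading


class InMemoryCatalog:
    """Toy catalog holding one pointer per table to its current metadata file."""

    def __init__(self):
        self._lock = threading.Lock()
        self._pointers = {}

    def current(self, table):
        with self._lock:
            return self._pointers.get(table)

    def swap(self, table, expected, new):
        """Compare-and-swap: move the pointer to `new` only if it still
        points at `expected`. Returns True if the commit succeeded."""
        with self._lock:
            if self._pointers.get(table) != expected:
                return False
            self._pointers[table] = new
            return True


def next_version(base):
    """Hypothetical naming scheme: 'v1.metadata.json' -> 'v2.metadata.json'."""
    n = int(base.split(".")[0][1:]) if base else 0
    return f"v{n + 1}.metadata.json"


def commit(catalog, table, write_metadata, max_retries=3):
    """Optimistic commit: read the current pointer, write new metadata based
    on it, then try to swap. Retry from the newer base if another writer won."""
    for _ in range(max_retries):
        base = catalog.current(table)
        new = write_metadata(base)
        if catalog.swap(table, base, new):
            return new
    raise RuntimeError("commit failed after retries")


catalog = InMemoryCatalog()
catalog.swap("db.emp", None, "v1.metadata.json")  # create the table

# Two writers start from the same base metadata file.
base_a = base_b = catalog.current("db.emp")
print(catalog.swap("db.emp", base_a, next_version(base_a)))  # True: writer A commits v2
print(catalog.swap("db.emp", base_b, next_version(base_b)))  # False: B's base is stale
print(commit(catalog, "db.emp", next_version))  # B retries on v2 -> v3.metadata.json
```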

Metadata layer

This layer contains three types of files:

- Metadata files: These files store the table’s schema, location, partition information, snapshot timestamps, and other information.

- Manifest files: Manifest files track metadata at the file level. For each data file, they store its path, file format, partition information, record count, and column-level statistics such as value counts, null counts, and lower and upper bounds, along with the snapshot that added the file.

- Manifest lists: A manifest list records the manifest files that make up a single snapshot, along with summary partition information for each manifest.

This layer maintains the structure and integrity of the table, enabling efficient data querying and management.
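To see how the layers fit together, the toy model below resolves a table's data files by walking from the catalog pointer to a metadata file, then to a snapshot's manifest list, its manifests, and finally the data files. Plain Python dicts stand in for the real files (Iceberg writes metadata files as JSON and manifest lists and manifests as Avro), the structure is simplified, and all file names are hypothetical. Note how the second snapshot reuses an existing manifest instead of rewriting it.

```python
# Hypothetical in-memory stand-ins for files in the metadata layer.
manifests = {
    "m1.avro": ["data/a.parquet", "data/b.parquet"],
    "m2.avro": ["data/c.parquet"],
}
manifest_lists = {
    "snap-1.avro": ["m1.avro"],
    "snap-2.avro": ["m1.avro", "m2.avro"],  # reuses m1, adds m2
}
metadata_files = {
    "v2.metadata.json": {
        "current-snapshot-id": 2,
        "snapshots": [
            {"snapshot-id": 1, "manifest-list": "snap-1.avro"},
            {"snapshot-id": 2, "manifest-list": "snap-2.avro"},
        ],
    },
}
catalog = {"db.emp": "v2.metadata.json"}  # table name -> current metadata file


def data_files(table, snapshot_id=None):
    """Resolve catalog -> metadata file -> snapshot -> manifest list ->
    manifests -> data files. Defaults to the current snapshot."""
    metadata = metadata_files[catalog[table]]
    sid = snapshot_id if snapshot_id is not None else metadata["current-snapshot-id"]
    snapshot = next(s for s in metadata["snapshots"] if s["snapshot-id"] == sid)
    files = []
    for manifest in manifest_lists[snapshot["manifest-list"]]:
        files.extend(manifests[manifest])
    return files


print(data_files("db.emp"))                 # ['data/a.parquet', 'data/b.parquet', 'data/c.parquet']
print(data_files("db.emp", snapshot_id=1))  # ['data/a.parquet', 'data/b.parquet']
```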

Data layer

The data layer is the storage component of the Iceberg architecture where the actual data resides.

Iceberg supports various data formats, including Parquet, ORC, and Avro. This flexibility allows for optimized storage and efficient data processing, catering to different data types and use cases.

Apache Iceberg Integration and Compatibility

Apache Iceberg integrates with many popular big data processing frameworks and computing engines.

Integrations with data processing engines

Apache Spark

Spark can read and write Iceberg tables through its DataFrame API, and you can also query them with the Spark SQL module.

Iceberg provides two catalog implementations for Spark: org.apache.iceberg.spark.SparkCatalog and org.apache.iceberg.spark.SparkSessionCatalog. These catalogs let Spark discover Iceberg tables and access their metadata.

- org.apache.iceberg.spark.SparkCatalog: a standalone Iceberg catalog that connects to a catalog service such as a Hive Metastore or a Hadoop (file system) warehouse.

- org.apache.iceberg.spark.SparkSessionCatalog: wraps Spark's built-in catalog so the same session can handle both Iceberg and non-Iceberg tables.
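As a sketch of how each catalog is wired up, the helper below builds the Spark configuration entries for either option as a plain Python dict. The property names follow the Iceberg Spark configuration documentation; the function names, the catalog name, and the example paths are hypothetical. The Iceberg runtime JAR still needs to be on Spark's classpath.

```python
ICEBERG_EXTENSIONS = "org.apache.iceberg.spark.extensions.IcebergSparkSessionExtensions"


def spark_catalog_conf(catalog_name, catalog_type="hadoop", warehouse=None, uri=None):
    """Config for a standalone Iceberg SparkCatalog named `catalog_name`.

    catalog_type "hadoop" keeps tables under a warehouse directory;
    "hive" uses a Hive Metastore (optionally at `uri`).
    """
    prefix = f"spark.sql.catalog.{catalog_name}"
    conf = {
        # Enables Iceberg SQL such as ALTER TABLE ... ADD PARTITION FIELD.
        "spark.sql.extensions": ICEBERG_EXTENSIONS,
        prefix: "org.apache.iceberg.spark.SparkCatalog",
        f"{prefix}.type": catalog_type,
    }
    if catalog_type == "hadoop":
        if warehouse is None:
            raise ValueError("a hadoop catalog needs a warehouse path")
        conf[f"{prefix}.warehouse"] = warehouse
    elif catalog_type == "hive":
        if uri is not None:
            conf[f"{prefix}.uri"] = uri  # e.g. "thrift://metastore-host:9083"
    else:
        raise ValueError(f"unsupported catalog type: {catalog_type}")
    return conf


def session_catalog_conf(catalog_type="hive"):
    """Config that wraps Spark's built-in catalog (spark_catalog) with
    SparkSessionCatalog, so Iceberg and non-Iceberg tables share one session."""
    return {
        "spark.sql.extensions": ICEBERG_EXTENSIONS,
        "spark.sql.catalog.spark_catalog": "org.apache.iceberg.spark.SparkSessionCatalog",
        "spark.sql.catalog.spark_catalog.type": catalog_type,
    }


conf = spark_catalog_conf("catalog_name", warehouse="file:///tmp/iceberg-warehouse")
for key, value in sorted(conf.items()):
    print(f"{key} = {value}")
```

To apply the entries, start from SparkSession.builder and call .config(key, value) for each item before .getOrCreate().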

Apache Flink

Apache Flink's integration with Iceberg is well suited to streaming data processing. It allows you to stream data directly from various sources into Iceberg tables and makes it easy to run analytics on real-time data.

Presto and Trino

Presto and Trino are known for fast, interactive SQL queries compared to Hive or other SQL engines. Therefore, if you have massive data that needs to be queried and analyzed, integrating Iceberg with Presto or Trino is a great choice.

Trino doesn’t have a built-in catalog of its own. It relies on external catalog services like Hive Metastore or AWS Glue to locate Iceberg tables.

Data lake compatibility

Traditional data lakes don't support ACID properties, which can lead to incomplete reads and conflicting concurrent writes. Adding Iceberg on top of a data lake helps ensure data consistency and accuracy.

- Amazon S3: Amazon S3 is a popular cloud storage service that supports various file formats and is commonly used as the storage layer in data lake architectures. To use Iceberg with S3, you can use AWS Glue as the catalog service. Once the catalog is configured, you can analyze tables with compatible query engines such as Amazon Athena, Apache Spark, or Trino.

- Google Cloud Storage: A data lake on Google Cloud provides a scalable and flexible data architecture. Companies whose data infrastructure is built around Google Cloud can take advantage of the Iceberg table format. Iceberg tables can be queried with Google’s BigQuery or with other engines such as Apache Spark.

- Azure Blob Storage: This is Microsoft’s storage system optimized to store massive amounts of unstructured data on the cloud. Iceberg integrates with Azure and provides fast and reliable data access.

Apache Iceberg vs Delta Lake

Apache Iceberg and Delta Lake are both advanced table formats designed to bring ACID properties to data lakes, but they differ in their features, integrations, and use cases.

Here's a detailed comparison of the two:

Delta Lake overview

Delta Lake is a table format that provides ACID properties to a collection of Parquet files, ensuring that readers never see inconsistent data.

Developed by Databricks, the company founded by the creators of Apache Spark, Delta Lake is highly compatible with Spark for big data processing and analytics.

Delta Lake uses a transaction log to provide time travel. The transaction log is a sequence of JSON files, stored in the table's _delta_log directory, that records changes made to the table. Each commit (an insertion, deletion, or update) adds a new log file describing the specific changes.

Delta Lake periodically writes checkpoint files in Parquet format. Each checkpoint summarizes the full table state at a specific version, so readers can start from it instead of replaying every JSON log file.
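The log-plus-checkpoint design can be illustrated with a toy replay in plain Python. Each hypothetical commit is a list of add and remove actions (real Delta Lake commits are JSON files in _delta_log with richer action types), and a checkpoint is modeled as a saved version number plus its set of live files. Starting from the checkpoint gives the same state as replaying the log from the beginning, with fewer commits to read.

```python
# Hypothetical commits: version -> list of (action, file path).
commits = {
    0: [("add", "part-0.parquet")],
    1: [("add", "part-1.parquet")],
    2: [("remove", "part-0.parquet"), ("add", "part-2.parquet")],
    3: [("add", "part-3.parquet")],
}


def apply_commit(live_files, actions):
    """Return the set of live files after applying one commit's actions."""
    live = set(live_files)
    for action, path in actions:
        if action == "add":
            live.add(path)
        elif action == "remove":
            live.discard(path)
    return live


def table_state(commits, version, checkpoint=None):
    """Live files at `version`. With `checkpoint` = (checkpoint_version, files),
    replay only the commits after the checkpoint instead of the whole log."""
    start, live = checkpoint if checkpoint is not None else (-1, set())
    for v in range(start + 1, version + 1):
        live = apply_commit(live, commits[v])
    return live


full_replay = table_state(commits, 3)
checkpoint_v2 = (2, table_state(commits, 2))  # what a checkpoint would store
from_checkpoint = table_state(commits, 3, checkpoint=checkpoint_v2)

print(sorted(full_replay))  # ['part-1.parquet', 'part-2.parquet', 'part-3.parquet']
print(full_replay == from_checkpoint)  # True
```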

Apache Iceberg vs. Delta Lake features comparison

| Feature | Apache Iceberg | Delta Lake |
|---|---|---|
| Definition | Iceberg's table format provides a scalable infrastructure with support for multiple processing engines. | Delta Lake is a reliable storage layer, especially suitable for the Databricks ecosystem. |
| File format | Iceberg supports various file formats, including Parquet, Avro, and ORC. | Delta Lake natively supports only the Parquet file format. |
| ACID properties support | Iceberg supports ACID transactions. | Delta Lake offers robust ACID properties. |
| Partition handling | Iceberg supports partition evolution, meaning the partition layout can be changed without rewriting existing data. | Partitions are fixed and should be defined when creating tables. Modifying defined partitions might involve data rewrites. |
| Time travel | Every change made to the table creates a new snapshot. | It offers time travel through transaction logs, with changes tracked in JSON files. |
| Integrations | Iceberg supports multiple data processing engines, such as Spark, Trino, Hive, Flink, Presto, and more. | Delta Lake is most tightly integrated with Apache Spark. |

Apache Iceberg vs. Delta Lake use cases

| Use case | Apache Iceberg | Delta Lake |
|---|---|---|
| Engine flexibility | Best when using multiple engines, including Apache Spark, Flink, Presto, Hive, etc. Ideal for environments needing different engines for different processing tasks. | Best for users who primarily use Apache Spark, offering tight integration and optimal performance within the Spark ecosystem. |
| Data streaming | Supports continuous data ingestion from various sources, processing it in real time. | Unifies batch and stream processing, which is ideal for use cases requiring both in a single pipeline. |

Apache Iceberg vs. Delta Lake additional considerations

- Community and ecosystem: Both Iceberg and Delta Lake have active communities and extensive documentation. However, Delta Lake benefits from Databricks' backing, providing robust support within the Databricks ecosystem.

- Performance: While both formats offer performance optimizations, Delta Lake's tight integration with Spark can benefit Spark-centric workflows. Iceberg's flexibility with different engines can provide performance gains in multi-engine environments.

Overall, Delta Lake is well-suited for use cases requiring real-time processing and tight integration with Spark and the Databricks ecosystem. On the other hand, Apache Iceberg offers greater flexibility for large-scale data processing and the ability to choose the best engine for specific use cases.

Getting Started With Apache Iceberg

Setting up and using Apache Iceberg involves configuring your environment and understanding basic and advanced operations. This guide will help you get started with it.

Installation and setup

To set up and run Iceberg tables, you need to have the following environments configured on your machine:

- Java JDK

- PySpark

- Python

- Apache Spark

It's also beneficial to have a basic understanding of these tools and technologies. You can learn them through the following courses:

- Big Data with PySpark

- Introduction to PySpark

- Introduction to Spark SQL in Python

- Big Data Fundamentals with PySpark

- Python Programming

Once ready, you can follow the steps below to set up Iceberg tables:

- Download the Iceberg Spark runtime JAR file that matches your Spark and Scala versions, and place it in a directory on your machine.

- Create a new folder called iceberg-warehouse to store your Iceberg tables.

```
mkdir iceberg-warehouse
```

- Configure the JAR file in a Spark session, as shown below.

```python
from pyspark.sql import SparkSession

spark = SparkSession.builder \
    .appName("Iceberg-sample") \
    .config("spark.jars", "jars/iceberg-spark-runtime-<version>.jar") \
    .getOrCreate()
```

Replace “jars/iceberg-spark-runtime-<version>.jar” with the actual path to the JAR file and the version you are using.

- Configure PySpark to use Iceberg tables.

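```python
from pyspark.sql import SparkSession

spark = (
    SparkSession.builder
    .appName("Iceberg-Sample")
    .config("spark.jars", "jars/iceberg-spark-runtime-<version>.jar")
    # Iceberg SQL extensions, needed for statements like ALTER TABLE ... ADD PARTITION FIELD
    .config("spark.sql.extensions", "org.apache.iceberg.spark.extensions.IcebergSparkSessionExtensions")
    # Register an Iceberg catalog named "catalog_name" backed by a file-system (Hadoop) warehouse
    .config("spark.sql.catalog.catalog_name", "org.apache.iceberg.spark.SparkCatalog")
    .config("spark.sql.catalog.catalog_name.type", "hadoop")
    .config("spark.sql.catalog.catalog_name.warehouse", "file:///C:/HP/iceberg-warehouse")
    .getOrCreate()
)
```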

Replace "jars/iceberg-spark-runtime-<version>.jar" with the actual path and version of the Iceberg JAR files, and ensure the warehouse path is correct.

You can now create and use Iceberg tables using PySpark.

Basic operations

- Create an Iceberg table and insert data into it.

```python
spark.sql("""CREATE TABLE catalog_name.emp_table (id INT, data STRING) USING iceberg""")
spark.sql("""INSERT INTO catalog_name.emp_table VALUES (14, 'james'), (15, 'john')""")
```

- Delete data from an Iceberg table.

```python
spark.sql("DELETE FROM catalog_name.emp_table WHERE id = 14")
```

Advanced operations

Adding, updating, or dropping columns without affecting existing data is a part of schema evolution.

- Here is how you can add a new column.

```python
spark.sql("ALTER TABLE catalog_name.emp_table ADD COLUMN salary INT")
```

Partition evolution means changing partition columns without overwriting existing data files.

- Here is how you can add a new partition column.

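The partition source column must already exist in the table, and this statement requires Iceberg's SQL extensions to be enabled in the Spark session (spark.sql.extensions set to org.apache.iceberg.spark.extensions.IcebergSparkSessionExtensions).

```python
spark.sql("ALTER TABLE catalog_name.emp_table ADD COLUMN emp_join_date DATE")
spark.sql("ALTER TABLE catalog_name.emp_table ADD PARTITION FIELD emp_join_date")
```

These steps and commands provide a basic framework for getting started with Apache Iceberg and give you a sense of what working with this format looks like.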

Conclusion

We’ve covered a lot of ground today; let’s summarize with some key takeaways.

Apache Iceberg offers a robust table structure to a set of data files, providing support for ACID transactions and ensuring data consistency and reliability.

The Iceberg format introduces dynamic schema evolution, seamless partitioning, and scalability to storage systems. Its integration with data processing engines like Apache Spark and Flink enables efficient handling of both batch and real-time streaming data.

If you’re looking to delve deeper into modern data architecture and management, consider exploring these additional resources:

FAQs

Can Apache Iceberg handle complex nested data structures?

Yes, Apache Iceberg can handle complex nested data structures. It supports nested data types like structs, maps, and arrays, making it suitable for a wide range of data scenarios.

How does Apache Iceberg ensure data consistency during concurrent writes?

Apache Iceberg ensures data consistency during concurrent writes by using optimistic concurrency control. It tracks changes with a versioned metadata layer, and each write operation creates a new snapshot. When writers commit at the same time, only one can atomically swap the catalog's metadata pointer; the others re-check their changes against the new table state and retry, or fail if the changes conflict. This preserves data integrity.

What are the performance implications of using Apache Iceberg compared to traditional data lakes?

Apache Iceberg can significantly improve performance compared to traditional data lakes by optimizing data layout, supporting hidden partitioning and partition evolution, and keeping fine-grained, file-level metadata. These features reduce the amount of data scanned during queries and improve overall query performance.

Can Apache Iceberg be used with non-Hadoop cloud storage solutions?

Yes, Apache Iceberg can be used with various cloud storage solutions, including Amazon S3, Google Cloud Storage, and Azure Blob Storage. It is designed to be storage-agnostic, allowing you to leverage Iceberg's capabilities on multiple platforms.

How does Apache Iceberg handle schema changes without requiring downtime?

Apache Iceberg handles schema changes through its native schema evolution feature. It allows you to add, remove, or update columns without requiring downtime or rewriting existing data. These changes are applied to the metadata, and new data is written according to the updated schema.

Is there a user interface available for managing and querying Iceberg tables?

While Apache Iceberg itself does not provide a built-in user interface, it can be used through data platforms and query engines that offer their own interfaces. For example, you can query Iceberg tables from SQL clients connected to engines such as Apache Spark, Trino, or Presto.

How does Apache Iceberg compare to other table formats like Apache Hudi?

Apache Iceberg and Apache Hudi both provide ACID transactions and time travel capabilities, but they have different design philosophies and use cases. Iceberg focuses on providing a high-performance table format with support for multiple engines, while Hudi emphasizes real-time data ingestion and incremental processing. The choice between them depends on specific use cases and integration needs.

Can Apache Iceberg be used for machine learning workflows?

Yes, Apache Iceberg can be used for machine learning workflows. Its ability to manage large datasets efficiently and support for multiple file formats (like Parquet and Avro) make it suitable for preparing and processing data for machine learning models. Integration with big data processing frameworks like Apache Spark further enhances its utility in ML workflows.

How does Apache Iceberg handle data security and access control?

Apache Iceberg relies on the underlying storage and processing engines for data security and access control. You can implement security measures such as encryption, IAM policies, and access controls at the storage layer (e.g., Amazon S3, Google Cloud Storage) and processing layer (e.g., Apache Spark, Flink). Additionally, catalog services like AWS Glue and Hive Metastore can be used to manage permissions and access controls.

Srujana is a freelance tech writer with a four-year degree in Computer Science. Writing about topics such as data science, cloud computing, development, programming, and security comes naturally to her. She loves classic literature and exploring new destinations.