
Big data processing often involves working with unstructured data, which can be challenging to manage and analyze. Accidental deletions or other errors may occur at any point — posing a major risk to data integrity.

Apache Iceberg and Delta Lake are open-source table formats primarily used for managing large-scale data lakes and lakehouses. Both platforms provide features like schema evolution, time travel, and ACID transactions to address the challenges of handling massive datasets. While each has unique advantages, they share a common goal: maintaining data consistency across datasets.

In this article, I’ll explain the key features, similarities, and architectural differences between Apache Iceberg and Delta Lake to help you choose the right tool for your needs.

What is Apache Iceberg?

Developed by Netflix and later donated to the Apache Software Foundation, Iceberg aims to solve the challenges of managing large-scale data lakes. It’s a high-performance format for large analytic tables that efficiently manages and queries massive datasets. Its features address many of the limitations of traditional data lake storage approaches.

Let’s understand Apache Iceberg in more detail.

Features of Apache Iceberg

Here are some of Apache Iceberg's most prominent features, which are very helpful for data engineers when working with datasets.

- Schema evolution: In traditional databases, changing the structure of your data (like adding a new column) can be a big hassle. Iceberg makes this easy. For example, if you're tracking customer data and want to add a `loyalty_points` field, you can do it without affecting existing data or breaking current queries. This flexibility is especially useful for long-term data projects that need to adapt over time.

- Partitioning: It helps organize your data into smaller, more manageable chunks. This makes queries faster because you don't have to search through all the data every time. For example, say you have a massive dataset of sales records. Iceberg can automatically organize this data by date, location, or any other relevant factor.

- Time travel: This feature allows you to easily access historical data versions. If someone accidentally deletes important information or you need to compare current data with a past state, you can travel back to a specific point in time. These point-in-time queries simplify auditing and data recovery processes.

- Data integrity: Data corruption can happen for many reasons, such as network issues, storage problems, or software bugs. Iceberg uses mathematical techniques (checksums) to detect if even a single bit of your data has changed unexpectedly. This ensures that the data you're analyzing is exactly the data that was originally stored.

- Compaction and optimization: Over time, data systems can get cluttered with many small files, which slows down processing. Iceberg periodically cleans this up by combining small files and organizing data more efficiently.

These features make Iceberg particularly good for large-scale data analytics, especially if you deal with data that changes frequently or needs to be accessed in various ways over long periods.
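
To make the schema evolution bullet more concrete, here is a minimal Python sketch of one way such a change can stay cheap: resolving columns by a stable field ID instead of by name or position. It is a toy model, not the Iceberg API. `Schema`, `read_row`, and the sample field IDs are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass

# Toy model (not the Iceberg API): each column has a stable numeric ID,
# and data files store values keyed by that ID rather than by name.


@dataclass(frozen=True)
class Schema:
    fields: dict  # field_id -> column name

    def add_column(self, field_id, name):
        """Return a new schema; existing data files are left untouched."""
        if field_id in self.fields:
            raise ValueError(f"field id {field_id} already in use")
        return Schema({**self.fields, field_id: name})


def read_row(stored_row, schema):
    """Project a stored row (field_id -> value) through the current schema.

    Columns that are missing from older files come back as None.
    """
    return {name: stored_row.get(fid) for fid, name in schema.fields.items()}


# A file written before the schema change only knows field IDs 1 and 2.
old_file_row = {1: "c-001", 2: "Ada"}

v1 = Schema({1: "customer_id", 2: "name"})
v2 = v1.add_column(3, "loyalty_points")

# A file written after the change carries the new column.
new_file_row = {1: "c-002", 2: "Grace", 3: 120}

print(read_row(old_file_row, v2))
print(read_row(new_file_row, v2))
```

The old row is never rewritten. It simply reads back with an empty `loyalty_points` value, which is why existing queries keep working.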

Check out the Apache Iceberg Explained blog post for a deep dive into this exciting technology.

What is Delta Lake?

Developed by Databricks, Delta Lake seamlessly works with Spark, making it a popular choice for organizations already invested in the Spark ecosystem. It’s an open-source storage layer that brings ACID (Atomicity, Consistency, Isolation, Durability) transactions to Apache Spark and big data workloads.

Data lakehouses built on Delta Lake bring data warehousing and machine learning workloads together on one platform and maintain data quality through scalable metadata, versioning, and schema enforcement.

Features of Delta Lake

Here are the key features that make Delta Lake a good solution for modern data processing:

- ACID transactions: Traditional data lakes often struggle with maintaining data consistency. To overcome this, Delta Lake brings ACID properties associated with databases to data lakes. This means you can perform complex operations on your data without worrying about data corruption or inconsistencies, even if something goes wrong mid-process.

- Data versioning and time travel: As data regulations like GDPR become more stringent, tracking data changes over time has become invaluable. Delta Lake's time travel feature allows you to access and restore previous versions of your data. This is helpful for compliance and running experiments with different versions of your datasets.

- Unified batch and streaming: Traditionally, organizations needed separate systems for batch processing (handling large volumes of data at once) and stream processing (dealing with real-time data). Delta Lake bridges this gap so you can use the same system for both. This simplifies your data architecture and lets you build more flexible data pipelines.

- Scalable metadata handling: As data volumes grow into the petabyte scale, managing metadata (data about your data) becomes difficult. As a result, many systems slow down considerably when dealing with millions of files. Delta Lake keeps its metadata in the transaction log and processes it with Spark's distributed engine, so it is designed to stay responsive at that scale, which makes it suitable for very large data lakes.

- Optimized reads and writes: Performance is critical in big data scenarios. Delta Lake incorporates data skipping, caching, and compaction to speed up read and write operations. This means faster queries and more efficient use of computational resources, which save costs in cloud environments.
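
Data skipping, mentioned in the last bullet, is easy to picture with a small Python sketch. The idea is that per-file minimum and maximum values let a reader rule out files without opening them. This is a toy model under that assumption, not Delta Lake's implementation. `FileStats`, `files_to_scan`, and the file names are made up for illustration.

```python
from dataclasses import dataclass

# Toy model of data skipping: per-file min/max statistics let a reader
# rule out files without opening them. File names and values are made up.


@dataclass(frozen=True)
class FileStats:
    path: str
    min_amount: float
    max_amount: float


def files_to_scan(files, lower, upper):
    """Keep only files whose [min, max] range overlaps [lower, upper]."""
    return [f.path for f in files
            if f.max_amount >= lower and f.min_amount <= upper]


files = [
    FileStats("part-000.parquet", 0.0, 49.99),
    FileStats("part-001.parquet", 50.0, 199.99),
    FileStats("part-002.parquet", 200.0, 5000.0),
]

# Query: WHERE amount BETWEEN 100 AND 150
selected = files_to_scan(files, 100, 150)
print(selected)
```

Only one of the three files can contain matching rows, so the other two are never read.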

The course Big Data Fundamentals with PySpark goes deep into modern data processing with Spark. It is a great refresher on this powerful technology.


Apache Iceberg and Delta Lake: Similarities

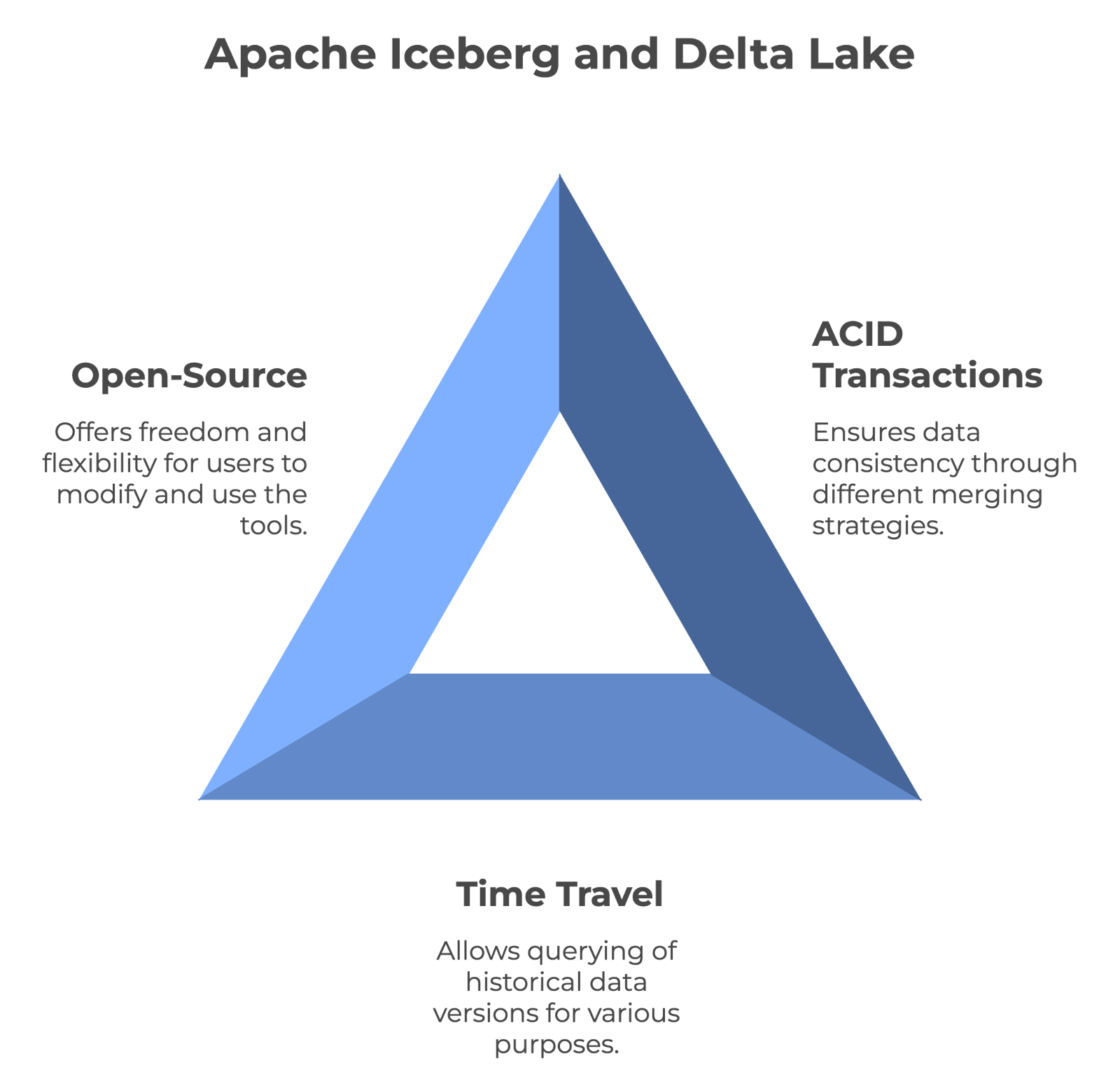

Since Apache Iceberg and Delta Lake both manage large amounts of data, let's examine their fundamental similarities.

ACID transactions and data consistency

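Both tools can provide full data consistency using ACID transactions and versioning. However, they differ in how row-level changes are typically applied. Iceberg supports a merge-on-read approach, in which changes are written separately and reconciled at query time (it can also rewrite files at write time), whereas Delta Lake has traditionally used a merge-on-write strategy, also known as copy-on-write, in which affected data files are rewritten during the write.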

As a result, each handles performance and data management differently. Iceberg can provide full schema evolution support, while Delta Lake enforces schema compliance.
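
The trade-off between applying changes at write time and at read time can be sketched in a few lines of Python. This is a toy comparison on an in-memory "file", not the behavior of either project's engine, and all function names are hypothetical.

```python
# Toy comparison of two update strategies on an immutable data file.
# Rows are (id, value) tuples; nothing here is a real table-format API.

base_file = [(1, "a"), (2, "b"), (3, "c")]


def copy_on_write_delete(data_file, ids_to_delete):
    """Rewrite the whole file at write time; reads need no extra work."""
    return [row for row in data_file if row[0] not in ids_to_delete]


def merge_on_read_delete(ids_to_delete):
    """Write only a small delete file; the base file is left untouched."""
    return set(ids_to_delete)


def merge_on_read_scan(data_file, delete_file):
    """Apply the recorded deletes while reading."""
    return [row for row in data_file if row[0] not in delete_file]


rewritten = copy_on_write_delete(base_file, {2})
delete_file = merge_on_read_delete({2})

print(rewritten)
print(merge_on_read_scan(base_file, delete_file))
```

Both paths return the same rows. The difference is where the cost lands: the first pays it once during the write, the second pays a little on every read until the files are compacted.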

Support for time travel

Time travel functionality allows users to query historical versions of data. This makes it invaluable for auditing, debugging, and even reproducing experiments. Both Iceberg and Delta Lake support time travel, which means you can query a previous state of a table directly instead of restoring it from a backup.
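
Here is a minimal Python sketch of the idea behind time travel, assuming every commit keeps an immutable snapshot and a reader picks the latest one at or before a requested time. `Table`, `commit`, and `read` are hypothetical names for this toy model and the timestamps are arbitrary integers.

```python
import bisect
from dataclasses import dataclass, field

# Toy model of time travel: every commit appends an immutable snapshot,
# and a reader picks the latest snapshot at or before a given timestamp.


@dataclass
class Table:
    timestamps: list = field(default_factory=list)  # commit times, ascending
    snapshots: list = field(default_factory=list)   # full row list per commit

    def commit(self, ts, rows):
        if self.timestamps and ts <= self.timestamps[-1]:
            raise ValueError("commit timestamps must increase")
        self.timestamps.append(ts)
        self.snapshots.append(list(rows))

    def read(self, as_of=None):
        """Return the current rows, or the rows as of a past timestamp."""
        if as_of is None:
            return self.snapshots[-1]
        idx = bisect.bisect_right(self.timestamps, as_of) - 1
        if idx < 0:
            raise LookupError("no snapshot exists at or before that time")
        return self.snapshots[idx]


orders = Table()
orders.commit(100, ["order-1"])
orders.commit(200, ["order-1", "order-2"])
orders.commit(300, [])  # an accidental delete of everything

print(orders.read())           # current (empty) state
print(orders.read(as_of=250))  # state before the accidental delete
```

Real tables store snapshots as metadata pointing at shared data files rather than as full copies, but the lookup logic is the same in spirit.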

Open-source nature

Apache Iceberg and Delta Lake are open-source technologies. This means anyone can use them for free and even help improve them. In addition, being open-source means you're not tied to one company's product — you have more freedom to switch or combine tools as needed. And since their code is public, you can even optimize it for your specific needs.

The main features shared by Apache Iceberg and Delta Lake. Image by Author created with napkin.ai.

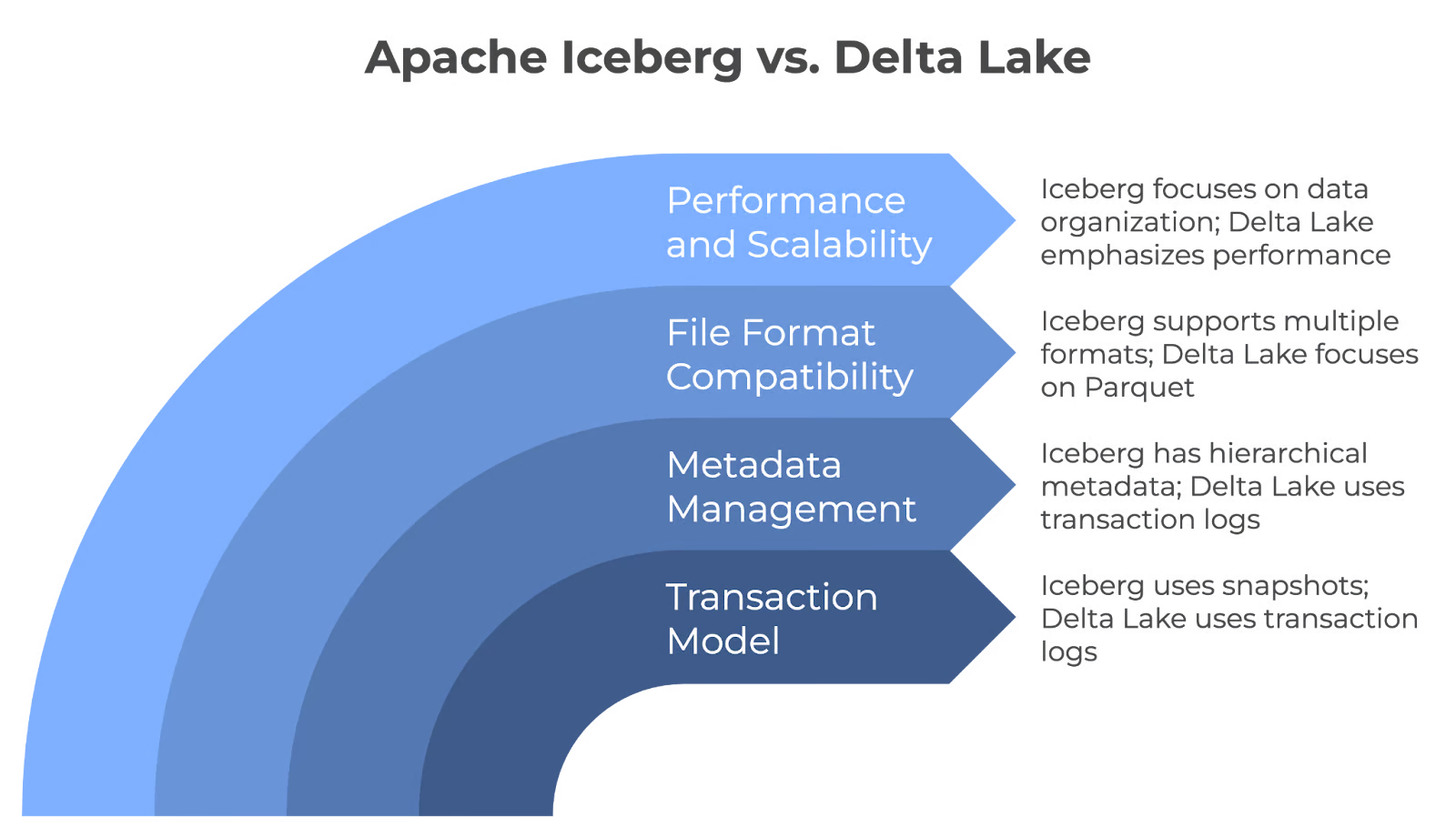

Iceberg vs Delta Lake: Core Architectural Differences

While Iceberg and Delta Lake share similarities, they differ in architecture. Let's see how:

Transaction model

Iceberg and Delta Lake ensure data reliability in data lakes, but they achieve this through different mechanisms. Iceberg uses snapshots for atomic transactions, so changes are either fully committed or rolled back. Delta Lake, by contrast, uses a transaction log so that only validated changes are committed to the table, which keeps data updates reliable.
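
The snapshot side of this can be illustrated with a short Python sketch. It assumes a writer prepares a complete new snapshot and then publishes it by swapping a single pointer, which is only allowed if nobody else committed in the meantime. `SnapshotTable` and its methods are hypothetical and do not mirror a real catalog API.

```python
# Toy model of snapshot-based commits: a writer prepares a complete new
# snapshot off to the side, then publishes it with one pointer swap.
# Class and method names are illustrative, not a real catalog API.


class SnapshotTable:
    def __init__(self):
        self.snapshots = {0: ()}   # snapshot id -> immutable tuple of rows
        self.current_id = 0        # the single pointer readers follow

    def read(self):
        return self.snapshots[self.current_id]

    def commit(self, expected_id, new_rows):
        """Publish new_rows only if no one else committed in the meantime."""
        if expected_id != self.current_id:
            return False  # stale writer: nothing was changed
        new_id = max(self.snapshots) + 1
        self.snapshots[new_id] = tuple(new_rows)
        self.current_id = new_id  # the atomic step
        return True

    def rollback(self, snapshot_id):
        """Point the table back at an earlier snapshot."""
        if snapshot_id not in self.snapshots:
            raise KeyError(snapshot_id)
        self.current_id = snapshot_id


table = SnapshotTable()
base = table.current_id
first_ok = table.commit(base, ["row-1"])
second_ok = table.commit(base, ["row-2"])  # built on a stale snapshot

print(first_ok, second_ok, table.read())
table.rollback(0)
print(table.read())
```

Readers only ever see the table before or after the pointer moves, never a half-written state.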

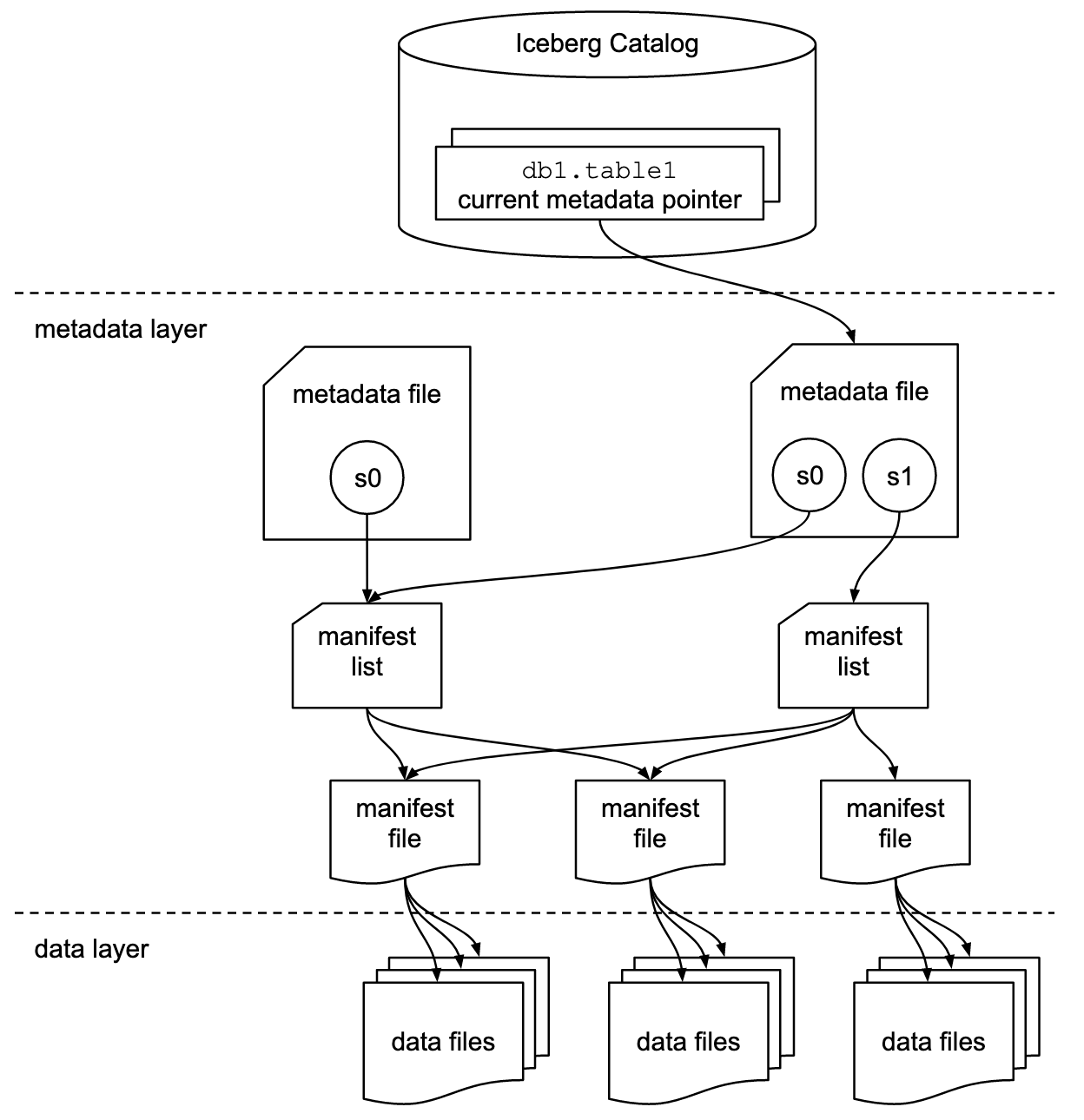

Metadata management

Apache Iceberg employs a hierarchical metadata structure, which includes manifest files, manifest lists, and metadata files. This design streamlines query processing by eliminating costly operations like file listing and renaming.

However, Delta Lake adopts a transaction-based approach to record each transaction in a log. To enhance query efficiency and simplify log management, it periodically consolidates these logs into Parquet checkpoint files, which capture the complete table state.
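
To show why checkpoints help, here is a toy Python model of a transaction log. It assumes each commit is a list of add and remove actions and that a checkpoint stores the full set of live files, so a reader can start from the nearest checkpoint instead of replaying everything. `LogTable` and the checkpoint interval of 3 are assumptions for this example, not Delta Lake's actual settings or API.

```python
# Toy model of a transaction log with checkpoints. Each commit is a list
# of ("add", path) / ("remove", path) actions; a checkpoint stores the
# full set of live files so readers can skip replaying older commits.
# The interval of 3 is an arbitrary choice for this example.

CHECKPOINT_INTERVAL = 3


class LogTable:
    def __init__(self):
        self.commits = []      # commits[v] holds the actions of version v
        self.checkpoints = {}  # version -> frozenset of live file paths

    def commit(self, actions):
        self.commits.append(list(actions))
        version = len(self.commits) - 1
        if (version + 1) % CHECKPOINT_INTERVAL == 0:
            self.checkpoints[version] = frozenset(self.live_files(version))
        return version

    def live_files(self, version=None):
        """Rebuild table state: start at the nearest checkpoint, then replay."""
        if version is None:
            version = len(self.commits) - 1
        usable = [v for v in self.checkpoints if v <= version]
        start = max(usable) if usable else -1
        files = set(self.checkpoints[start]) if usable else set()
        for actions in self.commits[start + 1:version + 1]:
            for op, path in actions:
                if op == "add":
                    files.add(path)
                else:
                    files.discard(path)
        return files


log = LogTable()
log.commit([("add", "a.parquet")])
log.commit([("add", "b.parquet")])
log.commit([("remove", "a.parquet"), ("add", "c.parquet")])  # version 2, checkpointed
log.commit([("add", "d.parquet")])

print(sorted(log.live_files()))
print(sorted(log.live_files(version=1)))
```

Reading the latest version only replays one commit on top of the checkpoint, while reading version 1 falls back to replaying the log from the start.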

File format compatibility

Iceberg is flexible with file formats and can work natively with Parquet, ORC, and Avro files. This is helpful if you have data in different formats or if you want to switch formats in the future without changing your entire system.

Delta Lake primarily stores data in the Parquet format because Parquet is efficient, especially for analytical queries. It only focuses on one format to give the best possible performance for that specific file type.

Performance and scalability

Iceberg and Delta Lake scale data lakes but employ different strategies. Iceberg prioritizes advanced data organization with features like partitioning and compaction, while Delta Lake emphasizes high performance through its Delta Engine, auto compaction, and indexing capabilities.
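
Compaction is the easiest of these strategies to sketch. The Python below plans which small files to merge so that each merged file approaches a target size. It is a simple greedy packing written for illustration, not the algorithm either project ships, and the 128 MB target and the file sizes are made-up numbers.

```python
# Toy sketch of compaction planning: group small files into batches that
# approach a target size, so each batch can be rewritten as one file.
# The target and the file sizes (in MB) are made-up numbers.

TARGET_MB = 128


def plan_compaction(file_sizes_mb, target_mb=TARGET_MB):
    """Greedy first-fit-decreasing packing of files that are below the target."""
    small = sorted((s for s in file_sizes_mb if s < target_mb), reverse=True)
    bins = []
    for size in small:
        for group in bins:
            if sum(group) + size <= target_mb:
                group.append(size)
                break
        else:
            bins.append([size])
    # A group with a single file gains nothing from being rewritten.
    return [group for group in bins if len(group) > 1]


sizes = [4, 60, 200, 30, 8, 64, 16]
plan = plan_compaction(sizes)
print(plan)
```

Six small files become two larger ones, and the 200 MB file is left alone because it already exceeds the target.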

Apache Iceberg and Delta Lake core differences. Image by Author created with napkin.ai.

Use Cases for Apache Iceberg

Due to its unique features, Apache Iceberg has quickly become a go-to solution for modern data lake management. Let’s examine some of its primary use cases to understand its strengths.

Cloud-native data lakes

Here's why Iceberg is a popular choice for organizations building data lakes that operate at a cloud scale:

- Strong schema evolution: It lets you add, drop, or modify columns without affecting existing queries. For example, if you need to add a new field to track user preferences, you can do so without rebuilding your entire dataset or updating all your queries.

- Performance: Advanced techniques like data clustering and metadata management optimize query performance. They quickly prune unnecessary data files to reduce the amount of scanned data and improve query speed.

- Scalability: Manage billions of files and petabytes of data. In addition, its partition evolution feature lets you change how data is organized without downtime or expensive migrations.
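
The file pruning mentioned under Performance can be pictured with a small Python sketch. It assumes rows are grouped by a value derived from a timestamp column (here, the calendar day) so that a date filter only touches matching groups. `day_transform`, `write`, and `scan` are hypothetical helpers, not Iceberg functions.

```python
from collections import defaultdict
from datetime import date, datetime

# Toy model of partition pruning: rows are grouped by a value derived
# from a timestamp column (here, the calendar day), and a query with a
# date filter only touches the matching groups. Names are illustrative.


def day_transform(ts):
    """Derive the partition value from a row's timestamp."""
    return ts.date()


def write(rows):
    partitions = defaultdict(list)
    for row in rows:
        partitions[day_transform(row["sold_at"])].append(row)
    return dict(partitions)


def scan(partitions, start, end):
    """Read only partitions whose day falls inside [start, end]."""
    touched = [day for day in sorted(partitions) if start <= day <= end]
    rows = [row for day in touched for row in partitions[day]]
    return touched, rows


sales = [
    {"sold_at": datetime(2024, 1, 1, 9, 30), "amount": 20},
    {"sold_at": datetime(2024, 1, 1, 17, 5), "amount": 35},
    {"sold_at": datetime(2024, 1, 2, 11, 0), "amount": 50},
    {"sold_at": datetime(2024, 1, 3, 8, 45), "amount": 15},
]

table = write(sales)
touched, rows = scan(table, date(2024, 1, 2), date(2024, 1, 3))
print(touched, sum(r["amount"] for r in rows))
```

Because the partition value is derived from the timestamp, the query filters on the original column and never needs to know how the data is laid out.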

Complex data models

Teams dealing with complex data models find Iceberg particularly useful because of the following:

- Schema flexibility: Supports nested data types (structs, lists, and maps) to represent complex relationships. For example, an e-commerce platform could store order details, including nested structures for items and customer data, all within a single table.

- Time-travel queries: Iceberg maintains snapshots of your data, so you can query a table as it existed at the time of any retained snapshot. This is invaluable for reconstructing the state of your data for compliance purposes or rerunning analyses on historical snapshots.

Integration with tools

Iceberg is compatible with a wide range of tools, making it a versatile choice for the data ecosystem. It works seamlessly with the following:

- Apache Spark for large-scale data processing and machine learning.

- Trino for fast, distributed SQL queries across multiple data sources.

- Apache Flink for real-time stream processing and batch computation.

The following major cloud providers offer native support for Iceberg:

- Amazon Web Services (AWS) integrates with AWS Glue, Redshift, EMR, and Athena.

- Google Cloud Platform (GCP) works with BigQuery and Dataproc.

- Microsoft Azure is compatible with Azure Synapse Analytics.

Iceberg tables can also be accessed from several languages:

- SQL: Write standard SQL queries against Iceberg tables.

- Python: Use PySpark or libraries like pyiceberg for data manipulation.

- Java: Leverage the native Java API for low-level operations and custom integrations.

Apache Iceberg table format specification. Image source: Iceberg documentation.

Use Cases for Delta Lake

Delta Lake can solve common challenges and make data management easier. Let's examine some key situations where it really helps.

Unified batch and streaming workloads

With Delta Lake, you don't need separate systems for batch and streaming data. Instead, one system handles both. You can add new data to your tables in real time, and it's immediately ready for analysis. This means your data is always up-to-date.

You can build a single workflow that handles old and new data to make your whole system less complex and easier to manage. This unified approach will simplify your data pipelines.
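
A rough Python sketch shows how one table can serve both kinds of reader. It assumes each commit appends a new version, a batch reader scans all versions, and a streaming reader remembers the last version it processed. `VersionedTable` and `StreamReader` are hypothetical names for this toy model, not Delta Lake or Spark classes.

```python
# Toy model of one table serving both batch and streaming readers.
# Each commit appends a new version; a batch reader sees everything,
# while a streaming reader remembers the last version it processed
# and picks up only what was committed afterwards.


class VersionedTable:
    def __init__(self):
        self.versions = []  # versions[i] is the list of rows added by commit i

    def append(self, rows):
        self.versions.append(list(rows))

    def batch_read(self):
        return [row for commit in self.versions for row in commit]


class StreamReader:
    def __init__(self, table):
        self.table = table
        self.next_version = 0  # checkpoint of the reader's progress

    def poll(self):
        """Return rows from commits not yet seen, then advance the offset."""
        new_commits = self.table.versions[self.next_version:]
        self.next_version = len(self.table.versions)
        return [row for commit in new_commits for row in commit]


events = VersionedTable()
stream = StreamReader(events)

events.append(["click-1", "click-2"])
first_poll = stream.poll()

events.append(["click-3"])
second_poll = stream.poll()
third_poll = stream.poll()

print(first_poll, second_poll, third_poll)
print(events.batch_read())
```

The streaming reader never sees a row twice, and the batch reader always sees the full history, even though both read the same underlying versions.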

ACID transactions for data lakes

Delta Lake brings strong data reliability to data lakes through ACID transactions. For example:

- Hospitals can maintain accurate and up-to-date patient records to provide proper care and follow privacy rules.

- Banks can ensure all financial transactions are recorded accurately and can't be accidentally changed.

- Retail stores can keep their inventory counts precise, even when many updates occur simultaneously. This helps prevent problems like selling items that aren't actually in stock.

Delta Lake achieves this reliability by ensuring that all changes are either completely applied or not at all. In addition, it ensures that data always moves from one valid state to another and that different operations don't interfere with each other.
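
The retail example can be turned into a small Python sketch of optimistic concurrency. It assumes a writer reads the table at some version and may only commit as the next version, so a second writer working from the same version is rejected and has to retry. `InventoryLog`, `sell`, and `ConflictError` are hypothetical names, and this is a simplified illustration rather than Delta Lake's actual conflict-resolution logic.

```python
# Toy model of optimistic concurrency on a versioned log. A writer reads
# the table at some version, computes its change, and may only commit as
# the next version. If another writer got there first, it must re-read
# and retry. The inventory example and all names are hypothetical.


class ConflictError(Exception):
    pass


class InventoryLog:
    def __init__(self, stock):
        self.states = [dict(stock)]  # states[v] is the table at version v

    @property
    def version(self):
        return len(self.states) - 1

    def read(self):
        return self.version, dict(self.states[-1])

    def commit(self, read_version, new_state):
        if read_version != self.version:
            raise ConflictError("table changed since it was read")
        if any(qty < 0 for qty in new_state.values()):
            raise ValueError("stock cannot go negative")  # keep state valid
        self.states.append(dict(new_state))


def sell(log, item, qty, max_retries=3):
    for _ in range(max_retries):
        version, state = log.read()
        state[item] -= qty
        try:
            log.commit(version, state)
            return True
        except ConflictError:
            continue  # someone else committed first; re-read and retry
        except ValueError:
            return False  # rejected: nothing was written
    return False


log = InventoryLog({"widget": 5})

# Two writers read the same version, then both try to commit.
v_a, state_a = log.read()
v_b, state_b = log.read()
state_a["widget"] -= 3
state_b["widget"] -= 3
log.commit(v_a, state_a)
try:
    log.commit(v_b, state_b)
    b_committed = True
except ConflictError:
    b_committed = False

oversold = sell(log, "widget", 3)  # only 2 left, so this is rejected
print(b_committed, oversold, log.read())
```

The second writer's stale update is refused outright, and the oversell attempt leaves the table exactly as it was, which is the all-or-nothing behavior described above.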

Apache Spark ecosystem

Delta Lake also works seamlessly with Apache Spark, a big advantage for many organizations. If you're already using Spark, adding Delta Lake would be simple and won’t require major changes to your existing setup. Your team can use the same Spark tools and SQL commands they're familiar with.

Delta Lake can also make your Spark jobs run faster, especially when dealing with large amounts of data. It does this by organizing data more efficiently and using indexing techniques such as data skipping.

Apache Iceberg vs Delta Lake: A Summary

Let's summarize the key differences between Apache Iceberg and Delta Lake to help you quickly understand their format strengths and key features.

| Features | Apache Iceberg | Delta Lake |
| --- | --- | --- |
| ACID transaction | Yes | Yes |
| Time travel | Yes | Yes |
| Data versioning | Yes | Yes |
| File format | Parquet, ORC, Avro | Parquet |
| Schema evolution | Full | Partial |
| Integration with other engines | Apache Spark, Trino, Flink | Primarily Apache Spark |
| Cloud Compatibility | AWS, GCP, Azure | AWS, GCP |
| Query Engines | Spark, Trino, Flink | Spark |
| Programming Language | SQL, Python, Java | SQL, Python |

Note: The best choice depends on your needs, scalability requirements, and long-term data strategy.

Conclusion

When choosing between Apache Iceberg and Delta Lake, consider your specific use case and existing technology stack. Iceberg's flexibility with file formats and query engines makes it ideal for cloud-native environments. Delta Lake's tight integration with Apache Spark, on the other hand, makes it a good option for organizations heavily invested in the Spark ecosystem.

You can also check out these DataCamp resources to strengthen your data engineering knowledge:

- Understanding Data Engineering course to grasp fundamental concepts.

- Database Design and Data Warehousing courses for structuring large-scale data effectively.

- Modern Data Architecture course to learn about current best practices and trends.

Happy learning!


FAQs

What is the primary difference between Merge-on-Read and Merge-on-Write strategies?

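Merge-on-Read (supported by Iceberg) leaves existing data files untouched, records changes separately, and applies them at query time, which keeps writes fast at the cost of extra work on reads. Merge-on-Write (Delta Lake's traditional approach, also known as copy-on-write) merges updates into the base data during the writing process to ensure faster reads at the cost of slower write operations.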

Can Apache Iceberg and Delta Lake handle petabyte-scale data?

Yes, both Apache Iceberg and Delta Lake efficiently handle petabyte-scale datasets. They provide advanced features such as partitioning, metadata management, and compaction to optimize large data lake performance.

How do Apache Iceberg and Delta Lake perform when querying deeply nested data structures?

Apache Iceberg is excellent at querying deeply nested data structures. It supports columnar storage formats like Parquet, which are optimized for nested data. On the flip side, Delta Lake also supports nested data, but its performance may vary depending on query complexity and the size of the dataset.

Can Apache Iceberg and Delta Lake work together in the same data lake architecture?

Yes, both Apache Iceberg and Delta Lake can coexist within the same data lake. Each can be used for different use cases depending on the requirements. For example, you might use Delta Lake for workloads centered around Apache Spark and Iceberg for more flexible integration with diverse query engines.

Are there limitations when using Delta Lake with non-Spark engines?

Delta Lake is tightly integrated with Apache Spark, and while it can work with other engines like Presto or Hive, these integrations are still evolving. The performance might not be as optimized as with Spark, which could limit its effectiveness outside of the Spark ecosystem.

How do Iceberg and Delta Lake handle small file problems in data lakes?

Both Apache Iceberg and Delta Lake address the small file problem through compaction. Iceberg periodically combines small files to optimize query performance, while Delta Lake uses auto compaction to consolidate files during write operations. This ensures better performance by reducing the overhead caused by many small files.

Why did Databricks acquire Tabular (the company behind Iceberg)?

Databricks acquired Tabular to strengthen its capabilities with Apache Iceberg, which was originally created by the founders of Tabular. The acquisition aims to improve compatibility and interoperability between Delta Lake and Iceberg, ultimately working towards a unified lakehouse format that combines the strengths of both open-source projects.

What does the Tabular acquisition mean for Delta Lake and Iceberg users?

The acquisition means that Databricks will be actively working to bring Delta Lake and Iceberg closer together in terms of compatibility. Users will benefit from improved integration, with Delta Lake UniForm already serving as a platform to enable interoperability between Delta Lake, Iceberg, and Apache Hudi. This allows users to work across different formats without being locked into a single solution.

Will Databricks continue to support both Delta Lake and Iceberg?

Yes, Databricks has indicated its commitment to both formats. By acquiring Tabular and bringing the original creators of Apache Iceberg into the fold, Databricks is looking to foster collaboration between the Iceberg and Delta Lake communities. Their goal is to develop a single, open standard for lakehouse format interoperability, which will provide users more options and flexibility.

I'm a content strategist who loves simplifying complex topics. I’ve helped companies like Splunk, Hackernoon, and Tiiny Host create engaging and informative content for their audiences.