
Databricks has recently introduced DBRX, its open general-purpose large language model (LLM) built on a mixture-of-experts (MoE) architecture with a fine-grained approach. Instead of using a single neural network for all tasks, the system comprises multiple specialized "expert" networks, each optimized for different types of tasks or data.

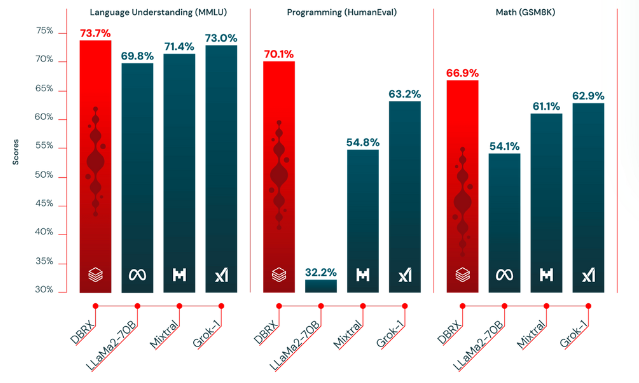

Databricks reports that DBRX outperforms established LLMs like GPT-3.5 and LLaMA2 while being faster and more cost-effective to run. According to tests, DBRX scores 73.7% in language understanding tasks, compared with 69.8% for LLaMA2.

In this article, we’ll discuss its capabilities and how to get started with Databricks DBRX in more detail.

What is Databricks DBRX?

DBRX uses a transformer-based decoder-only architecture trained using next-token prediction. It employs a fine-grained mixture-of-experts (MoE) architecture. Here, ‘experts’ are specialized sub-networks inside the model, and a router decides which of them process each input.

DBRX has a large number of smaller experts (16 experts in total) and chooses a subset of them (4 experts) for any given input.
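To make the "choose 4 of 16" idea concrete, here is a minimal sketch of generic top-k expert routing in plain Python. The router scores are made up, and the function name `top_k_route` is hypothetical. DBRX's actual router may differ in its details, so treat this as an illustration of the general technique rather than the model's implementation.

```python
import math

def top_k_route(router_logits, k=4):
    """Pick the k highest-scoring experts and softmax-normalize their weights."""
    # Indices of the k largest logits, highest first.
    top = sorted(range(len(router_logits)),
                 key=lambda i: router_logits[i], reverse=True)[:k]
    # Softmax over only the selected logits (subtract the max for stability).
    m = max(router_logits[i] for i in top)
    exps = [math.exp(router_logits[i] - m) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]

# 16 made-up router scores, one per expert (illustrative values only).
logits = [0.1, 2.0, -1.0, 0.5, 1.5, 0.0, -0.5, 3.0,
          0.2, 0.3, -2.0, 1.0, 0.4, -0.3, 0.6, 0.9]

routing = top_k_route(logits, k=4)
for expert_id, weight in routing:
    print(f"expert {expert_id}: weight {weight:.3f}")
```

Only the four selected experts would run for this input, and their outputs would be combined using the printed weights.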

Simply put, this fine-grained approach with 65x more possible combinations of experts improves model quality compared to other open MoE models like Mixtral and Grok-1, which have fewer experts and choose fewer experts per input.
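The 65x figure can be checked with a quick count of expert subsets. This sketch assumes the comparison is against a model with 8 experts that chooses 2 per input, which is how Databricks describes Mixtral and Grok-1 in its announcement.

```python
from math import comb

# Number of ways to choose 4 active experts out of 16 (DBRX).
dbrx_combinations = comb(16, 4)

# Assumed comparison point: 8 experts, 2 chosen per input.
other_combinations = comb(8, 2)

ratio = dbrx_combinations / other_combinations
print(dbrx_combinations, other_combinations, ratio)  # 1820 28 65.0
```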

Here are some key details about DBRX:

- Parameter size: DBRX has a total of 132 billion parameters, out of which 36 billion parameters are active on any input.

- Pretraining data: It was pre-trained on 12 trillion tokens of carefully curated data with a maximum context length of 32,000 tokens. Databricks estimates this data is at least 2x better token-for-token than the data used to pre-train the MPT family of models.

How was DBRX trained?

The model was pre-trained on an impressively large dataset, estimated to be twice as effective as previous datasets used by Databricks. A suite of Databricks tools, such as Apache Spark and Databricks notebooks for data processing and Unity Catalog for data governance, was used to train the model.

During its training, curriculum learning was employed, and the data mix was changed to substantially improve model quality. Such strategic alterations in the training data mix optimized the model's ability to handle diverse inputs effectively.

Some of the key technologies used for DBRX’s pretraining include:

- Rotary Position Encodings (RoPE): A method in transformers that encodes token positions by rotational transformations in high-dimensional space, enhancing sequence understanding.

- Gated Linear Units (GLU): A neural network layer using a gate mechanism to control information flow, aiding in capturing complex patterns.

- Grouped Query Attention (GQA): An attention mechanism in which groups of query heads share a single set of key and value heads, reducing memory use and computational cost.

- GPT-4 tokenizer from the tiktoken repository: A specific tokenizer implementation for GPT-4, converting text into model-ready tokens, optimized for GPT-4's processing needs.
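As an illustration of the first item, here is a minimal sketch of rotary position encodings in plain Python. It rotates consecutive pairs of vector components by a position-dependent angle and shows the property that makes RoPE useful: the attention score between a query and a key depends only on their relative distance. The `base` value of 10000 and the consecutive-pair layout are assumptions taken from the common formulation, not details confirmed for DBRX.

```python
import math

def rope(vec, position, base=10000.0):
    """Rotate consecutive pairs of `vec` by angles that depend on `position`."""
    dim = len(vec)
    out = []
    for i in range(0, dim, 2):
        # Each pair gets its own rotation frequency.
        theta = position * base ** (-i / dim)
        c, s = math.cos(theta), math.sin(theta)
        x, y = vec[i], vec[i + 1]
        out.extend([x * c - y * s, x * s + y * c])
    return out

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

# Made-up query and key vectors (illustrative values only).
q = [1.0, 0.5, -0.3, 2.0]
k = [0.2, -1.0, 0.7, 0.1]

# Same relative distance (3 tokens apart) at two different absolute positions.
score_near_start = dot(rope(q, 5), rope(k, 2))
score_shifted = dot(rope(q, 105), rope(k, 102))
print(abs(score_near_start - score_shifted) < 1e-9)
```

Because both pairs of positions are three tokens apart, the two scores agree up to floating-point error.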

How Does DBRX Compare to Similar Models?

Databricks claims its DBRX model is superior to several top open-source models in terms of efficiency and task performance.

Here’s a detailed comparison of how DBRX stacks up against its competitors:

1) DBRX vs. LLaMA2-70B

- General knowledge (MMLU): DBRX Instruct has a 9.8% higher score over LLaMA2-70B.

- Commonsense reasoning (HellaSwag dataset): DBRX Instruct continues to lead with a 3.1% higher score.

- Databricks gauntlet (a benchmark suite that assesses pre-trained LLMs' capabilities across different tasks): DBRX Instruct has a strong lead, with a 14% higher score than LLaMA2-70B.

- Programming and mathematical reasoning: DBRX Instruct stands out with leads of 37.9% in programming and 40.2% in math reasoning.

2) DBRX Instruct vs. Mixtral Instruct

- General knowledge: DBRX Instruct edges out Mixtral Instruct with a 2.3% higher score.

- Commonsense reasoning (HellaSwag dataset): DBRX Instruct performs better with a 1.4% higher score.

- Databricks gauntlet: DBRX Instruct surpasses Mixtral Instruct by a notable margin of 6.1%.

- Programming and mathematical reasoning: DBRX Instruct outperformed Mixtral Instruct by 15.3% in programming and 5.8% in mathematical reasoning.

3) DBRX vs Grok-1

- General knowledge: The performance is somewhat similar, with DBRX Instruct holding a slight edge of 0.7% over Grok-1.

- Programming and mathematical reasoning: DBRX Instruct outperformed Grok-1 with a 6.9% lead in programming and a 4% lead in math reasoning.

4) DBRX vs Mixtral Base

- General knowledge: DBRX Instruct slightly leads with a 1.8% higher score than Mixtral Base.

- Commonsense reasoning (HellaSwag dataset): DBRX scores higher with a 2.5% difference.

- Databricks gauntlet: DBRX leads with 10% over Mixtral Base.

- Programming and mathematical reasoning: DBRX Instruct shows a remarkable performance lead with a 29.9% higher score than Mixtral Base.

Comparison table

Below, we’ve collated the comparisons into a table, and shown a graph based on some of the results:

| Model Comparison | General Knowledge | Commonsense Reasoning | Databricks Gauntlet | Programming Reasoning | Mathematical Reasoning |
| --- | --- | --- | --- | --- | --- |
| DBRX vs LLaMA2-70B | +9.8% | +3.1% | +14% | +37.9% | +40.2% |
| DBRX vs Mixtral Instruct | +2.3% | +1.4% | +6.1% | +15.3% | +5.8% |
| DBRX vs Grok-1 | +0.7% | Not available | Not available | +6.9% | +4% |
| DBRX vs Mixtral Base | +1.8% | +2.5% | +10% | +29.9% | Not available |

Comparing DBRX’s quality with other open-source LLMs - source

How to Use DBRX: A Step-By-Step Guide

Before accessing DBRX, ensure your system has at least 320GB of memory. The steps below walk through accessing the model.
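A rough back-of-the-envelope calculation helps explain the 320GB figure. This sketch assumes the weights are held in bfloat16 (2 bytes per parameter) and ignores activations, the KV cache, and framework overhead, so it is only a lower bound on what the weights alone need.

```python
TOTAL_PARAMS = 132e9          # total parameter count quoted for DBRX
BYTES_PER_PARAM_BF16 = 2      # assumption: weights stored in bfloat16

# Memory needed just to hold the weights, in decimal gigabytes.
weights_gb = TOTAL_PARAMS * BYTES_PER_PARAM_BF16 / 1e9

# What remains of the recommended 320GB for everything else.
headroom_gb = 320 - weights_gb

print(f"weights: {weights_gb:.0f} GB, headroom: {headroom_gb:.0f} GB")
```

Under these assumptions, the weights take roughly 264 GB, leaving about 56 GB of the recommended memory for everything else.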

Accessing DBRX

- Install the transformers library:

    pip install "transformers>=4.40.0"

- Then, request an access token with read permissions from Hugging Face. (This is needed to download the model.)

- Once you’ve got access, import and load the model using the following code:

    from transformers import AutoTokenizer, AutoModelForCausalLM
    import torch

    tokenizer = AutoTokenizer.from_pretrained("databricks/dbrx-base", token="hf_YOUR_TOKEN")
    model = AutoModelForCausalLM.from_pretrained("databricks/dbrx-base", device_map="auto", torch_dtype=torch.bfloat16, token="hf_YOUR_TOKEN")

    # Move the input tensors to "cuda" (GPU) for faster computation, as GPUs are better at handling parallel tasks.
    input_text = "Databricks was founded in "
    input_ids = tokenizer(input_text, return_tensors="pt").to("cuda")

    # DBRX accepts a context length of up to 32768 tokens. Here, `max_new_tokens` specifies the maximum number of tokens to generate.
    outputs = model.generate(**input_ids, max_new_tokens=100)
    print(tokenizer.decode(outputs[0]))

Want to learn how to use Transformers and Hugging Face? Read our tutorial on using Transformers and Hugging Face.

Basic tasks with DBRX

DBRX can help you with basic content creation and prompt response tasks like any LLM. Here’s what you can do with DBRX:

- Text completion: DBRX can generate text-based responses based on your prompts.

- Language understanding: It can perform tasks like parsing natural language inputs, summarizing long documents, or translating between programming languages or writing styles.

- Query optimization: DBRX can also optimize database queries to minimize execution time and help data engineers focus on other critical tasks without compromising performance.

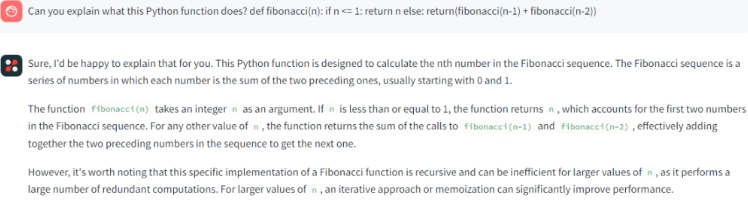

Coding tasks with DBRX

DBRX can also perform advanced coding-related tasks to help data practitioners or coders:

- Code and explanation: It can generate code for various programming tasks, explain existing functions, and suggest algorithms for specific problems, helping you understand and optimize code.

DBRX responding to simple commands - source

- Debugging with DBRX: It provides suggested code corrections to improve developer productivity and accelerate your debugging process.

- Vulnerability identification: It can also identify issues in code and propose solutions to mitigate them.

How to Fine-Tune DBRX

You can fine-tune DBRX with LLM Foundry, MosaicML's open-source library available on GitHub. However, fine-tuning requires training examples to be formatted as dictionaries:

    formatted_example = {'prompt': <prompt_text>, 'response': <response_text>}

Prompt: This is the starting question or instruction you give the model.

Response: This is the answer the model is trained to generate.
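As a concrete illustration of this format, the sketch below builds two made-up training examples and checks that each one has non-empty string values under both keys. The helper `is_valid_example` is hypothetical and not part of LLM Foundry. It is just a quick sanity check you might run before training.

```python
def is_valid_example(example):
    """Return True if `example` has non-empty string 'prompt' and 'response' values."""
    if not isinstance(example, dict):
        return False
    return all(
        isinstance(example.get(key), str) and example[key].strip()
        for key in ("prompt", "response")
    )

# Made-up examples in the prompt/response format.
examples = [
    {"prompt": "What does MoE stand for?", "response": "Mixture of experts."},
    {"prompt": "Name one open MoE model.", "response": "DBRX."},
]

all_valid = all(is_valid_example(ex) for ex in examples)
print(all_valid)
```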

You can use three different dataset sources to fine-tune any LLM:

- A dataset available on the HuggingFace Hub.

- A dataset that is locally stored on your device or machine.

- A dataset in the StreamingDataset .mds format.

1) Using Hugging Face Hub

If you want to fine-tune using a dataset from the HuggingFace Hub, and the dataset has a pre-defined preprocessing function or already follows the "prompt"/"response" format, you can simply point the dataloader to that dataset.

    train_loader:
        name: finetuning
        dataset:
            hf_name: tatsu-lab/alpaca
            split: train
            ...

If no preprocessing function is defined, use preprocessing_fn to specify a custom preprocessing function for the dataloader.

    train_loader:
        name: finetuning
        dataset:
            hf_name: mosaicml/doge-facts
            preprocessing_fn: my_data.formatting:dogefacts_prep_fn
            split: train
            ...
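A custom preprocessing function takes one raw example and returns a dictionary in the "prompt"/"response" format. The sketch below is a hypothetical example: the function name `qa_prep_fn` and the raw field names `question` and `answer` are assumptions for illustration, so adapt them to the columns your dataset actually has.

```python
def qa_prep_fn(inp: dict) -> dict:
    """Map one raw example to the prompt/response format.

    Assumes the raw example has 'question' and 'answer' fields (hypothetical).
    """
    return {
        "prompt": f"Question: {inp['question']}\nAnswer: ",
        "response": inp["answer"],
    }

# Made-up raw example to show the transformation.
raw = {"question": "How many experts does DBRX use per input?", "answer": "4"}
formatted = qa_prep_fn(raw)
print(formatted)
```

If this function lived in a module such as my_data/formatting.py, it would be referenced in the config using the same module.path:function_name pattern shown above.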

2) Using a local dataset

If you already have a finetuning dataset on your device, define local JSONL files in the YAML config yamls/finetune/1b_local_data_sft.yaml.

    train_loader:
        name: finetuning
        dataset:
            hf_name: json # assuming data files are json formatted
            hf_kwargs:
                data_dir: /path/to/data/dir/
            preprocessing_fn: my.import.path:my_preprocessing_fn
            split: train
            ...

Skip preprocessing_fn if your local data is already in "prompt"/"response" format.
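To prepare such a local dataset, you can write one JSON object per line. The sketch below writes two made-up examples to a file named train.jsonl in a temporary directory and reads them back. Naming the file after the split is an assumption about how the JSON loader resolves `split: train`, so check it against your setup.

```python
import json
import tempfile
from pathlib import Path

# Made-up examples already in the prompt/response format.
examples = [
    {"prompt": "What is DBRX?", "response": "An open LLM from Databricks."},
    {"prompt": "What architecture does it use?", "response": "Mixture of experts."},
]

# A temporary directory stands in for /path/to/data/dir/.
data_dir = Path(tempfile.mkdtemp())
train_file = data_dir / "train.jsonl"

# JSONL: one JSON object per line.
with train_file.open("w", encoding="utf-8") as f:
    for example in examples:
        f.write(json.dumps(example) + "\n")

# Read the file back to confirm it round-trips.
loaded = [
    json.loads(line)
    for line in train_file.read_text(encoding="utf-8").splitlines()
]
print(len(loaded), "examples written to", train_file)
```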

3) Using StreamingDataset

Convert your HuggingFace dataset to MDS format using the convert_finetuning_dataset.py script.

After converting your HuggingFace dataset to a streaming format, simply adjust your YAML configuration like this.

    train_loader:
        name: finetuning
        dataset:
            remote: s3://my-bucket/my-copy-doge-facts
            local: /tmp/mds-cache/
            split: train
            ...

If you want to see full parameter finetuning, see the YAML config at

Conclusion

Databricks DBRX uses multiple specialized expert networks to improve model speed and cost-effectiveness. This fine-grained approach allows it to outperform other open LLMs, such as LLaMA2-70B, Mixtral, and Grok-1, on the benchmarks covered in this article.


I'm a content strategist who loves simplifying complex topics. I’ve helped companies like Splunk, Hackernoon, and Tiiny Host create engaging and informative content for their audiences.