Course

High-dimensional data, in terms of number of features, is increasingly common these days in machine learning problems. To extract useful information from these high volumes of data, you have to use statistical techniques to reduce the noise or redundant data. This is because you often need not use every feature at your disposal to train a model. You can improve your model by feeding in only those features that are uncorrelated and non-redundant. This is where feature selection plays an important role. Not only it helps in training your model faster but also reduces the complexity of the model, makes it easier to interpret and improves the accuracy, precision or recall, whatever may the performance metric be.

In this tutorial, you'll tackle the following concepts:

-

First, you'll learn more about feature selection: you'll see when you can use it, and what types of methods you have available to select the most important features for your model!

-

Then, you'll get introduced to the Boruta algorithm.You'll see how you can use it to perform a top-down search for relevant features by comparing original attributes' importance with importance achievable at random, estimated using their permuted copies, and progressively elliminating irrelevant features.

-

You'll also take a brief look at the dataset on which you'll be performing the feature selection. You'll see how you can easily impute missing values with the help of the

Ameliapackage. -

Lastly, you'll learn more about the

Borutapackage, which you can use to run the algorithm.

Feature Selection

Generally, whenever you want to reduce the dimensionality of the data you come across methods like Principal Component Analysis, Singular Value decomposition etc. So it's natural to ask why you need other feature selection methods at all. The thing with these techniques is that they are unsupervised ways of feature selection: take, for example, PCA, which uses variance in data to find the components. These techniques don't take into account the information between feature values and the target class or values. Also, there are certain assumptions, such as normality, associated with such methods which require some kind of transformations before starting to apply them. These constraints doesn't apply to all kinds of data.

There are three types of feature selection methods in general:

-

Filter Methods : filter methods are generally used as a preprocessing step. The selection of features is independent of any machine learning algorithm. Instead the features are selected on the basis of their scores in various statistical tests for their correlation with the outcome variable. Some common filter methods are Correlation metrics (Pearson, Spearman, Distance), Chi-Squared test, Anova, Fisher's Score etc.

-

Wrapper Methods : in wrapper methods, you try to use a subset of features and train a model using them. Based on the inferences that you draw from the previous model, you decide to add or remove features from the subset. Forward Selection, Backward elimination are some of the examples for wrapper methods.

-

Embedded Methods : these are the algorithms that have their own built-in feature selection methods. LASSO regression is one such example.

In this tutorial you will use one of the wrapper methods which is readily available in R through a package called Boruta.

The Boruta Algorithm

The Boruta algorithm is a wrapper built around the random forest classification algorithm. It tries to capture all the important, interesting features you might have in your dataset with respect to an outcome variable.

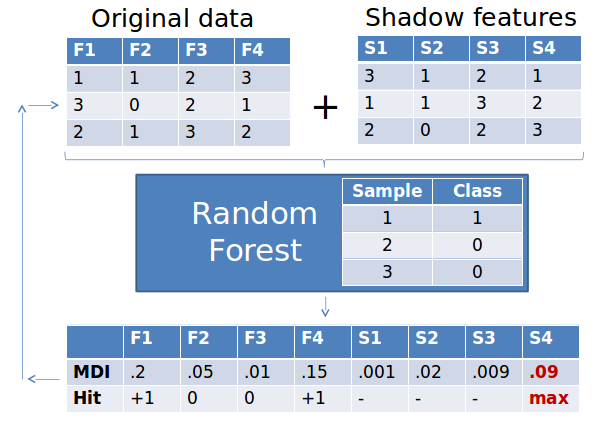

- First, it duplicates the dataset, and shuffle the values in each column. These values are called shadow features. * Then, it trains a classifier, such as a Random Forest Classifier, on the dataset. By doing this, you ensure that you can an idea of the importance -via the Mean Decrease Accuracy or Mean Decrease Impurity- for each of the features of your data set. The higher the score, the better or more important.

- Then, the algorithm checks for each of your real features if they have higher importance. That is, whether the feature has a higher Z-score than the maximum Z-score of its shadow features than the best of the shadow features. If they do, it records this in a vector. These are called a hits. Next,it will continue with another iteration. After a predefined set of iterations, you will end up with a table of these hits. Remember: a Z-score is the number of standard deviations from the mean a data point is, for more info click here.

- At every iteration, the algorithm compares the Z-scores of the shuffled copies of the features and the original features to see if the latter performed better than the former. If it does, the algorithm will mark the feature as important. In essence, the algorithm is trying to validate the importance of the feature by comparing with random shuffled copies, which increases the robustness. This is done by simply comparing the number of times a feature did better with the shadow features using a binomial distribution.

- If a feature hasn't been recorded as a hit in say 15 iterations, you reject it and also remove it from the original matrix. After a set number of iterations -or if all the features have been either confirmed or rejected- you stop.

Boruta Agorithm in R

Let's use the Boruta algorithm in one of the most commonly available datasets: the Bank Marketing data. This data represensts a direct marketing campaigns (phone calls) of a Portuguese banking institution. The classification goal is to predict if the client will subscribe a term deposit or not.

Tip: don't forget to check out detailed description of different features here.

read_file <- read.csv('./bank_bank.csv',header=TRUE,sep=';',stringsAsFactors = F) #read csv into a dataframe

str(read_file)

## 'data.frame': 4521 obs. of 17 variables:

## $ age : int 30 33 35 30 59 35 36 39 41 43 ...

## $ job : chr "unemployed" "services" "management" "management" ...

## $ marital : chr "married" "married" "single" "married" ...

## $ education: chr "primary" "secondary" "tertiary" "tertiary" ...

## $ default : chr "no" "no" "no" "no" ...

## $ balance : int 1787 4789 1350 1476 0 747 307 147 221 -88 ...

## $ housing : chr "no" "yes" "yes" "yes" ...

## $ loan : chr "no" "yes" "no" "yes" ...

## $ contact : chr "cellular" "cellular" "cellular" "unknown" ...

## $ day : int 19 11 16 3 5 23 14 6 14 17 ...

## $ month : chr "oct" "may" "apr" "jun" ...

## $ duration : int 79 220 185 199 226 141 341 151 57 313 ...

## $ campaign : int 1 1 1 4 1 2 1 2 2 1 ...

## $ pdays : int -1 339 330 -1 -1 176 330 -1 -1 147 ...

## $ previous : int 0 4 1 0 0 3 2 0 0 2 ...

## $ poutcome : chr "unknown" "failure" "failure" "unknown" ...

## $ y : chr "no" "no" "no" "no" ...Let's use the summary() function to summarize the common descriptive statistics of different features in your dataset.

summary(read_file)

## age job marital education

## Min. :19.00 Length:4521 Length:4521 Length:4521

## 1st Qu.:33.00 Class :character Class :character Class :character

## Median :39.00 Mode :character Mode :character Mode :character

## Mean :41.17

## 3rd Qu.:49.00

## Max. :87.00

## default balance housing loan

## Length:4521 Min. :-3313 Length:4521 Length:4521

## Class :character 1st Qu.: 69 Class :character Class :character

## Mode :character Median : 444 Mode :character Mode :character

## Mean : 1423

## 3rd Qu.: 1480

## Max. :71188

## contact day month duration

## Length:4521 Min. : 1.00 Length:4521 Min. : 4

## Class :character 1st Qu.: 9.00 Class :character 1st Qu.: 104

## Mode :character Median :16.00 Mode :character Median : 185

## Mean :15.92 Mean : 264

## 3rd Qu.:21.00 3rd Qu.: 329

## Max. :31.00 Max. :3025

## campaign pdays previous poutcome

## Min. : 1.000 Min. : -1.00 Min. : 0.0000 Length:4521

## 1st Qu.: 1.000 1st Qu.: -1.00 1st Qu.: 0.0000 Class :character

## Median : 2.000 Median : -1.00 Median : 0.0000 Mode :character

## Mean : 2.794 Mean : 39.77 Mean : 0.5426

## 3rd Qu.: 3.000 3rd Qu.: -1.00 3rd Qu.: 0.0000

## Max. :50.000 Max. :871.00 Max. :25.0000

## y

## Length:4521

## Class :character

## Mode :character

##

##

## The summary() function gives the measure of central tendency for continuous features such as mean, median, quantiles, etc. If you have any categorical features in your data set, you'll also get to see the Class and Mode of those features.

Now let's convert the categorical features into factor data type:

convert <- c(2:5, 7:9,11,16:17)

read_file[,convert] <- data.frame(apply(read_file[convert], 2, as.factor))

str(read_file)

## 'data.frame': 4521 obs. of 17 variables:

## $ age : int 30 33 35 30 59 35 36 39 41 43 ...

## $ job : Factor w/ 12 levels "admin.","blue-collar",..: 11 8 5 5 2 5 7 10 3 8 ...

## $ marital : Factor w/ 3 levels "divorced","married",..: 2 2 3 2 2 3 2 2 2 2 ...

## $ education: Factor w/ 4 levels "primary","secondary",..: 1 2 3 3 2 3 3 2 3 1 ...

## $ default : Factor w/ 2 levels "no","yes": 1 1 1 1 1 1 1 1 1 1 ...

## $ balance : int 1787 4789 1350 1476 0 747 307 147 221 -88 ...

## $ housing : Factor w/ 2 levels "no","yes": 1 2 2 2 2 1 2 2 2 2 ...

## $ loan : Factor w/ 2 levels "no","yes": 1 2 1 2 1 1 1 1 1 2 ...

## $ contact : Factor w/ 3 levels "cellular","telephone",..: 1 1 1 3 3 1 1 1 3 1 ...

## $ day : int 19 11 16 3 5 23 14 6 14 17 ...

## $ month : Factor w/ 12 levels "apr","aug","dec",..: 11 9 1 7 9 4 9 9 9 1 ...

## $ duration : int 79 220 185 199 226 141 341 151 57 313 ...

## $ campaign : int 1 1 1 4 1 2 1 2 2 1 ...

## $ pdays : int -1 339 330 -1 -1 176 330 -1 -1 147 ...

## $ previous : int 0 4 1 0 0 3 2 0 0 2 ...

## $ poutcome : Factor w/ 4 levels "failure","other",..: 4 1 1 4 4 1 2 4 4 1 ...

## $ y : Factor w/ 2 levels "no","yes": 1 1 1 1 1 1 1 1 1 1 ...Since the data points are shuffled in order to create shadow features and the Z-score is calculated for each of them, it's important to treat missing or blank values prior to using boruta package, otherwise it throws an error.

(Un)fortunately, this dataset doesn't have neither. However, for educational purposes, you'll introduce some NAs in the data.

Let's seed missing values in your data set using prodNA() function. You can access this function by installing missForest package.

Remember that you can use install.packages() to install missing packages if needed!

library(missForest)

# Generate 5% missing values at random

bank.mis <- prodNA(read_file, noNA = 0.05) You can again call summary() function on your new data frame to get the number of imputed NAs, but let's get a little bit more creative instead!

Let's visualize the missingness in the data using the following ggplot2 code:

library(reshape2)

library(ggplot2)

library(dplyr)

ggplot_missing <- function(x){

x %>%

is.na %>%

melt %>%

ggplot(data = .,

aes(x = Var2,

y = Var1)) +

geom_raster(aes(fill = value)) +

scale_fill_grey(name = "",

labels = c("Present","Missing")) +

theme_minimal() +

theme(axis.text.x = element_text(angle=45, vjust=0.5)) +

labs(x = "Variables in Dataset",

y = "Rows / observations")

}

ggplot_missing(bank.mis)The white lines in the plot show you visually that you have seeded missing values in every feature but you see how much pain went into writing that ggplot_missing function? Well, the Amelia package in R, which you will be using in the next section, provides a one line alternative to plot similar figure:

library(Amelia)

missmap(bank.mis)Try it out on your own!

Imputing Missing Values with Amelia

You can now impute the missing values in several ways like imputing with mean, media or mode (for categorical features) but let's use another powerful package for imputing missing values Amelia.

Amelia takes m bootstrap samples and applies the EMB (or expectation-maximization with bootstrapping) algorithm to each sample. The m estimates of mean and variances will be different. Finally, the first set of estimates are used to impute first set of missing values using regression, then second set of estimates are used for second set and so on. Multiple imputation helps to reduce bias and increase efficiency. Also, it is enabled with parallel imputation feature using multicore CPUs.

It has 3 important parameters:

m: the number of imputed datasets to create.idvars: keep all ID variables and other variables that you don't want to impute.noms: keep nominal variables here.

library(Amelia)

amelia_bank <- amelia(bank.mis, m=3, parallel = "multicore",noms=c('job','marital','education','default','housing','loan','contact','month','poutcome','y'))

## -- Imputation 1 --

##

## 1 2 3 4 5 6

##

## -- Imputation 2 --

##

## 1 2 3 4 5 6

##

## -- Imputation 3 --

##

## 1 2 3 4 5To access the imputed data frames, you can use the following subsetting:

amelia_bank$imputations[[1]]To export the imputed dataset to csv files use:

write.amelia(amelia_bank, file.stem = "imputed_bank_data_set")The Boruta R Package

Now let's use Boruta algorithm on one of the imputed datasets. You can make use of the Boruta package to do this:

library(Boruta)

set.seed(111)

boruta.bank_train <- Boruta(y~., data = amelia_bank$imputations[[1]], doTrace = 2)

print(boruta.bank_train)

## Boruta performed 99 iterations in 18.97234 mins.

## 10 attributes confirmed important: age, contact, day, duration,

## housing and 5 more;

## 3 attributes confirmed unimportant: education, job, marital;

## 3 tentative attributes left: balance, campaign, default;Boruta gives a call on the significance of features in a data set. Many of them are already classified as important and unimportant but you see that there are some who have been assigned in tentative category.

But what does this mean?

Tentative features have an importance that is so close to their best shadow features that Boruta is not able to make a decision with the desired confidence in the default number of Random Forest runs.

What do you then do about this?

You could consider increasing the maxRuns parameter if tentative features are left. However, note that you can also provide values of mtry and ntree parameters, which will be passed to randomForest() function. The first allows you to specify the number of variables that are randomly sampled as candidates at each split, while the latter is used to specify the number of trees you want to grow. With these arguments specified, the Random Forest classifier will achieve a convergence at minimal value of the out of bag error.

Remember that an out of bag error is an estimate to measure prediction error of the classifiers which uses bootstrap aggregation to sub sample data samples used for training. It is the mean prediction error on each training set sample X using only trees that did not have X in their bootstrap sample.

Alternatively, you can also set the doTrace argument to 1 or 2, which allows you to get a report of the progress of the process.

The boruta package also contains a TentativeRoughFix() function, which can be used to fill missing decisions by simple comparison of the median feature Z-score with the median Z-score of the most important shadow feature:

#take a call on tentative features

boruta.bank <- TentativeRoughFix(boruta.bank_train)

print(boruta.bank)

## Boruta performed 99 iterations in 18.97234 mins.

## Tentatives roughfixed over the last 99 iterations.

## 12 attributes confirmed important: age, campaign, contact, day,

## default and 7 more;

## 4 attributes confirmed unimportant: balance, education, job,

## marital;Boruta has now done its job: it has successfully classified each feature as important or unimportant.

You can now plot the boruta variable importance chart by calling plot(boruta.bank). However, the x axis labels will be horizontal. This won't be really neat.

That's why you will add the feature labels to the x axis vertically, just like in the following code chunk:

plot(boruta.bank, xlab = "", xaxt = "n")

lz<-lapply(1:ncol(boruta.bank$ImpHistory),function(i)

boruta.bank$ImpHistory[is.finite(boruta.bank$ImpHistory[,i]),i])

names(lz) <- colnames(boruta.bank$ImpHistory)

Labels <- sort(sapply(lz,median))

axis(side = 1,las=2,labels = names(Labels),

at = 1:ncol(boruta.bank$ImpHistory), cex.axis = 0.7)The y axis label Importance represents the Z score of every feature in the shuffled dataset.

The blue boxplots correspond to minimal, average and maximum Z score of a shadow feature, while the red and green boxplots represent Z scores of rejected and confirmed features, respectively. As you can see the red boxplots have lower Z score than that of maximum Z score of shadow feature which is precisely the reason they were put in unimportant category.

You can confirm the importance of the features by typing:

getSelectedAttributes(boruta.bank, withTentative = F)

## [1] "age" "default" "housing" "loan" "contact" "day"

## [7] "month" "duration" "campaign" "pdays" "previous" "poutcome"

bank_df <- attStats(boruta.bank)

print(bank_df)

## meanImp medianImp minImp maxImp normHits decision

## age 11.4236197 11.3760979 8.4250222 15.518420 1.00000000 Confirmed

## job 0.0741753 0.3002281 -1.7651336 1.566687 0.01010101 Rejected

## marital 1.8891283 2.0043568 -1.0276720 4.804499 0.22222222 Rejected

## education 1.5969540 1.6188117 -1.6836346 4.629572 0.28282828 Rejected

## default 2.3721979 2.3472820 -0.1434933 5.044653 0.50505051 Confirmed

## balance 2.3349682 2.3214378 -0.8098151 5.567993 0.51515152 Rejected

## housing 8.4147808 8.4384240 4.7392059 10.404609 1.00000000 Confirmed

## loan 4.1872186 4.2797591 2.0325838 6.263155 0.87878788 Confirmed

## contact 18.9482180 18.9757719 16.0937657 22.121461 1.00000000 Confirmed

## day 9.5645192 9.5828766 6.1842233 13.495442 1.00000000 Confirmed

## month 24.1475736 24.2067940 20.0621966 27.200679 1.00000000 Confirmed

## duration 71.5232213 71.1785055 64.3941499 78.249830 1.00000000 Confirmed

## campaign 2.6221456 2.6188180 -0.4144493 4.941482 0.65656566 Confirmed

## pdays 26.5650528 26.7123730 23.7902945 29.067476 1.00000000 Confirmed

## previous 20.9569022 20.9703991 18.7273357 23.117672 1.00000000 Confirmed

## poutcome 28.5166889 28.4885934 25.9855974 31.527154 1.00000000 ConfirmedYou can easily validate the result as the feature duration has been given the highest importance which is already mentioned in the data description (click here) if you read carefully!

Conclusion

Voila! You have successfully filtered out the most important features from your dataset just by typing a few lines of code. With this you have reduced the noise from your data which will be really beneficial for any classifier to assign a label to an observation. Training a model on these important features will definitely improve your model's performance which was the point of doing feature selection in the first place!

If you want to check out the resources that have been used to make this tutorial, check out the following:

- PACKAGE AMELIA : A Program for Missing Data; James Honaker, Gary King, and Matthew Blackwell

- Feature Selection with the Boruta Package; Miron B. Kursa, Witold R. Rudnicki

- RDocumentation

- UCI Machine Learning Repository