
The ability to efficiently integrate, process, and analyze data is important for organizations aiming to gain insights and maintain a competitive edge.

Two powerful tools, Azure Data Factory and Databricks, have emerged as leading solutions for managing data pipelines and performing advanced analytics in the Azure ecosystem. While these tools may look similar at first glance, they address different aspects of data engineering and analytics.

In this article, we will explore the capabilities of Azure Data Factory and Databricks, compare their features, and provide insights into when to use each tool.

What is Azure Data Factory?

Azure Data Factory (ADF) is a cloud-based ETL (Extract, Transform, Load) service that enables data integration, migration, and orchestration across various data stores. It is designed to facilitate the movement and transformation of data from disparate sources to centralized locations, where it can be analyzed and leveraged for business intelligence.

With ADF, you can create and manage data pipelines that automate the process of extracting data from multiple sources, transforming it into a usable format, and loading it into a destination for analysis.
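As a rough illustration of what such a pipeline looks like behind the visual designer, the sketch below builds an ADF-style pipeline definition with a single Copy activity as a Python dictionary and prints it as JSON. The pipeline, activity, and dataset names are hypothetical placeholders, and the source and sink types are only one possible pairing; a real definition depends on the datasets and linked services configured in your factory.

```python
import json


def build_copy_pipeline(name, source_dataset, sink_dataset):
    """Return an ADF-style pipeline definition containing one Copy activity."""
    return {
        "name": name,
        "properties": {
            "activities": [
                {
                    "name": "CopySalesData",  # hypothetical activity name
                    "type": "Copy",
                    # Datasets are referenced by name; they are defined separately in the factory.
                    "inputs": [{"referenceName": source_dataset, "type": "DatasetReference"}],
                    "outputs": [{"referenceName": sink_dataset, "type": "DatasetReference"}],
                    "typeProperties": {
                        "source": {"type": "DelimitedTextSource"},  # e.g. CSV files
                        "sink": {"type": "AzureSqlSink"},  # e.g. an Azure SQL table
                    },
                }
            ]
        },
    }


# All names below are placeholders chosen for this example.
pipeline = build_copy_pipeline("IngestSalesPipeline", "RawSalesCsv", "SalesSqlTable")
print(json.dumps(pipeline, indent=2))
```

In practice, the drag-and-drop designer generates a definition of this kind for you; seeing the structure is mainly useful when you keep pipelines in source control or deploy them programmatically.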

Features of Azure Data Factory

These are the most interesting features of ADF, in no particular order:

- Drag-and-drop interface: ADF offers an intuitive interface that allows you to create data pipelines without extensive coding. This user-friendly approach is ideal for those who may not have deep technical expertise but still need to manage complex data workflows.

- Integration with a wide range of data sources: ADF supports integration with both on-premises and cloud-based data sources, making it a versatile tool for teams with diverse data environments.

- Support for complex data transformations using Data Flow: ADF provides data transformation capabilities through its Data Flow feature, which enables you to perform complex transformations directly within the pipeline.

- Scheduling and monitoring: ADF includes built-in scheduling and monitoring tools, allowing you to automate the execution of data pipelines and monitor their performance.

- Native integration with other Azure services: ADF seamlessly integrates with other Azure services, such as Azure Synapse Analytics and Azure Blob Storage, providing a comprehensive data management solution within the Azure ecosystem.

As you can see, ADF offers everything you need to create data pipelines within the Azure ecosystem!
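To make the scheduling feature more concrete, here is a minimal sketch of a schedule trigger definition that would run a pipeline once a day. It is built as a plain Python dictionary for readability; the trigger name, pipeline name, and start time are assumptions made up for this example.

```python
import json
from datetime import datetime, timezone


def build_daily_trigger(trigger_name, pipeline_name, start):
    """Return an ADF-style schedule trigger that runs a pipeline once per day."""
    return {
        "name": trigger_name,
        "properties": {
            "type": "ScheduleTrigger",
            "typeProperties": {
                "recurrence": {
                    "frequency": "Day",
                    "interval": 1,  # every 1 day
                    "startTime": start.strftime("%Y-%m-%dT%H:%M:%SZ"),
                    "timeZone": "UTC",
                }
            },
            # The trigger points at an existing pipeline by name.
            "pipelines": [
                {"pipelineReference": {"referenceName": pipeline_name, "type": "PipelineReference"}}
            ],
        },
    }


# Hypothetical names and start time, used only for illustration.
trigger = build_daily_trigger(
    "DailyAt2am",
    "IngestSalesPipeline",
    datetime(2025, 1, 1, 2, 0, tzinfo=timezone.utc),
)
print(json.dumps(trigger, indent=2))
```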

Azure Data Factory excels at connecting to and integrating diverse data sources. Image source: Microsoft.

The Introduction to Azure course is an excellent resource for those interested in getting started with Azure. The Azure Management and Governance course is ideal for those more experienced.

What is Databricks?

Databricks is an analytics platform that provides a collaborative environment for big data processing and machine learning. Based on Apache Spark, Databricks is designed to handle large-scale data processing tasks, enabling you to perform complex analytics and develop machine learning models.

Databricks offers a unified platform on which data engineers, data scientists, and analysts can collaborate to process, analyze, and visualize data in a streamlined workflow.

Features of Databricks

Here are some of the features that make Databricks such a popular tool:

- Unified analytics platform: Databricks combines big data processing and machine learning into a single platform, enabling you to perform end-to-end analytics in one environment.

- Collaborative notebooks: Databricks supports collaborative notebooks in languages like Python, Scala, and SQL, allowing teams to collaborate in real time on data processing and analysis tasks.

- High-performance data processing with Apache Spark: Databricks leverages the power of Apache Spark, a distributed data processing engine, to handle large datasets and perform complex transformations efficiently.

- Integration with data lakes and data warehouses: Databricks integrates with data lakes and data warehouses and uses Delta Lake as a storage layer on top of the data lake, enabling efficient data storage and retrieval for large-scale analytics.

- Advanced analytics and AI/ML capabilities: Databricks provides advanced analytics and machine learning tools, making it a powerful platform for developing and deploying AI models at scale.

- Scalability with Azure Databricks clusters: Databricks allows users to quickly scale their data processing capabilities by leveraging Azure Databricks clusters, ensuring the platform can handle growing data volumes and computational demands.

Databricks Data Lakehouse architecture elements. Image source: Databricks

There you have it! If Databricks got your attention for its extensive capabilities, you can start with the Introduction to Databricks course.

Azure Data Factory vs. Databricks: Main Differences

At this point, you may already see how ADF and Databricks differ. Now, let’s go deeper and examine these differences across several categories.

Purpose and use cases

Azure Data Factory is primarily designed for data integration and orchestration. It excels at moving data between various sources, transforming it, and loading it into a centralized location for further analysis. ADF is ideal for scenarios where you need to automate and manage data workflows across multiple environments.

On the other hand, Databricks is focused on data processing, analytics, and machine learning. It is the go-to platform for companies looking to perform large-scale data analysis, develop machine learning models, and collaborate on data science projects. Databricks is particularly well-suited for big data environments and AI-driven applications.

Data transformation capabilities

Azure Data Factory provides data transformation capabilities through its Data Flow feature, which allows users to perform various transformations directly within the pipeline. While powerful, these transformations are generally more suited to ETL processes and may not be as extensive or flexible as those offered by Databricks.

Databricks, with its foundation in Apache Spark, offers advanced data transformation capabilities. Users can leverage Spark's full power to perform complex transformations, aggregations, and data processing tasks, making it the preferred choice for scenarios that require heavy data manipulation and computation.

Integration with other Azure services

Both ADF and Databricks integrate with other Azure services but do so with different focuses. ADF is designed for ETL and orchestration, making it the ideal tool for managing data workflows that involve multiple Azure services like Azure Synapse Analytics and Azure Blob Storage.

Databricks, on the other hand, is more focused on advanced analytics and AI, using Delta Lake for data storage and integrating with services like Azure Machine Learning for model deployment.


Ease of use

Azure Data Factory’s drag-and-drop interface makes it relatively easy to use, even for those with limited technical expertise. Its focus on low-code/no-code pipeline development simplifies creating and managing data workflows.

While powerful, Databricks requires a higher level of technical proficiency. Its reliance on coding (in languages like Python, Scala, and SQL) and complex configuration options make it more suitable for data engineers and scientists who are comfortable working with code and big data frameworks.

Scalability and performance

ADF and Databricks are highly scalable but excel in different areas. ADF is designed to handle large-scale data integration and migration tasks, making it ideal for orchestrating complex ETL workflows.

Databricks, with its foundation in Apache Spark, distributes work across a cluster to deliver high performance for big data processing and analytics, making it the preferred choice for scenarios that require high-performance computing and scalability.

Cost considerations

When considering the cost of using Azure Data Factory and Databricks, it’s essential to understand their pricing models and how they might impact your budget:

- ADF’s pricing is based on the number of pipeline activity runs, the time spent on data movement, and the compute used by data flows. Costs can escalate with more complex pipelines and larger data volumes, but ADF is generally cost-effective for straightforward ETL tasks.

- Databricks pricing is based on the computational resources used, typically measured in Databricks Units (DBUs) per hour. Costs can rise significantly for large-scale data processing and machine learning workloads, especially if high-performance clusters are required.

When evaluating costs, consider your organization’s specific needs, such as the volume of data, complexity of transformations, and frequency of pipeline execution.

In some cases, using ADF for orchestration and Databricks for processing can provide a balanced approach, leveraging the strengths of both tools while effectively managing costs.
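The two pricing models can be compared with some simple arithmetic. The sketch below is a back-of-the-envelope estimator, not a pricing calculator: every price and workload figure in it is a made-up placeholder, and it deliberately ignores other charges that may apply. Check the current Azure and Databricks pricing pages for real rates.

```python
def estimate_adf_cost(activity_runs, data_movement_hours,
                      price_per_1000_runs, price_per_movement_hour):
    """Rough ADF estimate: orchestration (activity runs) plus data movement time."""
    orchestration = (activity_runs / 1000) * price_per_1000_runs
    movement = data_movement_hours * price_per_movement_hour
    return orchestration + movement


def estimate_databricks_cost(dbus_per_hour, cluster_hours, price_per_dbu):
    """Rough Databricks estimate: DBUs consumed per hour times hours times DBU price."""
    return dbus_per_hour * cluster_hours * price_per_dbu


# Placeholder workload and prices, chosen only to show the calculation.
adf_monthly = estimate_adf_cost(
    activity_runs=30_000,
    data_movement_hours=50,
    price_per_1000_runs=1.0,
    price_per_movement_hour=0.25,
)
databricks_monthly = estimate_databricks_cost(
    dbus_per_hour=8,
    cluster_hours=60,
    price_per_dbu=0.5,
)

print(f"ADF estimate:        {adf_monthly:.2f}")         # 42.50
print(f"Databricks estimate: {databricks_monthly:.2f}")  # 240.00
```

Plugging in your own run counts, cluster sizes, and negotiated rates gives a first-order view of which part of a combined pipeline drives the bill.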

Azure Data Factory vs Databricks: A Summary

Below is a table comparing Azure Data Factory and Databricks across a wide range of aspects:

| Category | Azure Data Factory (ADF) | Databricks |
| --- | --- | --- |
| Overview | A cloud-based data integration service for orchestrating and automating data movement and transformation. | A unified analytics platform focused on big data processing and machine learning. |
| Primary Use Case | Data integration, ETL/ELT pipelines, data orchestration, and workflow automation. | Big data analytics, real-time data processing, and machine learning. |
| Data Transformation | Primarily used for orchestrating data transformations, using built-in or external compute resources like Databricks or Synapse Spark pools. | Advanced, large-scale data transformation with Apache Spark and Delta Lake for both batch and real-time processing. |
| ETL/ELT | Specialized in ETL/ELT orchestration, connecting multiple data sources and destinations, with built-in activities like copy data, data flow, and Azure services. | Powerful ETL capabilities, especially for complex big data transformations, leveraging Apache Spark for in-memory processing. |
| Compute Engine | Does not have a native compute engine; relies on external compute options like Azure Databricks, Azure Synapse, or Azure HDInsight for data transformation. | Apache Spark-based compute engine for real-time and batch processing. |
| Data Movement | Offers a wide range of built-in connectors (e.g., for databases, cloud storage, SaaS platforms), making it suitable for orchestrating large-scale data movement across different environments. | Typically integrates with storage solutions like Azure Data Lake, S3, and Delta Lake, but data movement is not the primary focus. |
| Data Integration | Strong data integration capabilities with support for over 90 connectors, including Azure services, on-prem databases, and third-party systems. | Data integration mainly focused on big data storage and processing platforms (Azure Data Lake, Delta Lake). Less diverse in connector options. |
| Data Processing | Leverages data flows for transforming data at scale using visual, low-code mapping. For more advanced transformations, it uses Databricks or Azure Synapse. | Advanced data processing using Spark; optimized for real-time and large-scale batch workloads. |
| Collaboration | Pipeline collaboration through Git integration (Azure DevOps and GitHub). | Advanced collaboration features with Databricks Workspaces and integrated version control with GitHub or Databricks Repos. |
| Developer Experience | Drag-and-drop UI for building ETL workflows; easy for non-technical users to create data pipelines. | Advanced developer experience, offering a notebook environment and support for code-based transformations (Python, Scala, R, SQL). |
| Scheduling & Orchestration | Excellent scheduling and orchestration capabilities, with triggers and pipeline execution control. | Limited orchestration capabilities. Databricks can be orchestrated externally using ADF or other tools. |
| Data Governance | Integration with Azure Purview for data governance, lineage tracking, and metadata management. | Governance is typically handled externally, using tools like Azure Purview or other third-party solutions. |
| Monitoring & Logging | Built-in monitoring and logging via Azure Monitor, Alerts, and ADF's monitoring dashboard. | Extensive logging and monitoring capabilities through Databricks REST API, integration with Azure Monitor, and custom dashboards. |
| Scalability | Scales easily with on-demand data integration and data movement. Uses external compute services for scaling data processing. | Highly scalable, especially for large data workloads and machine learning pipelines, with automatic cluster scaling. |
| Cost Model | Pay-per-use based on data movement, orchestration, and the external compute services (Databricks, Synapse, etc.) utilized. | Pay-as-you-go for storage and compute. Clusters can be auto-scaled for cost optimization. |
| Machine Learning | No built-in machine learning capabilities. Machine learning can be integrated by orchestrating services like Azure ML or Databricks. | Built-in machine learning libraries like MLlib and integrations with MLflow for model tracking and management. |
| Security | Role-based access control (RBAC), integration with Azure Active Directory, built-in data encryption, and managed identities. | RBAC, data encryption, integration with Azure Active Directory, and custom security models. |
| BI Integration | Can orchestrate data pipelines and feed data to BI tools like Power BI, Azure Synapse Analytics, and other Azure services. | Supports integration with BI tools like Power BI, Tableau, and others for direct data consumption. |
| Real-time Data Processing | Can orchestrate real-time data ingestion with triggers, and use real-time compute services like Stream Analytics or Databricks. | Optimized for real-time data processing using Spark Streaming. |
| Ease of Use | User-friendly with a low-code interface, making it easy for non-technical users to build data pipelines. | Requires more technical knowledge, as it's focused on big data engineers, data scientists, and developers. |
| Multi-cloud Support | Primarily focused on Azure, but can connect to some other cloud services using connectors. | Supports multi-cloud environments, including Azure, AWS, and GCP. |
| Deployment | Fully managed service with automated updates and scaling of pipelines. | Managed service, but more control is given to users over cluster configurations and scaling. |
| Community & Ecosystem | Extensive Azure community, with strong support from Microsoft and integration into the Azure ecosystem. | Large community, with significant contributions from Databricks and the open-source Apache Spark community. |
| Compliance & Certifications | Compliant with various industry standards, including GDPR, HIPAA, and ISO certifications. | Also meets various industry standards and certifications, including GDPR, HIPAA, and SOC compliance. |

When to Use Azure Data Factory

Azure Data Factory is the preferred choice in scenarios where data integration and migration are the primary concerns. More specifically, it is ideal for:

- Data integration and migration: When organizations need to move data between various sources, including on-premises and cloud-based environments, ADF provides the tools to do so efficiently.

- Orchestrating data pipelines: ADF excels at managing and automating data workflows across multiple data sources, making it ideal for organizations with complex data environments.

- Simple to moderate data transformations: ADF's Data Flow feature provides sufficient capabilities for ETL processes that involve basic to moderately complex data transformations.

- ETL workflows that require scheduling and monitoring: ADF’s built-in scheduling and monitoring tools make it easy to automate and track the performance of ETL workflows.

When to Use Databricks

Databricks is the go-to platform for scenarios that require big data processing, analytics, and machine learning. It is best suited for:

- Big data processing and analytics: Databricks excels at processing large datasets, making it ideal for organizations that analyze vast amounts of data.

- Machine learning and AI model development: Databricks provides advanced tools for developing and deploying machine learning models, making it a powerful platform for AI-driven applications.

- Collaborative data science environments: Databricks’ collaborative notebooks enable data scientists and analysts to collaborate in real time, facilitating team-based data science projects.

- Complex data transformations using Apache Spark: When heavy data manipulation and computation are required, Databricks’ Apache Spark-based transformations offer the power and flexibility to handle complex tasks.

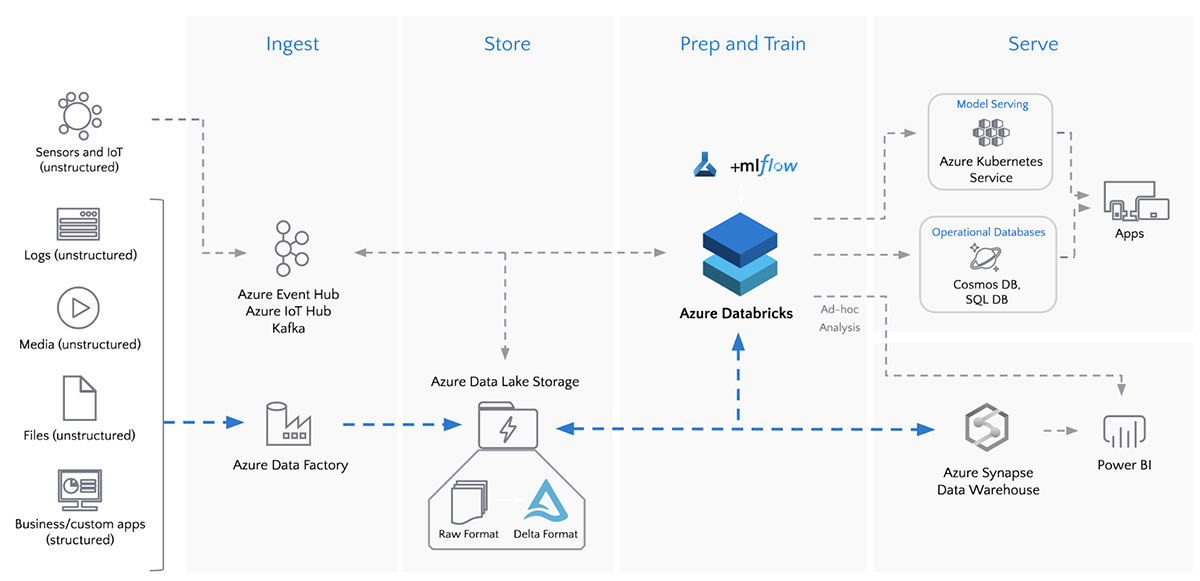

Integrating Azure Data Factory and Databricks

In many cases, Azure Data Factory and Databricks can be used together to create a comprehensive data pipeline.

ADF can orchestrate the data pipeline, managing the extraction, transformation, and loading of data from various sources. Databricks can then perform the more complex data processing and analytics tasks, leveraging its advanced capabilities in big data processing and machine learning.

For example, a typical end-to-end data pipeline might involve ADF extracting data from multiple sources and loading it into a data lake. Databricks could then process the data, perform advanced transformations, and apply machine learning models before the results are loaded into a data warehouse for further analysis.
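The end-to-end flow described above can be sketched as an ADF-style pipeline definition with two chained activities: a Copy activity that lands raw data in the lake, followed by a Databricks notebook activity that runs only if the copy succeeds. This is a simplified illustration expressed as a Python dictionary; the activity names, dataset names, notebook path, linked service name, and the `runDate` parameter are all hypothetical.

```python
import json


def build_adf_databricks_pipeline(notebook_path, databricks_linked_service):
    """Return an ADF-style pipeline: copy raw data, then run a Databricks notebook."""
    copy_step = {
        "name": "CopyToDataLake",
        "type": "Copy",
        "inputs": [{"referenceName": "SourceCsvFiles", "type": "DatasetReference"}],
        "outputs": [{"referenceName": "RawLakeParquet", "type": "DatasetReference"}],
        "typeProperties": {
            "source": {"type": "DelimitedTextSource"},
            "sink": {"type": "ParquetSink"},
        },
    }
    notebook_step = {
        "name": "TransformWithDatabricks",
        "type": "DatabricksNotebook",
        # Run the notebook only after the copy step has succeeded.
        "dependsOn": [
            {"activity": copy_step["name"], "dependencyConditions": ["Succeeded"]}
        ],
        "linkedServiceName": {
            "referenceName": databricks_linked_service,
            "type": "LinkedServiceReference",
        },
        "typeProperties": {
            "notebookPath": notebook_path,
            # Pass a pipeline parameter through to the notebook.
            "baseParameters": {"run_date": "@pipeline().parameters.runDate"},
        },
    }
    return {
        "name": "IngestAndTransform",  # hypothetical pipeline name
        "properties": {
            "parameters": {"runDate": {"type": "String"}},
            "activities": [copy_step, notebook_step],
        },
    }


# The notebook path and linked service name are placeholders.
pipeline = build_adf_databricks_pipeline("/Shared/transform_sales", "AzureDatabricksLS")
print(json.dumps(pipeline, indent=2))
```

Keeping the heavy transformation logic in the notebook and only the sequencing in ADF mirrors the division of labor discussed throughout this article.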

Batch ETL with Azure Data Factory and Azure Databricks. Image source: Databricks

Conclusion

Azure Data Factory and Databricks are powerful tools within the Azure ecosystem, each with its strengths and ideal use cases. In many cases, combining ADF and Databricks can provide a comprehensive solution that leverages the best of both worlds!

For those looking to dive deeper into these platforms, consider exploring the following resources:

These resources provide a solid foundation for understanding Azure Data Factory and Databricks and how they can be used to build powerful data pipelines and analytics solutions.


FAQs

What are the primary differences between Azure Data Factory and Databricks?

Azure Data Factory is primarily used for data integration, migration, and orchestration, while Databricks is designed for big data processing, advanced analytics, and machine learning.

Can Azure Data Factory and Databricks be used together?

Yes, ADF can be used to orchestrate data pipelines that involve Databricks for complex data processing tasks, creating a comprehensive data management solution.

Which tool is better for data transformation: Azure Data Factory or Databricks?

Databricks offers more advanced data transformation capabilities using Apache Spark, making it better suited for complex transformations. ADF is ideal for more straightforward ETL tasks.

How does the cost of using Azure Data Factory compare to Databricks?

ADF is generally more cost-effective for straightforward ETL tasks, while Databricks can become more expensive due to its computational resource requirements, especially for large-scale analytics and machine learning.

Is Azure Data Factory suitable for big data processing?

While ADF can handle large data volumes, it focuses more on data integration and orchestration. For high-performance big data processing, Databricks is the better choice.

Lead BI Consultant - Power BI Certified | Azure Certified | ex-Microsoft | ex-Tableau | ex-Salesforce - Author