Course

This guide is for anyone who works with data analysts, engineers, scientists, or decision-makers and wants to understand what good data quality looks like and how to achieve it. We’ll break down the key dimensions of data quality, explore how to assess and manage them, and cover practical steps to improve your datasets over time.

If you’re new to the topic, consider starting with our Introduction to Data Quality course, which provides an overview of the fundamentals and everyday challenges.

By the end, you’ll be able to identify data issues early, understand how to resolve them, and establish a foundation for more accurate, consistent, and usable data across your projects.

What Is Data Quality?

Before fixing bad data, you need to know what good data looks like.

Data quality is all about whether your data is fit for its intended use. Good data mirrors real-world values, helping you make confident decisions whether you're analyzing trends, running operations, or building systems.

This definition isn’t one-size-fits-all. A marketing team may care most about having updated emails, while an engineer might focus on schema consistency. So, data quality has both universal standards and context-specific parts.

To bring consistency into how teams evaluate data, most frameworks define a set of data quality dimensions, like accuracy, completeness, validity, timeliness, uniqueness, and consistency. These help teams speak the same language when assessing and improving their data.

Why Is Data Quality Important?

Data touches almost every part of an organization, from customer insights to compliance. When that data is flawed, things break. Decisions go sideways. Teams waste time.

And in regulated industries, the risks are even higher. Let’s say a retailer sends out a promo campaign based on an outdated contact list. Not only will they lose engagement, but they’ll also likely waste ad spend. Or picture a hospital dealing with duplicate or inconsistent patient records.

Bad data can lead to compliance issues, lost revenue, and poor customer experiences. According to Gartner, bad data costs businesses millions each year in inefficiencies alone. On the flip side, reliable data enables faster decisions, cleaner automation, and better collaboration. It’s not a nice-to-have—it’s a core part of running a functional business.

Core Dimensions of Data Quality

Knowing what to fix starts with knowing what to measure. I started to mention these ideas earlier, when explaning what data quality is, but here I’ll go into more detail. The following dimensions provide structure to the process and demonstrate the usability and trustworthiness of your data in the real world.

1. Accuracy and completeness

Accuracy means your data reflects the truth. If a customer’s address is wrong, even perfect logistics won't help. Completeness refers to having all the necessary info. An empty “product category” field might mess up any or everything like filtering, analytics, or compliance reporting. You’ll often need a mix of validation checks, feedback loops, and audits to maintain both accuracy and completeness. One e-commerce company cut fulfillment errors by flagging incomplete order forms before submission.

2. Consistency and timeliness

Consistency refers to your data being in agreement across systems. If a customer is marked as “active” in the CRM but “inactive” in billing, you're likely to encounter some kind of confusion or (worse) possibly billing errors. Timeliness refers to the freshness of the data, so to speak. A weekly-updated sales dashboard can’t help you respond to a drop that happened yesterday. That’s where real-time ETL, scheduled jobs, and/or timestamp monitoring come in.

3. Validity and uniqueness

Validity checks that the data follows expected formats and logic. A phone number missing a country code might pass technically, but still be unusable. It depends. Uniqueness is about avoiding duplicates. Duplicate records can disrupt reporting and lead to frustrating user experiences, such as receiving the same email twice. I think validation rules and deduplication routines go a long way in this regard. One SaaS company improved onboarding accuracy by 40% after adding format checks and fuzzy duplicate detection.

4. Integrity and usefulness

Integrity ensures data stays consistent and connected as it moves between systems. It’s about preserving relationships and avoiding silent data corruption. Usefulness is simpler: Does this data help someone do their job? If you collect every pageview but can’t tie it to users, I think you’ve got noise, not insight. Governance frameworks help manage both. They ensure that data flows cleanly and remains meaningful to the business.

Benefits of Good Data Quality

When data is clean, accurate, and timely, the entire organization feels the difference:

1. Better decisions

Accurate data gives teams confidence. Finance can trust forecasts. Marketing can trust engagement rates. The product can act on user trends. For example, a hospital with clean data can track care gaps more easily and improve treatment plans.

2. Operational efficiency

Bad data, on the other hand, slows everything down. You or someone else wastes hours debugging reports, reconciling systems, or fixing bad imports. Clean data means fewer surprises and less time spent firefighting. One logistics company reduced support tickets by 23% just by cleaning up product metadata.

3. Compliance and risk management

Regulations like GDPR and HIPAA require you to manage your data carefully. That means knowing where it lives, who owns it, and whether it’s correct. Clean data also makes audits smoother and reduces the chance of mistakes.

4. Better customer experiences

When your systems agree, customers don’t have to repeat themselves. No duplicate emails. No broken invoices. No mismatched profiles across touchpoints. A unified view helps teams deliver faster service and more relevant recommendations.

Common Data Quality Challenges and Issues

Below are some of the most data quality common hurdles that I could think of and their corresponding solutions.

1. Incomplete and inaccurate data

Sloppy inputs, outdated sources, or manual errors create holes in your datasets. These errors cascade into reporting and decision-making. Fixes include better input validation, upstream cleanup, and alerts for missing or suspicious data.

2. Duplication and inconsistency

Multiple records for the same customer or mismatched formats can kill trust in your dashboards. Matching and merging logic (here we hope it’s alsobacked by clear formatting rules) helps resolve these sorts of issues.

3. Security, privacy, and freshness

Old or exposed data creates compliance risks. Regulations like GDPR, which I mentioned earlier, and CCPA. which I’m mentioning now, penalize poor data handling. Keeping data fresh and secure means balancing retention policies, masking techniques, and fast updates.

4. Integration and data silos

When departments use separate tools, data becomes fragmented with no single source of truth. Integration—via APIs, warehouses, or event-driven pipelines—brings your data together, reducing duplication.

5. AI, dark data, and emerging challenges

Log files, AI outputs, and unstructured sources generate tons and tons of messy data. This data often hides value, or hides errors. Filtering, enrichment, and tagging pipelines help make this data usable while minimizing the introduction of noise.

Data Quality Management: Processes and Best Practices

The next step and the next thing I’ll tak about is putting effective management systems in place.

Assessment and strategy

Start with a health check. Profile your data, calculate basic metrics (like, for example, missing values, duplicates), and benchmark against the relevant standards in your field. Then, build a strategy that fits. Customer-facing apps might prioritize freshness. Financial systems focus on accuracy.

Cleansing and monitoring

Cleansing means fixing what’s already wrong—missing fields, broken links - you name it. SQL, Python, and Excel are still the workhorses here, along with tools like OpenRefine or Power Query. Monitoring prevents new issues from arising. Add validation rules, scheduled checks, and alerting tools to catch issues on the early side.

Best practices

Automate what you can. Use scheduled jobs for cleanup, write tests for key metrics, and set up pipeline alerts. And assign ownership because someone needs to care when things break. That’s where data stewards come in. Tie data quality goals to actual outcomes and do root cause analysis when issues happen.

Data Quality Tools and Technologies

Managing data quality at scale requires the right tools. Whether you're cleaning millions of records or monitoring real-time data pipelines, having the right tech stack really does make a difference.

1. Evaluation criteria

Scalability, flexibility, and integration are non-negotiable. Look for tools that support your data volume, handle real-time checks, and plug into your stack—whether that’s cloud, hybrid, or on-prem. Bonus points if the tool includes machine learning, which everyone is talking about and for good reason, or rule-building features to automate common fixes.

2. Leading solutions

Well-known tools include Talend, Informatica, Ataccama, and IBM InfoSphere. These come with workflows for profiling, cleansing, and monitoring. Cloud-native stacks like AWS Glue and Azure Purview also offer embedded DQ features.

3. Data quality as a service (DQaaS)

If you don’t want to manage your own infrastructure, DQaaS might be a better fit. These tools expose validation, profiling, or monitoring as APIs, which I imagine is great for adding checks to customer forms or ingestion pipelines without hosting anything yourself.

Data Quality in Data Governance

Data quality doesn't exist in isolation, it's deeply tied to governance. A strong governance framework sets the foundation for well-managed data across the organization.

Governance integration

Policies and standards define what quality looks like, who’s responsible, and how compliance is enforced. Without this structure, expectations vary and issues multiply. Strong governance means fewer surprises and clearer handoffs between teams.

Master data management (MDM)

MDM creates a single, accurate source for core entities, such as customers or products. Done right, it reduces duplication and improves consistency across systems. Retailers use MDM to merge in-store and online records. Manufacturers sync product catalogs across regions. MDM is one of the most direct ways to improve data quality at scale.

Data Quality Frameworks and Standards

Frameworks and standards help organizations structure their data quality efforts in a consistent, measurable way. They offer proven methodologies to assess current practices and identify areas for growth.

1. ISO 8000

ISO 8000 provides a clear definition of data quality and outlines the methods for measuring it. It covers completeness, accuracy, and standardization of format. It’s especially useful for teams working across countries or departments with different definitions.

2. TDQM and DAMA DMBOK

TDQM focuses on continuous improvement. You measure, analyze, improve, and repeat the process. Simple, but effective, especially for teams building iterative processes. DAMA DMBOK expands the picture. It encompasses not only quality but also data architecture, governance, integration, and security. Think of it as a full playbook for enterprise-level data operations.

3. Maturity models

Maturity models show where you are and where to aim. They move from ad hoc (manual cleanup, reactive fixes) to optimized (automated checks, integrated governance). Understanding your current level helps prioritize next steps, whether that’s introducing validation checks or assigning data owners.

Measuring ROI on Data Quality

To justify investments in data quality, it’s good to connect improvement efforts to real business outcomes. This section covers practical methods for measuring return on investment (ROI), including cost savings and performance metrics.

Cost-benefit analysis

Start by listing the cost of bad data: manual fixes, failed reports, lost customers. Then list the gains from clean data: better conversions, fewer errors, faster decisions.

Use a basic ROI formula:

ROI = (Net Benefits – Total Costs) / Total CostsKeep it practical: fewer support tickets, reduced time spent debugging, faster compliance audits.

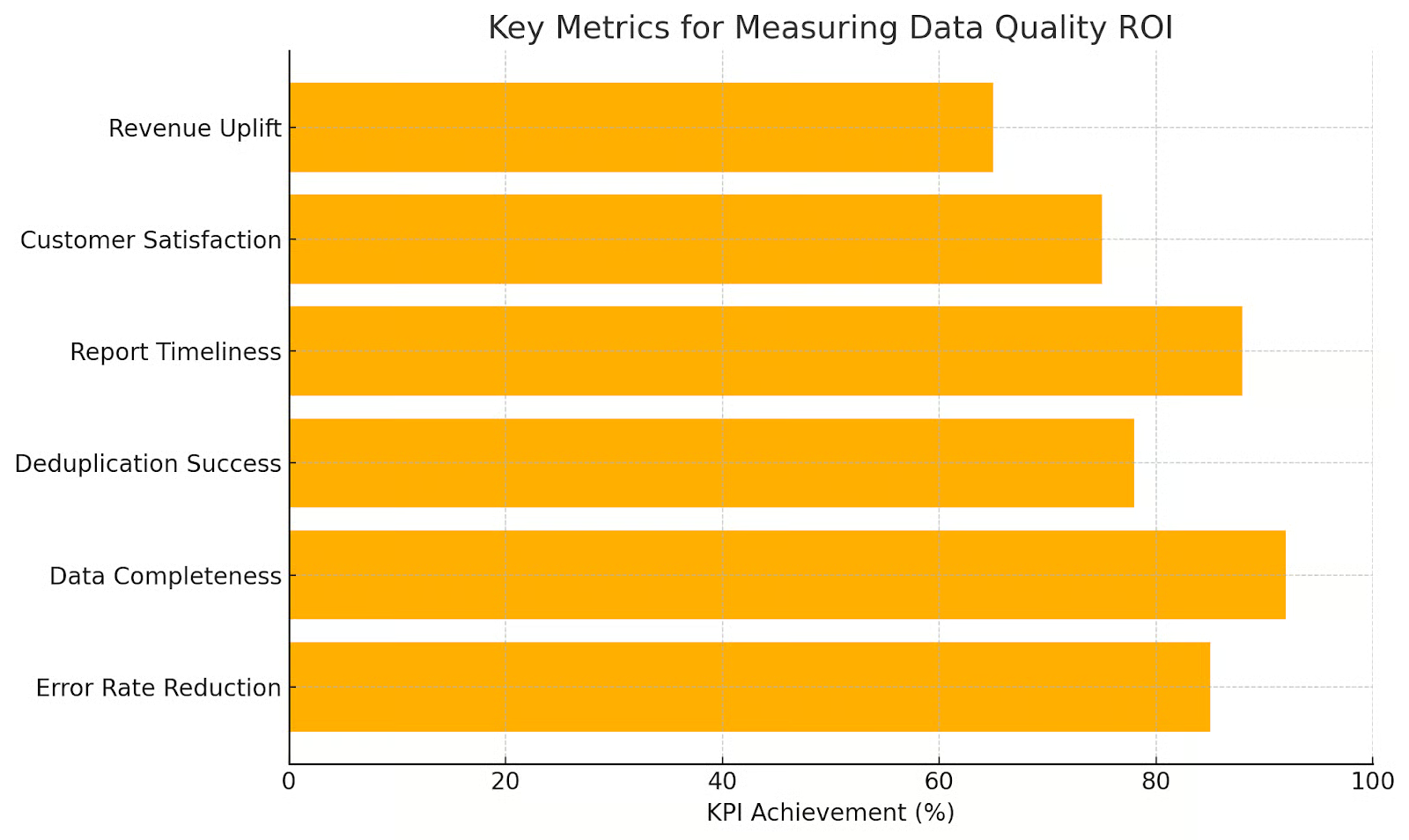

Metrics and KPIs

Track metrics that map to outcomes. Examples:

- Error rate: How many fields are missing or invalid?

- Time to fix: How fast are issues being resolved?

- Forecast accuracy: Are Sales Models Improving?

Cultivating a Data Quality Culture

The last thing I’ll mention: Creating high-quality data systems isn’t just about tools and processes, like a lot of what we’ve talked about so far; It also requires people who understand and value the role of data. Leadership, training, and ongoing commitment contribute to a data quality culture.

Leadership and accountability

Leadership sets priorities. If execs don’t care about data quality, no one else will. That’s guaranteed. Assign ownership, define responsibilities, and tie quality metrics to actual job performance.

Training and tools

Most people want to do the right thing. They just need guidance to help them do so. Offer training. Make it easy to flag insufficient data. Provide tools for self-service cleanup. Tools like Great Expectations or Soda let non-technical teams validate and monitor data without writing code.

Sustaining culture

Culture fades without reinforcement. Run audits. Share wins and misses. Celebrate improvements. Tie efforts back to business goals, such as expanding into new markets or enhancing customer retention. Make data quality part of how work gets done, not a one-off project.

Conclusion

I hope by now you appreciate two very simple but important words: Data quality. Data quality affects every part of the business, and having data quality means lean, consistent, reliable data that builds trust, improves decision-making, and (what businesses really most care about) it supports growth.

If you are in a leadership position and you are reading up on data quality, know that DataCamp for Business has enterprise solutions to help. We can help you build solutions to all kinds of challenges, and work to upskill your entire team at once, plus we create custom learning tracks with unique reporting, so connect with us today.

Tech writer specializing in AI, ML, and data science, making complex ideas clear and accessible.