Track

dbt is growing in popularity in the analytics field for its data transformation and testing functions. In this article, we’ll share what dbt is and how you can conduct some dbt tests to ensure data quality. If you’re just starting out with dbt, be sure to check out our Introduction to dbt course to learn more.

What Is dbt?

As we explore in our separate guide, dbt (data build tool) is a popular open-source tool used by data teams to transform raw data in their warehouse into clean, tested, documented, and analytics-ready datasets.

Unlike ETL tools, dbt focuses solely on the "T" (Transform) component of the data pipeline and operates on top of modern cloud data warehouses like BigQuery, Snowflake, Redshift, and Databricks.

One of dbt’s most powerful features is its built-in data testing framework, which allows data practitioners to write tests that validate assumptions about their data. This testing framework is not only helpful for catching issues early but is also crucial for maintaining data quality and trust in a growing, modular analytics codebase.

Why Test in dbt?

Testing is a foundational part of analytics engineering. As datasets grow and more stakeholders depend on data, the cost of undetected data issues rises. dbt’s testing capabilities help you:

- Detect data issues early: Before downstream dashboards and reports are affected.

- Automate quality checks: Avoid manual validation work.

- Encourage good data modeling practices: Promote modular and testable transformations.

- Support CI/CD practices: Integrate seamlessly into automated workflows.

- Enforce data contracts: Validate that key constraints (e.g., no duplicates) hold true over time.

Types of dbt Tests

In dbt, tests are SQL-based assertions that validate data assumptions. Tests in dbt fall into two main categories:

1. Generic (built-in) tests

These tests are pre-defined by dbt and are applied declaratively in YAML files. Generic tests cover common constraints that are typically found in data modeling and data warehousing.

They are:

- Easy to configure

- Reusable across columns and models

- Highly performant for simple checks

The four built-in generic test types include:

not_null: Ensures a column does not contain any NULL values.unique: Ensures a column’s values are distinct across rows (used commonly for primary keys).accepted_values: Restricts a column to a predefined set of allowed values.relationships: Validates referential integrity between tables, ensuring that foreign keys match values in another table.

These tests are ideal for enforcing baseline data integrity, especially around keys, categorical fields, and core table relationships.

Generic tests work by automatically generating SQL under the hood.

For example, a not_null test on the customer_id column will generate SQL similar to:

SELECT *

FROM {{ ref('customers') }}

WHERE customer_id IS NULLIf this query returns any rows, the test fails.

2. Custom (singular) tests

Custom or singular tests are user-defined SQL queries saved as .sql files in the tests/ directory. These are used for:

- Complex validations that generic tests cannot express

- Business rule enforcement

- Cross-model logic

- Statistical or conditional thresholds

Singular tests offer maximum flexibility. You can validate data in virtually any way, as long as your SQL query returns only rows that violate expectations.

For example:

- Ensuring that a product's launch date is not after its retirement date

- Detecting unusually high or low values (outlier detection)

- Validating that aggregated values align with business totals

Because of their flexibility, custom tests are ideal for analytics teams who want to enforce nuanced data quality contracts in production.

Macros included in dbt can also be used to test, such as the dbt-utils package.

In summary:

|

Feature |

Generic Tests |

Custom (Singular) Tests |

|

Defined in |

schema.yml |

.sql files in tests/ folder |

|

Coverage |

Common constraints (e.g. nulls, keys) |

Any logic expressible in SQL |

|

Complexity |

Simple |

Medium to complex |

|

Reusability |

High |

Low (usually case-specific) |

|

Flexibility |

Limited |

Unlimited (any SQL logic) |

Combining both types gives you the best of both worlds: some consistency and coverage from generic tests, and some precision from custom tests. Let’s explore each type in more detail.

1. Generic (Built-in) Tests

Generic tests are predefined by dbt and used declaratively by adding them to your model’s schema.yml file.

These tests typically validate constraints like uniqueness, non-nullability, referential integrity, or values in a defined set.

Let’s explore a simple tutorial to try out generic tests in dbt.

How to declare a generic test

In your model's corresponding schema.yml file, define tests under the columns: section:

version: 2

models:

- name: customers

description: "Customer dimension table"

columns:

- name: customer_id

description: "Unique customer identifier"

tests:

- not_null

- unique

- name: email

tests:

- not_nullExample: not_null and unique

yaml

columns:

- name: customer_id

tests:

- not_null

- uniqueThis test above ensures that customer_id is both present in every row and distinct. It's commonly used to enforce the assumption that customer_id is a primary key for the table.

Example: accepted_values

columns:

- name: customer_type

tests:

- accepted_values:

values: ['new', 'returning', 'vip']This checks that the customer_type field only contains one of the three allowed strings: new, returning, or vip. This test is often used for categorical fields that must conform to a known set of values, such as enums or statuses.

Example: relationships

columns:

- name: customer_id

tests:

- relationships:

to: ref('customers')

field: idThis enforces a referential integrity constraint by verifying that every customer_id in the current model exists as an id in the customers table. It mimics a foreign key constraint in SQL, but at the analytics layer.

2. Custom (Singular) Tests

When built-in tests are insufficient for your use case, for instance, you want to validate complex business logic, you can write custom tests using SQL. These are known as singular tests.

How to create a custom test

1. Create a .sql file inside the tests/ directory in your dbt project.

2. Write a SQL query that returns rows that fail the test.

Example: No future dates in orders

In the tests folder, create a file called no_future_dates.sql.

SELECT *

FROM {{ ref('orders') }}

WHERE order_date > current_dateThis test checks whether any records in the orders table have an order_date in the future. If rows are returned, the test fails, alerting you to invalid data that may be caused by timezone errors, ETL bugs, or incorrect source system entries.

Example: Duplicate emails per region

-- File: tests/duplicate_emails_per_region.sql

SELECT email, region, COUNT(*) as occurrences

FROM {{ ref('customers') }}

GROUP BY email, region

HAVING COUNT(*) > 1This test ensures that each email is unique within a given region. This might reflect a business rule where the same person cannot register twice in the same region. Any row returned indicates a data quality violation.

How to Implement Tests in dbt

This section walks through the practical steps to implement, configure, and run dbt tests within your project and deployment pipelines.

Step 1: Setting up dbt

Before you begin writing tests or models, you need to have dbt installed and a new project initialized.

Step-by-step Instructions:

1. Create project folder:

Create a folder in a location of your choice.

2. Navigate into the project folder:

cd dbt-test-project3. Create virtual Python environment:

python3 -m venv dbt-envNext, activate the environment once it has been created.

dbt-venv\Scripts\activate4. Install dbt

pip dbt install5. Create .dbt folder

mkdir $home\.dbt6. Initialize dbt

dbt init7. Create profiles.yml file

Create a new file in your .dbt folder with the following contents:

austi:

target: dev

outputs:

dev:

type: sqlite

threads: 1

database: ./dbt_project.db

schema: main

schema_directory: ./schemas

schemas_and_paths:

main: ./dbt_project.dbYou can replace “austi” with the name of your user profile of your Windows computer.

8. Create dbt_project.yml file

Next, you’ll need to create another config file in the .dbt folder with the following contents.

name: dbt_test_project

version: '1.0'

profile: austi

seeds:

dbt_test_project:

+quote_columns: falseOnce again, replace “austi” with your user profile name.

9. Verify the project works:

dbt debugOnce setup is complete, you’re ready to begin creating datasets and building dbt models.

Step 2: Creating the dataset

If you're working without access to a data warehouse, you can simulate dbt tests locally using CSV files and dbt's seed functionality.

Step-by-step Instructions:

1. Create CSV files: Place these inside a new /seeds folder of your dbt project.

Here’s how you should name it:

seeds/customers.csv

customer_id,name,email,customer_type

1,Alice Smith,alice@example.com,new

2,Bob Jones,bob@example.com,returning

3,Carol Lee,carol@example.com,vip

4,David Wu,david@example.com,new2. Create another file in the same directory:

Use this naming convention:

seeds/orders.csv

order_id,customer_id,order_date,order_total,order_status

1001,1,2023-12-01,150.00,shipped

1002,2,2023-12-03,200.00,delivered

1003,1,2023-12-05,175.00,cancelled

1004,3,2024-01-01,225.00,pending3. Create a config file to identify seeds:

Next, you’ll need to create a config file called dbt_project.yml.

Paste the following contents in the config file.

name: dbt_test_project

version: '1.0'

profile: austi

seeds:

dbt_test_project:

+quote_columns: falseChange the profile field to match the name of your user profile on your Windows computer.

4. Load seed data:

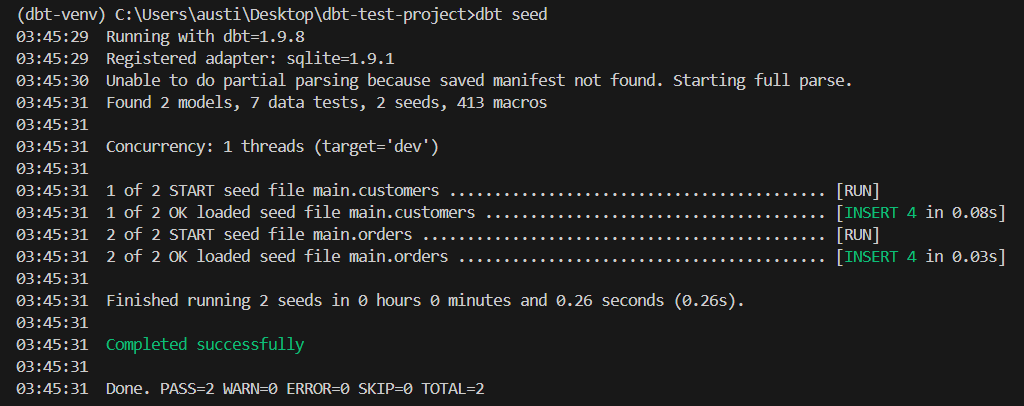

dbt seedThis command creates main.customers and main.orders tables from the CSV files. These are the seeds needed to replace a database.

As you can see from the image above, two seeds have been found and have been loaded.

Step 3: Ingesting into dbt

Now, we’ll create staging models to transform and test your raw data.

Step-by-step Instructions:

1. Create staging models:

Create a new folder called models in your project folder. In that folder, create a file called stg_customers.sql.

Paste the following code in the file:

SELECT

*

FROM {{ ref('customers') }}In another file called stg_orders.sql, paste the following code:

SELECT

*

FROM {{ ref('orders') }}2. Define schema tests:

Create a new file at the following location:

models/schema.yml

Paste the following into the file

version: 2

models:

- name: stg_customers

description: "Customer staging table"

columns:

- name: customer_id

description: "Unique identifier for each customer"

tests:

- not_null:

tags: [critical]

- unique:

tags: [critical]

- name: email

description: "Email address of the customer"

tests:

- not_null

- name: stg_orders

description: "Orders staging table"

columns:

- name: order_id

description: "Unique identifier for each order"

tests:

- not_null:

tags: [critical]

- unique:

tags: [critical]

- name: customer_id

description: "Foreign key to stg_customers"

tests:

- not_null:

tags: [critical]3. Run dbt to build staging models:

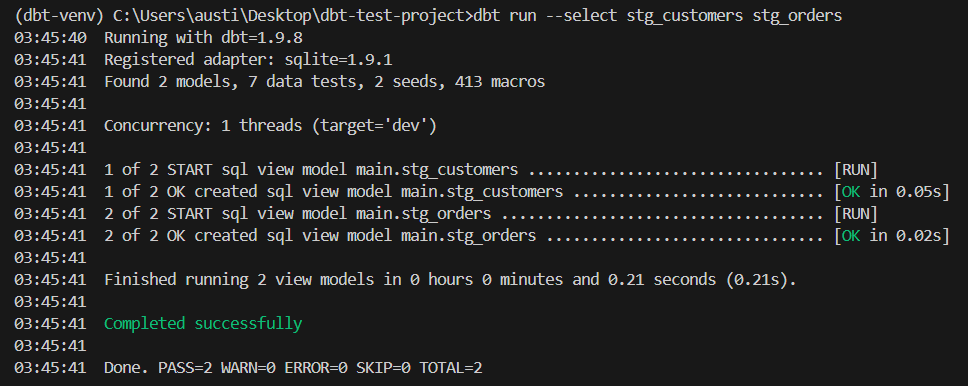

dbt run --select stg_customers stg_ordersThese staging models now act as the foundation for applying dbt tests using your locally seeded data.

An example output would be:

Step 4: Creating your first test

Now that your data is staged, let’s implement your first dbt test using the generic built-in testing framework.

Step-by-step instructions:

1. Open the schema.yml file where your staging model is defined.

2. Under the columns: section of your model, define a test such as not_null or unique.

Example:

models:

- name: stg_customers

columns:

- name: customer_id

tests:

- not_null

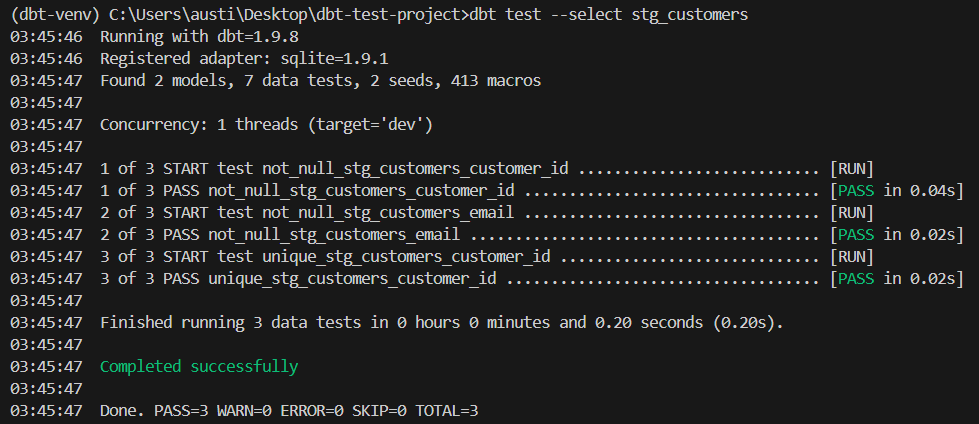

- unique- Run the test using the CLI:

dbt test --select stg_customersHere’s an expected result:

Step 5: Developing custom and singular tests

When your needs go beyond built-in tests, you can create custom tests using SQL.

Step-by-step instructions:

1. Create a new file in the tests/ folder, for example: tests/no_future_orders.sql

2. Add SQL logic that returns failing rows:

SELECT *

FROM {{ ref('stg_orders') }}

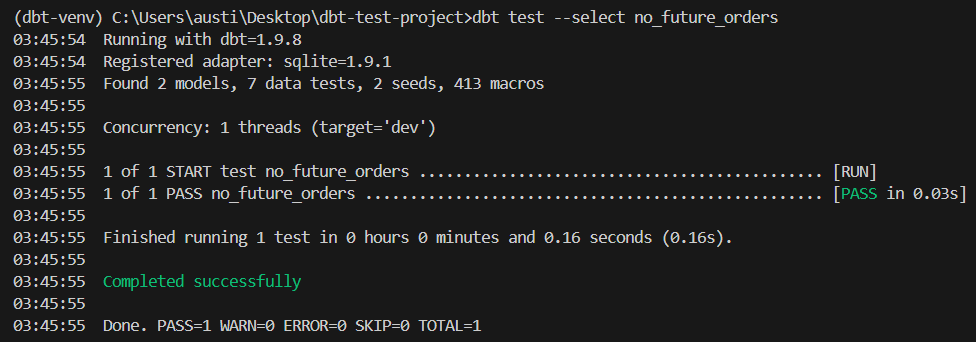

WHERE order_date > current_date3. Run the test:

dbt test --select no_future_ordersThis test will fail if any orders have future-dated order_date values.

Here is an expected result:

Step 6: Running tests in CI/CD pipelines

To ensure consistent test execution, integrate dbt tests into your development pipeline.

Step-by-step instructions:

1. Use tags to prioritize or isolate tests:

You can modify any model schema to include the following tags in this format:

columns:

- name: customer_id

tests:

- not_null:

tags: [critical]2. Run selective tests locally:

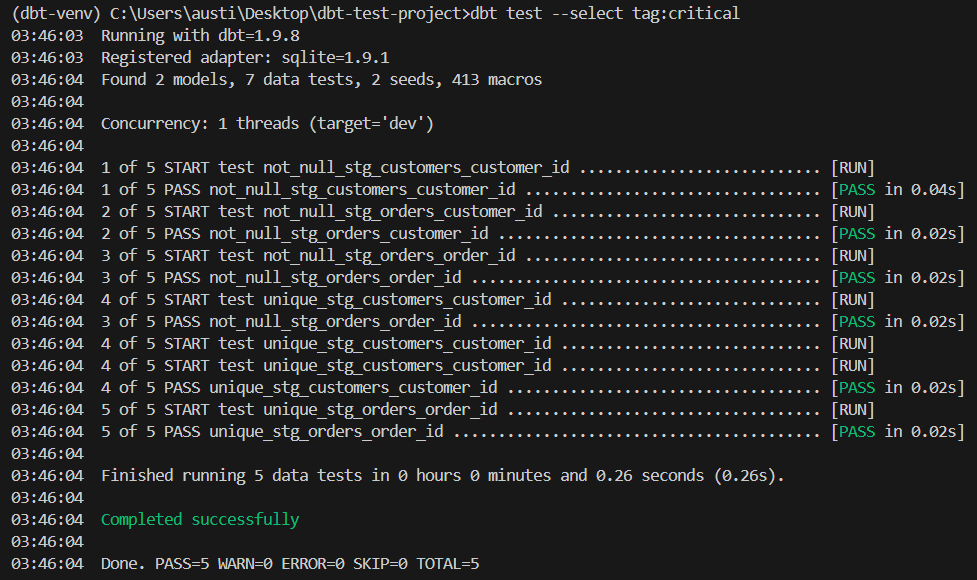

dbt test --select tag:criticalIf the tests run correctly, you should expect this output:

3. Configure your CI workflow to run dbt tests automatically. You can connect this to a CI platform like GitHub Actions.

Best Practices for dbt Tests

Implementing dbt tests effectively is not just about writing them; it's about integrating testing into your team's development lifecycle and culture. Here are some best practices to guide you:

1. Start with primary keys

Always apply not_null and unique tests to primary key columns in dimension and fact tables. These two constraints are the foundation of reliable joins and deduplication logic in downstream queries.

2. Validate foreign keys with relationships

Foreign keys are critical for maintaining referential integrity. Use the relationships test to mimic foreign key constraints, especially in data warehouses that do not enforce them natively.

3. Use accepted_values for categorical columns

Control dimensional consistency by enforcing specific values in columns like status, region, or product_type. This reduces the risk of typos or unhandled enum expansions.

4. Write custom tests for business logic

Business logic doesn’t always map cleanly to generic rules. Examples of logic you should write custom tests for include:

- Sales must be non-negative

- Order dates cannot be in the future

- A user cannot have multiple active subscriptions

5. Organize tests clearly

If you have many tests set in place, it can get messy very quickly. You should consider these best practices to keep them organized:

- Place custom tests in the

tests/folder and name them descriptively. - Avoid overly complex SQL in tests—keep logic focused and readable.

- Group tests by model or domain where possible.

6. Fail fast and integrate with CI/CD

Run dbt test as part of your CI pipeline. This ensures that code cannot be merged unless data quality constraints pass. Integrating tests into CI/CD reinforces accountability and trust in the data pipeline.

7. Avoid over-testing

Excessive or unnecessary testing (especially on large volumes of data) can slow down deployments. Focus on tests that are:

- Business-critical

- Likely to fail due to upstream issues

- Helpful for debugging when broken

Avoid testing calculated fields unless they are part of a contractual SLA.

Advanced Example: Outlier Detection

For an advanced test example, we can perform an outlier detection test.

Create a SQL file in your tests folder with the following code:

SELECT *

FROM {{ ref('orders') }}

WHERE order_total > 100000This is a basic outlier detection test. If your business typically sees orders below $10,000, you can flag orders over $100,000 for manual review. While not a strict data quality violation, this can be valuable for fraud detection or operational monitoring.

Performance Considerations

As your dbt project scales and test coverage grows, performance becomes increasingly important. This section outlines techniques and strategies to maintain test efficiency and control computational costs.

Test optimization techniques

For testing, try these techniques to optimize performance:

- Incremental testing: Focus tests only on newly added or modified data by using dbt's incremental model structure. This reduces unnecessary scans over full datasets.

- Query parallelization: Use dbt’s built-in parallel execution by configuring the threads: parameter in dbt_project.yml to run multiple tests concurrently.

- Selective test execution: Use flags like --select and --exclude to run only relevant tests, especially during development. For example:

dbt test --select customers

dbt test --exclude tag:slowCost management strategies

Implementing cost management strategies ensures dbt tests remain performant and cost-efficient, especially in environments where compute billing is tied to query complexity and frequency.

Some strategies are:

- Tag-based test execution: Assign tags to resource-intensive or optional tests so they can be excluded from standard runs unless explicitly required.

- Result caching: Utilize warehouse-specific features (e.g., result caching in BigQuery or Snowflake) to avoid repeated computation where possible. Organize tests to benefit from cached results of previously executed queries.

- Scheduled test batching: Schedule heavier test suites during off-peak hours or outside of production windows to minimize impact on warehouse workloads.

Conclusion

dbt tests are a powerful way to ensure high data quality, automate validation checks, and catch issues early in your transformation pipeline. Generic and custom tests can be used to create robust and maintainable data workflows.

Learn more about dbt in our Introduction to dbt course or our dbt tutorial.

dbt Tests FAQs

What is the unique test in dbt?

In dbt (data build tool), the unique test ensures that all values in a specified column are distinct, meaning there are no duplicate entries.

What are the four generic tests that dbt ships with?

dbt ships with four tests: not_null, unique, accepted_values, and relationships.

What are some dbt test types?

dbt test types include generic built-in tests and custom singular tests.

I'm Austin, a blogger and tech writer with years of experience both as a data scientist and a data analyst in healthcare. Starting my tech journey with a background in biology, I now help others make the same transition through my tech blog. My passion for technology has led me to my writing contributions to dozens of SaaS companies, inspiring others and sharing my experiences.