Course

If you work with large language models, you know that prompt engineering can be a bit of a challenge. You can spend hours adjusting prompts only to get mixed results. It's frustrating, takes up a lot of time, and often requires lots of trial and error to achieve optimal results.

One solution to this problem is DSPy—a new framework that changes the way we know prompt engineering. Instead of focusing on crafting perfect prompts, DSPy lets us program the models directly.

In this tutorial, I’ll explain DSPy and why it's different from older methods. You'll learn about its main features and benefits and how it works. I'll guide you through your first steps with DSPy and direct you to helpful resources and communities.

Let's get started!

What Is DSPy?

DSPy is an open-source tool created by Stanford University that "compiles declarative language model calls into self-improving pipelines." Instead of spending time crafting perfect prompts, DSPy lets you program the AI models directly.

This makes AI apps more reliable and easier to scale. DSPy separates your app's logic from the text it uses, so you can focus on what you want your AI to do. Meanwhile, DSPy optimizes the prompts behind the scenes.

Let’s explore some of its key features.

Declarative programming

With DSPy, you define the task you want to accomplish and the metrics to measure success. The framework then optimizes the model's behavior for you. It uses easy-to-understand Python syntax, allowing you to concentrate on what your application should do rather than how to prompt the model.

Self-improving prompts

One of DSPy's standout features is its ability to automatically improve prompts over time. DSPy continuously refines the prompts, saving you from the hassle of constant manual adjustments. This is achieved using feedback and evaluation, ensuring that the model performs better with each iteration.

Modular architecture

DSPy also offers a modular architecture, enabling you to mix and match pre-built modules for different natural language processing (NLP) tasks. This modularity makes it highly customizable to fit your specific needs, promoting flexibility and reusability. The framework includes useful modules like ChainOfThought and ReAct, which can be easily integrated into your applications.

How DSPy Works

In this section, I'll walk through the main parts of DSPy and how it makes working with LLMs easier.

Task definition

With DSPy, users start by specifying the task goal and the metrics to optimize for. This means you define what you want the model to achieve and how you’ll measure its success.

DSPy uses example inputs, labeled or unlabeled, to guide the learning process. These examples help the framework understand the task better and improve its performance. Plus, DSPy introduces the concept of modules, which are reusable building blocks for various NLP tasks. These modules can be combined and customized to fit different needs.

Pipeline construction

Once the task is defined, users select and configure the appropriate modules for their specific task. This involves choosing the right modules that match the task's requirements and setting them up accordingly. DSPy allows you to chain these modules together to create complex pipelines, enabling sophisticated workflows. Each module has signatures that define the input and output specifications, ensuring the modules can work together seamlessly.

Optimization and compilation

DSPy optimizes prompts using in-context learning and automatic few-shot example generation. This means the framework continuously refines the prompts to improve the model's performance. DSPy can also fine-tune smaller models for tasks requiring more specific tuning.

Finally, DSPy compiles the entire pipeline into executable Python code, making it easy to integrate into your applications. This compilation process ensures that the pipeline runs efficiently and effectively.

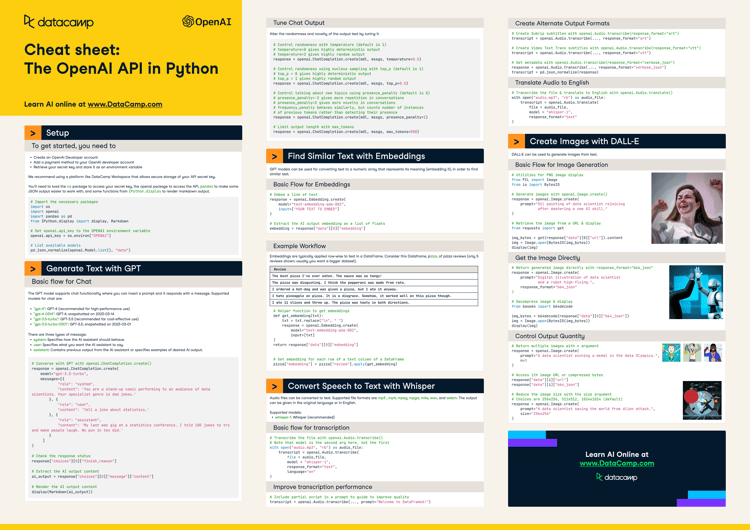

Figure 1: DSPy Workflow: From Data to Optimized AI Model

This diagram illustrates DSPy's core workflow, showing how it transforms raw data into an optimized AI model. The process begins with a dataset, which informs the signature (the input/output structure). This signature is used to create a module, which is then optimized using DSPy's advanced techniques. Finally, the optimized module undergoes evaluation to ensure it meets the desired performance criteria.

This streamlined approach allows you to focus on high-level design while DSPy handles the complexities of prompt engineering and model optimization.

Advantages of DSPy

DSPy offers several key benefits that make it a powerful tool for working with LLMs:

Improved reliability

DSPy's declarative approach leads to more reliable and predictable LLM behavior. Instead of manually crafting prompts, you define what you want the model to do. DSPy then figures out how to make it happen consistently. This means fewer unexpected outputs and more stable performance across different tasks.

Let’s say you're building a customer support chatbot. Instead of writing specific prompts, with DSPy you might define your intent like this:

- Understand the customer's question.

- Retrieve relevant information from the knowledge base.

- Generate a helpful, empathetic response.

- Check if the response answers the original question.

- If not, refine the answer.

DSPy would then handle:

- Crafting optimal prompts for each step.

- Managing the flow of information between steps.

- Optimizing the overall process for accuracy and consistency.

For instance, DSPy might learn that starting responses with "I understand your concern about..." leads to better customer satisfaction in step 3. Or, for step 4, it might develop an effective way to compare the response to the original question.

The key is that you focus on defining the high-level structure and goals. DSPy takes care of prompt engineering and optimization details, leading to more reliable and predictable behavior from the LLM across various customer inquiries.

This approach means you can easily adjust the chatbot's behavior (e.g., make it more formal or add a new step to check for sensitive information) without having to rewrite all your prompts manually. DSPy would adapt and optimize for the new requirements automatically.

Simplified development

The modular architecture and automatic prompt optimization in DSPy make LLM development much easier. You can build complex applications by combining pre-built modules, like putting together building blocks. DSPy handles the tricky part of optimizing prompts behind the scenes so you can focus on your application's logic rather than tweaking prompts endlessly.

Imagine you're creating a content creation assistant for a blog. Without coding, you might conceptualize your application like this:

1. Topic Generator Module

- Input: Blog niche and target audience

- Output: List of potential blog topics

2. Outline Creator Module

- Input: Selected blog topic

- Output: Detailed outline for the blog post

3. Content Writer Module

- Input: Blog outline

- Output: Full blog post draft

4. Editor Module

- Input: Blog post draft

- Output: Edited and polished blog post

5. SEO Optimizer Module

- Input: Edited blog post

- Output: SEO-optimized version of the postIn this scenario, DSPy would:

- Provide these pre-built modules that you can simply select and arrange.

- Automatically optimize the prompts for each module behind the scenes.

- Handle the flow of information between modules.

You don't need to write any code or craft any prompts. Instead, you just choose the modules you need, arrange them in the order you want, and specify your inputs (like blog niche and target audience).

Adaptability

When it comes to adapting to new tasks and domains, DSPy is great. You simply adjust the task definition and metrics, and DSPy reconfigures itself to meet these new requirements. This flexibility means you can quickly apply your LLM to different use cases without starting from scratch each time.

Suppose you've built a customer support chatbot for a tech company using DSPy. Initially, the chatbot's task is to answer tech support questions, with metrics focused on response accuracy and solution relevance, all within the domain of computer hardware and software.

Now, you want to adapt this chatbot for a healthcare company. To do this, you adjust the task definition to "answer healthcare-related customer queries" and modify the metrics to include "medical accuracy" and an "empathy score" for handling sensitive health issues. You also specify the new domain, which now covers general healthcare, medical procedures, and insurance.

With these changes, DSPy automatically reconfigures itself. It adjusts its internal processes to focus on medical knowledge bases, adapts its language generation to use more empathetic and medically accurate terms, and changes its evaluation criteria to prioritize medical accuracy and empathy.

You then provide a small set of healthcare-related Q&A examples. DSPy uses these examples to fine-tune its approach without requiring you to rewrite any prompts.

As a result, your chatbot now effectively handles healthcare queries, providing medically accurate information and communicating with the appropriate empathy for health-related concerns.

In this way, you didn't need to code anything new. Redefining the task, adjusting the metrics, and providing new examples was enough for DSPy to reconfigure the underlying LLM interactions to meet the new requirements.

Scalability

DSPy's optimization techniques show their worth when it comes to handling large-scale tasks. The framework can improve LLM performance on big datasets or complex problems by automatically refining prompts and adjusting the model's behavior. This scalability ensures that your applications can grow and tackle more challenging tasks as needed.

Suppose you're developing a recommendation system for an e-commerce platform. Initially, your system needs to process a large dataset of user interactions and product details to generate personalized recommendations.

Without DSPy, you would manually craft prompts for each step, such as retrieving user history, analyzing preferences, and suggesting products. This process would involve a lot of trial and error to get the prompts just right, especially as the dataset grows and the complexity increases.

With DSPy, the process is much simpler and more efficient.

You start by defining the task: generating personalized product recommendations. You specify the metrics to optimize for, such as recommendation accuracy and user satisfaction.

Next, you provide DSPy with a dataset of user interactions and product details. This dataset helps DSPy understand the task and improve its performance.

DSPy then uses its modular architecture to break the task into smaller, manageable modules. For example, one module might handle retrieving user history, another might analyze preferences, and a third might generate product suggestions.

DSPy automatically optimizes the prompts and adjusts the model's behavior as you provide more data and refine your task definition. You don't have to tweak each prompt manually—DSPy does it for you behind the scenes.

For instance, if the dataset grows or the complexity of user interactions increases, DSPy will reconfigure itself to handle the larger scale. It will refine the prompts and adjust the model's parameters to ensure consistent and reliable performance.

This scalability ensures that your recommendation system can grow and tackle more challenging tasks as needed without requiring you to start from scratch each time. DSPy's optimization techniques make it possible to handle large-scale tasks efficiently, allowing you to focus on the high-level logic of your application rather than the intricacies of prompt engineering.

Use Cases of DSPy

DSPy can be applied to a wide range of natural language processing tasks. Let’s explore a few.

Question answering

DSPy is really good at building robust Question Answering (QA) systems. It can combine retrieval-augmented generation (RAG) with chain-of-thought prompting to create powerful QA tools. This means you can build systems that find relevant information and reason through complex questions step-by-step, providing more accurate and insightful answers.

Text summarization

With DSPy, creating summarization pipelines becomes much simpler. You can easily set up systems that adapt to different input lengths and writing styles. This flexibility allows you to summarize anything from short articles to lengthy documents, maintaining the key points while adjusting the summary style to suit your needs.

Code generation

DSPy can help generate code snippets from descriptions. This is particularly useful for developers who want to quickly prototype ideas or non-programmers who need to create simple scripts.

Language translation

DSPy can make machine translation much better. It helps creating smarter translation systems that don't just translate words, but understand context and culture too.

With DSPy, you can build a translator that gets idioms and sayings right, keeps the original text's style and tone, and works well for specific areas like law, medicine, or tech. It can even explain why it chose certain translations.

Chatbots and Conversational AI

DSPy can make chatbots feel more like talking to a real person. Instead of giving pre-written answers, a DSPy chatbot can remember what you've been talking about and have back-and-forth conversations that make sense. It gives answers that fit your question better and can change how it talks to match what you like. These chatbots can even do tricky tasks that need thinking and decision-making. These improvements make chatbots more helpful and easy to talk to, almost like conversing with a knowledgeable friend.

Getting Started With DSPy

You can install DSPy using pip. Open your terminal or command prompt and run:

pip install dspy-aiThis command will install the latest stable version of DSPy.

If you want to explore DSPy's features with additional integrations, you can install it with extras. For example, to include Pinecone support, use:

pip install "dspy-ai[pinecone]"Similar commands are available for other integrations like Qdrant, ChromaDB, and Marqo.

DSPy Resources

To learn more about using DSPy, check out the official documentation. It provides detailed tutorials and examples to help you get started and make the most of the framework's capabilities.

The official GitHub repo includes the source code, issue tracker, and additional examples.

While DSPy is still a relatively new framework, its community is growing. You can find discussions and get help on GitHub, where you can open issues or participate in discussions. As the community expands, more resources and shared experiences will likely become available to support your journey with DSPy.

Remember, DSPy is actively developed, so keep an eye on updates and new features that might enhance your projects.

This page provides installation instructions and release information for the DSPy package.

If you are a fan of working in Notebooks, DSPy Colab Notebook is an interactive Colab notebook to help you get started with DSPy quickly.

Finally, you can join the Discord server to connect with other DSPy users, ask questions, and share experiences.

Conclusion

In sum, DSPy offers a more intuitive and powerful way to work with AI, moving away from prompt engineering and towards programming foundation models. Let's recap what we have covered in this article:

- DSPy is a declarative, self-improving framework that simplifies LLM application development.

- It features declarative programming, self-improving prompts, and a modular architecture, making it easier to build complex AI systems.

- DSPy allows users to define tasks, construct pipelines, and optimize prompts automatically.

- The framework offers improved reliability, simplified development, adaptability, and scalability compared to traditional prompt engineering methods.

- DSPy can be applied to a wide range of use cases, including question answering, text summarization, code generation, and custom NLP tasks.

As you keep working with DSPy, don't forget to use the community resources. Also, stay up to date with what's new in this evolving field—I recommend reading these blog posts if you want to learn about some of the latest developments:

FAQs

What are the system requirements for installing and running DSPy?

DSPy requires Python 3.7 or higher. It is recommended to have a modern operating system (Windows, macOS, or Linux) and sufficient RAM (at least 8GB) for handling large language models. A GPU is beneficial for faster processing but not mandatory.

Are there any limitations or known issues with DSPy that users should be aware of?

Some known limitations of DSPy include potential performance variability across different language models, the need for substantial computational resources for large-scale tasks, and the ongoing development of community support and documentation. Users should stay updated with the latest releases and community discussions to mitigate these issues.

Does DSPy support multilingual tasks and how effective is it?

DSPy supports multilingual tasks by leveraging language models that are trained on multiple languages. You can specify the language in the task definition, and DSPy will optimize the prompts accordingly. However, the effectiveness may vary depending on the language model's training data.

Does DSPy work with all language models?

DSPy is designed to work with a variety of large language models. It's compatible with popular models like GPT-3, GPT-4, and other OpenAI models. It can also be used with open-source models. However, you should check the latest documentation for the most up-to-date information on model compatibility.

Can I use DSPy for commercial projects?

Yes, DSPy is open-source and available under the Apache License 2.0, which allows for commercial use. However, be sure to check the licensing terms of the specific language models you're using with DSPy, as these may have different restrictions.

Ana Rojo Echeburúa is an AI and data specialist with a PhD in Applied Mathematics. She loves turning data into actionable insights and has extensive experience leading technical teams. Ana enjoys working closely with clients to solve their business problems and create innovative AI solutions. Known for her problem-solving skills and clear communication, she is passionate about AI, especially generative AI. Ana is dedicated to continuous learning and ethical AI development, as well as simplifying complex problems and explaining technology in accessible ways.