
Natural Language Processing (NLP) has been around for over seven decades. It started with simple linguistic methods and gradually expanded into fields like artificial intelligence and data science, evolving along the way into a field driven largely by machine learning (ML).

Its importance surged in 2011 with the launch of Siri, a successful NLP-powered assistant. NLP is central to many AI applications, such as chatbots, sentiment analysis, machine translation, and more.

In this article, I’ll explain how you can learn NLP and how it can benefit you as a data practitioner. I’ll also break this broad field down into easy-to-understand concepts and provide a learning plan so you can start from scratch.

Why Learn NLP?

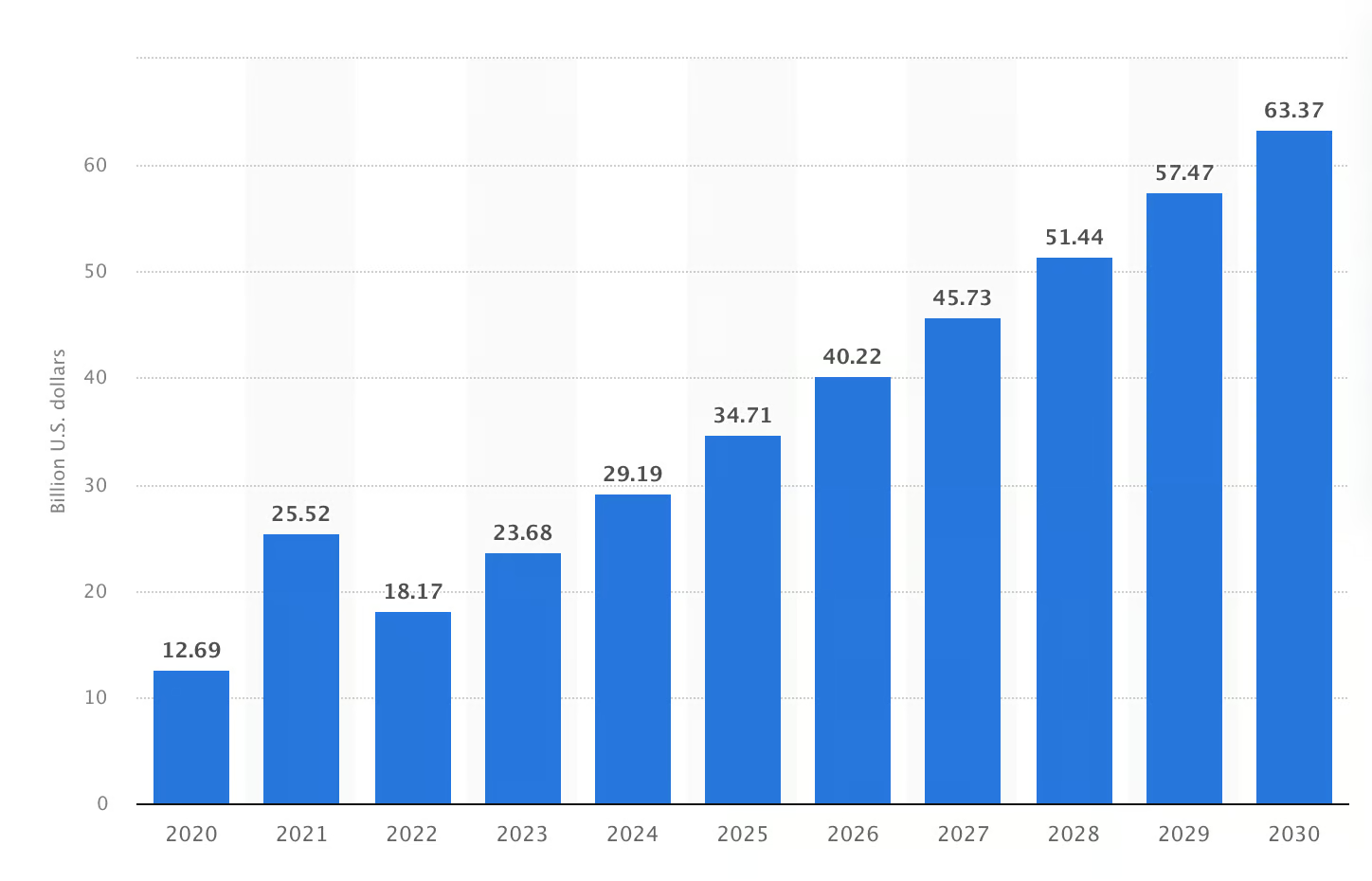

The NLP market grew beyond $23 billion in 2023 and is expected to surpass $60 billion by 2030. But do you know why this upsurge is predicted? NLP bridges the communication gap between technology and humans. Organizations rely on it to process unstructured data in less time to make better decisions.

The expected size of the NLP market worldwide will be from 2020 to 2030 (in billions of U.S. dollars). Image source: Statista

Today, NLP powers a wide range of real-world applications:

- In healthcare, it helps analyze medical records; in finance, it helps uncover market trends.

- Six in ten U.S. consumers say NLP-powered chatbots save them time when browsing eCommerce products.

- Sentiment analysis, another key application, interprets the emotions behind text such as social media comments.

NLP is used across many fields, from healthcare and finance to eCommerce and marketing, so learning it can expand your career options, especially in data science, AI, and software development.

As AI adoption grows, so does the demand for NLP experts who can handle complex tasks that general-purpose models can't solve. Simply put, learning NLP sets you up for a career with plenty of opportunities and long-term relevance.


Core Concepts to Understand in NLP

If you're new to NLP and unsure which basic and advanced topics it covers, here are the core concepts you need to learn. They'll also give you a feel for what working in NLP looks like.

Text preprocessing

Text preprocessing cleans and standardizes raw text so that models can process it, while preserving as much of its original meaning and context as possible. It is done in several steps, and the number of steps varies with the nature of the text and what you want to achieve with NLP.

The most common steps include:

- Tokenization: Tokenization splits text into smaller units called tokens, such as words, characters, or punctuation marks. For example, the sentence “I want to learn NLP.” can be tokenized into “I”, “want”, “to”, “learn”, “NLP”, and “.”.

- Stopword removal: Stopwords are very common words, such as “is”, “the”, and “and”, that carry little meaning on their own. Removing them helps models focus on the more informative words.

- Stemming: Stemming strips suffixes to reduce words to a root form (the stem), which isn't always a real word. For example, “going” becomes “go”, but a stemmer may reduce “studies” to “studi”.

- Lemmatization: Lemmatization reduces words to their dictionary form (the lemma), which is always a valid word. For example, “studies” becomes “study”. It's slower and more complex than stemming because it relies on vocabulary lookups and, often, part-of-speech information.
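To make these four steps concrete, here is a minimal pure-Python sketch. The stopword list, suffix list, and lemma table are small hypothetical stand-ins chosen for illustration; in practice you'd use library implementations such as NLTK's PorterStemmer and WordNetLemmatizer, or spaCy's processing pipeline.

```python
import re

# Hypothetical, deliberately tiny stopword list; NLTK and spaCy ship much longer ones.
STOPWORDS = {"i", "to", "the", "is", "and", "a", "an", "of"}

# Toy suffix list for illustration only; real stemmers (e.g., Porter) apply ordered rule sets.
SUFFIXES = ("ing", "ed", "es", "s")

# Hypothetical lemma lookup; real lemmatizers consult a dictionary such as WordNet.
LEMMAS = {"studies": "study", "went": "go", "better": "good"}


def tokenize(text):
    """Split text into word tokens and single punctuation tokens."""
    return re.findall(r"\w+|[^\w\s]", text)


def remove_stopwords(tokens):
    """Drop tokens whose lowercase form is in the stopword list."""
    return [t for t in tokens if t.lower() not in STOPWORDS]


def toy_stem(word):
    """Strip the first matching suffix, keeping at least two characters."""
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= 2:
            return word[: -len(suffix)]
    return word


def toy_lemmatize(word):
    """Look the word up in the lemma table; fall back to the word itself."""
    return LEMMAS.get(word.lower(), word)


tokens = tokenize("I want to learn NLP.")
print(tokens)                                           # ['I', 'want', 'to', 'learn', 'NLP', '.']
print(remove_stopwords(tokens))                         # ['want', 'learn', 'NLP', '.']
print(toy_stem("going"), toy_stem("studies"))           # go studi
print(toy_lemmatize("studies"), toy_lemmatize("went"))  # study go
```

The contrast in the last two lines mirrors the difference described above: the stemmer chops “studies” to the non-word “studi”, while the lemmatizer returns the dictionary form “study”.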

Bag-of-words (BoW) and TF-IDF

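Bag-of-words and TF-IDF are two classic ways to turn text into numeric features, widely used in information retrieval and text classification. Here's an overview of each:

- Bag-of-words (BoW) represents each document as an unordered collection of its words. It builds a vocabulary from every word in the corpus (the collection of texts you're working with), treats each word as a feature, and records how many times that word appears in each document. Word order is ignored. You can use it to capture word occurrences across large amounts of text.

- TF-IDF (term frequency-inverse document frequency) builds on BoW. It weights each word by how often it appears in a document (term frequency) and scales that weight down for words that appear in many documents across the corpus (inverse document frequency). As a result, common words like “the” get low scores, while words that are frequent in one document but rare elsewhere get high scores. You can use it to highlight the distinctive words in a document.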
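The sketch below computes both representations from scratch on a made-up three-document corpus. It uses the plain textbook weighting, term frequency times log(N / df), where N is the number of documents and df is how many of them contain the word. Libraries such as scikit-learn's TfidfVectorizer use a smoothed and normalized variant by default, so their numbers will differ.

```python
import math
from collections import Counter

# A made-up toy corpus of three short documents.
docs = [
    "the cat sat on the mat",
    "the dog sat on the log",
    "the cat chased the dog",
]

tokenized = [doc.split() for doc in docs]
vocab = sorted({word for doc in tokenized for word in doc})


def bag_of_words(doc_tokens):
    """Count how often each vocabulary word occurs in one document."""
    counts = Counter(doc_tokens)
    return [counts[word] for word in vocab]


# Document frequency: in how many documents each word appears.
n_docs = len(tokenized)
doc_freq = {word: sum(1 for doc in tokenized if word in doc) for word in vocab}
# Plain (unsmoothed) inverse document frequency: log(N / df).
idf = {word: math.log(n_docs / doc_freq[word]) for word in vocab}


def tf_idf(doc_tokens):
    """Term frequency (count / document length) times IDF, for each vocabulary word."""
    counts = Counter(doc_tokens)
    return {word: counts[word] / len(doc_tokens) * idf[word] for word in vocab}


print(vocab)                       # ['cat', 'chased', 'dog', 'log', 'mat', 'on', 'sat', 'the']
print(bag_of_words(tokenized[0]))  # [1, 0, 0, 0, 1, 1, 1, 2]
scores = tf_idf(tokenized[0])
print(scores["the"])               # 0.0 because "the" appears in every document
print(scores["mat"] > scores["cat"])  # True: "mat" is rarer across the corpus than "cat"
```

Note how BoW gives “the” the highest count in the first document, while TF-IDF drives its score to zero.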

Word embeddings

Word embeddings represent words as dense vectors in a continuous vector space, where words with similar meanings end up close to each other. Machine learning models rely on them to understand and work with text data. Three commonly used embedding techniques are Word2Vec, GloVe, and FastText. Let's see what sets them apart:

- Word2Vec learns each word's vector from the words around it, either by predicting a word from its neighbors (CBOW) or its neighbors from the word (skip-gram), which lets it capture semantic meaning. However, it only learns embeddings for words found in the training data, so it can't handle out-of-vocabulary (OOV) words.

- GloVe builds a co-occurrence matrix that records how often pairs of words appear near each other across the whole corpus, then learns vectors that reflect those statistics. Words that appear in similar contexts end up close together, which captures the semantic relationships between them.

- FastText breaks words into character n-grams (subwords) and learns embeddings for these smaller parts. Because a word's vector is built from its subwords, FastText can produce vectors even for OOV words and rare word forms.
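Two ideas from this section fit in a few lines of Python: measuring how close two embeddings are with cosine similarity, and splitting a word into FastText-style character n-grams with “<” and “>” marking the word boundaries. The three vectors below are made up for illustration (real embeddings are learned from data and usually have hundreds of dimensions), and the FastText paper extracts n-grams of lengths 3 to 6 rather than the single length used here.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two vectors: near 1 means similar direction."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def char_ngrams(word, n=3):
    """FastText-style character n-grams, with < and > marking word boundaries."""
    padded = f"<{word}>"
    return [padded[i : i + n] for i in range(len(padded) - n + 1)]


# Made-up 3-dimensional vectors for illustration only.
vectors = {
    "king": [0.80, 0.60, 0.10],
    "queen": [0.75, 0.65, 0.15],
    "banana": [0.10, 0.05, 0.90],
}

print(cosine_similarity(vectors["king"], vectors["queen"]))   # close to 1: related words
print(cosine_similarity(vectors["king"], vectors["banana"]))  # much lower: unrelated words
print(char_ngrams("where"))  # ['<wh', 'whe', 'her', 'ere', 're>']
```

Because an unseen word still breaks into familiar n-grams, a FastText-style model can assemble a vector for it from the vectors of those pieces.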

Language models

Many NLP tasks, such as text generation and speech recognition, need to estimate how likely a sequence of words is. That's where language models help: they assign probabilities to word sequences, which lets computers model human language. Two common types of language models are:

- Traditional n-gram models: An n-gram is a sequence of n words. These models estimate the probability of a word given the previous n-1 words, based on how often those sequences occur in a training corpus. Because they rely on counting, they struggle with data sparsity: many valid word sequences never appear in the training data and get zero probability unless smoothing is applied.

- Modern deep learning-based models: Learned word embeddings such as Word2Vec and GloVe transformed NLP. These models create vectors that capture semantic relationships between words, but they give each word a single vector regardless of context and cannot handle out-of-vocabulary (OOV) words.
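Here's a minimal bigram (n = 2) model in pure Python, trained on a made-up three-sentence corpus. It estimates P(word | previous word) as count(previous, word) / count(previous), with no smoothing, and multiplies these probabilities across a sentence. The <s> and </s> start and end markers are a common convention adopted here as an assumption. The second example shows data sparsity in action: a perfectly reasonable sentence gets zero probability because one of its bigrams never occurs in training.

```python
from collections import Counter, defaultdict

# A made-up training corpus; real n-gram models are trained on millions of sentences.
corpus = ["i like nlp", "i like python", "i love nlp"]


def pad(sentence):
    """Add start (<s>) and end (</s>) markers around the words."""
    return ["<s>"] + sentence.split() + ["</s>"]


def train_bigram(sentences):
    """Count how often each word follows each previous word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        tokens = pad(sentence)
        for prev, word in zip(tokens, tokens[1:]):
            counts[prev][word] += 1
    return counts


def bigram_prob(counts, prev, word):
    """Maximum-likelihood estimate of P(word | prev); 0.0 for unseen contexts."""
    following = counts.get(prev)
    if not following:
        return 0.0
    return following[word] / sum(following.values())


def sentence_prob(counts, sentence):
    """Multiply bigram probabilities across the padded sentence."""
    tokens = pad(sentence)
    prob = 1.0
    for prev, word in zip(tokens, tokens[1:]):
        prob *= bigram_prob(counts, prev, word)
    return prob


model = train_bigram(corpus)
print(sentence_prob(model, "i like nlp"))     # about 0.333: 1 * 2/3 * 1/2 * 1
print(sentence_prob(model, "i love python"))  # 0.0: "love python" never occurs in training
```

Smoothing techniques (for example, add-one smoothing) exist precisely to give such unseen sequences a small nonzero probability.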

Transformer-based models like BERT and GPT were introduced to address the limitations of earlier models. They capture context and meaning across entire sentences, so the same word can get different representations in different contexts. Here's how:

- Google's BERT is bidirectional: it builds each word's representation from the words on both its left and its right, which gives it a strong grasp of context. This helps it excel at tasks like question answering and document analysis.

- GPT is unidirectional: it reads text left to right and is trained to predict the next word from the words before it. That's why it can generate human-like, contextually appropriate text.
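One way to picture this difference is through the attention mask, which decides which positions a token is allowed to look at. The sketch below is a simplified illustration, not code from either model: a bidirectional (BERT-style) mask lets every token see the whole sentence, while a causal (GPT-style) mask lets each token see only itself and the tokens before it.

```python
def attention_mask(seq_len, causal):
    """mask[i][j] is True when the token at position i may attend to position j."""
    return [[(j <= i) if causal else True for j in range(seq_len)] for i in range(seq_len)]


def visible_context(tokens, position, causal):
    """Other tokens that the token at `position` can use as context."""
    mask = attention_mask(len(tokens), causal)
    return [tok for j, tok in enumerate(tokens) if mask[position][j] and j != position]


tokens = ["the", "cat", "sat", "on", "the", "mat"]
print(visible_context(tokens, 2, causal=False))  # ['the', 'cat', 'on', 'the', 'mat'] (BERT-style)
print(visible_context(tokens, 2, causal=True))   # ['the', 'cat'] (GPT-style)
```

For the word “sat”, the bidirectional view includes “on the mat”, while the causal view stops at “the cat”, which is exactly the information GPT has when predicting the next word.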

Text classification and clustering

Text classification assigns predefined categories (labels) to text. A model is trained on labeled examples, often represented with bag-of-words, TF-IDF, or n-gram features, and then predicts the category of unseen text. For example, a model trained on emails labeled “spam” or “not spam” can flag new incoming messages.
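As a concrete example, here is a minimal multinomial naive Bayes classifier, one common baseline for text classification, written from scratch over bag-of-words counts with add-one (Laplace) smoothing. The four training sentences and the spam/ham labels are made up for illustration; for real projects, scikit-learn's MultinomialNB provides a tested implementation.

```python
import math
from collections import Counter, defaultdict

# Made-up labeled training data ("ham" = not spam).
train = [
    ("win a free prize now", "spam"),
    ("claim your free reward", "spam"),
    ("meeting moved to monday", "ham"),
    ("lunch with the team on monday", "ham"),
]


def train_nb(examples):
    """Collect per-class word counts, class counts, and the vocabulary."""
    word_counts = defaultdict(Counter)
    class_counts = Counter()
    vocab = set()
    for text, label in examples:
        tokens = text.split()
        class_counts[label] += 1
        word_counts[label].update(tokens)
        vocab.update(tokens)
    return word_counts, class_counts, vocab


def predict_nb(text, word_counts, class_counts, vocab):
    """Return the label with the highest log-probability for the text."""
    total_docs = sum(class_counts.values())
    best_label, best_score = None, float("-inf")
    for label in class_counts:
        # Log prior: how common the class is in the training data.
        score = math.log(class_counts[label] / total_docs)
        total_words = sum(word_counts[label].values())
        for token in text.split():
            if token not in vocab:
                continue  # ignore words never seen in training
            # Add-one smoothing so an unseen (class, word) pair doesn't zero out the score.
            count = word_counts[label][token]
            score += math.log((count + 1) / (total_words + len(vocab)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label


model = train_nb(train)
print(predict_nb("free prize inside", *model))       # spam
print(predict_nb("team meeting on monday", *model))  # ham
```

Working in log-probabilities avoids numerical underflow when many small word probabilities are multiplied together.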

Clustering, by contrast, groups similar items together without predefined labels. A clustering algorithm compares the features of each item, such as its TF-IDF vector or embedding, and puts similar items in the same group. For example, marketing teams can use clustering to identify consumer groups based on demographics and show more relevant ads to drive growth.
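Below is a sketch of k-means, a widely used clustering algorithm, applied to bag-of-words vectors of four made-up documents. It's pure Python for readability and uses a simple deterministic farthest-first initialization, an assumption made here so the result is reproducible; library versions such as scikit-learn's KMeans use k-means++ initialization by default and are far faster.

```python
import math

# Made-up documents: two about sports, two about baking.
docs = ["goal match team", "team wins match", "bake bread flour", "flour sugar bake"]
vocab = sorted({word for doc in docs for word in doc.split()})
# Bag-of-words count vectors, one per document.
points = [[doc.split().count(word) for word in vocab] for doc in docs]


def euclidean(u, v):
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def farthest_first_init(points, k):
    """Start from the first point, then repeatedly add the point farthest from all chosen centroids."""
    centroids = [list(points[0])]
    while len(centroids) < k:
        next_point = max(points, key=lambda p: min(euclidean(p, c) for c in centroids))
        centroids.append(list(next_point))
    return centroids


def kmeans(points, k, iterations=10):
    centroids = farthest_first_init(points, k)
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid.
        labels = [min(range(k), key=lambda c: euclidean(p, centroids[c])) for p in points]
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:
                centroids[c] = [sum(values) / len(members) for values in zip(*members)]
    return labels


print(kmeans(points, k=2))  # [0, 0, 1, 1]: sports vs. baking
```

No labels were provided: the algorithm separates the sports and baking documents purely because they share words within each group.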

Learning NLP in Python

Python is popular for NLP because of its simplicity and helpful libraries. So, whether you're just starting or have some coding experience, let’s see how to build a solid NLP foundation using Python.

Why Python for NLP?

Python has extensive libraries, such as NLTK, spaCy, and TextBlob, that provide tools for text preprocessing, tokenization, and more. Their active communities constantly add new features and fix bugs, so you can rely on up-to-date resources and online support.

In addition to libraries, Python has deep learning frameworks used in NLP, such as TensorFlow and PyTorch (along with add-on libraries like PyTorch-NLP). You can use them for text classification, question answering, and sentiment analysis.

Python libraries for NLP

Some of the most used Python libraries for NLP tasks include:

- NLTK (Natural Language Toolkit): It has functions to identify named entities and create parse trees. You can use it to tag parts of speech and classify text. These capabilities make it a go-to for beginners and experienced users.

- spaCy: While NLTK is great for basic tasks such as tokenization and stopword removal, spaCy typically handles these tasks faster and is designed for production-level applications. It also excels at dependency parsing, which reveals the grammatical relationships between words in a sentence.

- Gensim: If you want to work with word embeddings like Word2Vec, Gensim is a go-to library. It can find similar words and group related ones, and it can stream large corpora that don't fit in memory.

- Transformers (Hugging Face): This library gives easy access to pre-trained models like BERT and GPT. You can use it to fine-tune these models on your own datasets for tasks like named entity recognition or generating song lyrics in your desired style.

How to Learn NLP from Scratch

NLP is a vast field, so learning it from scratch can feel challenging for beginners. The key is to start with the basics, such as text preprocessing and word embeddings, and then move on to advanced topics like deep learning.

I’ve put together a step-by-step guide to help you start your NLP journey from scratch:

Step 1: Understand the basics of text data

Since data preparation is the core of any NLP task, start by understanding how text data is structured and learning to analyze different units of text, such as sentences, paragraphs, and documents.

Step 2: Learn text preprocessing techniques

Next, learn about text preprocessing (preparing data for analysis). You’ll see how punctuation is removed, how text is converted to lowercase, and how special characters are handled. While these tasks may seem simple, the logic behind them will help you understand how NLP models process text.
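As a starting point for that practice, here is a minimal cleaning function covering the steps just mentioned (lowercasing, removing punctuation, and handling special characters), plus URL removal, which is common for social media text. The exact rules are an assumption for illustration; adjust them to your data and task.

```python
import re


def clean_text(text):
    """Lowercase, drop URLs, replace punctuation/special characters, and tidy whitespace."""
    text = text.lower()
    text = re.sub(r"https?://\S+", " ", text)  # remove URLs
    text = re.sub(r"[^a-z0-9\s]", " ", text)   # replace anything that isn't a-z, 0-9, or whitespace
    text = re.sub(r"\s+", " ", text)           # collapse runs of whitespace
    return text.strip()


print(clean_text("OMG!!! Loving #NLP & AI... see https://example.com NOW"))
# omg loving nlp ai see now
```

One design trade-off to notice: the pattern [^a-z0-9\s] also strips accented letters and emoji. That's fine for many English tasks, but it would damage text in other languages and discard emoji that can carry sentiment, so a Unicode-aware pattern is safer there.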

Once you’ve understood everything, practice with real-world examples. Find text samples from websites or social media platforms. Then, apply different cleaning methods to this text. This hands-on approach will help you see firsthand how preprocessing transforms messy data into a format ready for analysis.

As you practice, you'll understand why each step matters and how it contributes to the overall goal of preparing text for NLP tasks.

Step 3: Explore text representation methods

To work effectively with text data in NLP, you need to understand different ways to represent data. Start by learning about basic methods like bag-of-words and TF-IDF. Then, move on to advanced techniques, like word embeddings, and learn how they capture the semantic meaning of words.

Apply these methods to real-world texts like news articles or social media posts. Notice how each technique changes the way text is analyzed and affects NLP model results.

Step 4: Work on NLP tasks

Next, learn core NLP tasks such as sentiment analysis, text classification, and named entity recognition (NER). Start with sentiment analysis, which identifies the feeling a text expresses: positive, negative, or neutral.
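The simplest form of sentiment analysis is lexicon-based: count positive and negative words and compare. The sketch below does exactly that, using a tiny hypothetical word list and a basic rule that flips a word's polarity when it follows a negation such as “not”. Real projects use much larger lexicons (for example, VADER, available in NLTK as SentimentIntensityAnalyzer) or trained classifiers.

```python
import re

# Hypothetical mini-lexicon; real lexicons contain thousands of scored words.
POSITIVE = {"good", "great", "love", "excellent", "happy"}
NEGATIVE = {"bad", "terrible", "hate", "awful", "sad"}
NEGATIONS = {"not", "never", "no"}


def sentiment(text):
    """Label text positive, negative, or neutral by summing word polarities."""
    tokens = re.findall(r"[a-z']+", text.lower())
    score = 0
    for i, token in enumerate(tokens):
        if token in POSITIVE:
            polarity = 1
        elif token in NEGATIVE:
            polarity = -1
        else:
            continue
        # Flip polarity when the previous word is a negation ("not good").
        if i > 0 and tokens[i - 1] in NEGATIONS:
            polarity = -polarity
        score += polarity
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


print(sentiment("I love this course, the examples are great!"))  # positive
print(sentiment("This tutorial is not good."))                    # negative
print(sentiment("The class starts at nine."))                     # neutral
```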

To practice these concepts, try DataCamp's project Who's Tweeting? Trump or Trudeau. After finishing it, you'll be able to analyze tweets and classify them by who wrote them.

Then, work on classification and learn to sort text into different categories.

Step 5: Learn advanced NLP topics

Now, move on to advanced topics like deep learning, language models, and transfer learning. Building models from scratch can be expensive and time-consuming, which is why you should learn to use pre-trained models like BERT.

Fine-tune these models to summarize articles, answer questions based on text, and classify articles. Fine-tuning adapts a general-purpose model to your specific task, and working through these real-world examples will show you how to get better results on specific types of text.

An Example Learning Plan for NLP

Now that you know the steps, follow this weekly plan to start learning:

| Week | Focus area | Learning objectives |
|---|---|---|
| Week 1 | NLP concepts | Learn about syntax, semantics, pragmatics, and basic text representations (strings, lists, dictionaries, sets). |
| Week 2 | Text representation | Learn about text representation methods and word embedding techniques like Word2Vec or GloVe. |
| Week 3 | NLP tasks | Apply your knowledge to sentiment analysis, text classification, and NER. Get familiar with Python NLP libraries. |
| Week 4 | Study language models | Learn about language models and fine-tune BERT for text summarization and question answering. |
| Week 5 | Build an NLP project | Implement an NLP project (e.g., a chatbot or sentiment analysis tool). |

Alternatively, you can follow DataCamp’s NLP in Python skill track, which contains several courses to take you from beginner to advanced.

Best Resources for Learning NLP

Whether you want to learn basics or advanced concepts, there are many resources, from online courses to YouTube tutorials, to get you off to a strong start. Here are my top picks:

Online courses

Online courses let you keep learning at your own pace throughout your career. DataCamp offers several courses that cover NLP in depth:

- For NLP basics: Introduction to Natural Language Processing

- For production-level applications with NLP: Advanced NLP with spaCy

- For training models with spaCy: Natural Language Processing with spaCy

- Discover basic tools for NLP in R: Introduction to Natural Language Processing in R

- Use Python to process text into a format suitable for machine learning: Feature Engineering for NLP in Python

Books and textbooks

Books and textbooks are great for learning practical NLP problems and solutions. They come in handy when you're working on lengthy projects and need to grasp new concepts.

You can read these books to become proficient in NLP concepts:

- For a comprehensive reference: Speech and Language Processing

- For hands-on NLP in Python: Natural Language Processing with Python

YouTube channels and tutorials

When it comes to self-directed learning, YouTube tutorials are my go-to picks. Some great channels are dedicated entirely to NLP and its implementations, and you can practice along with them.

Here are some of my best picks for you:

- For basics: Natural Language Processing (NLP) playlist

- For word embeddings: A Complete Overview of Word Embeddings

- For transformer-based models: What is BERT and How Does It Work

- For transformers basics: Transformers For Beginners

Practice platforms

If you prefer learning by doing, try platforms like Kaggle and Hugging Face. They offer thousands of datasets for working on real-life use cases. Some recruiters may even ask you to complete an NLP task on Kaggle, so practicing on these platforms is worth your time.

Check out the following resources:

- For simple datasets: Best 25 Datasets for NLP Projects

- For complex datasets: IMDB Datasets at Hugging Face

- For customer reviews: Amazon Reviews for Sentiment Analysis

Tips for Mastering NLP

Mastering NLP takes consistent effort and a hands-on approach. I've learned a lot on my NLP journey, and I'd love to share some tips that have helped me along the way. Regular, consistent practice is a great way to keep advancing, but here are some key pieces of advice to follow if you want to progress fast:

- Practice regularly: Keep practicing to understand the broad spectrum of NLP concepts and tasks. In addition, try to incorporate NLP exercises into your routine, whether through coding challenges or working on text-based datasets.

- Join study groups or forums: I've always benefited from online communities when it comes to tech projects. They bring beginners, data practitioners, researchers, and AI experts together in one place, so join forums where you can find solutions to any NLP-related problems.

- Work on real NLP projects: To stand out, you must turn concepts like word embedding and model validation into actual software. Start your own projects or contribute to open-source initiatives to gain practical experience and build a portfolio.

- Stay curious and keep learning: NLP is evolving rapidly, so make it a habit to read the latest research papers, follow thought leaders, and take advanced courses to stay ahead of the curve.

Final Thoughts

Whether you're a computer science (CS) graduate or someone with years of experience, mastering NLP can land you specialized roles.

But remember, NLP tasks vary widely. What works for one problem may not work for another, so you must adapt your approach to the specific challenges and data involved. That's something you learn by putting theoretical knowledge into practice.

To start learning NLP from scratch today, check out our Natural Language Processing in Python skill track.


FAQs

Is coding required for NLP?

Yes, some coding is required for NLP, but you don't need to be an expert. A basic understanding of Python is sufficient to work with NLP tools and libraries. You'll also encounter concepts from machine learning, deep learning, and statistics, which are integral to NLP.

How much can I earn with NLP skills?

According to ZipRecruiter, NLP professionals earn an average salary of around $122,738 per year, though actual pay varies with experience and location.

Is NLP part of AI or ML?

NLP is a subset of AI that relies heavily on machine learning (ML) techniques. It enables computers to understand and process human language.

Can I learn NLP without machine learning?

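Not in practice. Some simple NLP techniques, such as rule-based pattern matching with regular expressions, don't require machine learning, but most modern NLP tasks depend on ML algorithms to process and analyze language data effectively. Learning ML is essential if you want to go beyond the basics.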

I'm a content strategist who loves simplifying complex topics. I’ve helped companies like Splunk, Hackernoon, and Tiiny Host create engaging and informative content for their audiences.