
Making sure AI is used responsibly is not just a nice goal but a key priority. It’s essential that AI is created, deployed, and used in line with responsible AI (RAI) principles to help reduce risks in society across all sectors.

As AI advances rapidly, it could bring major benefits if used responsibly: helping to achieve the United Nations Sustainable Development Goals, protecting human rights, and keeping the well-being of humanity a priority.

In this blog post, I introduce responsible AI principles through a comparative study of work in this area from the literature, academia, international organizations, and industry leaders.

If you want to study this topic in more depth, make sure to check out this course on Responsible AI Practices.


What Is Responsible AI?

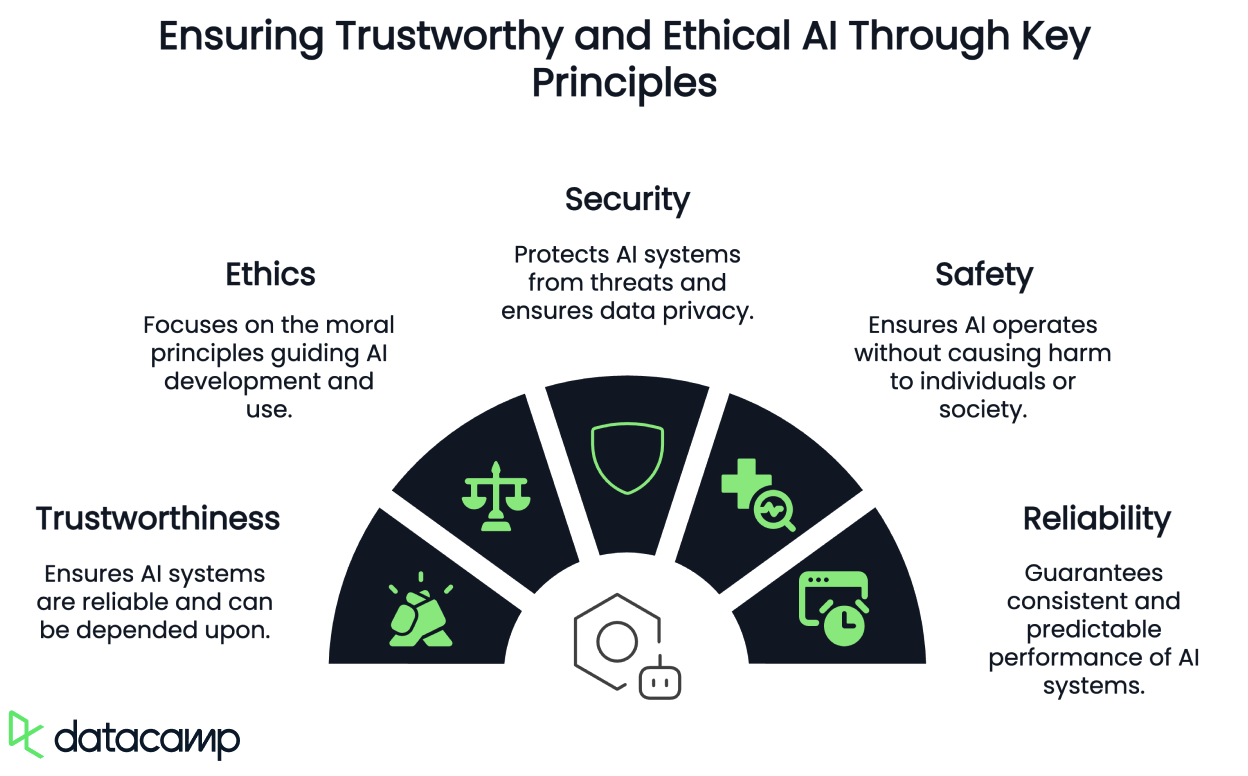

We don’t yet have a universally shared definition of “Responsible AI.” In some instances, it is referred to as trustworthy, ethical, secure, or safe AI. In others, these are principles subsumed within a broader notion of RAI, or they are treated as separate categories altogether.

The growing focus on responsible, ethical, trustworthy, secure, and safe AI stems from widespread concern over its potential negative impacts, both intended and unintended.

The Institute of Electrical and Electronics Engineers (IEEE) attributes this concern to the traditional exclusion of ethical analysis from engineering practice, where basic ethical standards related to safety, security, functionality, justice, bias, addiction, and indirect societal harms were often considered out of scope.

However, given the scalability and widespread impact of AI in every aspect of society and our daily lives, it is essential to anticipate and mitigate ethical risks as a standard practice. The current approach of releasing technology into the world without sufficient oversight, leaving others to deal with the consequences, is no longer acceptable.

Yet, shifting from ethical theory and principles to context-specific, actionable practice remains a significant barrier to the widespread adoption of systematic ethical impact analysis in software engineering.

Principles of Responsible AI

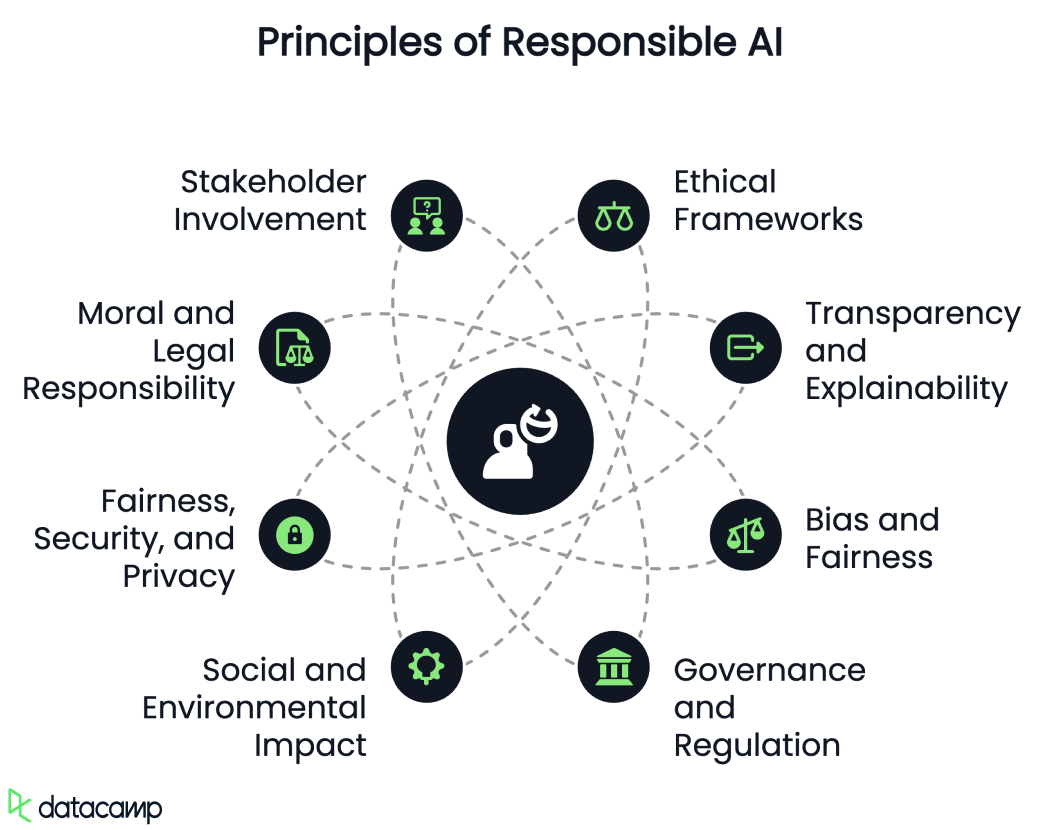

The literature discusses a growing number of RAI principles. Let’s look at each briefly.

Ethical frameworks

Responsible AI emphasizes the use of ethical frameworks to ensure accountability, responsibility, and transparency in AI systems. This includes addressing issues like privacy, autonomy, and bias.

Transparency and explainability

Transparency in AI algorithms and decision-making processes is crucial for building trust among stakeholders. Explainable AI (XAI) aims to create models that are interpretable and understandable, promoting transparency and trust.

Bias and fairness

Responsible AI seeks to identify and correct biases in AI algorithms to promote equitable decisions. This involves using diverse and inclusive datasets to prevent discriminatory outcomes.
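One common first step in identifying bias is to compare how often a model produces a favorable outcome for each group. The sketch below illustrates that idea; it is not a method prescribed by any of the frameworks discussed here. It assumes binary predictions (1 = favorable) and a group label per record, and the function names are hypothetical. The ratio of the lowest to the highest selection rate is sometimes compared against the “four-fifths rule,” a heuristic from US employment-selection guidelines.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Share of favorable (== 1) predictions within each group."""
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        favorable[group] += int(pred == 1)
    return {g: favorable[g] / totals[g] for g in totals}


def disparate_impact_ratio(rates):
    """Lowest group selection rate divided by the highest one."""
    highest = max(rates.values())
    if highest == 0:
        raise ValueError("no group received a favorable outcome")
    return min(rates.values()) / highest


# Hypothetical example: group A is favored 3 times out of 4, group B once out of 4.
preds = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
rates = selection_rates(preds, groups)
ratio = disparate_impact_ratio(rates)
print(rates)            # {'A': 0.75, 'B': 0.25}
print(round(ratio, 2))  # 0.33, well below the 0.8 four-fifths threshold
```

A low ratio does not by itself prove discrimination, and equal selection rates are only one of several competing fairness definitions; the check is best read as a signal for further investigation.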

To learn more about bias in AI, check out this podcast on Fighting for Algorithmic Justice with Dr. Joy Buolamwini.

Governance and regulation

The development of standards and frameworks for AI governance is essential to guide the responsible use of AI. This includes creating structured approaches to mitigate risks at various levels, from individual researchers to government regulations.

Social and environmental impact

Responsible AI considers the broader socio-economic and environmental impacts of AI, such as its effects on employment, inequality, and resource consumption, including its potential to create unfair digital markets or to cause environmental harm.

Fairness, security, and privacy

The European Commission's efforts to regulate AI highlight the importance of fairness, security, privacy, explainability, safety, and reproducibility as components of responsible AI.

Moral and legal responsibility

Discussions around responsible AI often involve moral responsibility, which rests on two conditions: a causal condition (the agent’s actions must have caused the outcome) and an epistemic condition (the agent must have been aware of the moral consequences of those actions). Legal frameworks also explore accountability, liability, and blameworthiness for AI systems.

Stakeholder involvement

A responsible AI framework often involves holding all stakeholders accountable, including developers, users, and potentially the AI systems themselves.

Alternative Taxonomies of Responsible AI Principles in Academia

Within academia, some of the most systematic reviews of AI ethics principles have produced somewhat different taxonomies. For instance, Jobin et al. reviewed 84 ethical guidelines and identified 11 principles:

- Transparency

- Justice and fairness

- Nonmaleficence

- Responsibility

- Privacy

- Beneficence

- Freedom and autonomy

- Trust

- Dignity

- Sustainability

- Solidarity

Naturally, they encountered a wide divergence in how these principles were interpreted and in how the recommendations suggested they be applied. They did, however, note some evidence of convergence around five core principles:

- Transparency

- Justice and fairness

- Nonmaleficence

- Responsibility

- Privacy

However, other proponents suggest that the plethora of ethical AI frameworks can be consolidated into just five meta-principles:

- Respect for autonomy

- Beneficence

- Nonmaleficence

- Justice

- Explicability

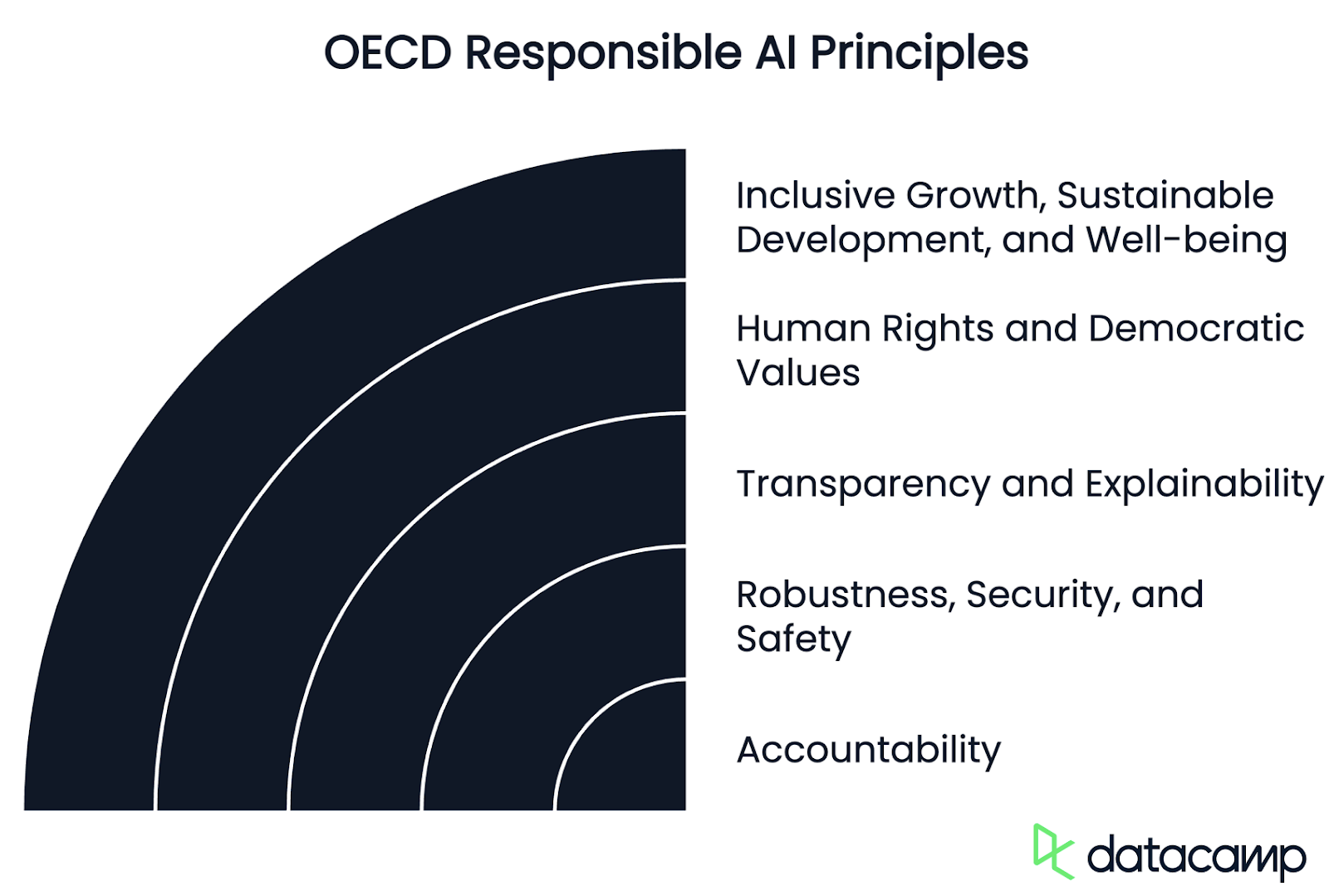

OECD Responsible AI Principles

International organizations have also actively contributed to the global discussion on how these definitions are shaped, for policy and harmonization purposes.

The Organisation for Economic Co-operation and Development (OECD)—where I serve as an AI Expert for Risks and Accountability, as well as for Incident Monitoring—has put forward a comprehensive list of principles for trustworthy AI.

These principles promote the use of AI that is innovative, trustworthy, and respectful of human rights and democratic values. Initially adopted in 2019, they were updated in May 2024 to ensure they remain robust and fit for purpose in light of new technological and policy developments.

Today, the European Union, the Council of Europe, the United States, the United Nations, and other jurisdictions use the OECD’s definitions of an AI system and of the AI system lifecycle in their legislative and regulatory frameworks and guidance.

In a nutshell, these are all values-based principles and are grouped in the following categories:

Inclusive growth, sustainable development, and well-being

AI stakeholders should proactively engage in responsible stewardship of trustworthy AI in pursuit of beneficial outcomes for people and for the planet, such as augmenting human capabilities and enhancing creativity, advancing inclusion of underrepresented populations, reducing economic, social, gender, and other inequalities, and protecting natural environments, thus invigorating inclusive growth, well-being, sustainable development, and environmental sustainability.

Human rights and democratic values, including fairness and privacy

AI actors should respect the rule of law, human rights, and democratic, human-centered values throughout the entire AI system lifecycle. These include non-discrimination and equality, freedom, dignity, autonomy of individuals, privacy and data protection, diversity, fairness, social justice, and internationally recognized labor rights.

Transparency and explainability

AI actors should commit to transparency and responsible disclosure regarding their systems. To this end, they should provide meaningful information, appropriate to the context, and consistent with the state of the art, in order to foster a general understanding of AI systems, including their capabilities and limitations.

Robustness, security, and safety

AI systems should be robust, secure and safe throughout their entire lifecycle so that, in conditions of normal use, foreseeable use or misuse, or other adverse conditions, they function appropriately and do not pose unreasonable safety and/or security risks.

Accountability

AI actors should be accountable for the proper functioning of AI systems and for upholding the above principles, based on their roles, the context, and consistent with the state of the art. To this end, AI actors should ensure traceability, including in relation to datasets, processes, and decisions made throughout the AI system lifecycle. This traceability should enable analysis of the AI system’s outputs and responses to inquiries, appropriate to the context and consistent with the state of the art.
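To make the traceability requirement more concrete, here is a minimal sketch of a decision log that ties each output to a model version and a fingerprint of the training data. The OECD principles do not prescribe any format: `DecisionRecord`, `AuditLog`, `fingerprint`, and the example values are all hypothetical, and a production system would persist records to tamper-evident storage rather than keep them in memory.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


def fingerprint(data: bytes) -> str:
    """SHA-256 digest identifying the exact dataset bytes a model was trained on."""
    return hashlib.sha256(data).hexdigest()


@dataclass(frozen=True)
class DecisionRecord:
    model_version: str   # hypothetical version label of the deployed model
    dataset_sha256: str  # fingerprint of the training data
    inputs: dict         # features (or references to them) behind the decision
    output: str          # what the system decided
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


class AuditLog:
    """Append-only, in-memory log of serialized decision records."""

    def __init__(self) -> None:
        self._entries: list[str] = []

    def append(self, record: DecisionRecord) -> None:
        # Store a JSON snapshot so later changes to Python objects cannot alter the log.
        self._entries.append(json.dumps(asdict(record), sort_keys=True))

    def find(self, **criteria) -> list[dict]:
        """Return every record whose fields equal all the given values."""
        records = (json.loads(entry) for entry in self._entries)
        return [r for r in records if all(r.get(k) == v for k, v in criteria.items())]


# Hypothetical usage: trace decisions back to their model version and data.
data_hash = fingerprint(b"age,income,label\n34,52000,1\n")
log = AuditLog()
log.append(DecisionRecord("credit-model-1.2", data_hash, {"applicant_id": 17}, "approved"))
log.append(DecisionRecord("credit-model-1.3", data_hash, {"applicant_id": 18}, "denied"))
print(len(log.find(model_version="credit-model-1.2")))  # 1
```

Keeping the dataset fingerprint on every record means that, when an output is questioned, one can check which data and which model version produced it, which is the kind of analysis of outputs and responses to inquiries the principle calls for.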

ISO Responsible AI Principles

For its part, the International Organization for Standardization (ISO) identifies three key areas to consider for an AI system to be trustworthy:

- Feeding it good, diverse data

- Ensuring algorithms can handle that diversity

- Testing the resulting software for any mislabelling or poor correlations
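As a rough illustration of the data checks behind these areas, the sketch below flags rows with missing required fields, labels outside an expected set (a simple proxy for mislabelling), and exact duplicates. It is not an ISO-specified procedure; the function name, field names, and label set are hypothetical.

```python
def probe_rows(rows, required, allowed_labels, label_key="label"):
    """Return indices of rows with missing fields, unexpected labels, or exact duplicates."""
    issues = {"missing": [], "bad_label": [], "duplicate": []}
    seen = set()
    for i, row in enumerate(rows):
        if any(row.get(key) in (None, "") for key in required):
            issues["missing"].append(i)
        if row.get(label_key) not in allowed_labels:
            issues["bad_label"].append(i)
        signature = tuple(sorted(row.items()))  # order-independent row identity
        if signature in seen:
            issues["duplicate"].append(i)
        seen.add(signature)
    return issues


# Hypothetical loan dataset with one gap, one mistyped label, and one repeated row.
rows = [
    {"age": 34, "income": 52000, "label": "approve"},
    {"age": None, "income": 41000, "label": "deny"},
    {"age": 29, "income": 38000, "label": "aprove"},
    {"age": 34, "income": 52000, "label": "approve"},
]
report = probe_rows(rows, required=["age", "income", "label"], allowed_labels={"approve", "deny"})
print(report)  # {'missing': [1], 'bad_label': [2], 'duplicate': [3]}
```

Checks like these only catch mechanical problems; labels that are valid but wrong, or skews introduced by how data was collected, still need human review.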

The organization provides the following guidelines on achieving this:

- Design for humans by using a diverse set of users and use-case scenarios and incorporate this feedback before and throughout the AI’s lifecycle.

- Use multiple metrics to evaluate training and monitoring, including user surveys, overall system performance indicators, and false positive and negative rates sliced across different subgroups.

- Probe the raw data for mistakes (e.g., missing or unaligned values, incorrect labels, sampling errors), training skews (e.g., data collection methods or inherent social biases), and redundancies, all crucial for ensuring RAI principles of fairness, equity, and accuracy in AI systems.

- Understand the limitations of any given model to mitigate bias, improve generalization, ensure reliable performance in kinetic scenarios, and communicate these to developers and users.

- Continually test your model against responsible AI principles, taking real-world performance and user feedback into account, to improve both short- and long-term solutions to the issues found.
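The guideline on slicing false positive and false negative rates across subgroups can be sketched in a few lines. This assumes binary ground-truth labels and predictions (1 = positive) plus a subgroup label per record; the function name and data are hypothetical and not taken from any ISO document.

```python
from collections import defaultdict


def sliced_error_rates(y_true, y_pred, groups):
    """Per-group false positive rate (FPR) and false negative rate (FNR) for 0/1 labels."""
    counts = defaultdict(lambda: {"tp": 0, "fp": 0, "tn": 0, "fn": 0})
    for truth, pred, group in zip(y_true, y_pred, groups):
        c = counts[group]
        if truth == 1:
            c["tp" if pred == 1 else "fn"] += 1
        else:
            c["fp" if pred == 1 else "tn"] += 1

    rates = {}
    for group, c in counts.items():
        negatives = c["fp"] + c["tn"]  # actual negatives in this group
        positives = c["tp"] + c["fn"]  # actual positives in this group
        rates[group] = {
            # None when the group has no actual negatives or positives to measure
            "fpr": c["fp"] / negatives if negatives else None,
            "fnr": c["fn"] / positives if positives else None,
        }
    return rates


# Hypothetical data: overall accuracy is 75%, but every error falls in group B.
y_true = [0, 0, 1, 1, 0, 0, 1, 1]
y_pred = [0, 0, 1, 1, 1, 0, 0, 1]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
result = sliced_error_rates(y_true, y_pred, groups)
print(result)  # {'A': {'fpr': 0.0, 'fnr': 0.0}, 'B': {'fpr': 0.5, 'fnr': 0.5}}
```

A single aggregate metric would report 75% accuracy here and hide the fact that one subgroup bears all of the errors, which is why the guideline asks for rates broken down by subgroup.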

Industry Leaders’ Take on Responsible AI

Looking at concrete examples from industry leaders, we observe distinct contrasts in nomenclature and taxonomy, with varying understandings of RAI, trustworthy AI, ethical AI, and other terms coined for their own operations or applications.

Microsoft

Microsoft, for instance, stands by the following Responsible AI Principles:

- Fairness

- Reliability and safety

- Privacy and security

- Inclusiveness

- Transparency and accountability

Apple

Apple, on the other hand, states that its AI is designed with its core values in mind and built on a foundation of groundbreaking privacy innovations. It has created a set of Responsible AI principles to guide how it develops AI tools, as well as the models that underpin them:

- Empower users with intelligent tools: Identifying areas where AI can be used responsibly to create tools for addressing specific user needs, respecting how users choose to use these tools to accomplish their goals.

- Represent users: Building deeply personal products with the goal of representing users around the globe authentically to avoid perpetuating stereotypes and systemic biases across AI tools and models.

- Design with care: Taking precautions at every stage of the process, including design, model training, feature development, and quality evaluation, to identify how AI tools may be misused or lead to potential harm.

- Protect privacy: Achieving this through powerful on-device processing and groundbreaking infrastructure, and not using users’ private personal data or user interactions when training its foundation models.

Nvidia

Nvidia calls it trustworthy AI. It believes AI should respect privacy and data protection regulations, operate securely and safely, function in a transparent and accountable manner, and avoid unwanted biases and discrimination, and it sets out four core principles:

- Privacy

- Safety and Security

- Transparency

- Nondiscrimination

Google

Somewhat differently, Google sets out “Objectives for AI Applications.” According to these, AI should:

- Be socially beneficial.

- Avoid creating or reinforcing unfair bias.

- Be built and tested for safety.

- Be accountable to people.

- Incorporate privacy design principles.

- Uphold high standards of scientific excellence.

- Be made available for use in accordance with these principles to limit potentially harmful or abusive applications.

Conclusion

There is no consensus yet on what exactly constitutes responsible, trustworthy, ethical, safe, or secure AI, nor on whether these are stand-alone terms or can converge into one. However, it is encouraging that these terms share common elements, at least in theory, built around the same core principles across academia, international organizations, and industry.

Broadly speaking, we can say that RAI is an emerging discipline aimed at ensuring that the development, deployment, and usage of artificial intelligence are done in a human-centered manner that aligns with fundamental human rights in practice, both ethically and legally.

Jimena Sofía Viveros Álvarez is a distinguished international lawyer and scholar, a trusted expert advisor on AI, and peace and security. She is the founder, managing director, and CEO of IQuilibriumAI, a consultancy firm specializing in AI and peace, security, humanitarian, environmental, and rule of law issues. She is also the president of the HumAIne Foundation and the founder of the Global South Synergies and Resilience Platform, serving in various capacities across these fields to bolster scalable and expansive meaningful impact, with a special focus on the Global South and vulnerable communities.