
If you’ve followed the rise of cloud computing, you’ve probably heard of Kubernetes. In modern application development, it’s a key tool for managing containerized applications across different infrastructure setups.

In this introductory article, we’ll provide an overview of Kubernetes and its components, as well as a comprehensive tutorial on implementing it locally. If you’re looking for a hands-on learning experience to complement this tutorial, check out our Introduction to Kubernetes course.

What is Kubernetes?

Kubernetes is an open-source container orchestration platform that automates the deployment, scaling, and management of containerized applications. Originally developed by Google, it has become the de-facto standard for running containers at scale.

It abstracts away the complexity of managing individual containers and allows developers to focus on building and deploying their applications.

Why Use Kubernetes?

Here are some key benefits of using Kubernetes:

- Container orchestration: Automates the distribution and scheduling of containers across a cluster.

- Scalability and self-healing: Simplifies horizontal scaling (replicating containers) and vertical scaling (adjusting resource allocation), and automatically restarts or replaces containers that fail.

- High availability: Ensures containers (and thus your services) remain operational even if some nodes fail.

- Portability: Abstracts the underlying infrastructure, making it straightforward to run both on-premises and on different cloud providers.

- Efficient resource utilization: Places containers on nodes according to their resource requests and limits, optimizing resource allocation and reducing costs.

Kubernetes is useful in varied applications like:

- DevOps: Automates the deployment, scaling, and management of containerized applications.

- Microservices: Breaks down large monolithic applications into smaller, manageable services for improved agility and scalability.

- Big data: Simplifies the deployment and management of complex big data systems using containers.

- Edge computing: Enables running Kubernetes on edge devices to process and analyze data closer to where it's generated, reducing latency and improving performance.

- Continuous delivery: Integrates with tools like Jenkins and GitLab for automated continuous delivery pipelines.

- Machine learning: Provides a scalable platform for training and deploying machine learning models, handling large datasets and complex computations.

Key Concepts in Kubernetes

To understand how Kubernetes works, you’ll need to have a good understanding of its key concepts.

The four main ones are:

- Clusters

- Pods

- Namespaces

- Operators

We’ll explore each of them below.

Clusters

A Kubernetes cluster is a group of nodes, the individual machines (physical or virtual) that run the Kubernetes software. Together, they form the environment in which your applications and services run.

In a typical setup, a cluster has a control plane node (historically called the master node) and multiple worker nodes. The control plane is responsible for coordinating all activities within the cluster, while the worker nodes run the containers.

Pods

Pods are the smallest unit of deployment in Kubernetes. They can hold one or more containers, along with shared storage resources and networking settings.

Each pod has its own unique IP address and can communicate with other pods in the same cluster through this address. This allows for efficient communication between different components of an application.

Pods come in two forms, single-container and multi-container, and each has its own use case.

- Single-container pods: The most common type of pod, where a single container is running inside. This is useful for simple applications or microservices that only require one container.

- Multi-container pods: Multiple containers are co-located and run together. This can be beneficial for complex applications where different containers need to communicate with each other and share resources.
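
As a rough illustration of the multi-container case, the sketch below writes a Pod manifest in which two containers share a scratch volume. The Pod name, container names, file name, and the busybox image are all assumptions chosen for this example, not something Kubernetes prescribes.

```shell
# Write a hypothetical two-container Pod manifest to the current directory.
cat > shared-volume-pod.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: shared-volume-pod          # illustrative name
spec:
  volumes:
    - name: shared-data
      emptyDir: {}                 # scratch volume that lives as long as the Pod
  containers:
    - name: writer                 # writes a timestamp every five seconds
      image: busybox:1.36
      command: ["sh", "-c", "while true; do date > /data/now.txt; sleep 5; done"]
      volumeMounts:
        - name: shared-data
          mountPath: /data
    - name: reader                 # prints whatever the writer last stored
      image: busybox:1.36
      command: ["sh", "-c", "while true; do cat /data/now.txt 2>/dev/null; sleep 5; done"]
      volumeMounts:
        - name: shared-data
          mountPath: /data
EOF

# Apply the manifest only when kubectl is available; otherwise just keep the file.
if command -v kubectl >/dev/null 2>&1; then
  kubectl apply -f shared-volume-pod.yaml || echo "kubectl could not reach a cluster"
fi
```

Once the Pod is running, `kubectl logs shared-volume-pod -c reader` should show the timestamps written by the other container, and `kubectl get pods -o wide` lists the Pod's IP address.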

Namespaces

Namespaces provide a way to logically partition resources within a single cluster. This allows for better organization and management of resources, as well as tighter security controls.

Namespaces can also be used to manage different environments, such as development, staging, and production. This ensures that resources are isolated and not affected by changes made in other environments.
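
One possible way to set up per-environment namespaces is to declare them in a manifest. The sketch below assumes two environments named development and staging and a file called environments.yaml; all three names are placeholders for this example.

```shell
# Write a manifest that declares two hypothetical environment namespaces.
cat > environments.yaml <<'EOF'
apiVersion: v1
kind: Namespace
metadata:
  name: development
---
apiVersion: v1
kind: Namespace
metadata:
  name: staging
EOF

# Create the namespaces only when kubectl is available.
if command -v kubectl >/dev/null 2>&1; then
  kubectl apply -f environments.yaml || echo "kubectl could not reach a cluster"
fi
```

Resources can then be placed in one of these namespaces by adding `--namespace development` to a kubectl command or by setting `metadata.namespace` in a manifest.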

To view the namespaces in your cluster, you can use this command:

    kubectl get namespaces

To switch between namespaces, use the following:

    kubectl config set-context --current --namespace <namespace name>

Operators

Operators are software extensions that help automate the management of Kubernetes resources. They use custom controllers and API extensions to manage complex tasks more efficiently and automatically.

Some popular operators include:

- Prometheus Operator for monitoring

- etcd operator for managing etcd clusters

Using operators can greatly simplify the management of applications and resources within your cluster. With their ability to automate tasks and provide advanced features, they are becoming increasingly popular among Kubernetes users.
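
To make the "API extensions" part more concrete, here is a minimal sketch of a CustomResourceDefinition, the mechanism operators typically use to add new resource types. The Backup kind, the example.com group, and the schedule field are hypothetical and exist only for illustration. A real operator would pair a definition like this with a controller that acts on the custom resources.

```shell
# Write a hypothetical CustomResourceDefinition that adds a "Backup" resource type.
cat > backup-crd.yaml <<'EOF'
apiVersion: apiextensions.k8s.io/v1
kind: CustomResourceDefinition
metadata:
  name: backups.example.com        # must be <plural>.<group>
spec:
  group: example.com
  scope: Namespaced
  names:
    plural: backups
    singular: backup
    kind: Backup
  versions:
    - name: v1
      served: true
      storage: true
      schema:
        openAPIV3Schema:
          type: object
          properties:
            spec:
              type: object
              properties:
                schedule:
                  type: string     # illustrative field, e.g. a cron expression
EOF

# Register the new resource type only when kubectl is available.
if command -v kubectl >/dev/null 2>&1; then
  kubectl apply -f backup-crd.yaml || echo "kubectl could not reach a cluster"
fi
```

After the definition is registered, `kubectl get backups` becomes a valid command, although nothing happens to Backup objects until a controller watches them.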

Core Components of Kubernetes

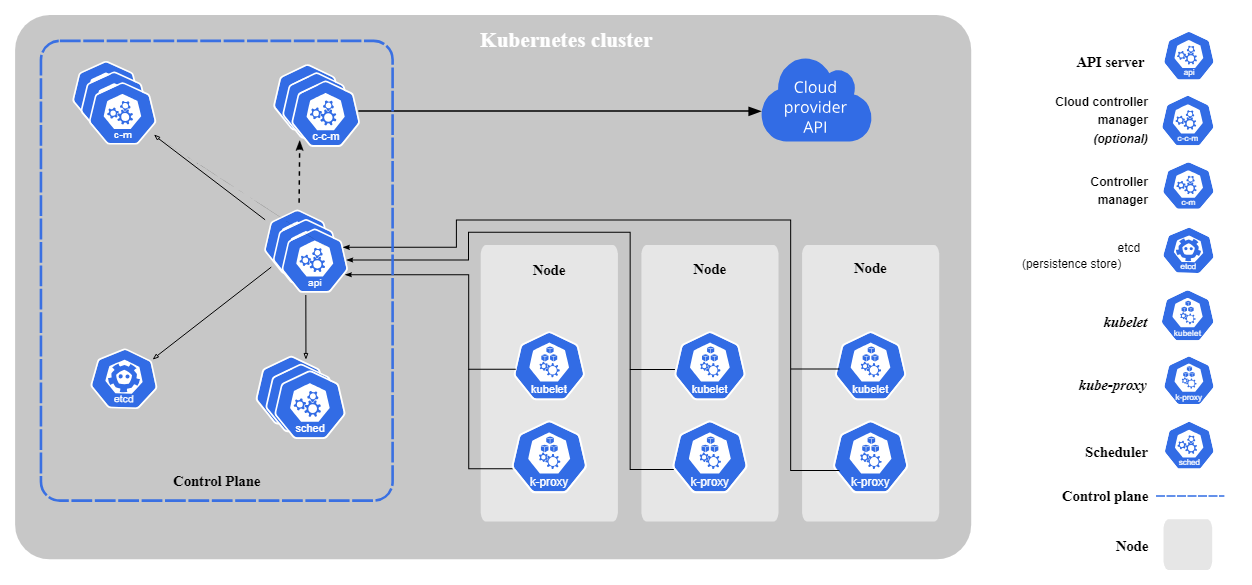

In most Kubernetes environments there is a set of core components.

Source: Kubernetes

Here is a list of components and what they do:

- API Server: This component acts as the central management point for all Kubernetes resources. It receives requests from users and other components, authenticates and validates them, and then updates the cluster state accordingly.

- Controller manager: The controller manager is responsible for maintaining the desired state of the cluster by constantly monitoring and reconciling changes made to objects within the cluster.

- Scheduler: This component assigns newly created Pods to worker nodes based on resource availability.

- etcd: This is a distributed key-value store that serves as the primary datastore for Kubernetes. It stores all cluster data and ensures data consistency and availability.

- kubelet: This agent runs on each node in the cluster and is responsible for managing containers, ensuring they are running according to their specified configurations.

- kube-proxy: This component runs on each node and maintains the network rules that route traffic to the correct Pods.
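
On many local clusters you can see several of these components running as Pods. The sketch below assumes they live in the kube-system namespace, which is the usual location but not guaranteed, and managed cloud services generally hide the control plane Pods altogether.

```shell
# Namespace where most clusters run their system components (an assumption
# that holds for typical Minikube and Kind clusters).
NAMESPACE="kube-system"

# List the system Pods together with the node each one runs on.
if command -v kubectl >/dev/null 2>&1; then
  kubectl get pods --namespace "$NAMESPACE" -o wide || echo "kubectl could not reach a cluster"
fi
```

On a Minikube cluster, the output typically includes Pods for the API server, controller manager, scheduler, etcd, and kube-proxy. The kubelet does not appear because it runs as a process on the node rather than as a Pod.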

How Kubernetes Works

As we’ve established, Kubernetes automates the deployment, scaling, and management of containerized applications across clusters. It ensures high availability, efficient resource use, and self-healing without manual intervention.

Instead of managing individual containers, Kubernetes groups them into Pods and distributes them across worker nodes, which communicate with the control plane to maintain the system's desired state.

Here’s how Kubernetes works, in brief:

- Define application deployment: You specify the desired state in a YAML file, including replicas, resource limits, and networking rules.

- Schedule workloads: The Scheduler assigns Pods to worker nodes based on resource availability.

- Manage cluster state: The Controller Manager ensures the system maintains the correct number of Pods, replacing any that fail.

- Handle networking: Kubernetes manages communication between services and external access via Services and Ingress controllers.

- Scale and self-heal: Kubernetes adjusts the number of running Pods based on demand and restarts failed containers automatically.

By handling infrastructure complexity, Kubernetes enables teams to focus on building applications rather than managing deployments, making it essential for scalable, resilient workloads across industries.
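
The idea of maintaining a desired state can be sketched as a toy loop. This is not how Kubernetes is implemented; it is a simplified shell simulation with made-up numbers that compares a desired replica count with an observed one and adjusts until they match.

```shell
desired=3   # replicas requested in the manifest
actual=1    # Pods currently running (pretend two have failed)
steps=0     # number of corrective actions taken

# Keep adjusting until the observed state matches the desired state.
while [ "$actual" -ne "$desired" ]; do
  if [ "$actual" -lt "$desired" ]; then
    actual=$((actual + 1))
    echo "started a Pod: $actual/$desired running"
  else
    actual=$((actual - 1))
    echo "stopped a Pod: $actual/$desired running"
  fi
  steps=$((steps + 1))
done

echo "reconciled after $steps steps"
```

Real controllers follow the same observe, compare, and act pattern, but they watch the API server for changes instead of looping over local variables.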

Common Terminology and Concepts

Let’s look at some of the key terms you need to know and their definitions:

- YAML manifests: These are configuration files that define the desired state of your application or infrastructure. They can be used to create, update, and delete resources in Kubernetes.

- StatefulSets vs. Deployments: These are two types of controllers in Kubernetes that manage the lifecycle of pods. Deployments are typically used for stateless applications, while StatefulSets are used for stateful applications.

- Services: These provide a stable IP address and DNS name for accessing your application within the cluster. They also enable communication between different pods.

- ConfigMaps: These are used to store configuration data in key-value pairs that can be accessed by your application.

- Secrets: Similar to ConfigMaps, these are used to store sensitive information such as passwords or API keys.
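
As a sketch of what two of these objects look like in a YAML manifest, the example below writes a ConfigMap and a Secret into one file. The object names, keys, and values are placeholders invented for this example.

```shell
# Write a hypothetical ConfigMap and Secret into a single multi-document manifest.
cat > app-config.yaml <<'EOF'
apiVersion: v1
kind: ConfigMap
metadata:
  name: app-config
data:
  LOG_LEVEL: "info"                # non-sensitive setting
---
apiVersion: v1
kind: Secret
metadata:
  name: app-credentials
type: Opaque
stringData:                        # written as plain text, stored base64-encoded
  API_KEY: "replace-me"
EOF

# Create both objects only when kubectl is available.
if command -v kubectl >/dev/null 2>&1; then
  kubectl apply -f app-config.yaml || echo "kubectl could not reach a cluster"
fi
```

A Pod can then consume both objects as environment variables or mounted files. Note that base64 encoding is not encryption, so a file like this should not be committed with real credentials in it.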

Getting Started with Kubernetes

Working with Kubernetes can seem daunting, especially when it comes to understanding the various terminology and concepts. However, once you have a solid understanding of these essential terms, navigating through the platform will become much easier.

Let’s start by looking at some tools used with Kubernetes.

Tools for beginners

As a beginner, deploying applications to the cloud right away can be too much to handle. Instead, you can use one of several common tools to run Kubernetes locally.

Here are two of the most commonly used tools:

1. Minikube

Minikube is a lightweight Kubernetes implementation that can run on a single host machine. It usually runs a single-node cluster in a virtual machine (VM) or a container on your laptop or workstation.

Why use it:

- Easy to set up and tear down.

- Great for local development and proofs-of-concept.

- Minimal resource usage compared to a full-blown cluster.

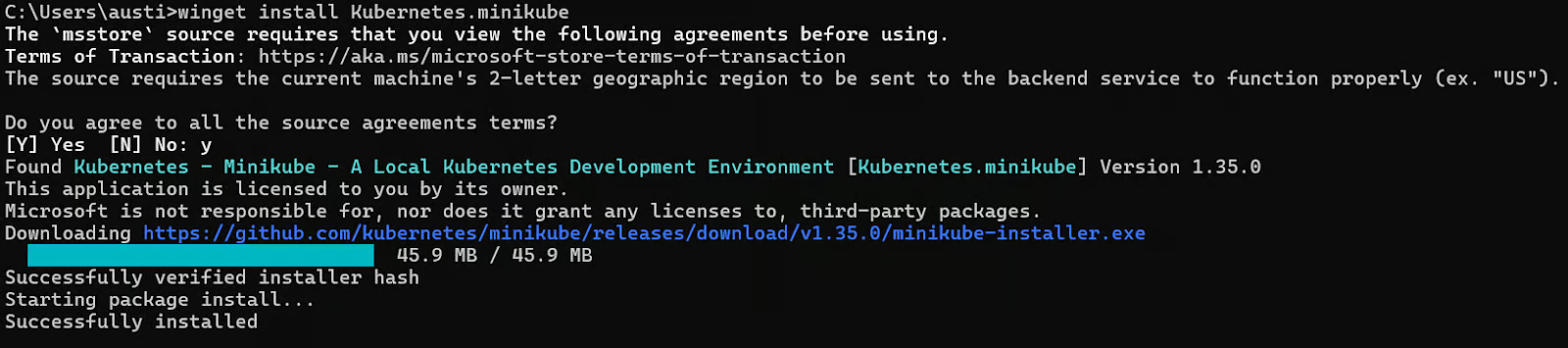

How to Install Minikube (Example on Windows):

    # Install via Windows Package Manager
    winget install Kubernetes.minikube

You should see an installation message. Agree to the terms and conditions if prompted by entering “Y”.

Next, to start up a simple cluster on minikube, use the following start command:

    # Start a single-node cluster
    minikube start

To check that the cluster node has started successfully, run the get command:

    # Verify the cluster is running
    kubectl get nodes

Note: You need to have the kubectl command-line tool installed on your local machine. If it isn't, Minikube bundles its own copy, which you can run as minikube kubectl -- get nodes.
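
Tearing the cluster down again is just as short. The helper below is a hypothetical wrapper (the function name and the RUN_TEARDOWN switch are inventions for this sketch) around three standard Minikube commands.

```shell
# Hypothetical helper: walks through the Minikube lifecycle commands in order.
minikube_teardown() {
  minikube status    # report the state of the cluster components
  minikube stop      # shut the cluster down but keep its state on disk
  minikube delete    # remove the cluster and all of its state
}

# Only run the helper when explicitly asked to, so pasting this snippet
# into a shell does not delete anything by accident.
if [ "${RUN_TEARDOWN:-no}" = "yes" ]; then
  minikube_teardown
fi
```

Setting `RUN_TEARDOWN=yes` before running the snippet, or calling `minikube_teardown` directly, executes the three commands. If you only want to pause work, `minikube stop` on its own keeps the cluster for a later `minikube start`.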

2. Kind (Kubernetes in Docker)

Kind stands for Kubernetes in Docker. It uses Docker containers as "nodes" in a Kubernetes cluster, providing a simple, container-based local cluster environment.

Why use it:

- Faster startup compared to Minikube in many scenarios.

- Easy to spin up multiple test clusters simultaneously, ideal for CI/CD pipelines.

- Often used in automated test environments due to its lower overhead.

How to Install Kind (Example on Windows):

    # Install Kind with Windows Package Manager
    winget install Kubernetes.kind

Once installed, you can create a simple cluster using the create cluster command and give it a name.

    # Create a basic cluster
    kind create cluster --name example-cluster

To check whether your Kind cluster has started, use the following get command.

    # Check running clusters
    kind get clusters

You can also interact with the cluster through the kubectl interface:

    # Interact with your Kind cluster using kubectl
    kubectl get nodes

Note: Make sure you have Docker installed and running.
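
Because Kind nodes are just containers, a multi-node test cluster is a small configuration change. The sketch below assumes a cluster named multi-node-example and a config file named kind-multi-node.yaml, both chosen only for this example.

```shell
# Write a hypothetical Kind configuration with one control plane and two workers.
cat > kind-multi-node.yaml <<'EOF'
kind: Cluster
apiVersion: kind.x-k8s.io/v1alpha4
nodes:
  - role: control-plane
  - role: worker
  - role: worker
EOF

# Create the cluster only when the kind CLI is available.
if command -v kind >/dev/null 2>&1; then
  kind create cluster --name multi-node-example --config kind-multi-node.yaml \
    || echo "kind could not create the cluster (is Docker running?)"
fi
```

Afterwards, `kubectl get nodes` should list three nodes, and `kind delete cluster --name multi-node-example` removes the cluster again.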

Cloud Providers (EKS, GKE, AKS)

When running Kubernetes on the cloud, here are some cloud providers to choose from:

1. Amazon EKS (Elastic Kubernetes Service)

Amazon EKS is a managed service that makes it easy to run Kubernetes on AWS without the need to operate your own control plane. Worker nodes can be self-managed, grouped into managed node groups, or replaced by AWS Fargate. EKS integrates with other AWS services for additional features such as load balancing, storage, auto-scaling, and monitoring.

Some additional features include:

- Offers integrations with other AWS services (e.g., IAM, CloudWatch, ECR).

- Scalability and high availability are built into the platform.

2. Google GKE (Google Kubernetes Engine)

Google GKE is a fully managed service for running Kubernetes on the Google Cloud Platform. It offers automatic scaling, self-healing capabilities, and integration with other Google Cloud services.

Some additional features include:

- Deep integration with GCP services like Cloud Logging, Cloud Monitoring, and Artifact Registry (the successor to Container Registry).

- Offers autopilot mode, which manages cluster infrastructure automatically.

3. Azure AKS (Azure Kubernetes Service)

Azure AKS is a managed Kubernetes service that is fully integrated with other Azure services like Storage, Networking, and Load Balancing. It also has built-in support for DevOps tools like Helm and Prometheus.

Some additional features include:

- Integrates with Microsoft Entra ID (formerly Azure Active Directory), Azure Monitor, Container Registry, etc.

- Offers serverless Kubernetes (Virtual Nodes) using Azure Container Instances.

Resources for learning Kubernetes

Learning Kubernetes for the first time can seem overwhelming, but there are many resources available to help you get started.

Here are some recommended resources:

- DataCamp courses and resources: Our Introduction to Kubernetes course and Containerization tutorial can be great places to start.

- Our dedicated Kubernetes starter tutorials: Created to provide you with the basics.

Kubernetes Deployment Example

To run applications in Kubernetes, you follow a structured workflow that involves setting up a cluster, deploying a containerized application, exposing it as a service, and scaling it as needed.

We’ve covered this process in detail in our Kubernetes tutorial but here’s a high-level breakdown:

Set up a Kubernetes cluster

Use Minikube to create a local cluster for testing and development. Install Minikube and start your cluster using:

    minikube start --driver=docker
    kubectl get nodes

Deploy an application

Define your application’s desired state in a Deployment YAML file. For example, deploying an Nginx web server looks like this:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: hello-deployment
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: hello
      template:
        metadata:
          labels:
            app: hello
        spec:
          containers:
            - name: hello-container
              image: nginx:latest
              ports:
                - containerPort: 80

Apply the deployment with:

    kubectl apply -f hello-deployment.yaml

Expose the application as a service

To make the application accessible, create a Service using:

    kubectl expose deployment hello-deployment --type=NodePort --port=80 --name=hello-service

Retrieve the external URL and open the app in your browser:

    minikube service hello-service

Scale and monitor the application

Kubernetes allows you to scale applications effortlessly. To increase replicas:

    kubectl scale deployment hello-deployment --replicas=3

Check running Pods:

    kubectl get pods

View logs for debugging:

    kubectl logs -f <pod-name>

Here are some ideas for further exploration in more advanced projects:

- Run streaming jobs in containers (e.g., Spark on Kubernetes, Kafka on Kubernetes).

- Use Persistent Volumes to mount external storage solutions like AWS EBS, Azure Disks, or NFS.

- Deploy more complex apps (e.g., multi-tier microservices with databases).

- Explore advanced features like Ingress controllers, Service Meshes (e.g., Istio), Helm charts for package management.
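
A natural first step toward these more advanced setups is to keep everything declarative. As a sketch, the `kubectl expose` command from this walkthrough can be written as a Service manifest instead. The file name is an assumption; the Service name, selector, and port mirror the Deployment and command used above.

```shell
# Write a Service manifest roughly equivalent to the kubectl expose command above.
cat > hello-service.yaml <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: hello-service
spec:
  type: NodePort                   # reachable on a port of each node
  selector:
    app: hello                     # matches the Pod labels in hello-deployment
  ports:
    - port: 80                     # port exposed by the Service
      targetPort: 80               # containerPort of the Nginx container
EOF

# Create or update the Service only when kubectl is available.
if command -v kubectl >/dev/null 2>&1; then
  kubectl apply -f hello-service.yaml || echo "kubectl could not reach a cluster"
fi
```

Keeping the Service in a file alongside hello-deployment.yaml means both can be version-controlled and re-applied together, which is also the starting point for packaging an application as a Helm chart.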

Conclusion

Kubernetes is a powerful platform for deploying and managing containerized applications at scale, making it an essential tool for data engineers who need elastic, reliable environments for data processing.

If you’re interested in learning more about Kubernetes, our Introduction to Kubernetes course would be the perfect place to start.

Kubernetes FAQs

Is Kubernetes the same as Docker?

No, Kubernetes is a container orchestration tool while Docker is a containerization platform. They work together to manage and deploy containers, but serve different purposes.

What are the benefits of using Kubernetes?

Kubernetes allows for easier management and deployment of containers, improves scalability and availability of applications, and supports automated updates and rollbacks.

Can Kubernetes be used in any type of environment?

Yes, Kubernetes can be used in both on-premises and cloud environments. It is highly versatile and can adapt to various infrastructure setups.

Is it difficult to learn how to use Kubernetes?

While there is a learning curve involved, there are plenty of resources available online, such as DataCamp courses and the official Kubernetes documentation and tutorials.

What is the architecture of Kubernetes?

Kubernetes follows a client-server architecture, with a control plane (often still called the master node) managing the cluster and worker nodes running the application workloads. This allows for efficient communication and management of the entire system.

I'm Austin, a blogger and tech writer with years of experience both as a data scientist and a data analyst in healthcare. Starting my tech journey with a background in biology, I now help others make the same transition through my tech blog. My passion for technology has led me to my writing contributions to dozens of SaaS companies, inspiring others and sharing my experiences.