Snowflake is a cloud-based data platform for data storage and analytics. Many traditional data systems run analytical computation and storage-related tasks, such as transferring data, on the same resources, whereas Snowflake’s architecture separates the compute load from the storage load. As data volumes grow, the ability to scale each side independently becomes increasingly valuable.

This article introduces you to Snowflake from the ground up. You don’t need any prior experience with databases or cloud computing to follow along. We’ll explain what Snowflake is, what makes it different, how it’s used, and how you can get started today. If you’re looking for a more hands-on approach, check out our Snowflake Foundations skill track.

What Is Snowflake?

Traditional on-premises databases have all their storage space, computing power, and networking equipment on the company’s premises (on-prem).

Snowflake is a cloud-native data platform that enables businesses to store, manage, analyze, and share data at scale. Snowflake lives entirely in the cloud and provides powerful tools for data warehousing, data lakes, business intelligence, and machine learning, all in one platform.

Additionally, it makes sharing data across different teams simple. Data engineers and data analysts often use SQL, a query language, for data transformation and data analysis, respectively. Since Snowflake runs on SQL, these two groups can communicate their data needs more easily.

It has excellent integration with other cloud providers and is often chosen for its unique architecture that assists with data processing efficiency.

Cloud integration

Snowflake is a complete data cloud environment, but that doesn’t mean users are locked into its ecosystem. It runs on and integrates with the three major cloud platforms:

- Amazon Web Services (AWS)

- Microsoft Azure

- Google Cloud Platform (GCP)

These integrations allow Snowflake users to easily share data from Snowflake to their existing cloud platform and vice-versa. This gives organizations flexibility to deploy Snowflake in the cloud environment they already use with minimal development.

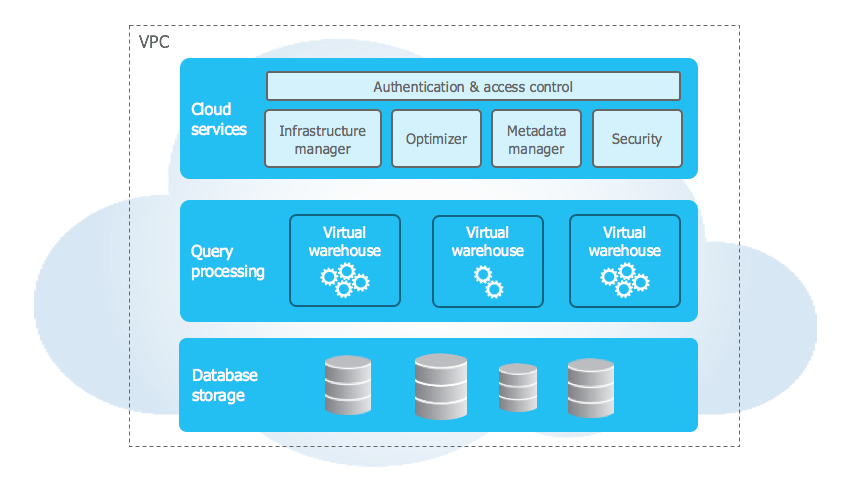

Unique architecture

Snowflake has three main layers: a service layer, a compute layer, and a storage layer. The service layer is shared: it hosts cloud functions like security, access control, and infrastructure management. What makes Snowflake distinctive is how it separates the compute and storage layers.

In many traditional systems, the same machines both store the data and run computations on it, so the two compete for CPU and memory. Snowflake’s separation of the two makes it easier to scale and more efficient. It does so through “virtual warehouses”: each team or workload can get its own cluster of compute resources that is not shared with others.

For instance, sharing data is easy because every virtual warehouse reads from the same central storage. Instead of exporting a separate copy for each team, everyone in your company queries the same stored data, and each warehouse caches what it needs locally while it runs.

Likewise, because a heavy data load in one warehouse does not take resources from another, your analysis and data processing keep their full share of CPU. This means your SQL queries and analytical pipelines run faster, as long as your virtual warehouse is big enough to handle the computation.

Here’s a summary of the benefits:

- Storage: Your data lives in a central repository. Snowflake manages how that data is organized, compressed, and partitioned, so you don’t maintain partitions or indexes yourself. Queries read only the parts of the data they need.

- Compute: Computational tasks are performed by virtual warehouses, which are clusters of compute resources (CPU and memory) that handle analytical SQL queries, loading and unloading data, and so on.

Snowflake's architecture provides several key advantages by separating storage from compute resources:

- Scalability: Easily scale compute power and storage independently. Need more storage? Add it without extra CPU costs. Need more processing power? Expand your virtual warehouse without affecting data storage.

- Concurrency: Multiple users and tasks run simultaneously without competing for resources, ensuring consistent performance for everyone.

- Cost efficiency: Only pay for what you use. Quickly scale resources up or down based on demand, reducing unnecessary costs.

Snowflake Architecture (docs.snowflake.com)

For a deep dive, check out this article on Snowflake’s architecture.

Key Features of Snowflake

Snowflake is a fully managed, cloud-native data platform built to meet the needs of modern data teams. As we’ve seen, it offers great scalability, performance, and security. It is also quite an easy platform to use with its SQL support and user-friendly interface.

Scalability

Scalability refers to how well a system can grow and handle more data or more users without losing performance. Snowflake excels here because of its separate storage and compute architecture. If I need more computing power, I can make the virtual warehouses bigger. If I need more storage, I can just keep requesting more storage space.

In traditional systems, scaling often means downtime or reconfiguring hardware. If I want more compute or more storage, it can be a lengthy process of taking out old hardware and copying data or software to new hardware. In Snowflake, it's just a click (or command) away.

Examples:

- A small startup might begin with an X-Small warehouse for light queries and occasional reports.

- As data grows, they can upgrade to a Large or X-Large warehouse without migrating data. Traditional systems may require you to move that data to larger hardware before you can access more compute power.

- For high concurrency (many users accessing the system at once), Snowflake supports multi-cluster warehouses that automatically spin up additional clusters to handle the load.

With multi-cluster warehouses, Snowflake also adds or removes compute clusters automatically based on workload demand, and any warehouse can be set to suspend when idle. This ensures:

- Fast query performance during peak usage.

- Cost savings during idle periods (compute resources pause automatically).
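To make the scaling options above concrete, here is a minimal sketch in Snowflake SQL. The warehouse name `demo_wh` is hypothetical, and the multi-cluster settings assume an edition that supports multi-cluster warehouses (Enterprise or higher), so treat this as an illustration rather than a recommended configuration.

```sql
-- Hypothetical warehouse name; adjust to your own naming scheme.
-- Start small: an X-Small warehouse that suspends after 60 idle seconds
-- and resumes automatically when a query arrives.
CREATE WAREHOUSE IF NOT EXISTS demo_wh
  WAREHOUSE_SIZE = 'XSMALL'
  AUTO_SUSPEND = 60
  AUTO_RESUME = TRUE
  INITIALLY_SUSPENDED = TRUE;

-- Scale up: resize the same warehouse; no data is moved.
ALTER WAREHOUSE demo_wh SET WAREHOUSE_SIZE = 'LARGE';

-- Scale out for concurrency (assumes multi-cluster support in your edition):
-- Snowflake adds clusters up to the maximum when queries start to queue.
ALTER WAREHOUSE demo_wh SET
  MIN_CLUSTER_COUNT = 1
  MAX_CLUSTER_COUNT = 3
  SCALING_POLICY = 'STANDARD';
```

Resizing changes how much compute each query gets, while the cluster counts control how many queries can run side by side, so the two settings address different bottlenecks.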

Performance

Snowflake is optimized for demanding data workloads, offering consistent speed whether running complex analytics, batch uploads, or live dashboards. Key technologies that drive its high performance include:

- Columnar storage: Data stored in columns rather than rows speeds up analytical queries by quickly accessing only the needed data.

- Automatic query optimization: Snowflake automatically analyzes and optimizes queries, with little manual tuning required.

- Result caching: When an identical query is run again and the underlying data has not changed, Snowflake can serve the result from its cache, reducing both computation time and cost.

- Metadata management: Intelligent tracking of data statistics and query patterns ensures efficient query execution.

Security

Snowflake prioritizes enterprise-level security with robust measures designed to protect your data:

- End-to-end encryption: Data is encrypted at rest with AES-256 and in transit with TLS.

- Role-Based Access Control (RBAC): Granular access management ensures users see only what they need, minimizing risk.

- Multi-factor authentication: Adds an extra security layer for accessing Snowflake.

- Data masking and row-level security: Fine-grained controls limit the visibility of sensitive data by role.

- Compliance support: Supports compliance with regulations and standards such as HIPAA and GDPR.

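- Time travel and fail-safe: Query or restore historical data and recover dropped objects within a retention period of up to 90 days (depending on edition and settings), protecting against errors or malicious activity. Fail-safe adds a further recovery window managed by Snowflake.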
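A few of these controls can be sketched in SQL. Everything named here (`demo_db`, `customers`, `analyst_role`, `PII_ADMIN`, `email_mask`) is hypothetical. Masking policies also assume an edition that supports them, and Time Travel only reaches as far back as the table’s retention period allows.

```sql
-- Role-based access control: a read-only role for analysts (hypothetical names).
CREATE ROLE IF NOT EXISTS analyst_role;
GRANT USAGE ON DATABASE demo_db TO ROLE analyst_role;
GRANT USAGE ON SCHEMA demo_db.public TO ROLE analyst_role;
GRANT SELECT ON ALL TABLES IN SCHEMA demo_db.public TO ROLE analyst_role;

-- Data masking: only the (hypothetical) PII_ADMIN role sees real email values.
CREATE MASKING POLICY IF NOT EXISTS email_mask AS (val STRING) RETURNS STRING ->
  CASE
    WHEN CURRENT_ROLE() IN ('PII_ADMIN') THEN val
    ELSE '*** masked ***'
  END;

ALTER TABLE demo_db.public.customers
  MODIFY COLUMN email SET MASKING POLICY email_mask;

-- Time Travel: read the table as it looked one hour ago.
SELECT * FROM demo_db.public.customers AT (OFFSET => -60 * 60);

-- Time Travel: bring back a table that was dropped within the retention period.
UNDROP TABLE demo_db.public.customers;
```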

Ease of use

Snowflake is widely praised for its user-friendly design, especially beneficial for SQL-savvy beginners. Key usability features include:

- Web-based interface: An intuitive UI simplifies database navigation, query writing, and user management.

- Standard SQL compatibility: No new language is needed. Snowflake supports familiar SQL statements right out of the box.

- Zero infrastructure management: Fully managed, with automatic scaling and optimization eliminating infrastructure setup or maintenance.

- Broad tool integration: Seamlessly connects with analytics tools (Power BI, Tableau), ETL services (Fivetran), and languages (Python, Java).

- Data marketplace: Easily explore and subscribe to third-party datasets without moving data, enhancing analytics capabilities.

Snowflake aims to make any transition from another service or platform as simple as possible. Here is a great code-along session to learn more about how easily Snowflake can be used for data analysis with languages like Python and SQL.

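| Feature | Description | Key Benefits/Examples |
|---|---|---|
| Scalability | Easily scales computing power and storage independently. | Resizable virtual warehouses; multi-cluster warehouses for high concurrency; compute pauses when idle. |
| Performance | Optimized for fast analytics and reliable workload handling. | Columnar storage; automatic query optimization; result caching; metadata management. |
| Security | Robust enterprise-grade security protecting data integrity. | End-to-end encryption; RBAC; multi-factor authentication; data masking and row-level security; time travel and fail-safe. |
| Ease of Use | User-friendly interface and familiar SQL compatibility. | Web-based interface; standard SQL; zero infrastructure management; broad tool integration; data marketplace. |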

What Is Snowflake Used For?

Snowflake has a wide variety of uses from being a data warehouse to real-time analytics. We’ll cover the fundamentals of Snowflake’s function in this section.

Data warehousing

A data warehouse is essentially a central hub for structured data collected from various sources. Snowflake is popular for this because it handles much of the technical complexity automatically. You don't need to worry about server maintenance or manually optimizing your storage, because Snowflake takes care of it for you.

- Automatic scaling means your warehouse grows or shrinks based on demand.

- Minimal effort on infrastructure—just focus on analyzing your data.

- Fast query performance without needing specialized skills.

Data lakes

Data lakes store raw, unprocessed data, which can be structured (like spreadsheets), semi-structured (such as emails or JSON files), or unstructured (audio or video). Traditionally, creating a data lake required a lot of upfront setup, but Snowflake simplifies this by natively handling diverse data formats like JSON, Parquet, ORC, Avro, and XML.

- No external processing or complicated setups.

- Combines the flexibility of data lakes with data warehouse convenience, creating what's often called a "lakehouse."
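As an illustration of the native semi-structured support described above, the sketch below stores a JSON document in a `VARIANT` column and queries it with path notation and `FLATTEN`. The table name `raw_events` and the JSON payload are made up for this example.

```sql
-- Hypothetical table: one VARIANT column holding raw JSON documents.
CREATE OR REPLACE TEMPORARY TABLE raw_events (payload VARIANT);

-- PARSE_JSON converts a JSON string into a VARIANT value.
INSERT INTO raw_events
  SELECT PARSE_JSON(
    '{"customer": {"id": 42, "country": "DE"},
      "items": [{"sku": "A1", "qty": 2}, {"sku": "B7", "qty": 1}]}'
  );

-- Path notation (:) reaches into the document, :: casts to a SQL type,
-- and LATERAL FLATTEN turns the "items" array into one row per element.
SELECT
  payload:customer.id::NUMBER      AS customer_id,
  payload:customer.country::STRING AS country,
  item.value:sku::STRING           AS sku,
  item.value:qty::NUMBER           AS qty
FROM raw_events,
  LATERAL FLATTEN(input => payload:items) AS item;
```

With the sample payload, the query returns two rows, one per item, without defining a schema for the JSON up front.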

Data sharing

Sharing data across teams or even different organizations usually involves a lot of copying and exporting. Snowflake makes this process effortless—you keep your data in your own environment and simply grant others real-time access.

- Instant and secure data sharing.

- Simplified collaboration without transferring files.
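A rough sketch of how this looks in SQL is shown below. The share, database, table, and account identifiers are all hypothetical, and creating shares typically requires an administrative role, so check your own account’s privileges first.

```sql
-- Provider side: create a share and expose one table through it.
CREATE SHARE sales_share;
GRANT USAGE ON DATABASE demo_db TO SHARE sales_share;
GRANT USAGE ON SCHEMA demo_db.public TO SHARE sales_share;
GRANT SELECT ON TABLE demo_db.public.orders TO SHARE sales_share;

-- Give a consumer account access (placeholder organization and account names).
ALTER SHARE sales_share ADD ACCOUNTS = partner_org.partner_account;

-- Consumer side (run in the other account): mount the share as a read-only database.
CREATE DATABASE shared_sales FROM SHARE provider_org.provider_account.sales_share;
```

No files are exported in this flow: the consumer queries the provider’s data in place, using its own virtual warehouse for compute.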

Real-time analytics

Real-time analytics refers to the capability to process and analyze data as it arrives, instead of waiting for periodic batch loads. Snowflake enables this by supporting low-latency data ingestion and streaming pipelines. Snowflake offers a variety of tools which support real-time analytics:

- Snowpipe: A serverless ingestion service that automatically loads files as they arrive in cloud storage (e.g., S3, Azure Blob).

- Snowpipe Streaming: A newer capability that allows applications to push data row-by-row into Snowflake via SDKs with low latency.

- Streams and Tasks: Native features to track changes and automate transformations.
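The sketch below shows how these pieces might fit together. It assumes an external stage named `events_stage` already exists and is wired to cloud storage event notifications. The table, pipe, stream, task, and warehouse names are hypothetical as well.

```sql
-- Hypothetical landing and target tables.
CREATE TABLE IF NOT EXISTS raw_events (payload VARIANT);
CREATE TABLE IF NOT EXISTS clean_events (customer_id NUMBER, event_type STRING);

-- Snowpipe: load new JSON files automatically as they arrive in the stage.
CREATE PIPE IF NOT EXISTS events_pipe
  AUTO_INGEST = TRUE
AS
  COPY INTO raw_events
  FROM @events_stage
  FILE_FORMAT = (TYPE = 'JSON');

-- Stream: track rows added to raw_events since they were last consumed.
CREATE STREAM IF NOT EXISTS raw_events_stream ON TABLE raw_events;

-- Task: every 5 minutes, transform new rows, but only if the stream has data.
CREATE TASK IF NOT EXISTS load_clean_events
  WAREHOUSE = demo_wh
  SCHEDULE = '5 MINUTE'
  WHEN SYSTEM$STREAM_HAS_DATA('RAW_EVENTS_STREAM')
AS
  INSERT INTO clean_events (customer_id, event_type)
  SELECT payload:customer_id::NUMBER, payload:event_type::STRING
  FROM raw_events_stream
  WHERE METADATA$ACTION = 'INSERT';

-- Tasks are created suspended; resume the task to start the schedule.
ALTER TASK load_clean_events RESUME;
```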

Learn more about the different data pipelines in Snowflake which make real-time analytics simple with this beginner’s guide on building pipelines in Snowflake.

Exploring Snowflake's Ecosystem

Beyond just providing powerful data pipeline tools, Snowflake has an entire analytics ecosystem. It offers AI and ML solutions while allowing developers to write in their native programming language to create data transformations.

Snowflake Cortex: Integrating AI and ML

Snowflake Cortex is Snowflake’s built-in artificial intelligence and machine learning (AI/ML) layer. It enables teams to build, run, and scale generative AI, predictive models, and natural language experiences directly where their data lives without needing to move data to external AI platforms.

Snowflake provides out-of-the-box AI functions you can run with simple SQL, such as using LLMs (like DeepSeek or Meta’s Llama) to summarize, translate, and analyze customer reviews. Cortex Analyst lets users query data in natural language, which is great for users who are less SQL-oriented. You can simply ask a question like “What were the sales like for the last quarter?” and Snowflake will query the data for you.
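For a sense of what these SQL functions look like, here is a small sketch against a hypothetical `customer_reviews` table with `review_id` and `review_text` columns. Function and model availability varies by region and account, and the model name below is only an example, so confirm what is enabled for you before relying on it.

```sql
-- Hypothetical table: customer_reviews(review_id, review_text).
SELECT
  review_id,
  -- Sentiment score for the review text
  SNOWFLAKE.CORTEX.SENTIMENT(review_text)              AS sentiment_score,
  -- Short summary of the review
  SNOWFLAKE.CORTEX.SUMMARIZE(review_text)              AS summary,
  -- Translate from German ('de') to English ('en')
  SNOWFLAKE.CORTEX.TRANSLATE(review_text, 'de', 'en')  AS review_en,
  -- Free-form prompt to an LLM; the model name is an assumption
  SNOWFLAKE.CORTEX.COMPLETE(
    'llama3.1-8b',
    CONCAT('List the main complaint in this review in one sentence: ', review_text)
  )                                                    AS main_complaint
FROM customer_reviews
LIMIT 10;
```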

Snowflake also offers an ML Studio, which provides no-code and low-code options for testing and evaluating machine learning models on live Snowflake data. For a hands-on guide to Snowflake Cortex AI, follow this guide which talks about text processing in Snowflake.

Snowpark: Empowering developers

Snowpark is a developer framework that allows you to build and run complex data logic using familiar programming languages, like Python, Java, or Scala, directly inside the Snowflake environment.

Traditionally, data engineers have had to extract data from warehouses to process it elsewhere. Snowpark flips this model by bringing the logic to the data, improving performance, security, and cost-efficiency.

Now, you can have in-database processing where your Python or Java code runs natively in Snowflake without needing a separate Spark session. Team members can use either SQL or Python on the same platform and collaborate on the same data. Additionally, instead of using connectors to bring data locally into these programming environments, everything runs in Snowflake, reducing the risk of data exposure.
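One simple way to see Python running inside Snowflake is a Python user-defined function created through SQL. This is a sketch rather than the Snowpark DataFrame API itself: the function name `normalize_email` is hypothetical, and the runtime version is an assumption that should be checked against the versions your account supports.

```sql
-- A Python UDF: the function body is Python, but it is defined and called from SQL.
CREATE OR REPLACE FUNCTION normalize_email(email STRING)
  RETURNS STRING
  LANGUAGE PYTHON
  RUNTIME_VERSION = '3.11'   -- assumption: pick a version supported in your account
  HANDLER = 'normalize'
AS
$$
def normalize(email):
    # SQL NULLs arrive as None; pass them through unchanged.
    if email is None:
        return None
    # Trim surrounding whitespace and lowercase the address.
    return email.strip().lower()
$$;

-- The Python code executes inside Snowflake, next to the data.
SELECT normalize_email('  Jane.Doe@Example.COM ') AS cleaned;
-- cleaned: jane.doe@example.com
```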

For more information on running programming languages in Snowflake, see this Snowflake Snowpark introduction.

Snowflake on AWS

Snowflake was built from the ground up to run in the cloud with its first and most mature deployment on AWS. Because of this early and deep integration, Snowflake has tight interoperability with key AWS services, allowing users to build secure, scalable, and highly automated data pipelines using familiar AWS tools and infrastructure.

If your organization is already using AWS, deploying Snowflake on AWS makes it significantly easier to move, process, and analyze your data. Many AWS services work seamlessly with Snowflake.

Key AWS services that work with Snowflake

1. Amazon S3 (Simple Storage Service)

S3 is AWS’s cloud storage system, widely used for storing data files like logs, CSVs, JSON, Parquet, and more. Here’s an article that talks more about Amazon S3. Snowflake integrates with S3 out of the box and allows you to:

- Ingest data from S3: You can use COPY INTO or Snowpipe to load structured or semi-structured data from S3 buckets into Snowflake tables.

- Write data to S3: Snowflake also lets you export or stage data to S3 for sharing, backup, or further processing.

- Zero-copy access (via external tables): Snowflake can query data directly in S3 without first copying it, using external tables—saving time and storage costs.
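The three S3 patterns above can be sketched as follows. The bucket name, IAM role ARN, and every object name are placeholders. Creating a storage integration usually needs an administrative role in Snowflake plus matching IAM configuration on the AWS side, which is not shown here.

```sql
-- Connect Snowflake to S3 through a storage integration (placeholder ARN and bucket).
CREATE STORAGE INTEGRATION IF NOT EXISTS s3_int
  TYPE = EXTERNAL_STAGE
  STORAGE_PROVIDER = 'S3'
  ENABLED = TRUE
  STORAGE_AWS_ROLE_ARN = 'arn:aws:iam::123456789012:role/snowflake-access'
  STORAGE_ALLOWED_LOCATIONS = ('s3://my-example-bucket/data/');

-- A stage is a named pointer to the bucket location.
CREATE STAGE IF NOT EXISTS s3_stage
  URL = 's3://my-example-bucket/data/'
  STORAGE_INTEGRATION = s3_int
  FILE_FORMAT = (TYPE = 'CSV' SKIP_HEADER = 1);

-- Hypothetical target table for the load.
CREATE TABLE IF NOT EXISTS orders (
  order_id NUMBER, customer_id NUMBER, amount NUMBER(10, 2), order_date DATE
);

-- 1) Ingest: load CSV files from S3 into the table.
COPY INTO orders FROM @s3_stage/orders/;

-- 2) Write: unload query results back to S3 as Parquet files.
COPY INTO @s3_stage/exports/orders_
  FROM (SELECT * FROM orders)
  FILE_FORMAT = (TYPE = 'PARQUET');

-- 3) Query in place: an external table over Parquet files that stay in S3.
CREATE EXTERNAL TABLE IF NOT EXISTS ext_logs
  LOCATION = @s3_stage/logs/
  AUTO_REFRESH = FALSE
  FILE_FORMAT = (TYPE = 'PARQUET');

-- Each row is exposed through the VALUE column; "status" is a hypothetical field.
SELECT value:status::NUMBER AS status FROM ext_logs LIMIT 10;
```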

2. AWS PrivateLink

AWS PrivateLink is a networking feature that creates private, secure, high-bandwidth connections between your AWS Virtual Private Cloud (VPC) and Snowflake.

Without PrivateLink, traffic between your AWS systems and Snowflake would typically pass through the public internet. With PrivateLink, you can meet strict compliance or latency requirements in the following ways:

- Your data stays inside your AWS network boundary.

- You eliminate exposure to the public internet.

- It helps meet security and regulatory requirements, especially in financial, healthcare, or government sectors.

3. AWS Lambda, SNS, and S3 Event Notifications

Snowflake supports event-driven architecture through automated ingestion triggered by AWS services. Here’s how they work together:

- S3 Event Notifications: Every time a file is uploaded to an S3 bucket, AWS can send a notification.

- SNS (Simple Notification Service): These notifications can trigger messages to other AWS services or systems.

- AWS Lambda: This is a serverless function that runs a script when an event occurs. You can use it to invoke Snowpipe, Snowflake’s continuous data loader.

This workflow removes the need to manually schedule data loads and enables near real-time ingestion pipelines, perfect for log analytics, sensor data, or application telemetry.

To learn more about the nuances of Amazon’s AWS infrastructure, check out these guides on AWS Lambda, on AWS SNS, and S3 EFS.

I also highly recommend this course on AWS Cloud and Service concepts to understand how all these components work in-depth.

Getting Started with Snowflake

This will be a very brief overview of some fundamental steps in getting started with Snowflake. To learn more about Snowflake’s essential features, take this Introduction to Snowflake course which covers the basics of using Snowflake for loading and exploring data.

Accessing Snowflake

The very first step is getting access. Snowflake offers (at the time of writing in May 2025) a trial which gives credits to use Snowflake’s features. Getting started is simple:

- Sign up at signup.snowflake.com

- Choose a cloud provider (AWS, Azure, GCP)

- Log in to the Snowflake UI

Loading data

You can load data into Snowflake in a few ways: drag and drop a supported file type (Parquet, JSON, CSV, etc.) in the UI, which then gets processed into a table in your database and schema of choice; use SQL; or use third-party programs.

A common way is to connect your Snowflake to a cloud storage system like Amazon’s S3 and use SQL commands like COPY INTO to transfer data from your cloud storage to your Snowflake database.

Finally, you can also use third-party ETL tools like Fivetran and Matillion to process data before depositing it into Snowflake. These third-party tools help with getting your data cleaned up before loading it into Snowflake. For more detail on getting data into Snowflake, take a look into this guide on Snowflake Data Ingestion.

Running queries

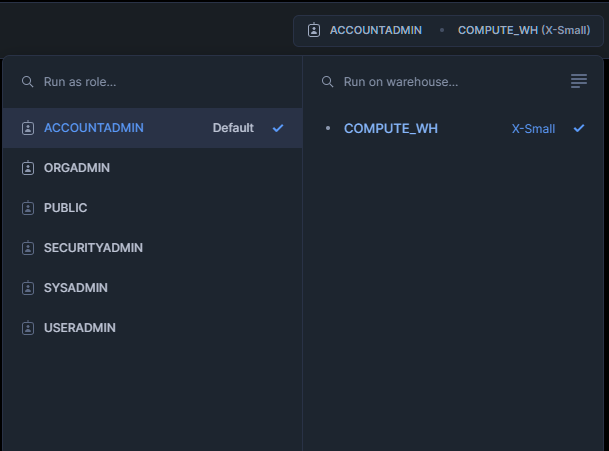

Snowflake makes it easy to run queries in its UI. First, sign in to your Snowflake account and open a worksheet. The most important thing to understand is that Snowflake needs you to select a specific role and virtual warehouse. This reflects Snowflake’s architecture, which separates your data access from your computational resources.

If you, or the person managing your Snowflake account, have set up different roles for you, then each role might have different levels of access. Maybe one role is designed for live production data and one role is designed for testing. This prevents you from accidentally mixing data from different sources in your analysis!

Each role has access to its own set of virtual warehouses, so select the warehouse that will give you the computational resources you need. Simple queries may only need the X-Small warehouse, while bigger jobs may need the Large or even X-Large warehouse.

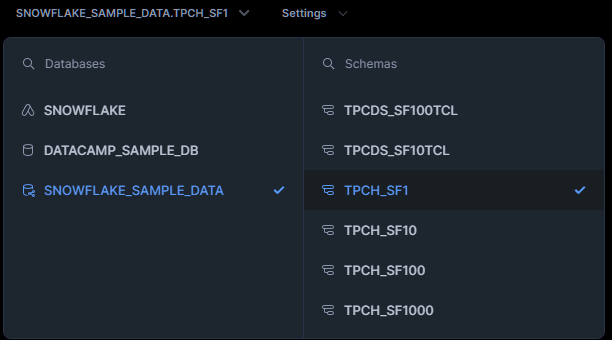

Then, choose your database and schema. The database is the main storage unit, and the schemas within it are your collections of tables.
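The same selections can be made in SQL instead of the UI dropdowns. In this sketch, `analyst_role` and `demo_wh` are hypothetical names, so substitute a role and warehouse that exist in your account.

```sql
-- Set the session context: who you are acting as, and which compute to use.
USE ROLE analyst_role;      -- hypothetical role
USE WAREHOUSE demo_wh;      -- hypothetical warehouse

-- Pick where unqualified table names should resolve.
USE DATABASE snowflake_sample_data;
USE SCHEMA tpch_sf1;

-- Confirm the current context before running queries.
SELECT CURRENT_ROLE(), CURRENT_WAREHOUSE(), CURRENT_DATABASE(), CURRENT_SCHEMA();
```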

Once you have that set up, you can run SQL queries like normal. For instance, an example query may look like the following:

```sql
USE SCHEMA snowflake_sample_data.tpch_sf1;
-- or tpch_sf100, tpch_sf1000

SELECT
    l_returnflag,
    l_linestatus,
    sum(l_quantity) AS sum_qty,
    sum(l_extendedprice) AS sum_base_price,
    sum(l_extendedprice * (1 - l_discount)) AS sum_disc_price,
    sum(l_extendedprice * (1 - l_discount) * (1 + l_tax)) AS sum_charge,
    avg(l_quantity) AS avg_qty,
    avg(l_extendedprice) AS avg_price,
    avg(l_discount) AS avg_disc,
    count(*) AS count_order
FROM
    lineitem
WHERE
    l_shipdate <= dateadd(day, -90, to_date('1998-12-01'))
GROUP BY
    l_returnflag,
    l_linestatus
ORDER BY
    l_returnflag,
    l_linestatus;
```

For more insight on Snowflake and learning how to master its interface, check out this article on How to Learn Snowflake. If you want to learn more SQL, check out the numerous SQL Courses on DataCamp.

Conclusion

Snowflake is much more than a cloud data warehouse. It’s a powerful platform that brings together data warehousing, lakes, sharing, AI, and developer tools into a single experience. For beginners, it's one of the easiest, most scalable ways to get started in the world of data. With its SQL-friendly interface, developer options like Snowpark, and AI capabilities through Cortex, Snowflake empowers individuals and organizations to do more with their data.

Snowflake FAQs

Is Snowflake a database, a warehouse, or a platform?

Snowflake is technically all three. It acts as a relational database engine, a data warehouse, and a unified analytics platform with built-in support for SQL, semi-structured data, and data sharing.

How does Snowflake compare to platforms like Amazon Redshift, BigQuery, or Azure Synapse?

Snowflake stands out due to its multi-cloud support, independent compute/storage scaling, automatic optimization, and zero-maintenance architecture. It is very beginner-friendly and enterprise-ready.

What does it mean that Snowflake separates storage and compute?

This means you can scale compute power (virtual warehouses) independently of how much data you store. You only pay for what you use, and multiple users or teams can query data without affecting each other’s performance.

How do I get started with Snowflake?

Start by signing up for a free trial account, then create a database, schema, and table, and use the UI or SQL commands to load and query data. Tutorials and preloaded sample datasets are also available.

Is Snowflake only for big companies?

No. Snowflake’s pay-per-use model and automatic scaling make it suitable for startups, mid-sized businesses, and large enterprises alike.