
Modern businesses increasingly need to shorten the time it takes to turn data into usable insights. That means speeding up the path from data collection to analytics, artificial intelligence, and machine learning.

Traditional ETL (extract, transform, load) architectures face challenges in meeting the technical demands of big data and real-time data analysis. Therefore, a new data management architecture called zero-ETL has emerged to minimize or eliminate the need for ETL processes.

What Is Zero-ETL?

Zero-ETL is a system of integrations designed to eliminate or reduce the need to create ETL data pipelines. By enabling queries across different data silos without physically moving the data, zero-ETL aims to streamline data processing and improve efficiency.

The term “zero-ETL” was introduced during the AWS re:Invent conference in 2022, when Amazon Aurora's integration with Amazon Redshift was announced. Since then, AWS has advanced this concept, mainly through services that support direct data analysis and transformation within the data platforms without requiring separate ETL pipelines.

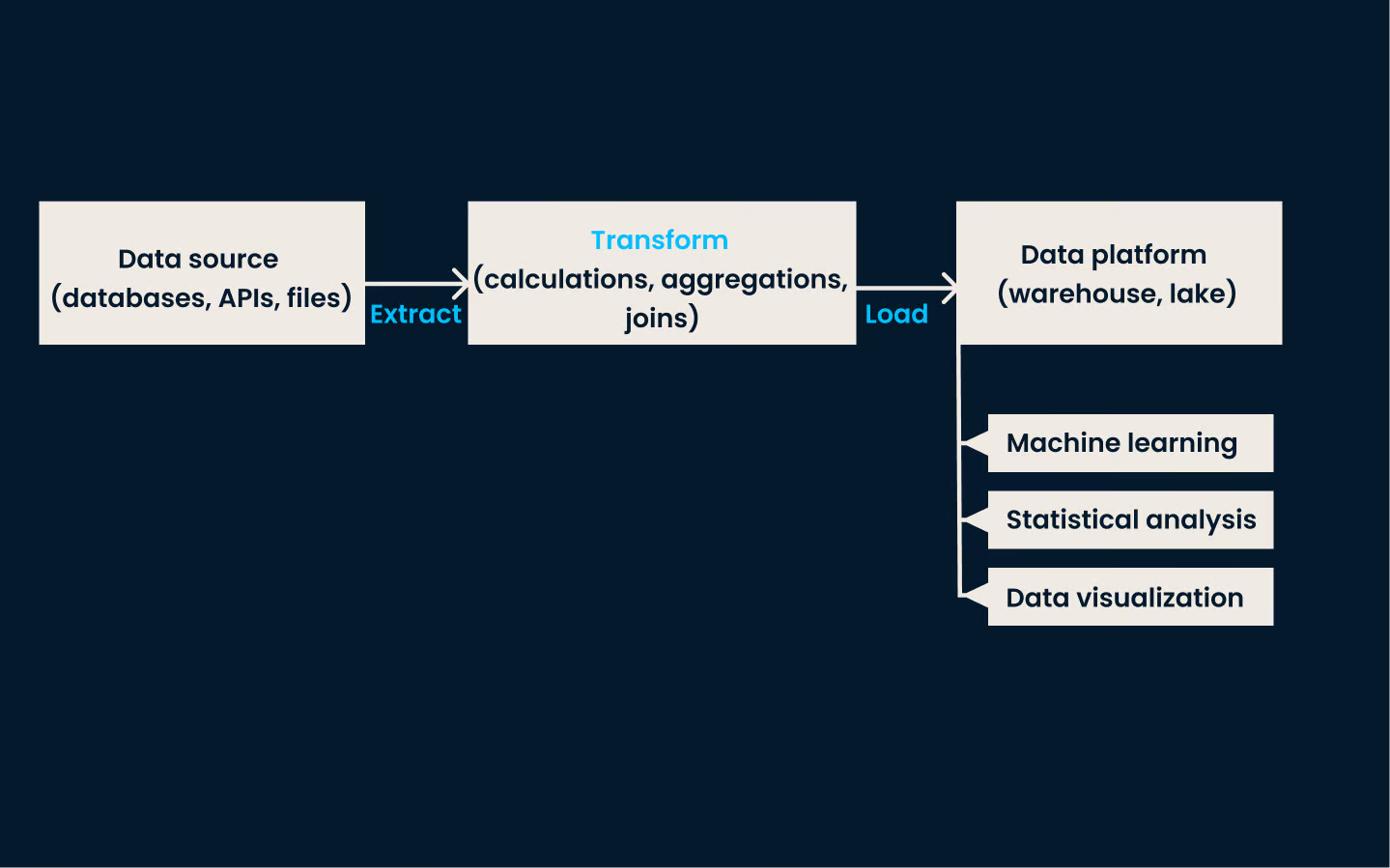

In a typical ETL data processing pipeline, a data professional, such as a data engineer or a data scientist, gathers data from a source, such as a database, an API, a JSON file, or an XML file.

After extracting the data, various transformations are applied, such as combining data, performing calculations, merging tables, or removing unnecessary information like timestamps or user IDs.

Finally, the transformed data is loaded into a platform for further analysis, such as machine learning, statistical analysis, or data visualization. This process demands significant time, cost, and effort due to its complexity.
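To make the three stages concrete, here is a minimal sketch of a traditional ETL job using only the Python standard library. The `RAW_ORDERS` payload, the `orders` table, and the in-memory SQLite "warehouse" are illustrative assumptions, not part of any specific product.

```python
import json
import sqlite3

# Hypothetical raw export from a source system (stands in for an API or file).
RAW_ORDERS = """
[
  {"order_id": 1, "user_id": "u-17", "amount": "19.99", "ts": "2024-05-01T10:00:00"},
  {"order_id": 2, "user_id": "u-42", "amount": "5.01", "ts": "2024-05-01T10:05:00"},
  {"order_id": 3, "user_id": "u-17", "amount": "10.00", "ts": "2024-05-02T09:30:00"}
]
"""


def extract(raw):
    """Extract: parse records out of the source payload."""
    return json.loads(raw)


def transform(records):
    """Transform: drop user IDs, keep only the date, convert amounts to cents."""
    rows = []
    for rec in records:
        day = rec["ts"][:10]
        cents = round(float(rec["amount"]) * 100)
        rows.append((rec["order_id"], day, cents))
    return rows


def load(rows, conn):
    """Load: write the cleaned rows into the analytics store."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, day TEXT, amount_cents INTEGER)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


# In-memory SQLite plays the role of the warehouse in this sketch.
warehouse = sqlite3.connect(":memory:")
load(transform(extract(RAW_ORDERS)), warehouse)

daily_totals = warehouse.execute(
    "SELECT day, SUM(amount_cents) FROM orders GROUP BY day ORDER BY day"
).fetchall()
print(daily_totals)
```

Even in this toy version, each stage is separate code that has to be written, scheduled, and maintained, which is the overhead zero-ETL tries to remove.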

Traditional ETL architecture

Consider this analogy: In traditional photography, a picture is captured with a negative film (data extraction), processed in a dark room (transformation), and then developed and displayed (loading). Now, imagine a digital camera where the picture is captured, developed, and ready to be displayed (or instantly transmitted in live broadcast) all in one place.

Similarly, zero-ETL changes data processing by removing extraction, transformation, and loading as separate stages. This architecture minimizes data movement and enables us to transform and analyze all data within a single platform.

Zero-ETL promises real-time or minimal-latency data analytics for data scientists and business stakeholders.

How Does Zero-ETL Work?

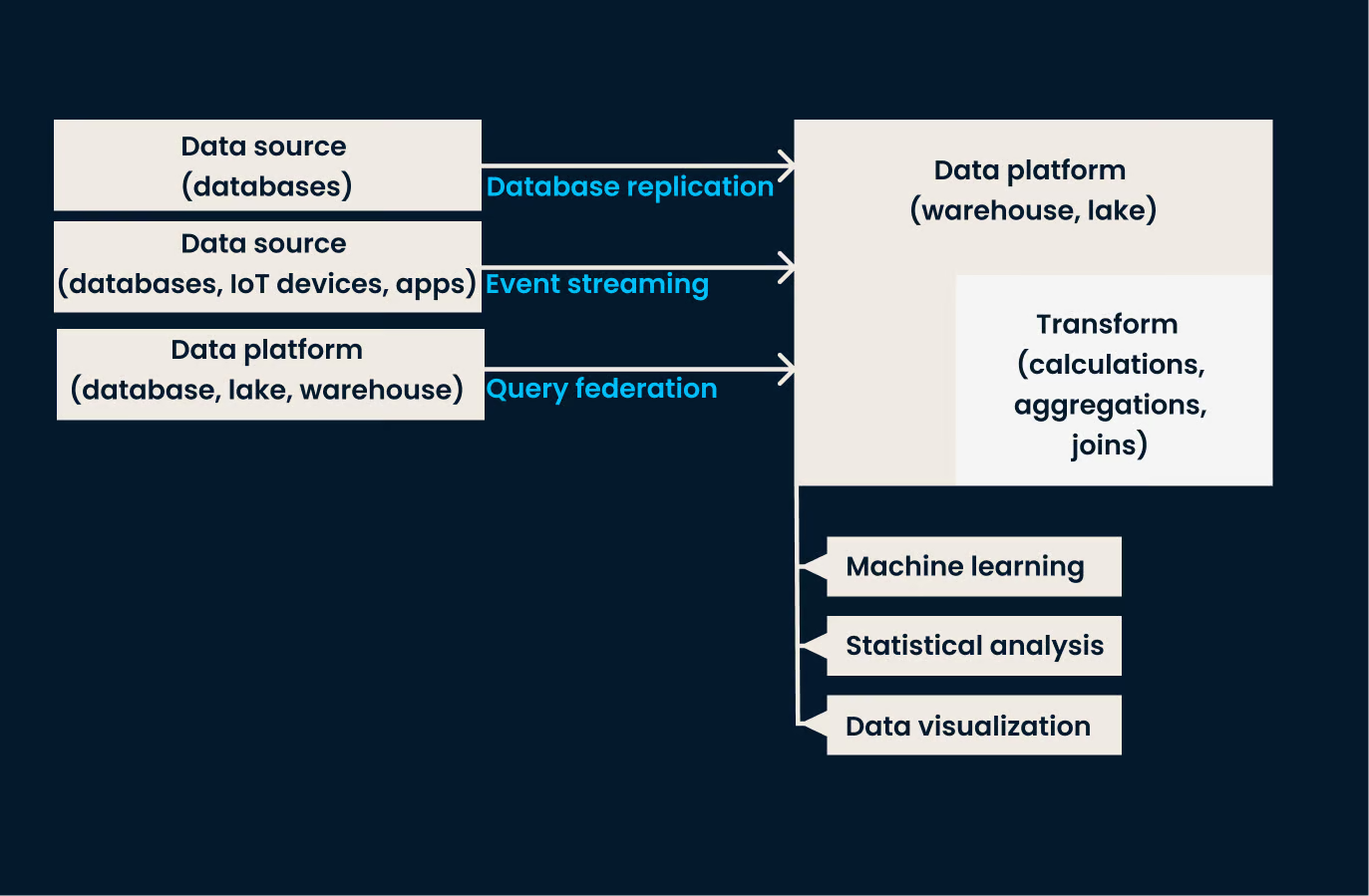

Zero-ETL simplifies data integration by directly linking data sources to data warehouses or lakes, ensuring real-time data availability for analytics and reporting. This is made possible by several cloud-based technologies and services, such as:

Database replication

Database replication is the process of copying and synchronizing data from one database to another.

In the context of zero-ETL between a database and a data warehouse, replication ensures that the data in the data warehouse is automatically updated in real-time or near real-time, eliminating the need for separate ETL processes. This is the case for the integration between Amazon Aurora and Amazon Redshift.

Federated querying

Federated querying refers to the ability to run queries across multiple data sources, such as databases, data warehouses, or data lakes, without the need to move or replicate the data into a single location.

Regarding zero-ETL, federated querying allows data professionals to access and analyze data stored in different data platforms directly, providing a unified view of the data without the overhead of traditional ETL processes.
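As a small-scale illustration of the idea, the sketch below uses SQLite's `ATTACH DATABASE` statement to join tables that live in two separate database files without copying either one. The file names, tables, and sample rows are hypothetical, and a real federated engine would connect to remote systems rather than local files.

```python
import os
import sqlite3
import tempfile

with tempfile.TemporaryDirectory() as workdir:
    crm_path = os.path.join(workdir, "crm.db")
    sales_path = os.path.join(workdir, "sales.db")

    # Two independent "silos", each written by a different system.
    crm = sqlite3.connect(crm_path)
    crm.execute("CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, name TEXT)")
    crm.executemany("INSERT INTO customers VALUES (?, ?)", [(1, "Ada"), (2, "Grace")])
    crm.commit()
    crm.close()

    sales = sqlite3.connect(sales_path)
    sales.execute(
        "CREATE TABLE orders "
        "(order_id INTEGER PRIMARY KEY, customer_id INTEGER, amount_cents INTEGER)"
    )
    sales.executemany(
        "INSERT INTO orders VALUES (?, ?, ?)",
        [(10, 1, 2500), (11, 1, 1000), (12, 2, 700)],
    )
    sales.commit()
    sales.close()

    # The query engine attaches both sources and joins them where they live.
    engine = sqlite3.connect(":memory:")
    engine.execute("ATTACH DATABASE ? AS crm", (crm_path,))
    engine.execute("ATTACH DATABASE ? AS sales", (sales_path,))

    revenue_by_customer = engine.execute(
        """
        SELECT c.name, SUM(o.amount_cents)
        FROM crm.customers AS c
        JOIN sales.orders AS o ON o.customer_id = c.customer_id
        GROUP BY c.name
        ORDER BY c.name
        """
    ).fetchall()
    engine.close()

print(revenue_by_customer)
```

The join runs against both sources in place; no rows are exported from one database and imported into the other.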

Data streaming

Data streaming refers to the continuous, real-time processing and transfer of data as it is generated.

In zero-ETL, data streaming involves capturing data from several sources (like databases, IoT devices, or applications) and immediately delivering it to a data warehouse or data lake. This ensures the data is available for analysis and querying almost instantly, without batch ETL processes.
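The difference from batch processing can be sketched with a plain Python generator: each event updates the analytics state as soon as it arrives, so results are queryable after every event rather than after a scheduled job. The `sensor_events` source and the `RunningAverages` store are hypothetical stand-ins for a real stream and a real warehouse.

```python
from collections import defaultdict


def sensor_events():
    """Hypothetical source: yields one event at a time, as a stream would."""
    yield {"device": "a", "temp_c": 20.0}
    yield {"device": "b", "temp_c": 30.0}
    yield {"device": "a", "temp_c": 22.0}


class RunningAverages:
    """Stand-in for an analytics store that is updated per event."""

    def __init__(self):
        self._count = defaultdict(int)
        self._total = defaultdict(float)

    def ingest(self, event):
        self._count[event["device"]] += 1
        self._total[event["device"]] += event["temp_c"]

    def snapshot(self):
        return {d: self._total[d] / self._count[d] for d in self._count}


store = RunningAverages()
snapshots = []
for event in sensor_events():
    store.ingest(event)                 # delivered immediately, no batch window
    snapshots.append(store.snapshot())  # queryable after every single event

print(snapshots[-1])
```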

In-place data analytics

To achieve in-place data analytics, the necessary transformations are integrated into the cloud data platform, such as a data lake. This integration allows for real-time data processing and analysis directly where the data resides, reducing latency and improving efficiency.

For example, semi-structured data collected in JSON or XML format can be transformed and analyzed using “schema-on-read” technologies within the data lake itself, eliminating the intermediate step of moving the data to reporting-ready storage.

Example Zero-ETL data architecture

The Different Components of Zero-ETL

Although zero-ETL architecture may sound like it has no components or that all components are unified, different elements and services can be used depending on the needs of the target analytics and resources. These services include:

Direct data integration services

Cloud providers offer specialized services that automate zero-ETL integration. As mentioned before, AWS provides Amazon Aurora’s integration with Amazon Redshift, where data written to Aurora is automatically replicated to Redshift. These services manage data replication and transformation internally, removing the need for traditional ETL processes.

Change Data Capture (CDC)

CDC technology is a core element of zero-ETL architectures. It continuously monitors and captures changes (inserts, updates, deletes) in the source databases and replicates these changes in real time to the target systems.
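The core of CDC, replaying a stream of change events against a target, can be sketched in a few lines. The event layout (`op`, `key`, `row`) is an assumption made for this example; real CDC tools define their own formats and typically read changes from the database's transaction log.

```python
def apply_change(replica, change):
    """Apply one captured change event to the target copy."""
    op = change["op"]
    key = change["key"]
    if op in ("insert", "update"):
        # Merge the changed columns into the existing row (if any).
        replica[key] = {**replica.get(key, {}), **change["row"]}
    elif op == "delete":
        replica.pop(key, None)
    else:
        raise ValueError(f"unknown operation: {op!r}")


# Hypothetical change log captured from a source table.
change_log = [
    {"op": "insert", "key": 1, "row": {"name": "Ada", "plan": "free"}},
    {"op": "insert", "key": 2, "row": {"name": "Grace", "plan": "pro"}},
    {"op": "update", "key": 1, "row": {"plan": "pro"}},
    {"op": "delete", "key": 2},
]

replica = {}
for change in change_log:
    apply_change(replica, change)

print(replica)
```

Because only the changes travel, the target stays in sync without re-extracting the full table on every run.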

Streaming data pipelines

Streaming pipelines move data in real time from different sources to the target system. Platforms like Amazon Kinesis and Apache Kafka enable continuous data flow, ensuring low-latency updates.

Serverless computing

Serverless architectures support zero-ETL by automatically managing the necessary infrastructure and scaling resources based on demand. Services like AWS Lambda and Google Cloud Functions exemplify this approach by allowing functions to be executed in response to data events.
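As a rough sketch of what such an event-driven function might look like, the handler below decodes records in the shape Lambda receives from a Kinesis stream, where each record carries a base64-encoded payload under `kinesis.data`. Only the fields used here are shown, the sample event is hand-built for local testing, and the step that would write to an analytics store is left as a comment.

```python
import base64
import json


def handler(event, context):
    """Entry point in the (event, context) form used by Python Lambda functions."""
    rows = []
    for record in event.get("Records", []):
        payload = base64.b64decode(record["kinesis"]["data"])
        rows.append(json.loads(payload))
    # A real function would write `rows` to the analytics store here.
    return {"processed": len(rows), "rows": rows}


def _fake_record(obj):
    """Build a minimal Kinesis-style record for local testing (hypothetical helper)."""
    data = base64.b64encode(json.dumps(obj).encode("utf-8")).decode("ascii")
    return {"kinesis": {"data": data}}


sample_event = {
    "Records": [_fake_record({"page": "/home"}), _fake_record({"page": "/cart"})]
}
result = handler(sample_event, None)
print(result)
```

There is no server to provision in this model: the platform invokes the function whenever new records arrive and scales the number of concurrent invocations with the volume of events.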

Schema-on-read technologies

By applying the schema when reading the data rather than when it is written, schema-on-read supports flexibility in handling unstructured and semi-structured data formats like JSON and XML. This approach reduces the need for predefined schemas and allows for dynamic data analysis.
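A minimal sketch of the idea: the raw JSON lines are stored untouched, and a schema is applied only when a reader asks for the data. The `RAW_LINES` sample and the `SCHEMA` mapping are assumptions for illustration; missing fields become `None` and fields outside the schema are ignored.

```python
import json

# Raw records are stored exactly as they arrived; shapes differ between lines.
RAW_LINES = [
    '{"user": "u1", "amount": "12.5", "country": "DE"}',
    '{"user": "u2", "amount": 3}',
    '{"user": "u3", "amount": "7.5", "extra": {"campaign": "spring"}}',
]

# The schema lives with the reader, not with the stored data.
SCHEMA = {"user": str, "amount": float, "country": str}


def read_with_schema(lines, schema):
    """Parse each raw line and cast only the fields the reader cares about."""
    for line in lines:
        raw = json.loads(line)
        yield {
            field: (cast(raw[field]) if field in raw else None)
            for field, cast in schema.items()
        }


records = list(read_with_schema(RAW_LINES, SCHEMA))
total = sum(r["amount"] for r in records)
print(records)
print(total)
```

A different reader could apply a different schema to the same stored lines, which is what makes this approach flexible for data whose shape changes over time.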

Data federation and abstraction

Zero-ETL facilitates the ingestion and duplication of data from different sources through data federation. This involves using data lakes and cross-platform data virtualization to create an abstract object layer, simplifying data duplication without requiring extensive transformation and data movement. Data virtualization allows users to access and query data across different systems as if it were stored in a single location.

Data lakes

In a zero-ETL approach, transformations and analyses are performed within the data platform. This allows for managing unstructured data in various formats (video, images, text, and numerical) within a multi-format data storage system, like a data lake, often without the need for intermediate transformations.

Advantages and Disadvantages of Zero-ETL

Zero-ETL may sound like a promising approach to increase efficiency in data science. However, it is important to compare both the advantages and disadvantages a zero-ETL implementation may bring.

Advantages of zero-ETL

Zero-ETL brings several benefits to data management and data analytics, including:

- Streamlined engineering: Zero-ETL simplifies the data pipeline architecture by integrating extraction, transformation, and loading into a single process or eliminating it altogether. This streamlining reduces complexity and accelerates data analytics and machine learning tasks, making it easier for data scientists to derive insights quickly.

- Real-time analytics: Zero-ETL enables real-time data analytics by merging extraction, transformation, and loading phases directly into the data platform. When new data is collected, it can be analyzed immediately, allowing for faster decision-making and timely insights.

Disadvantages of zero-ETL

Despite its benefits, zero-ETL may pose some challenges, including:

- Complicated troubleshooting: Identifying and troubleshooting issues can be more complex because all data processes are performed in one integrated step. Isolating the source of problems requires a deep understanding of the entire system.

- Steeper learning curve: Eliminating traditional ETL pipelines might reduce the need for intermediate data engineering roles. This shift can lead to a steeper learning curve for data scientists and machine learning engineers, who must now manage tasks previously handled by data engineers.

- Cloud dependency: Zero-ETL solutions are typically cloud-based by design. Organizations not yet prepared to integrate cloud technologies into their systems may face challenges in adopting zero-ETL. This dependency can raise concerns about data security, compliance, and control.

Typical Use-Cases of Zero-ETL

Zero-ETL offers significant advantages in different real-time data processing and analytics scenarios. Here are some typical use cases:

- Real-time analytics: Zero-ETL eliminates the traditional batch ETL process and enables real-time access to newly generated data, including customer interactions, user behaviors, and vehicle traffic patterns. This automation allows teams to make data-driven decisions instantly, improving responsiveness and operational efficiency.

- Instant data transfer: With no ETL pipeline in between, data is replicated to the warehouse where data scientists access it significantly faster, or even in real time.

- Machine learning and AI: Zero-ETL is particularly beneficial for machine learning and artificial intelligence applications, where timely data is key. Real-time data streaming and immediate availability enable continuous training and updating of machine learning models with the latest data, improving the accuracy and relevance of AI predictions and insights.

Comparison of Zero-ETL vs Traditional ETL

The following table provides a detailed comparison between Zero-ETL and traditional ETL processes.

| Aspect | Zero-ETL | Traditional ETL |
| --- | --- | --- |
| Data virtualization | It uses data virtualization technologies to ease the duplication of data across warehouses. | Data virtualization may be redundant or challenging to implement because data is moved from transformation to the loading phase in ETL. |
| Data quality monitoring | It is essentially an automated data management approach; hence, data quality issues may arise. | Because of the discrete nature of the data movement pipeline in ETL, data quality is better monitored and remedied. |
| Data type diversity | Cloud-based data lakes allow multiple data types and formats without architectural constraints. | The extraction and transformation architecture may constrain the data types (additional engineering effort is needed at the extraction and transformation phases for different kinds of data). |
| Real-time deployment | Data analysis can be done in the platform with minimal latency from data generation to transformation and analysis. | The scheduled batch nature of the pipeline prevents real-time data analysis. |
| Cost and maintenance | It is more cost-effective and easier to maintain because it requires fewer computational components and coding. Data transformation and loading can be done on demand. | It is more expensive because it requires more computational resources and experienced data professionals. |
| Scale | Faster and less expensive to scale because of eliminating intermediate hardware for data movement. | It can be slow and costly due to the increased need for better hardware and code optimization to accommodate more extensive data sources. |
| Data movement | None or minimal. | Data movement is required because the pipeline is discrete and data must be transferred to the loading stage. |

Zero-ETL vs Other Data Integration Techniques

Below is a comparison of zero-ETL with other prominent data integration techniques, highlighting their commonalities and central differences.

Zero-ETL vs ELT

- Commonalities: Zero-ETL and ELT (Extract, Load, Transform) reduce data analytics time and complexity by postponing the data transformation process until the data is loaded into the target system. ELT is considered a predecessor to zero-ETL, as it laid the groundwork for deferring transformation to a later stage in the data processing pipeline.

- Main differences: Zero-ETL eliminates the intermediate staging phase required in the ELT approach, thereby minimizing latency and improving real-time data availability. Zero-ETL simplifies the data pipeline further by reducing the number of steps and infrastructure requirements.
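For contrast with zero-ETL, the ELT pattern can be sketched as follows: raw values are loaded into the target unchanged, and the transformation is expressed afterwards as SQL inside the target system. The `raw_orders` table, the `orders_clean` view, and the in-memory SQLite target are hypothetical.

```python
import sqlite3

target = sqlite3.connect(":memory:")

# Load: raw values land in the target exactly as received (all text).
target.execute("CREATE TABLE raw_orders (order_id TEXT, amount TEXT, ts TEXT)")
target.executemany(
    "INSERT INTO raw_orders VALUES (?, ?, ?)",
    [
        ("1", "20.00", "2024-05-01T10:00:00"),
        ("2", "5.50", "2024-05-01T10:05:00"),
        ("3", "10.00", "2024-05-02T09:30:00"),
    ],
)

# Transform: deferred until after loading and run by the target's own engine.
target.execute(
    """
    CREATE VIEW orders_clean AS
    SELECT CAST(order_id AS INTEGER) AS order_id,
           CAST(ROUND(CAST(amount AS REAL) * 100) AS INTEGER) AS amount_cents,
           substr(ts, 1, 10) AS day
    FROM raw_orders
    """
)

elt_daily_totals = target.execute(
    "SELECT day, SUM(amount_cents) FROM orders_clean GROUP BY day ORDER BY day"
).fetchall()
print(elt_daily_totals)
```

The explicit load step into a raw staging table is still a pipeline stage that someone has to build and run; zero-ETL integrations aim to make that step disappear as well.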

Zero-ETL vs API

- Commonalities: Zero-ETL and APIs enable queries to multiple data sources, facilitating data integration across different systems.

- Main differences: Zero-ETL is primarily a codeless technology requiring minimal manual coding for data integration and management. In contrast, APIs need custom code to connect and interact with different data sources. Additionally, APIs can be more prone to security breaches due to the code-based nature of their integration, which can introduce vulnerabilities if not properly managed and secured.

Top Zero-ETL Tools

As we have seen throughout this blog post, zero-ETL is a data management approach that has gained traction in the industry, largely popularized by AWS and adopted by other cloud providers.

Here’s a brief overview of the top zero-ETL tools available today:

AWS zero-ETL tools

- Aurora and Redshift direct integration: AWS has integrated Amazon Aurora and Amazon Redshift to enable real-time analytics without the need for traditional ETL processes.

- Redshift Spectrum: Allows users to run SQL queries against exabytes of data in Amazon S3 without loading or transforming the data. This service supports querying structured and semi-structured data, making it well suited to diverse datasets.

- Amazon Athena: A serverless analytics service that processes large volumes of data for in-place analytics, including machine learning and AI workloads, using SQL or Python.

- Amazon Redshift Streaming Ingestion: A service that provides real-time data ingestion from Amazon Kinesis Data Streams or Amazon MSK, supporting intensive real-time machine learning tasks.

Zero-ETL tools by other cloud providers

Apart from AWS, other cloud providers offer unified data platforms with zero-ETL capabilities:

- Snowflake: Supports the creation of data warehouses and lakes capable of handling unstructured data using zero-ETL architecture. Snowflake simplifies data workflows and supports real-time analytics and machine learning.

- Google BigQuery: Executes complex SQL queries on large datasets in real time, supporting seamless integration with other Google Cloud services for real-time analytics. BigQuery is designed to handle vast amounts of data efficiently.

- Microsoft Azure Synapse Analytics: Provides real-time data ingestion and analysis with a unified analytics platform, supporting advanced analytics and business intelligence applications. Synapse integrates with various Azure services.

Conclusion

Eliminating traditional ETL phases in the data analytics and machine learning pipeline significantly shifts the data engineering paradigm. Integrating zero-ETL architecture offers substantial benefits, including increased speed, enhanced security, and greater scalability.

However, this shift also brings challenges. The need for traditional data engineering skills may diminish, requiring data analysts, machine learning scientists, and data scientists to acquire more advanced data integration concepts and skills.

Zero-ETL focuses on the needs of data analysts and machine learning engineers, hinting at a future where these roles become more central, potentially reshaping job market demands and skillsets.

If you’re interested in learning more about data architecture, check out our course on ETL and ELT in Python!

FAQs

How can a business transition from traditional ETL to a Zero-ETL architecture?

To transition, assess your current data processes and identify areas for reducing data movement. Focus on adopting cloud-based technologies like data lakes and real-time streaming. Retraining your team to adapt to new tools and methodologies is also essential to this shift.

What types of organizations or projects are best suited for Zero-ETL?

Zero-ETL fits well with organizations that need real-time data analysis, such as in e-commerce, financial services, and telecoms. Projects that benefit include real-time monitoring, dynamic pricing, and instant fraud detection.

What are the long-term implications of adopting Zero-ETL for data management and analytics?

In the long term, Zero-ETL can increase organizational agility and prompt faster responses to data insights. It may also shift data-related job roles towards more integration-focused responsibilities. Regular updates to security and compliance measures will be essential as data processes are streamlined.

My educational training has been in mathematics and statistics. I have extensive experience in statistical modeling and machine learning applications. In addition, I research the mathematics of ML.