
The data architecture landscape is evolving, driven by the increasing use of cloud technologies and the adoption of the modern data stack. As a result, ELT processes are becoming more widely used. But what exactly is involved in this process?

In this article, we will explore ELT and its role in advanced data architecture.

What Is ELT?

ELT stands for Extract, Load, Transform. It is a data integration process that involves extracting data from various sources, loading it into a data storage system, and transforming it into a format that can be easily analyzed.

The ELT process is widely used in modern data stack architectures, where data is stored, transformed, and analyzed in a data lake or warehouse.

How Does ELT Work?

As its name suggests, ELT involves three main steps: Extract, Load, and Transform. Let's examine each step in more detail.

1. Extract

The first step in the ELT process is extracting data from various sources such as databases, files, APIs, or web services. This can be done with dedicated data integration tools or with custom scripts written by developers.

Some examples of data extraction platforms include Airbyte and Fivetran. For writing custom scripts, Apache Spark and Python are widely used.

The extracted data can be structured, semi-structured, or unstructured and may come from different types of systems, such as relational databases, NoSQL databases, or cloud storage.
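Here is a minimal sketch of the extract step as a custom Python script. It assumes two hypothetical sources, a CSV export and a JSON API response, which are inlined as strings so the example runs on its own. A real script would read files or call HTTP endpoints instead. Extraction here only reads and parses the data and leaves every value as-is; cleaning is deferred to the transform step.

```python
import csv
import io
import json

# Hypothetical sample sources, inlined so the sketch is self-contained.
CSV_EXPORT = """order_id,customer_email,amount
1,ana@example.com,19.99
2,ben@example.com,5.00
"""
API_PAYLOAD = '{"events": [{"user": "ana", "action": "login"}, {"user": "ben", "action": "purchase"}]}'


def extract_csv(text: str) -> list[dict]:
    """Read every row of a CSV export as a dict of raw string values."""
    return list(csv.DictReader(io.StringIO(text)))


def extract_api(payload: str) -> list[dict]:
    """Parse a JSON API response and return its list of event records."""
    return json.loads(payload)["events"]


orders = extract_csv(CSV_EXPORT)
events = extract_api(API_PAYLOAD)
print(orders[0])  # {'order_id': '1', 'customer_email': 'ana@example.com', 'amount': '19.99'}
```

Notice that the CSV values stay strings (`'19.99'`, not `19.99`). In ELT, type casting happens later, inside the data platform.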

2. Load

Once the data has been extracted, it is loaded into a centralized data storage system like a data lake or a data warehouse. This step involves organizing and storing the extracted data in its raw format without any transformation.

Data engineers are typically responsible for this step, loading the data into cloud platforms such as Amazon Redshift, Google BigQuery, Snowflake, or a data lake built on Amazon S3.

These data platforms allow for the fast loading of large amounts of data and provide a single source of truth for all the different types of data collected from various sources.
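The load step can be sketched with Python's built-in `sqlite3` module, which here stands in for a cloud warehouse purely to keep the example self-contained. The table name `raw_orders` and the sample records are hypothetical. Each record is stored unchanged as JSON text, together with a load timestamp, so no transformation happens at this stage.

```python
import json
import sqlite3
from datetime import datetime, timezone

# SQLite stands in for a cloud warehouse; a real pipeline would use the
# destination platform's own client library or a loader tool.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE raw_orders (payload TEXT NOT NULL, loaded_at TEXT NOT NULL)")

# Hypothetical records as they came out of the extract step (all strings).
extracted = [
    {"order_id": "1", "customer_email": "ana@example.com", "amount": "19.99"},
    {"order_id": "2", "customer_email": "ben@example.com", "amount": "5.00"},
]


def load_raw(conn: sqlite3.Connection, records: list[dict]) -> int:
    """Append records unchanged (as JSON text) and return how many were loaded."""
    loaded_at = datetime.now(timezone.utc).isoformat()
    rows = [(json.dumps(r), loaded_at) for r in records]
    with conn:  # commit the whole batch as one transaction
        conn.executemany("INSERT INTO raw_orders VALUES (?, ?)", rows)
    return len(rows)


print(load_raw(conn, extracted))  # 2
```

Storing the untouched payload means that if a transformation later turns out to be wrong, it can be rerun from the raw table without extracting the data again.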

3. Transform

The final step in the ELT process is transforming the raw data into a format optimized for analysis and reporting. This involves cleaning, filtering, aggregating, and structuring the data in a way suitable for business intelligence and analytics tools to work with.

Data engineers or data scientists are usually responsible for this step, and they may use tools such as dbt, plain SQL, or Apache Spark.

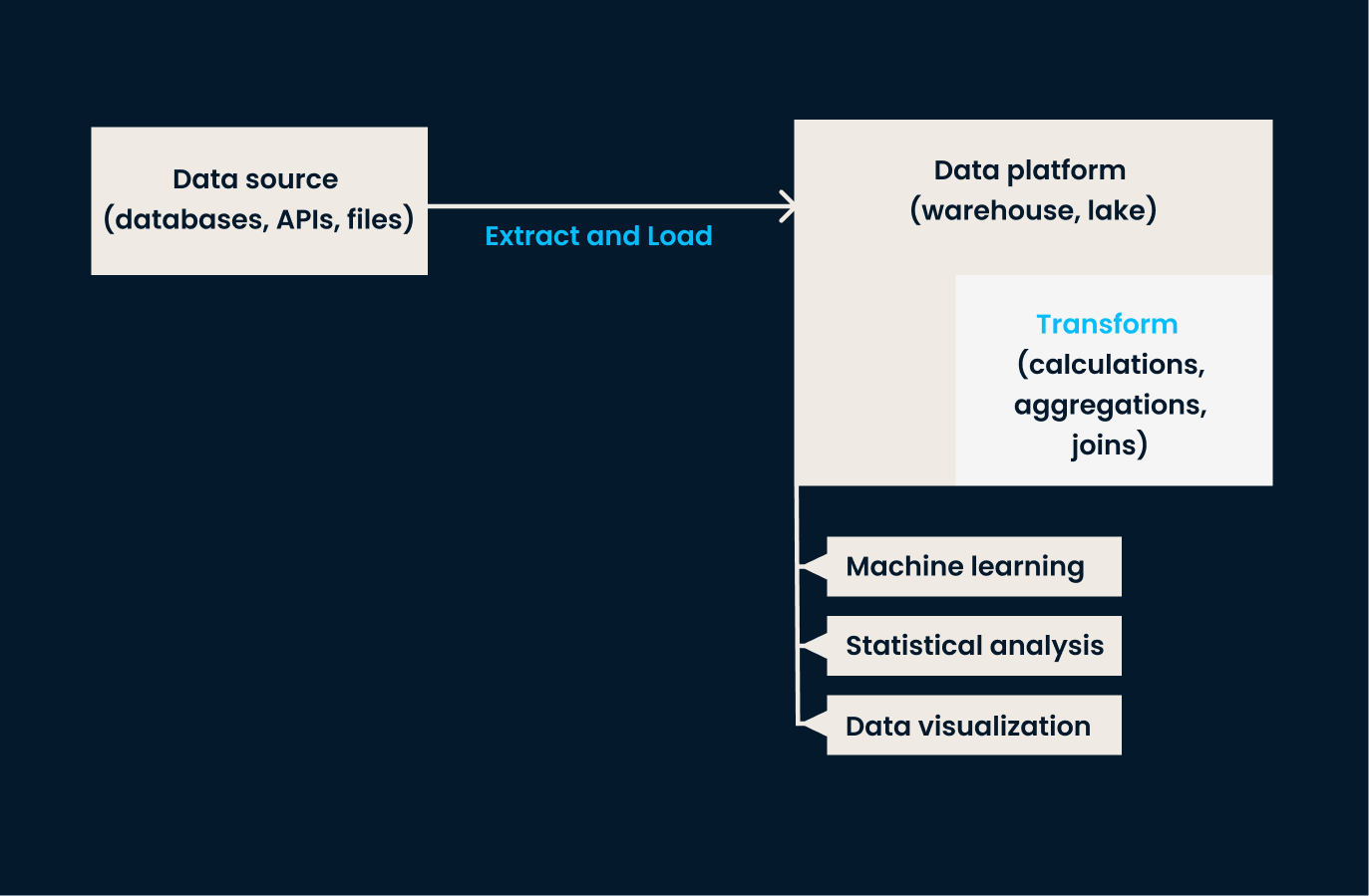

ELT data pipeline: The extract and loading phases happen before any transformation is applied to the data. The transformation step is performed within the data platform.

Data transformation is done within the data warehouse or data lake, which allows for easier handling of large volumes of data. With modern cloud technologies, this process can be done in near real-time, providing organizations access to fresh and accurate data for analysis.
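The following sketch shows what "transforming inside the platform" looks like. SQLite again stands in for the warehouse, which is an assumption made only to keep the example runnable. The raw table holds data exactly as it was loaded, including a duplicate row and a missing email. The transformation is plain SQL executed by the database itself, which is roughly what a dbt model or a scheduled warehouse query would do. Table names are hypothetical.

```python
import sqlite3

# The raw table mimics data loaded as-is: text columns, a duplicate, a NULL.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE raw_orders (order_id TEXT, customer_email TEXT, amount TEXT);
INSERT INTO raw_orders VALUES
    ('1', ' Ana@Example.com ', '19.99'),
    ('2', 'ben@example.com', '5.00'),
    ('2', 'ben@example.com', '5.00'),  -- duplicate row from the source
    ('3', NULL, '12.50');              -- missing email
""")

# Transform with SQL inside the database: deduplicate, drop incomplete rows,
# normalize emails, cast amounts to numbers, and aggregate per customer.
conn.executescript("""
CREATE TABLE customer_revenue AS
SELECT
    LOWER(TRIM(customer_email)) AS customer_email,
    SUM(CAST(amount AS REAL))   AS total_revenue,
    COUNT(*)                    AS order_count
FROM (SELECT DISTINCT order_id, customer_email, amount FROM raw_orders)
WHERE customer_email IS NOT NULL
GROUP BY LOWER(TRIM(customer_email));
""")

for row in conn.execute("SELECT * FROM customer_revenue ORDER BY customer_email"):
    print(row)
# ('ana@example.com', 19.99, 1)
# ('ben@example.com', 5.0, 1)
```

Because `raw_orders` is left untouched, a different transformation (for example, revenue per day) can be added later without reloading anything.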

Want to learn more about using Python for ELT? The ETL and ELT in Python course may be exactly what you need.

Advantages of ELT

ELT comes with many benefits, and here are some notable ones:

- Scalability: Because transformations run inside cloud data platforms whose compute can scale with demand, ELT can extract, load, and transform large volumes of data faster than traditional ETL processes.

- Flexibility: ELT allows for integrating data from various sources, including structured, semi-structured, and unstructured data.

- Speed of insights: Raw data stored in a data lake or data warehouse can be transformed on demand, providing near-real-time insights for faster decision-making.

ELT vs ETL

Let's now compare the differences between ELT and ETL.

As described above, ELT is a newer approach to data processing in which data is first loaded into a central repository and transformed after loading.

Conversely, ETL (Extract, Transform, Load) is a traditional methodology in which data is transformed before being loaded into the destination system. These fundamental differences impact not only the process but also the speed, costs, and security.

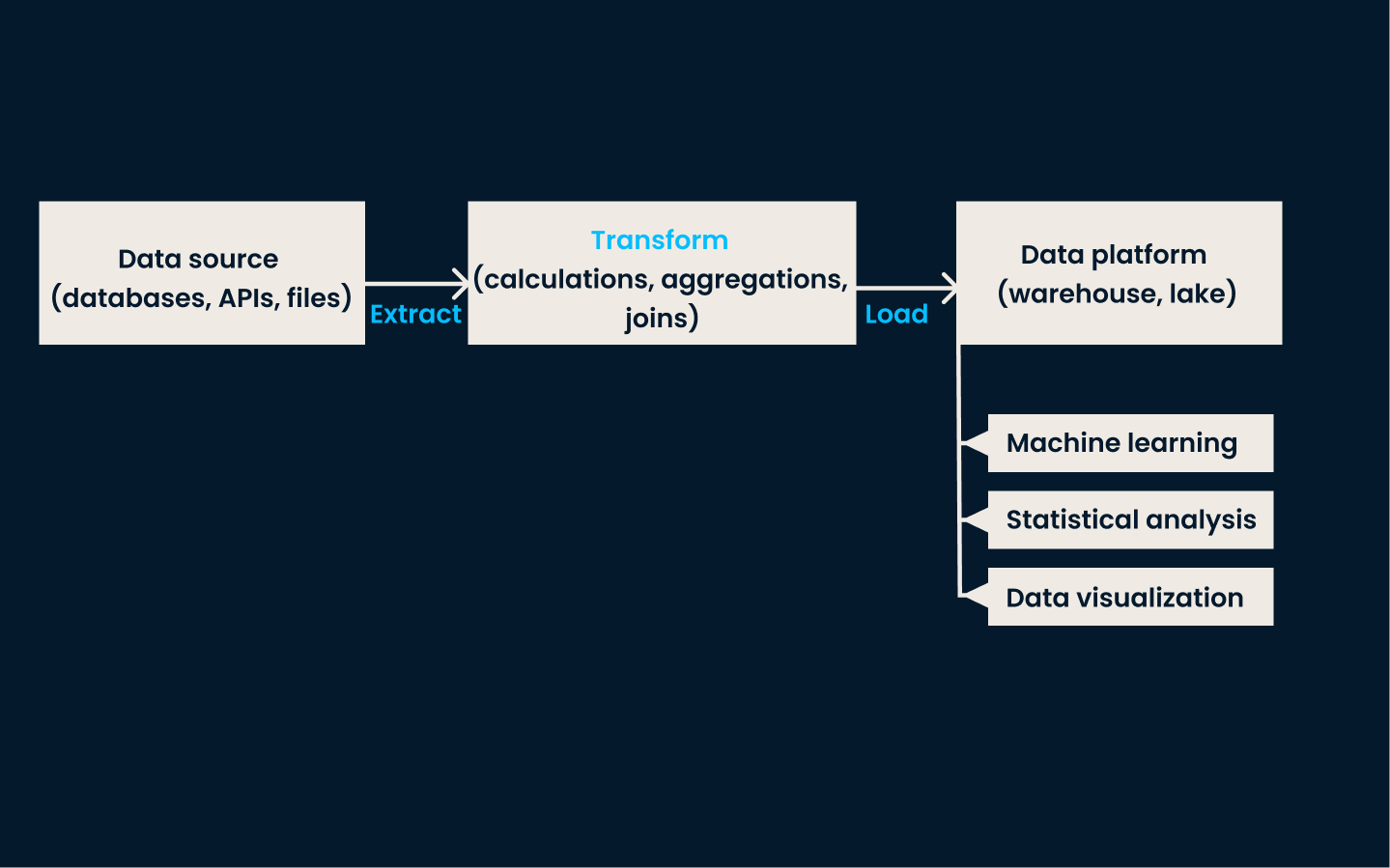

ETL pipeline: The transformation step occurs before loading the data to a destination platform. Typically, transformation occurs on a separate server rather than in the data platform itself.

Process

ELT follows a 'load-first, transform-later' approach where data is loaded into a storage system in its raw form and then transformed for analysis.

On the other hand, ETL follows a 'transform-first, load-later' approach: data is extracted from various sources, transformed, and only then loaded into a data warehouse.
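To make the difference in ordering concrete, here is a small sketch that applies the same cleaning both ways, with SQLite as an assumed stand-in destination and hypothetical table names. In the ETL version, Python cleans the rows before they reach the database. In the ELT version, the raw rows are loaded first and the identical cleaning is expressed as SQL that runs inside the database.

```python
import sqlite3

# Hypothetical rows as extracted: all strings, inconsistent email formatting.
SOURCE_ROWS = [("1", " Ana@Example.com ", "19.99"), ("2", "ben@example.com", "5.00")]


def run_etl(conn: sqlite3.Connection) -> None:
    """ETL: transform in application code, then load only the cleaned result."""
    cleaned = [(int(i), e.strip().lower(), float(a)) for i, e, a in SOURCE_ROWS]
    conn.execute("CREATE TABLE orders (order_id INTEGER, email TEXT, amount REAL)")
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", cleaned)


def run_elt(conn: sqlite3.Connection) -> None:
    """ELT: load the raw rows as-is, then transform with SQL inside the database."""
    conn.execute("CREATE TABLE raw_orders (order_id TEXT, email TEXT, amount TEXT)")
    conn.executemany("INSERT INTO raw_orders VALUES (?, ?, ?)", SOURCE_ROWS)
    conn.execute("""
        CREATE TABLE orders AS
        SELECT CAST(order_id AS INTEGER) AS order_id,
               LOWER(TRIM(email))        AS email,
               CAST(amount AS REAL)      AS amount
        FROM raw_orders
    """)


etl_db, elt_db = sqlite3.connect(":memory:"), sqlite3.connect(":memory:")
run_etl(etl_db)
run_elt(elt_db)

query = "SELECT order_id, email, amount FROM orders ORDER BY order_id"
print(etl_db.execute(query).fetchall() == elt_db.execute(query).fetchall())  # True
```

Both approaches produce the same `orders` table. Only the ELT database also keeps `raw_orders`, which is what allows transformations to be revised after loading.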

Speed

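ELT is generally faster because it skips the separate transformation server and intermediate staging area that ETL relies on: data lands directly in the destination, and transformations run where the data already lives. This makes real-time or near-real-time data analysis more achievable.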

Conversely, ETL can often take longer due to the multiple steps involved in transforming and loading the data.

Costs

ELT can also reduce costs by removing the need for dedicated ETL software, since transformations can be written as SQL queries that run in the data platform itself or built with open-source tools.

In contrast, traditional ETL processes tend to fit better in on-premises infrastructure, where data sources are limited and hardware resources are less scalable than in cloud-based environments. On-premises infrastructure also often has higher costs than cloud infrastructure.

Security

Finally, ELT makes it easier to apply data encryption and to mask personally identifiable information (PII), because these controls can be enforced in one secure central repository during and after loading.

ETL processes, by contrast, must secure data throughout extraction and transformation. That can be difficult, since the data moves through additional staging storage and is often processed on separate servers.
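Below is a minimal sketch of masking PII inside the central repository. It assumes SQLite as the stand-in platform, and the `mask_email` helper, the `MASKING_KEY` value, and the table names are all hypothetical. Emails are replaced with a keyed SHA-256 digest (HMAC). The same email always maps to the same digest, so joins and counts still work, but the address itself is hidden. Cloud warehouses usually provide their own masking and access policies for this, so treat the code as an illustration of the idea rather than a recommended setup.

```python
import hashlib
import hmac
import sqlite3

# Hypothetical key for illustration only; load a real one from a secrets manager.
MASKING_KEY = b"replace-with-a-real-secret"


def mask_email(email):
    """Return a keyed SHA-256 digest of the email, or None for missing values."""
    if email is None:
        return None
    return hmac.new(MASKING_KEY, email.encode("utf-8"), hashlib.sha256).hexdigest()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE raw_customers (customer_id INTEGER, email TEXT, country TEXT)")
conn.executemany(
    "INSERT INTO raw_customers VALUES (?, ?, ?)",
    [(1, "ana@example.com", "PT"), (2, "ben@example.com", "US")],
)

# Make the Python function callable from SQL running inside the database.
conn.create_function("mask_email", 1, mask_email)

# Build an analyst-facing table with the PII replaced; access to raw_customers
# itself would be restricted through the platform's permissions.
conn.execute("""
    CREATE TABLE customers_masked AS
    SELECT customer_id, mask_email(email) AS email_hash, country
    FROM raw_customers
""")

for row in conn.execute("SELECT * FROM customers_masked ORDER BY customer_id"):
    print(row)
```

A keyed hash is used instead of a plain hash because unkeyed digests of common values like email addresses can be reversed by hashing guessed inputs.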

ELT Use Cases

Thanks to its processing speed and its reliance on cloud analytics platforms, ELT shines in several use cases compared to ETL.

Here are some common uses of the ELT process:

- Real-time app reporting: ELT allows businesses to gain near-real-time insights into how users interact with their apps.

- Data warehousing: Many organizations use ELT to load and transform data into a centralized data warehouse for reporting and analytics purposes.

- Cloud-based data integration: With the rise of cloud technologies, ELT has become a popular choice for integrating data sources from various on-premises and cloud applications.

ELT Tools and Technologies

There are various tools and technologies available for organizations to implement ELT processes. These include:

Data warehouses and data lakes

In the loading step of the ELT process, data lakes and data warehouses are essential to providing a centralized data storage space within a company.

Here are some of the most popular cloud data platforms:

- Amazon Redshift: A cloud data warehouse service that can handle massive amounts of data quickly and efficiently.

- Google BigQuery: A serverless, scalable cloud data warehouse that allows for fast querying of large datasets.

- Snowflake: A cloud-based data warehouse that supports ELT processes and provides advanced security and performance.

- Amazon S3: A cloud storage service that can be used as a data lake for storing raw data, which can then be transformed using SQL queries or other tools.

Open-source ELT tools

Many organizations also rely on open-source tools for their ELT processes because they are cost-effective.

These tools can help with different stages of the ELT process:

- Apache Airflow: Used for workflow automation and scheduling of ETL/ELT processes.

- dbt: Open-source software for transforming data in a data warehouse using SQL.

- Airbyte: Open-source data integration platform that supports both ELT and ETL processes.

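- Singer: Open-source specification for extraction and loading scripts, built around modular "taps" that pull data from sources and "targets" that write it to destinations; it can be used in both ELT and ETL processes.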

- Apache NiFi: Open-source data integration tool that supports data routing, transformation, and system mediation logic.

Open-source tools are an excellent match for ELT processes because they provide flexibility, developer support, and many integration options.
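Singer's modular approach is easier to understand when you see the messages involved. A Singer tap writes JSON messages to standard output, one per line, and a target reads them. The main message types are SCHEMA, RECORD, and STATE. The sketch below is a hand-rolled tap that uses only the standard library. The `users` stream, its rows, and the shape of the STATE bookmark are hypothetical, because each tap defines its own state contents.

```python
import json
import sys

# Hypothetical source rows for a "users" stream.
USERS = [{"id": 1, "email": "ana@example.com"}, {"id": 2, "email": "ben@example.com"}]

SCHEMA = {
    "type": "object",
    "properties": {"id": {"type": "integer"}, "email": {"type": "string"}},
}


def tap_messages(rows):
    """Yield Singer messages: one SCHEMA, one RECORD per row, then a STATE bookmark."""
    yield {"type": "SCHEMA", "stream": "users", "schema": SCHEMA, "key_properties": ["id"]}
    for row in rows:
        yield {"type": "RECORD", "stream": "users", "record": row}
    # Tap-defined bookmark so the next run can resume after the last id seen.
    yield {"type": "STATE", "value": {"bookmarks": {"users": {"last_id": max(r["id"] for r in rows)}}}}


if __name__ == "__main__":
    for message in tap_messages(USERS):
        sys.stdout.write(json.dumps(message) + "\n")
```

Because the output is plain JSON lines, this tap could be piped into any Singer target. The tap knows nothing about where the data ends up, which is what makes the approach modular.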

Custom-built scripts

For more control, developers can write custom scripts in general-purpose programming languages. These scripts usually rely on packages and libraries to process and transform data.

Some common languages and frameworks used for these scripts include:

- Python: Using libraries such as Pandas, NumPy, and SQLAlchemy can help with the extraction, loading, and transformation of data.

- Apache Spark: Used for big data processing and analytics, often leveraging languages like Python (PySpark), Java, and Scala.

- R: The tidyverse collection of packages (including dplyr), along with libraries such as data.table, can be used for data extraction and transformation.

- SQL: SQL queries can be used for transformation tasks, and SQL Server Integration Services (SSIS) is a popular ETL tool that also supports ELT processes.

- Scala: Often used with Apache Spark for big data processing and ELT tasks.

- Java: Commonly used with big data frameworks like Apache Hadoop and Apache Spark for ELT processes.

Final Thoughts

In conclusion, ELT is an emerging trend in data processing that offers many advantages over traditional ETL methods. It enables faster, more efficient data transformation and provides greater flexibility and scalability.

If you’re thinking about learning more about ELT or other data engineering concepts, you might like our Data Engineer Certification or our Data Engineer in Python Career Track.

FAQs

How does ELT handle data quality and consistency issues?

ELT handles data quality and consistency by leveraging the capabilities of the data warehouse or data lake where data is stored. Data quality checks, cleansing, and validation processes are applied during the transformation phase to ensure consistency and accuracy. Tools like dbt (data build tool) can be used to create transformation pipelines that include these quality checks, helping to maintain high standards of data integrity.
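Conceptually, dbt's built-in `unique` and `not_null` tests are SQL queries that return the failing rows, and a check passes when that query returns nothing. The sketch below reproduces that idea with SQLite as an assumed stand-in platform. The helper names and the `stg_orders` table are hypothetical, and this is not dbt's actual implementation.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE stg_orders (order_id INTEGER, customer_email TEXT, amount REAL);
INSERT INTO stg_orders VALUES (1, 'ana@example.com', 19.99),
                              (2, NULL, 5.00),
                              (2, 'ben@example.com', 5.00);
""")

# Table and column names are interpolated into SQL, so they must come from
# trusted pipeline config, never from user input.


def not_null(table, column):
    """SQL returning rows where the column is NULL."""
    return f"SELECT * FROM {table} WHERE {column} IS NULL"


def unique(table, column):
    """SQL returning each value that appears more than once."""
    return (f"SELECT {column}, COUNT(*) FROM {table} "
            f"GROUP BY {column} HAVING COUNT(*) > 1")


def run_checks(conn, checks):
    """Run each check and return {check_name: number_of_failing_rows}."""
    return {name: len(conn.execute(sql).fetchall()) for name, sql in checks.items()}


results = run_checks(conn, {
    "order_id_unique": unique("stg_orders", "order_id"),
    "email_not_null": not_null("stg_orders", "customer_email"),
    "amount_not_null": not_null("stg_orders", "amount"),
})
print(results)  # {'order_id_unique': 1, 'email_not_null': 1, 'amount_not_null': 0}
```

In a pipeline, any non-zero count would typically fail the run or raise an alert before downstream tables are rebuilt.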

Can ELT be used for real-time data streaming, and if so, how?

Yes, ELT can be used for real-time data streaming. This is achieved by integrating real-time data ingestion tools like Apache Kafka or Amazon Kinesis with the ELT pipeline. These tools can continuously stream data into a data lake or warehouse, where transformations can be applied in near real-time using tools like Apache Spark or SQL-based transformations, enabling timely analysis and decision-making.
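A common way to transform streaming data in near real-time is micro-batching. Raw events keep landing in a table, and a frequent job folds only the events newer than a stored watermark into an aggregate. The sketch below assumes SQLite as the stand-in platform and uses hypothetical table names. The `land` function merely simulates what a Kafka or Kinesis consumer would write, so no streaming client is involved. The upsert syntax requires SQLite 3.24 or later.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE raw_events (event_id INTEGER PRIMARY KEY, page TEXT);
CREATE TABLE page_views (page TEXT PRIMARY KEY, views INTEGER NOT NULL);
CREATE TABLE watermark (last_event_id INTEGER NOT NULL);
INSERT INTO watermark VALUES (0);
""")


def land(events):
    """Simulated stream consumer: append raw (event_id, page) pairs as they arrive."""
    with conn:
        conn.executemany("INSERT INTO raw_events (event_id, page) VALUES (?, ?)", events)


def transform_increment():
    """Fold events in (watermark, current max] into page_views, then advance the watermark."""
    with conn:  # aggregate update and watermark move commit together
        (last,) = conn.execute("SELECT last_event_id FROM watermark").fetchone()
        (high,) = conn.execute(
            "SELECT COALESCE(MAX(event_id), ?) FROM raw_events", (last,)).fetchone()
        conn.execute("""
            INSERT INTO page_views (page, views)
            SELECT page, COUNT(*) FROM raw_events
            WHERE event_id > ? AND event_id <= ?
            GROUP BY page
            ON CONFLICT(page) DO UPDATE SET views = views + excluded.views
        """, (last, high))
        conn.execute("UPDATE watermark SET last_event_id = ?", (high,))


land([(1, "/home"), (2, "/pricing"), (3, "/home")])
transform_increment()
land([(4, "/home")])
transform_increment()
print(conn.execute("SELECT page, views FROM page_views ORDER BY page").fetchall())
# [('/home', 3), ('/pricing', 1)]
```

Capping each batch at the maximum event id read at the start keeps events that arrive mid-run from being counted without the watermark moving past them.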

How does ELT integrate with machine learning workflows?

ELT integrates with machine learning workflows by providing a robust data pipeline that feeds clean, transformed data into machine learning models. Once data is loaded into a data warehouse, it can be transformed and prepared for machine learning using tools like Apache Spark MLlib or Python libraries such as scikit-learn. This integration allows seamless data preprocessing, model training, and evaluation within the same environment, enhancing efficiency and scalability.

What are the security considerations when implementing ELT in cloud environments?

When implementing ELT in cloud environments, security considerations include data encryption, access control, and data masking. Data should be encrypted in transit and at rest to protect sensitive information. Access control mechanisms, such as role-based access control (RBAC), should be implemented to ensure that only authorized users can access and manipulate the data. Data masking techniques can be applied to protect personally identifiable information (PII) during the loading and transformation processes.

How do data engineers handle the challenge of schema evolution in ELT processes?

Data engineers handle schema evolution in ELT processes using schema management tools and practices that accommodate changes over time. This includes using tools like Apache Avro or JSON Schema for flexible data serialization and schema evolution. Additionally, versioning strategies and automated schema migration scripts can be employed to manage changes without disrupting the data pipeline. Data catalogs and metadata management tools also play a crucial role in tracking and documenting schema changes to ensure data consistency and reliability.
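One simple automated migration pattern is additive schema evolution. When incoming records contain a field that the raw table does not have yet, the loader adds it as a nullable column before inserting, so older rows just get NULL. The sketch below uses SQLite as an assumed stand-in, and the helper names and the `raw_users` table are hypothetical. It is a different technique from Avro or JSON Schema and handles only new fields, not renamed or retyped ones. Field names are quoted into SQL, so they are assumed to come from a trusted source.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE raw_users (id INTEGER, email TEXT)")


def existing_columns(table):
    """Return the table's current column names using SQLite's table_info pragma."""
    return {row[1] for row in conn.execute(f"PRAGMA table_info({table})")}


def load_with_evolution(table, records):
    """Add unseen fields as nullable TEXT columns, insert the records, return new fields."""
    new_fields = sorted({key for r in records for key in r} - existing_columns(table))
    with conn:
        for field in new_fields:
            # Additive change only: existing rows get NULL in the new column.
            conn.execute(f'ALTER TABLE {table} ADD COLUMN "{field}" TEXT')
        for r in records:
            cols = ", ".join(f'"{c}"' for c in r)
            marks = ", ".join("?" for _ in r)
            conn.execute(f"INSERT INTO {table} ({cols}) VALUES ({marks})", list(r.values()))
    return new_fields


load_with_evolution("raw_users", [{"id": 1, "email": "ana@example.com"}])
added = load_with_evolution("raw_users", [{"id": 2, "email": "ben@example.com", "plan": "pro"}])
print(added)  # ['plan']
print(conn.execute("SELECT id, plan FROM raw_users ORDER BY id").fetchall())
# [(1, None), (2, 'pro')]
```

Returning the list of added fields lets the pipeline log or report each schema change, which is where a data catalog would pick it up.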

I'm Austin, a blogger and tech writer with years of experience both as a data scientist and a data analyst in healthcare. Starting my tech journey with a background in biology, I now help others make the same transition through my tech blog. My passion for technology has led me to my writing contributions to dozens of SaaS companies, inspiring others and sharing my experiences.