
What if an AI model could work successfully without needing thousands of labeled examples?

Zero-shot learning (ZSL) is a technique that makes this possible: models can identify and classify new concepts without seeing any labeled examples of them during training, and handle tasks they weren't specifically trained for.

This matters because it lets AI systems take on new tasks, products, or markets without constant retraining. It lowers data collection and annotation costs and improves a model’s ability to apply what it has learned to situations it hasn’t seen before.

In this article, I will explain how ZSL works, walk through its key applications with some examples, and discuss the challenges it faces. Let’s dive into it!


What Is Zero-Shot Learning?

Zero-shot learning (ZSL) is a machine learning technique that allows models to deal with tasks or recognize things they have never encountered before. It does this by using what it already knows and connecting it to new situations, even without specific training for them.

Suppose a model is trained to recognize animals but never sees a zebra during training. With ZSL, the model could still identify a zebra by using a description of one. But how? Let me simplify things for now (we’ll get into the technical details later):

- The model knows about animals and their features, like "has four legs," "lives in the savanna," or "has stripes."

- It’s shown an image it has never seen before, along with a description of a new class: "A horse-like animal with black and white stripes living in African grasslands."

- Using its understanding of animal attributes and the provided description, the model can deduce that the image likely represents a zebra, even though it has never seen one before.

- The model makes this inference by connecting the dots between known animal characteristics and the new description.
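To make the steps above concrete, here is a minimal Python sketch of attribute-based zero-shot classification. It assumes a hypothetical attribute detector has already turned the image into a probability for each attribute, treats the attributes as independent, and describes every class, including the unseen zebra, only by a binary attribute signature. The attribute names, signatures, and probabilities are illustrative values, not output from a real model.

```python
import math

# Hypothetical attribute vocabulary shared by all classes.
ATTRIBUTES = ["has_four_legs", "has_stripes", "horse_like", "lives_in_savanna", "has_mane"]

# Class signatures: 1 = the class has the attribute, 0 = it does not.
# "zebra" never appears in training; its signature comes only from its description.
CLASS_SIGNATURES = {
    "horse": [1, 0, 1, 0, 1],
    "tiger": [1, 1, 0, 0, 0],
    "zebra": [1, 1, 1, 1, 1],
}


def log_likelihood(attribute_probs, signature, eps=1e-6):
    """Score a class by how well the predicted attributes agree with its signature."""
    score = 0.0
    for p, has_attr in zip(attribute_probs, signature):
        p = min(max(p, eps), 1 - eps)  # clamp to avoid log(0)
        score += math.log(p) if has_attr else math.log(1 - p)
    return score


def predict(attribute_probs, signatures=CLASS_SIGNATURES):
    """Return the class whose attribute signature best explains the predictions."""
    return max(signatures, key=lambda c: log_likelihood(attribute_probs, signatures[c]))


if __name__ == "__main__":
    # Hypothetical detector output for an image of a zebra, in ATTRIBUTES order.
    probs = [0.95, 0.90, 0.85, 0.80, 0.70]
    print(predict(probs))
```

Adding another unseen class would only mean adding its signature to `CLASS_SIGNATURES`; nothing is retrained.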

How Does Zero-Shot Learning Work?

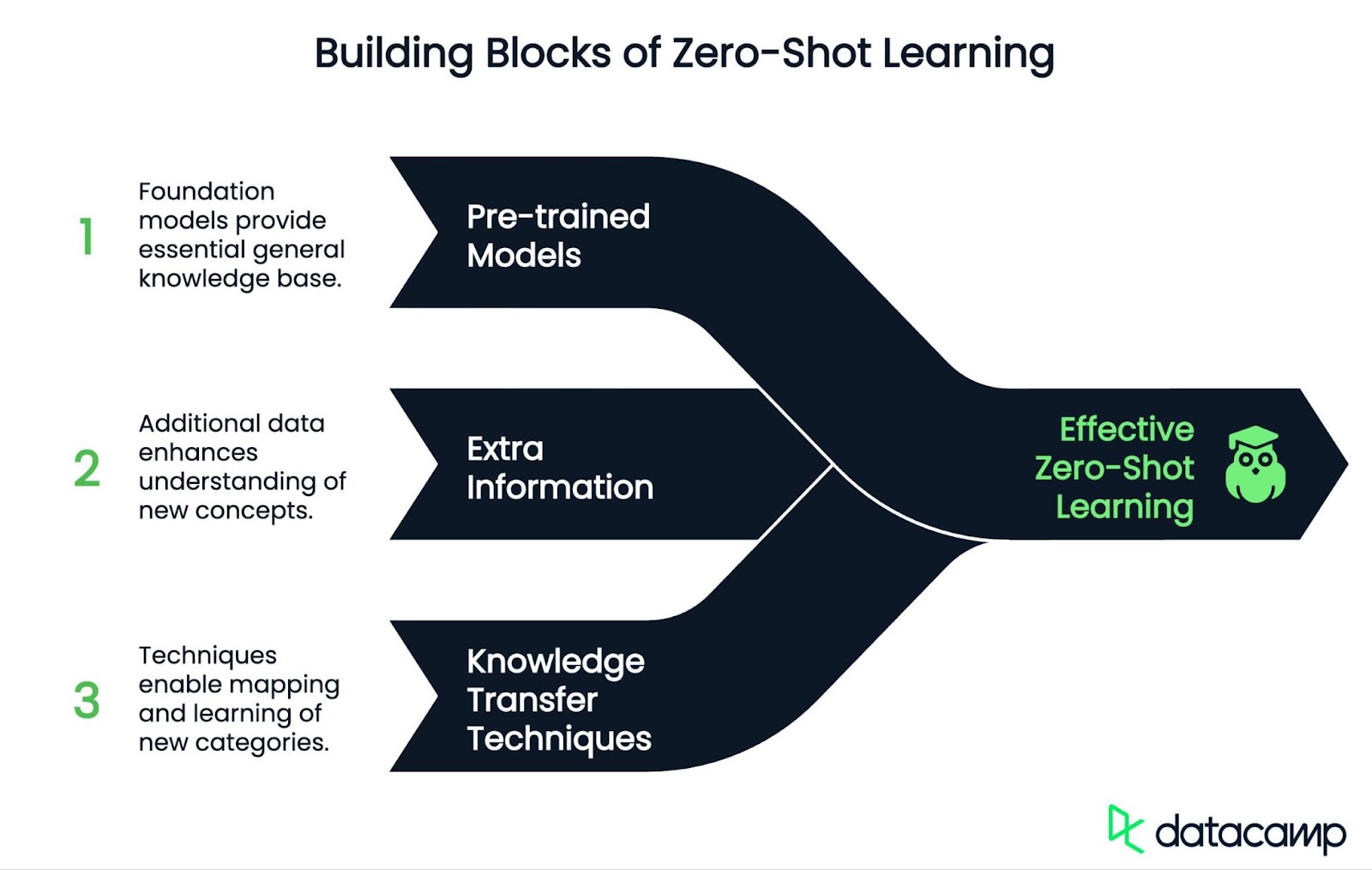

Zero-shot learning is a two-stage process (training and inference) and uses three key components: pre-trained models, extra information, and knowledge transfer.

Pre-trained models

ZSL relies on models that have been pre-trained on large amounts of data, such as the GPT family (for language) or CLIP (for connecting images and text). These models provide a solid base of general knowledge.

Extra information

Extra (auxiliary) information describes the new classes so the model can relate them to what it already knows. This can include:

- Text descriptions.

- Attributes or features.

- Word associations or word vectors (embeddings of class names).

Knowledge transfer

ZSL maps both known and new classes into a shared "semantic space" where they can be compared. It often uses techniques like:

- Semantic embeddings: A shared way to represent both known and unknown categories.

- Transfer learning: Reusing knowledge from similar tasks to tackle new ones.

- Generative models: Synthesizing examples (or feature vectors) of unseen classes from their descriptions, so the model can be trained on them.
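The generative idea can be illustrated with a deliberately tiny sketch. Here a hypothetical linear map plus Gaussian noise (`GENERATOR_WEIGHTS`, `generate_features`) stands in for a learned conditional generator, such as a conditional GAN or VAE, and a nearest-centroid rule stands in for the final classifier. All weights and feature values are made up for illustration.

```python
import random

# Hypothetical "generator" weights mapping 3 attributes
# (has_stripes, horse_like, lives_in_savanna) to a 3-dimensional feature space.
# In a real system these would be learned from the seen classes.
GENERATOR_WEIGHTS = [
    [2.0, 0.0, 0.0],
    [0.0, 2.0, 0.0],
    [0.0, 0.0, 1.0],
]


def generate_features(attributes, n, noise=0.1, seed=0):
    """Synthesize n noisy feature vectors for a class from its attribute vector."""
    rng = random.Random(seed)
    base = [sum(w * a for w, a in zip(row, attributes)) for row in GENERATOR_WEIGHTS]
    return [[x + rng.gauss(0.0, noise) for x in base] for _ in range(n)]


def centroid(vectors):
    """Component-wise mean of a list of equal-length vectors."""
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def squared_distance(u, v):
    return sum((a - b) ** 2 for a, b in zip(u, v))


# Real (hypothetical) features exist only for the seen classes.
seen_features = {
    "horse": [[0.0, 2.0, 0.1], [0.1, 1.9, 0.0]],
    "tiger": [[2.0, 0.0, 0.0], [1.9, 0.1, 0.1]],
}
# The unseen class gets synthetic features generated from its description.
synthetic = generate_features([1, 1, 1], n=50)  # zebra: striped, horse-like, savanna

centroids = {label: centroid(feats) for label, feats in seen_features.items()}
centroids["zebra"] = centroid(synthetic)


def classify(feature):
    """Nearest-centroid classifier built from real (seen) plus synthetic (unseen) data."""
    return min(centroids, key=lambda c: squared_distance(feature, centroids[c]))


if __name__ == "__main__":
    print(classify([1.9, 2.1, 0.9]))
```

In practice the generator would be trained on the seen classes so that its synthetic features resemble real ones; the classifier itself never needs a real zebra example.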

Training and inference

ZSL can be conceptualized as a two-stage process:

- Training: The model learns from labeled samples of the seen classes and their attributes.

- Inference: The acquired knowledge is extended to new classes using the provided auxiliary information. It happens in three steps:

  - The model converts the new input (like an image) into a semantic representation.

  - It compares this representation with those of the candidate classes, including the descriptions of unseen ones.

  - It picks the closest match based on similarity.
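The three inference steps can be sketched with cosine similarity as the matching rule. This is an illustration under assumptions: step 1 (the encoder) is replaced by hand-written toy vectors in `CLASS_EMBEDDINGS`, and the names `zero_shot_predict` and `cosine_similarity` are our own, not part of any library.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def zero_shot_predict(input_embedding, class_embeddings):
    """Steps 2 and 3: compare the input with every class and return the closest one."""
    scores = {
        label: cosine_similarity(input_embedding, emb)
        for label, emb in class_embeddings.items()
    }
    best = max(scores, key=scores.get)
    return best, scores


# Step 1 (encoding) is assumed to have happened already: these toy vectors stand in
# for embeddings from a pre-trained encoder. "zebra" is an unseen class whose
# vector came from its text description, not from labeled images.
CLASS_EMBEDDINGS = {
    "horse": [0.9, 0.1, 0.2],
    "tiger": [0.1, 0.9, 0.1],
    "zebra": [0.7, 0.7, 0.3],
}

if __name__ == "__main__":
    image_embedding = [0.6, 0.65, 0.35]  # hypothetical encoding of a new image
    label, scores = zero_shot_predict(image_embedding, CLASS_EMBEDDINGS)
    print(label, {k: round(v, 3) for k, v in scores.items()})
```

Because the class list is just a dictionary, new concepts can be added by embedding their descriptions and inserting them, which is what keeps the set of recognizable classes open-ended.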

This approach allows ZSL models to dynamically recognize an open-ended set of new concepts over time, using only descriptions or semantic information, without the need for additional labeled training data.

Let’s come back to our animal classification example.

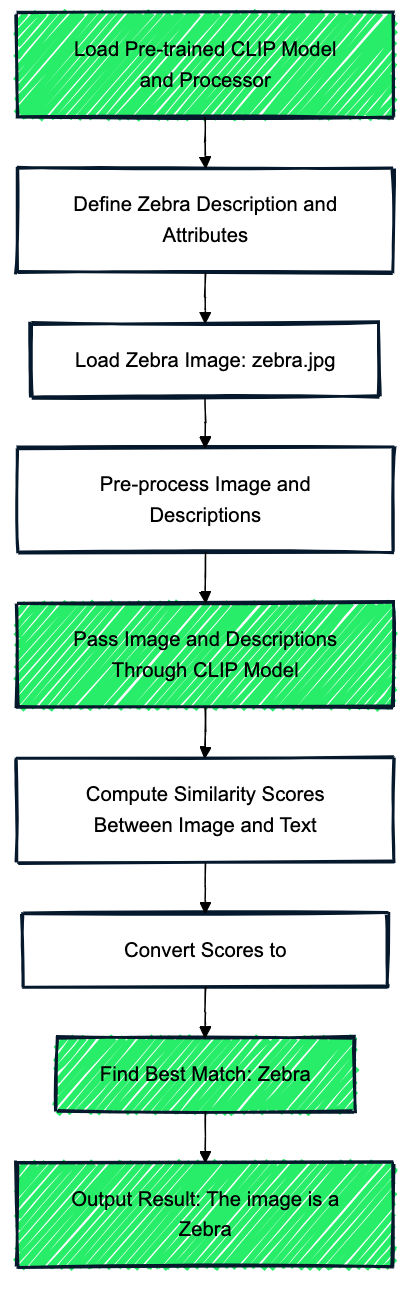

Example: Classifying Animals

Imagine we are using CLIP, a model pre-trained on a massive dataset of image-text pairs. This pre-trained model provides a foundation of general knowledge about animals, even though it hasn’t been explicitly trained to recognize zebras.

In our zebra example, the auxiliary data (extra information) includes text descriptions like "A horse-like animal with black and white stripes living in African grasslands." and attributes like "has four legs," "has stripes," and "lives in the savanna." These descriptions and attributes bridge what the model knows (general animal traits) and the unseen class (zebra).

Then, the model maps both seen classes (e.g., horse, tiger) and the unseen class (zebra) into a shared semantic space. The model encodes the zebra description ("black and white stripes, horse-like") into the same space as known animals and uses its understanding of animals like horses and tigers to reason about zebras.

At inference time, the model encodes the new image and compares its embedding with the embeddings of known animals and descriptions (e.g., horse, tiger, "black and white stripes"). Using similarity scores, it finds that the image most closely matches the zebra description: "A horse-like animal with black and white stripes living in African grasslands."
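CLIP-style zero-shot classification typically wraps each label in a text prompt, scores the image against every prompt with a scaled cosine similarity, and applies a softmax. The sketch below mimics that scoring with toy vectors: `TOY_TEXT_EMBEDDINGS` stands in for CLIP's text encoder, the image embedding is made up, and the scale of 100 is an assumed value rather than one read from a real checkpoint. With the real model, the embeddings would come from CLIP's image and text encoders instead.

```python
import math

LABELS = ["horse", "tiger", "zebra"]
PROMPT_TEMPLATE = "a photo of a {}"

# Hypothetical text-encoder output for each prompt (toy values, not real CLIP embeddings).
TOY_TEXT_EMBEDDINGS = {
    "a photo of a horse": [0.9, 0.1, 0.2],
    "a photo of a tiger": [0.1, 0.9, 0.1],
    "a photo of a zebra": [0.7, 0.7, 0.3],
}


def normalize(v):
    """Scale a vector to unit length so dot products become cosine similarities."""
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def zero_shot_probs(image_embedding, labels, logit_scale=100.0):
    """CLIP-style scoring: scaled cosine similarities turned into probabilities."""
    prompts = [PROMPT_TEMPLATE.format(label) for label in labels]
    image = normalize(image_embedding)
    logits = []
    for prompt in prompts:
        text = normalize(TOY_TEXT_EMBEDDINGS[prompt])
        logits.append(logit_scale * sum(a * b for a, b in zip(image, text)))
    return dict(zip(labels, softmax(logits)))


if __name__ == "__main__":
    probs = zero_shot_probs([0.6, 0.65, 0.35], LABELS)  # hypothetical image embedding
    print(max(probs, key=probs.get), probs)
```

The large scale sharpens the softmax, so even modest differences in cosine similarity translate into confident probabilities.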

Zero-Shot Learning vs. Few-Shot Learning

Zero-shot learning (ZSL) and few-shot learning (FSL) are two methods that help models deal with new tasks or objects, even when there’s little or no data available. However, they have some differences. Let’s look at an overview of both techniques and their key differences:

| Aspect | Zero-Shot Learning (ZSL) | Few-Shot Learning (FSL) |
| --- | --- | --- |
| What It Does | Handles new tasks without labeled training data. | Learns new tasks from a few labeled examples. |
| How It Works | Infers new categories by mapping descriptions to known knowledge. | Learns patterns from a few examples to classify new instances. |
| Data Needed | Requires zero labeled examples for new tasks. | Requires 1–5 labeled examples for new tasks. |
| Prior Knowledge | Relies on learned relationships between concepts and descriptions. | Uses prior knowledge but also updates based on the provided examples. |
| Adaptability | Can generalize to completely new tasks but may be less precise. | Adapts quickly to specific new tasks and is usually more accurate. |
| Example 1 | Spam Detection: Identifies spam using definitions (e.g., “emails with suspicious links”) without prior labeled spam data. | Customer Support Intent Detection: Learns to detect a new intent (e.g., “cancel subscription”) after seeing a few labeled conversations. |
| Example 2 | Text Classification in Sentiment Analysis: Determines sentiment (e.g., “satisfied,” “angry”) using only definitions. | Document Type Identification: Learns to classify new document types (e.g., “purchase orders”) after seeing a few examples. |

Which one to choose?

ZSL is best when you don’t have any labeled data to work with. It’s useful for situations where new categories or tasks appear that the model wasn’t trained for, and there’s no time or resources to gather labeled examples.

For example, an online store might add new product categories, and the model can organize these items based on descriptions without needing labeled examples.

ZSL is a good fit when you need flexibility and collecting labeled data is too expensive, slow, or impossible.

On the other hand, FSL works well when you can provide a small number of labeled examples (usually 1-5) and need the model to learn quickly with better accuracy.

For example, if a chatbot gets a new type of question like "How do I cancel my subscription?", showing it just a few examples of this type of query can help it classify similar ones accurately.

FSL is great for situations where you can afford to provide a few labeled examples and need the model to perform well, especially in tasks where accuracy is important, like customer support or medical imaging.
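For the chatbot example, one common few-shot technique is to average the embeddings of the few labeled examples into a class prototype and assign each new query to the nearest prototype. The sketch below assumes a toy bag-of-words `embed` function in place of a pre-trained sentence encoder; the intents and example sentences are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy bag-of-words encoder standing in for a pre-trained sentence encoder."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse {token: weight} vectors."""
    dot = sum(count * b[token] for token, count in a.items() if token in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def prototype(examples):
    """Average the embeddings of the few labeled examples of one class."""
    total = Counter()
    for text in examples:
        total.update(embed(text))
    return {token: count / len(examples) for token, count in total.items()}


# A handful of labeled examples per intent (the "few shots").
FEW_SHOT_EXAMPLES = {
    "cancel_subscription": [
        "How do I cancel my subscription?",
        "I want to stop my plan",
        "Please end my membership",
    ],
    "billing_question": [
        "Why was I charged twice?",
        "Where can I see my invoice?",
        "My card was charged the wrong amount",
    ],
}

PROTOTYPES = {intent: prototype(texts) for intent, texts in FEW_SHOT_EXAMPLES.items()}


def classify(query):
    """Assign the query to the intent with the most similar prototype."""
    q = embed(query)
    return max(PROTOTYPES, key=lambda intent: cosine(q, PROTOTYPES[intent]))


if __name__ == "__main__":
    print(classify("How can I cancel my membership?"))
```

A zero-shot variant would replace each prototype with the embedding of a written description of the intent; the few-shot version lets a handful of real examples define the class instead, which is often why it is more accurate.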

Applications of Zero-Shot Learning

ZSL is useful in many areas. Let’s look at a few of them.

Text & language processing

ZSL is widely used in text classification, allowing models to sort text into new labels they were never trained on.

For example, it can sort emails as spam or not spam based on descriptions of those categories, without needing labeled examples. This capability also benefits chatbots by helping them understand user requests without training on every possible query.
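Description-based classification can be sketched by embedding both the email and each label's description, then picking the most similar label. In this minimal example a word-count `embed` function is an assumption standing in for a pre-trained text encoder, and the label descriptions are invented; production systems would typically use a pre-trained encoder or an NLI-based approach such as the Hugging Face `zero-shot-classification` pipeline.

```python
import math
import re
from collections import Counter

# Each label is defined only by a short description; no labeled emails are used.
LABEL_DESCRIPTIONS = {
    "spam": "unsolicited message with a suspicious link asking you to claim a prize or money",
    "not_spam": "a work message about a meeting project schedule or question from a colleague",
}


def embed(text):
    """Toy bag-of-words encoder standing in for a pre-trained text encoder."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse {token: count} vectors."""
    dot = sum(count * b[token] for token, count in a.items() if token in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def classify_email(text, descriptions=LABEL_DESCRIPTIONS):
    """Pick the label whose description is most similar to the email."""
    email = embed(text)
    scores = {label: cosine(email, embed(desc)) for label, desc in descriptions.items()}
    return max(scores, key=scores.get)


if __name__ == "__main__":
    print(classify_email("Click this link to claim your prize money now"))
    print(classify_email("Can we move the project meeting to Friday"))
```

Adding a new category, such as "newsletter", would only require writing its description.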

In sentiment analysis, ZSL enables models to determine whether a review is positive or negative purely by interpreting label meanings. It also plays a role in social media moderation, identifying harmful or misleading content based on text descriptions, such as detecting misinformation labeled as “spreading false medical claims,” even if the model has never encountered such cases before.

Image & visual recognition

In image classification, ZSL allows models to recognize objects they have never seen by linking images with text descriptions. Tools like CLIP can identify unfamiliar objects, such as a “red panda,” and align images with text, making visual search engines more effective at retrieving images based on user descriptions.
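A visual search engine built on a CLIP-like model can embed its images once and then rank them against the embedding of each text query. The sketch assumes the embeddings are already computed; the file names and vectors are hypothetical.

```python
import heapq
import math


def cosine(u, v):
    """Cosine similarity between two dense vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


# Hypothetical image embeddings, precomputed once by an image encoder.
IMAGE_INDEX = {
    "red_panda_01.jpg": [0.9, 0.1, 0.3],
    "fox_02.jpg": [0.6, 0.2, 0.7],
    "bamboo_03.jpg": [0.3, 0.9, 0.1],
}


def search(query_embedding, index=IMAGE_INDEX, k=2):
    """Return the k image IDs most similar to the text query, best match first."""
    return heapq.nlargest(k, index, key=lambda image_id: cosine(query_embedding, index[image_id]))


if __name__ == "__main__":
    # Hypothetical text-encoder output for the query "a red panda on a branch".
    query = [0.85, 0.15, 0.35]
    print(search(query))
```

Because images and text share one embedding space, the same index serves any free-text query without retraining.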

ZSL is also valuable in environmental monitoring, where it can help detect changes in satellite imagery without labeled training data. For instance, it can flag possible illegal logging by recognizing areas described as “significant canopy loss in forested regions,” even if the model has never been explicitly trained on deforestation patterns.

Retail & recommendations

In retail, ZSL helps classify new products into inventory categories using only textual descriptions. A model can automatically assign new items to labels like “eco-friendly materials” even if that category was not included during training.

It also helps address the cold-start problem in recommendation systems by suggesting products or content without prior user data. Algorithms like ZESRec can recommend items from a completely new dataset without any overlap with previously seen data.
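The general idea behind content-based cold-start recommendation can be sketched as follows: new items are represented by embeddings of their descriptions, so they can be ranked for a user before anyone has interacted with them. This is a generic illustration, not the ZESRec algorithm; the item names, embeddings, and the mean-of-liked-items user profile are assumptions.

```python
import math


def cosine(u, v):
    """Cosine similarity between two dense vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def mean(vectors):
    """Component-wise average, used here as a simple user taste profile."""
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def recommend_new_items(liked_item_embeddings, new_items, k=1):
    """Rank brand-new items (no interaction history) against a user's profile."""
    profile = mean(liked_item_embeddings)
    ranked = sorted(new_items, key=lambda item: cosine(profile, new_items[item]), reverse=True)
    return ranked[:k]


# Hypothetical description embeddings of items the user already liked.
LIKED = [[1.0, 0.0, 0.2], [0.9, 0.1, 0.1]]

# Hypothetical description embeddings of newly added items nobody has rated yet.
NEW_ITEMS = {
    "bamboo_toothbrush": [0.8, 0.1, 0.2],
    "gaming_mouse": [0.1, 0.9, 0.3],
}

if __name__ == "__main__":
    print(recommend_new_items(LIKED, NEW_ITEMS, k=2))
```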

Challenges of Zero-Shot Learning

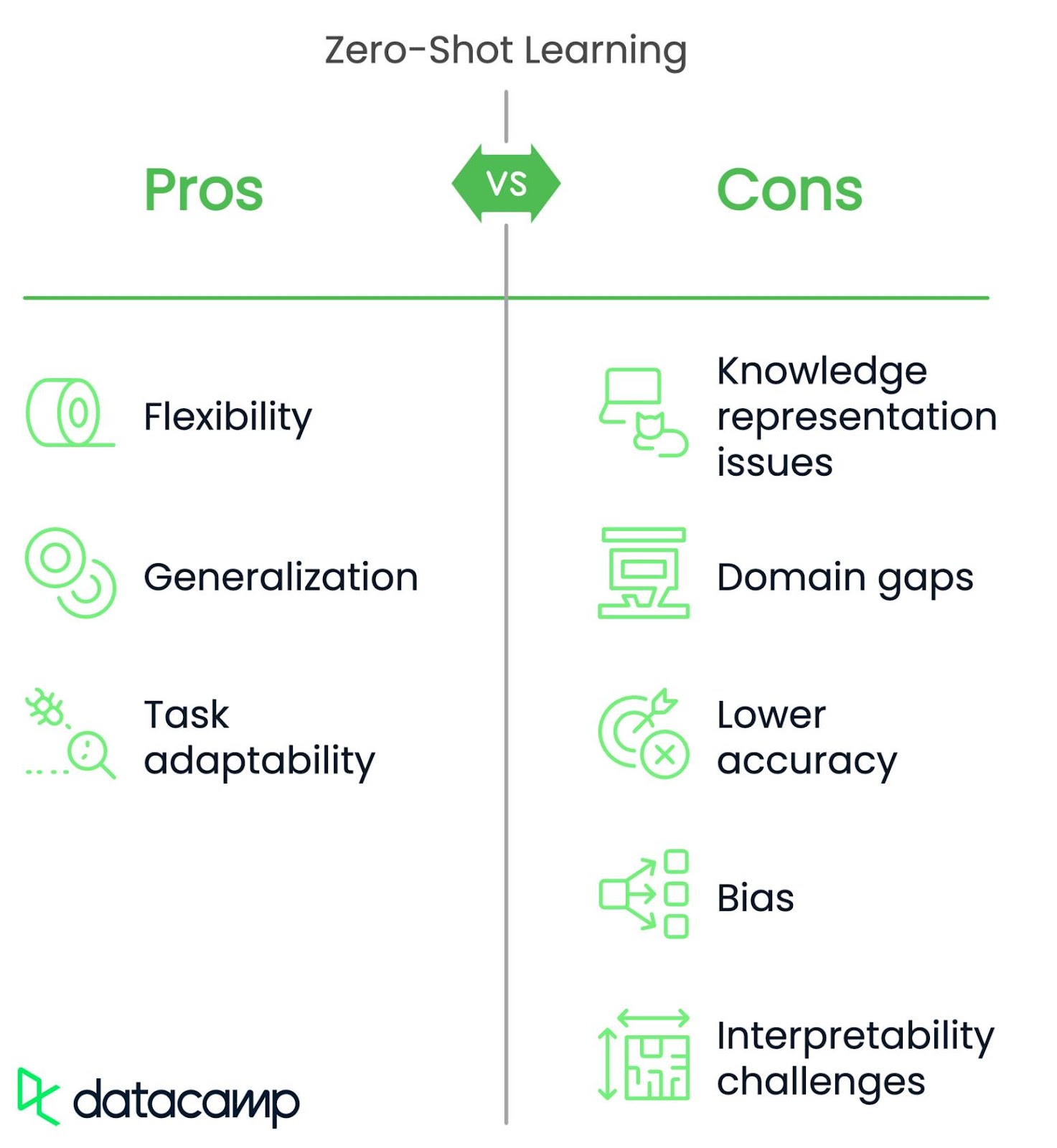

While ZSL is flexible and can generalize to new tasks, it faces several challenges.

Knowledge representation

ZSL models struggle to represent detailed or subtle differences between things. For example, a model might confuse a leopard and a cheetah because both are described as "spotted big cats," and the descriptions don’t capture the finer differences.
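The leopard/cheetah problem is easy to reproduce with attribute signatures. In this sketch (hypothetical attribute names and detector outputs), the coarse description gives both classes identical signatures, so they tie no matter what the image looks like; adding attributes that separate them, such as rosette-shaped spots for leopards and black tear marks for cheetahs, breaks the tie.

```python
def scores(predicted, signatures):
    """Negative squared distance between predicted attributes and each class signature."""
    return {
        label: -sum((p - s) ** 2 for p, s in zip(predicted, signature))
        for label, signature in signatures.items()
    }


# Coarse description: both classes are just "spotted big cats".
COARSE = {"leopard": [1, 1], "cheetah": [1, 1]}  # [spotted, big_cat]

# Finer description adds attributes that actually separate the two.
FINE = {
    "leopard": [1, 1, 1, 0],  # [spotted, big_cat, rosette_spots, tear_marks]
    "cheetah": [1, 1, 0, 1],
}

# Hypothetical attribute-detector output for a photo of a cheetah.
predicted = [0.9, 0.95, 0.1, 0.85]

if __name__ == "__main__":
    coarse = scores(predicted[:2], COARSE)  # only the coarse attributes are available
    fine = scores(predicted, FINE)
    print(coarse)
    print(max(fine, key=fine.get))
```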

Domain gaps

ZSL models may fail when the new task or data is very different from what they were trained on. For example, a model trained to recognize household objects might fail to identify medical tools because they’re too different.

Performance

ZSL is often less accurate than supervised learning (where the model is trained on labeled data) for specific tasks. One remedy is to combine ZSL with some fine-tuning on task-specific data, improving accuracy while keeping much of its flexibility.

Bias

ZSL relies on pre-trained models, and their training data might contain biases that lead to unfair predictions. Imagine a hiring model using ZSL: it could favor certain demographics if the pre-training data contains gender or racial biases. One mitigation strategy is to detect and reduce biases in the data beforehand, or to use methods like adversarial debiasing to make the model fairer.


Interpretability

It can be hard to understand how a ZSL model makes decisions, especially when it relies on complex reasoning. For example, if a model diagnoses a rare disease for which it never saw training examples, it might be unclear why it chose that diagnosis.

Scalability

As the number of new tasks or categories grows, ZSL models can become slow and inefficient. For example, a ZSL-based recommendation system might struggle to handle millions of new products across different categories. Better methods for organizing and retrieving data, such as efficient indexing or grouping similar tasks together, can solve this problem in some cases.
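Grouping similar classes can be sketched with a coarse two-level search: classes are assigned to buckets by their nearest centroid once, and each query is compared only with the classes in its nearest bucket. This is the same idea behind inverted-file (IVF) indexes in vector-search libraries such as FAISS, though the code below is a plain-Python illustration with hypothetical centroids and embeddings, not FAISS's API.

```python
import math


def cosine(u, v):
    """Cosine similarity between two dense vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def nearest(query, vectors):
    """Index of the vector most similar to the query."""
    return max(range(len(vectors)), key=lambda i: cosine(query, vectors[i]))


def build_index(class_embeddings, centroids):
    """Assign every class to the bucket of its closest centroid (done once, offline)."""
    buckets = {i: [] for i in range(len(centroids))}
    for label, embedding in class_embeddings.items():
        buckets[nearest(embedding, centroids)].append(label)
    return buckets


def search(query, class_embeddings, centroids, buckets):
    """Compare the query only with classes in its nearest bucket."""
    # Fall back to a full scan if the chosen bucket happens to be empty.
    candidates = buckets[nearest(query, centroids)] or list(class_embeddings)
    best = max(candidates, key=lambda label: cosine(query, class_embeddings[label]))
    return best, len(candidates)


# Hypothetical class embeddings and group centroids (normally learned, e.g., with k-means).
CLASS_EMBEDDINGS = {
    "zebra": [0.9, 0.1, 0.1],
    "tiger": [0.8, 0.0, 0.4],
    "hammer": [0.1, 0.9, 0.0],
    "wrench": [0.0, 0.8, 0.3],
    "apple": [0.1, 0.0, 0.9],
    "bread": [0.2, 0.2, 0.8],
}
CENTROIDS = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]  # animals, tools, food

BUCKETS = build_index(CLASS_EMBEDDINGS, CENTROIDS)

if __name__ == "__main__":
    best, compared = search([0.85, 0.1, 0.15], CLASS_EMBEDDINGS, CENTROIDS, BUCKETS)
    print(best, f"compared with {compared} of {len(CLASS_EMBEDDINGS)} classes")
```

Here the query is compared with 2 classes instead of 6; the trade-off is that a true match sitting in a different bucket can be missed.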

Conclusion

Zero-shot learning reduces the need for large labeled datasets, saves time and money, and works well in many areas, such as sorting text, recognizing images, diagnosing health problems, and giving personalized recommendations.

While ZSL isn’t perfect (it sometimes struggles with subtle distinctions and can be less accurate than supervised models), it’s a great fit for scenarios where flexibility is key and labeled data is too expensive or scarce.

Ana Rojo Echeburúa is an AI and data specialist with a PhD in Applied Mathematics. She loves turning data into actionable insights and has extensive experience leading technical teams. Ana enjoys working closely with clients to solve their business problems and create innovative AI solutions. Known for her problem-solving skills and clear communication, she is passionate about AI, especially generative AI. Ana is dedicated to continuous learning and ethical AI development, as well as simplifying complex problems and explaining technology in accessible ways.