
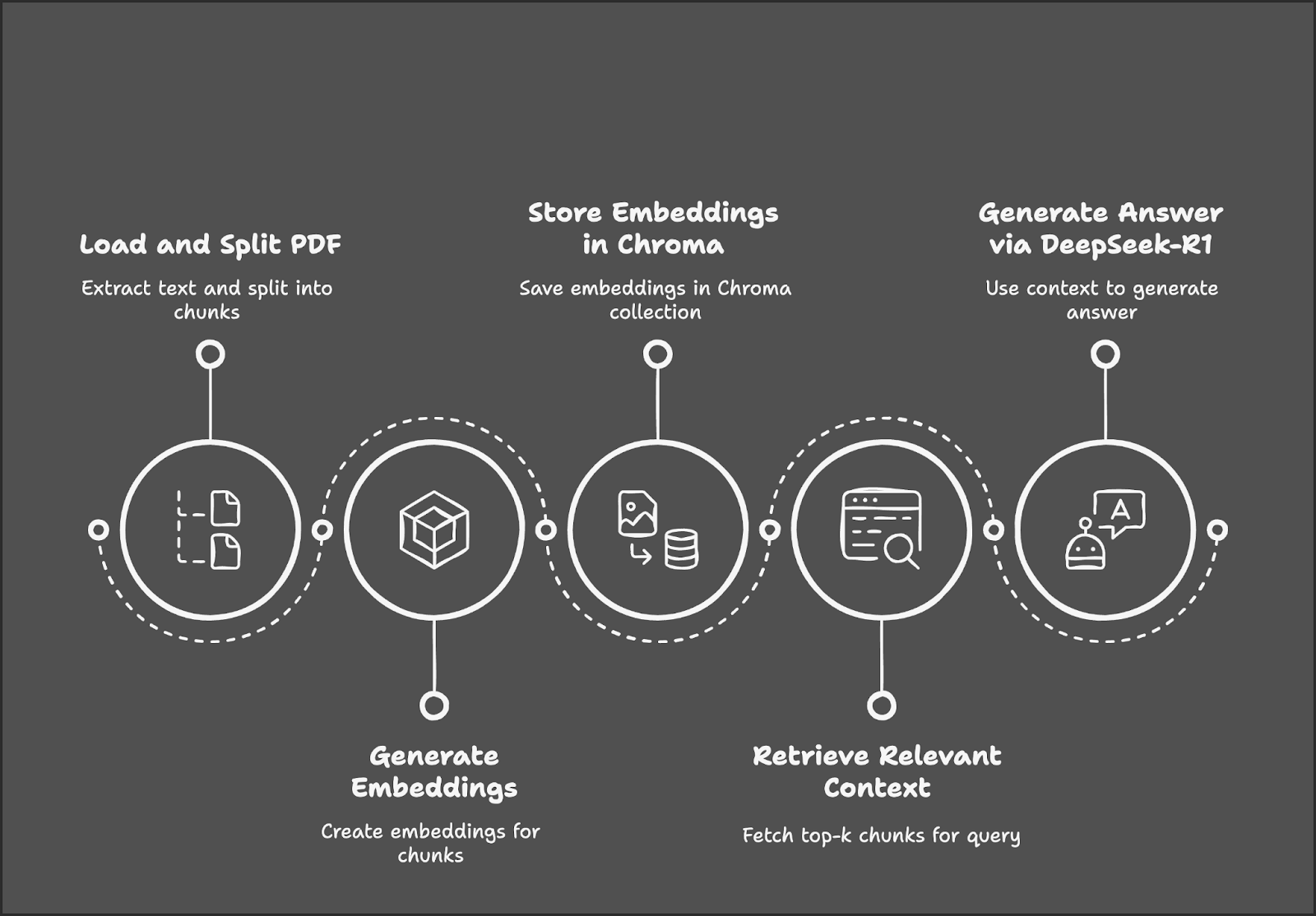

Retrieval-augmented generation (RAG) has emerged as a powerful approach for building AI applications that generate precise, grounded, and contextually relevant answers by retrieving and synthesizing knowledge from external sources.

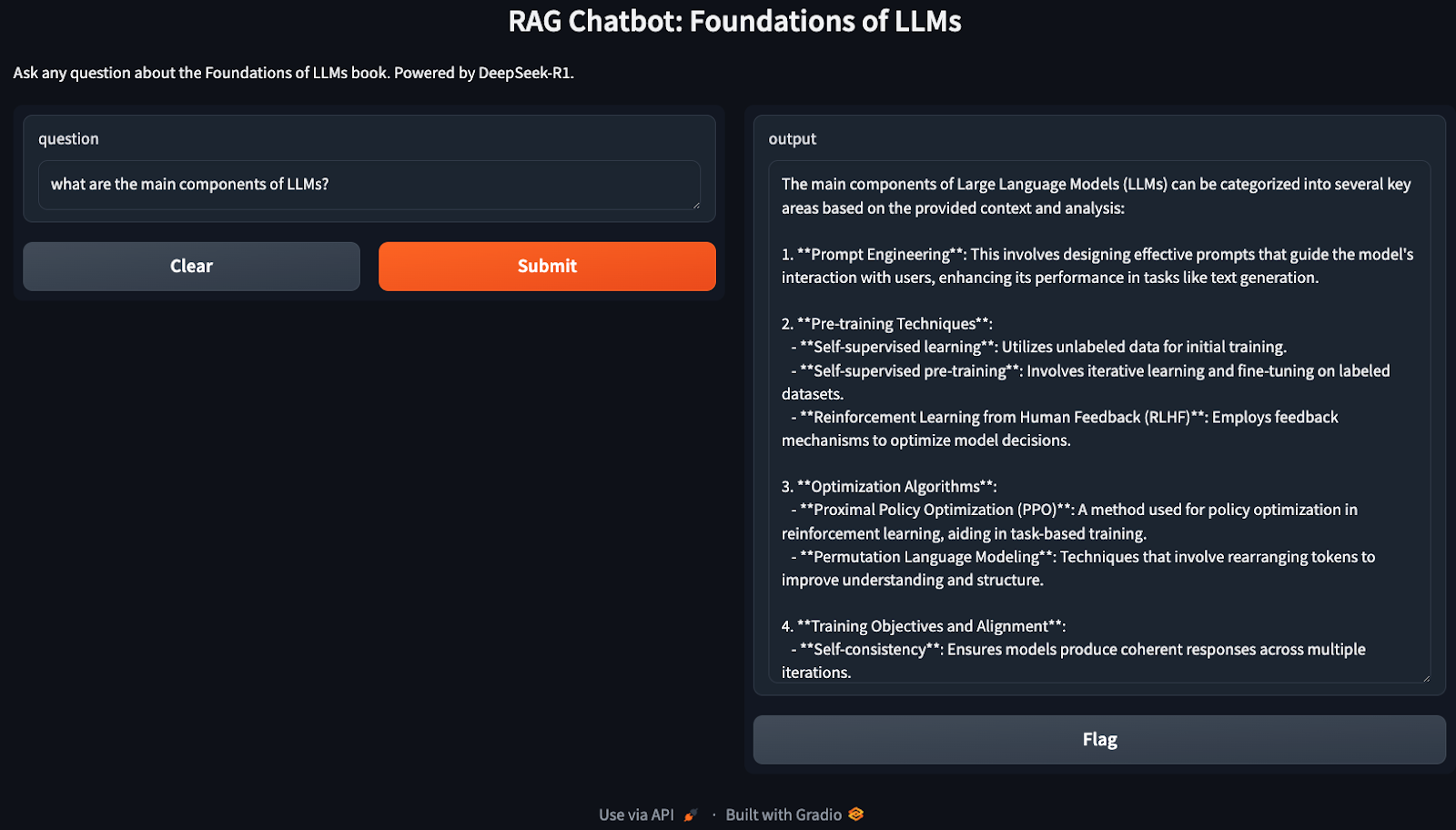

In this tutorial, I’ll explain step-by-step how to build a RAG-based chatbot using DeepSeek-R1 and a book on the foundations of LLMs as the knowledge base. By the end of this tutorial, you will be able to create a local RAG application capable of answering questions from the book and interacting with users via a Gradio interface.


Why Use DeepSeek-R1 With RAG?

DeepSeek-R1 is an ideal fit for RAG-based systems due to its optimized performance, advanced vector search capabilities, and flexibility across different environments, from local setups to scalable deployments. Here are some reasons why it’s effective:

- High-performance retrieval: DeepSeek-R1 handles large document collections with low latency.

- Fine-grained relevance ranking: It ensures accurate retrieval of passages by computing semantic similarity.

- Cost and privacy benefits: You can run DeepSeek-R1 locally to avoid API fees and keep sensitive data secure.

- Easy integration: It integrates with vector databases like Chroma.

- Offline capabilities: With DeepSeek-R1 you can build retrieval systems that work even without internet access once the model is downloaded.

Overview: Building a RAG Chatbot With DeepSeek-R1

Our demo project focuses on building a RAG chatbot using DeepSeek-R1 and Gradio.

The process begins with loading and splitting a PDF into text chunks, followed by generating embeddings for those chunks. These embeddings are stored in a Chroma database for efficient retrieval. When a user submits a query, the system retrieves the most relevant text chunks and uses DeepSeek-R1 to generate an answer based on the retrieved context.

Step 1: Prerequisites

Before we start, let’s ensure that we have the following tools and libraries installed:

- Python 3.8+

- LangChain

- ChromaDB

- Gradio

Run the following commands to install the necessary dependencies:

!pip install langchain chromadb gradio ollama pymupdf

!pip install -U langchain-community

Once the above dependencies are installed, run the following import commands:

import ollama

import re

import gradio as gr

from concurrent.futures import ThreadPoolExecutor

from langchain.text_splitter import RecursiveCharacterTextSplitter

from langchain_community.document_loaders import PyMuPDFLoader

from langchain_community.embeddings import OllamaEmbeddings

from chromadb.config import Settings

from chromadb import Client

from langchain_community.vectorstores import Chroma

Step 2: Load the PDF Using PyMuPDFLoader

We will use LangChain’s PyMuPDFLoader to extract the text from the PDF version of the book Foundations of LLMs by Tong Xiao and Jingbo Zhu. This is a math-heavy book, so our chatbot should be able to explain the math behind LLMs well. You can find the book on arXiv.

# Load the document using PyMuPDFLoader

loader = PyMuPDFLoader("/path/to/Foundations_of_llms.pdf")

documents = loader.load()

Once the document is loaded, we can start dividing the text into chunks for further processing.

Step 3: Split the Document Into Smaller Chunks

We’ll split the extracted text into smaller, overlapping chunks for better context retrieval. You can vary the chunk size and the chunk overlap to suit your system through the chunk_size and chunk_overlap arguments of RecursiveCharacterTextSplitter.

# Split the document into smaller chunks

text_splitter = RecursiveCharacterTextSplitter(chunk_size=1000, chunk_overlap=200)

chunks = text_splitter.split_documents(documents)

Now we have chunks of extracted text that are ready to be converted into embeddings.
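To make the effect of chunk_size and chunk_overlap concrete, here is a minimal sketch of a fixed-width sliding-window splitter. It is a simplified stand-in, not the algorithm RecursiveCharacterTextSplitter uses (which also tries to break on separators such as paragraphs and sentences). The function name sliding_window_chunks and the sample text are hypothetical.

```python
def sliding_window_chunks(text, chunk_size, chunk_overlap):
    """Split text into fixed-width chunks; consecutive chunks share chunk_overlap characters."""
    if chunk_overlap >= chunk_size:
        raise ValueError("chunk_overlap must be smaller than chunk_size")
    step = chunk_size - chunk_overlap  # how far the window advances each time
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the window already reached the end of the text
    return chunks


# Made-up sample text, short enough to inspect by eye
sample = "abcdefghijklmnopqrstuvwxyz"
pieces = sliding_window_chunks(sample, chunk_size=10, chunk_overlap=4)
for piece in pieces:
    print(piece)
```

With the tutorial's chunk_size=1000 and chunk_overlap=200, a window of this kind would advance 800 characters per chunk. The recursive splitter behaves similarly but adjusts chunk boundaries to the separators it finds.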

Step 4: Generate Embeddings Using DeepSeek-R1

We’ll use Ollama Embeddings based on DeepSeek-R1 to generate the document embeddings. Depending on the size of the document, embedding generation can take time, so it's preferable to parallelize it for faster processing.

Note: model="deepseek-r1" by default considers the 7B parameter model. You can change it as required to 8B, 14B, 32B, 70B, or 671B. Replace X in the following model name with model size: model="deepseek-r1:X"

# Initialize Ollama embeddings using DeepSeek-R1
embedding_function = OllamaEmbeddings(model="deepseek-r1")

# Parallelize embedding generation
def generate_embedding(chunk):
    return embedding_function.embed_query(chunk.page_content)

with ThreadPoolExecutor() as executor:
    embeddings = list(executor.map(generate_embedding, chunks))

The above code initializes DeepSeek-R1 via Ollama to generate high-dimensional semantic embeddings, which will later be used for similarity-based document retrieval.

The generate_embedding() function takes a document chunk’s text and generates its embedding. Finally, ThreadPoolExecutor() applies generate_embedding() to each chunk concurrently, collecting embeddings into a list for faster processing compared to sequential execution.
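The parallel step relies on executor.map() returning results in the same order as its inputs, which keeps embeddings[idx] aligned with chunks[idx] later on. The sketch below shows that behavior with a stand-in function. fake_embed is hypothetical and only mimics a slow embedding call; it does not contact Ollama.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_embed(text):
    """Hypothetical stand-in for an embedding call: sleeps, then returns a tiny vector."""
    time.sleep(0.05 / len(text))  # shorter texts sleep longer, so tasks finish out of order
    return [float(len(text)), float(text.count(" "))]


texts = ["a", "two words", "three short words"]

with ThreadPoolExecutor(max_workers=3) as executor:
    vectors = list(executor.map(fake_embed, texts))

# Results come back in input order, regardless of which task finished first
for text, vector in zip(texts, vectors):
    print(repr(text), vector)
```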

Step 5: Store Embeddings in Chroma Vector Store

We’ll store the embeddings and corresponding text chunks in a high-performance vector database, Chroma.

# Initialize Chroma client and create/reset the collection
client = Client(Settings())

# Delete the existing collection (if any); Chroma raises an error when it does not exist yet
try:
    client.delete_collection(name="foundations_of_llms")
except Exception:
    pass

collection = client.create_collection(name="foundations_of_llms")

# Add documents and embeddings to Chroma
for idx, chunk in enumerate(chunks):
    collection.add(
        documents=[chunk.page_content],
        metadatas=[{'id': idx}],
        embeddings=[embeddings[idx]],
        ids=[str(idx)]  # Ensure IDs are strings
    )

The code above stores the embeddings in the following steps:

1. Initialize Chroma client and reset collection:

- Client(Settings()) initializes the Chroma client to manage the vector store.

- Delete any existing collection with the same name using client.delete_collection() to avoid running into errors.

- Finally, use client.create_collection() to create a new collection to store the document chunks and their embeddings.

2. Iterate through document chunks:

- Iterate over the document chunks with enumerate(); the index idx locates the matching embedding and becomes the chunk's unique string ID.

3. Add chunks and embeddings to Chroma:

- For each chunk, collection.add() stores:

  - The document content (chunk.page_content)

  - Metadata ({'id': idx}) to reference the chunk

  - Its corresponding embedding vector for retrieval

  - A unique ID string to identify the entry

This setup ensures that each document chunk is indexed correctly for efficient vector-based retrieval.
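Because collection.add() takes lists, the per-chunk loop could also be expressed as a single call with parallel lists. The sketch below only builds those lists, so it runs without Chroma installed. build_add_payload is a hypothetical helper, and the sample texts and vectors are made up.

```python
def build_add_payload(texts, vectors):
    """Build parallel lists matching the keyword arguments used with collection.add()."""
    if len(texts) != len(vectors):
        raise ValueError("each text chunk needs exactly one embedding")
    return {
        "documents": list(texts),
        "metadatas": [{"id": idx} for idx in range(len(texts))],
        "embeddings": list(vectors),
        "ids": [str(idx) for idx in range(len(texts))],  # IDs must be strings
    }


# Made-up sample data standing in for chunk texts and their embeddings
sample_texts = ["Attention is a weighted sum.", "Tokens are mapped to vectors."]
sample_vectors = [[0.1, 0.9], [0.8, 0.2]]

payload = build_add_payload(sample_texts, sample_vectors)
print(payload["ids"])

# With a real Chroma collection, the payload could then be passed in one call:
# collection.add(**payload)
```

For very large documents, it may be necessary to split the lists into several smaller calls.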

Step 6: Initialize the Retriever

We’ll initialize the Chroma retriever, ensuring it uses the same DeepSeek-R1 embeddings for queries.

# Initialize retriever using Ollama embeddings for queries

retriever = Chroma(collection_name="foundations_of_llms", client=client, embedding_function=embedding_function).as_retriever()

The Chroma retriever connects to the "foundations_of_llms" collection and uses DeepSeek-R1 embeddings via Ollama to embed user queries. It retrieves the most relevant document chunks based on vector similarity for context-aware responses.
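Retrieval by vector similarity boils down to scoring every stored vector against the query vector and keeping the best matches. A minimal pure-Python sketch follows, using cosine similarity and made-up 3-dimensional vectors. Chroma's own distance metric and index are configured separately and may differ; cosine_similarity and top_k are hypothetical helpers.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query_vector, stored, k=2):
    """Return the k (text, score) pairs most similar to the query, best first."""
    scored = [(text, cosine_similarity(query_vector, vec)) for text, vec in stored]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]


# Made-up toy vectors; real embeddings have far more dimensions
stored = [
    ("chunk about attention", [1.0, 0.0, 0.0]),
    ("chunk about tokenization", [0.0, 1.0, 0.0]),
    ("chunk about self-attention", [0.9, 0.1, 0.0]),
]

results = top_k([1.0, 0.2, 0.0], stored, k=2)
for text, score in results:
    print(f"{score:.3f}  {text}")
```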

Step 7: Define the RAG Pipeline

Next, we’ll retrieve the most relevant chunks of text and format them for DeepSeek-R1 to generate answers.

def retrieve_context(question):
    # Retrieve relevant documents
    results = retriever.invoke(question)
    # Combine the retrieved content
    context = "\n\n".join([doc.page_content for doc in results])
    return context

The retrieve_context function embeds the user query using DeepSeek-R1 and retrieves the most relevant document chunks (four by default) via the Chroma retriever. It then combines the content of the retrieved chunks into a single context string for further processing.

Step 8: Query DeepSeek-R1 for Contextual Answers

Now we have the question and the retrieved context. Next, we send both to DeepSeek-R1 via Ollama to get the final answer.

def query_deepseek(question, context):
    # Format the input prompt
    formatted_prompt = f"Question: {question}\n\nContext: {context}"
    # Query DeepSeek-R1 using the Ollama chat API
    response = ollama.chat(
        model="deepseek-r1",
        messages=[{'role': 'user', 'content': formatted_prompt}]
    )
    # Remove the model's <think>...</think> reasoning block and return the answer
    response_content = response['message']['content']
    final_answer = re.sub(r'<think>.*?</think>', '', response_content, flags=re.DOTALL).strip()
    return final_answer

To get the final answer, we start by combining the user question and the retrieved context into a structured prompt. We then send this prompt to the DeepSeek-R1 model via Ollama to receive a response. To make the final output presentable, we strip the <think>...</think> reasoning block and return the final answer.
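DeepSeek-R1 typically writes its reasoning inside <think>...</think> tags before the answer, which is why the response is cleaned with a regular expression. The sketch below applies the same pattern to a made-up response string, so it runs without a model. strip_reasoning is a hypothetical helper name.

```python
import re


def strip_reasoning(response_text):
    """Remove <think>...</think> blocks and surrounding whitespace from a model response."""
    # re.DOTALL lets '.' match newlines, since the reasoning usually spans several lines
    return re.sub(r"<think>.*?</think>", "", response_text, flags=re.DOTALL).strip()


# Made-up response in the shape described above
raw = (
    "<think>\nThe user asks about attention.\nRecall the formula.\n</think>\n\n"
    "Attention weights sum to 1."
)

print(strip_reasoning(raw))
```

The non-greedy .*? matters when a response contains more than one reasoning block: each block is removed separately instead of everything between the first opening tag and the last closing tag.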

Step 9: Build the Gradio Interface

We have our RAG pipeline in place. Now, we’ll use Gradio to create an interactive interface where users can ask questions about the knowledge base (Foundations of LLMs in this case).

def ask_question(question):
    # Retrieve context and generate an answer using RAG
    context = retrieve_context(question)
    answer = query_deepseek(question, context)
    return answer

# Set up the Gradio interface
interface = gr.Interface(
    fn=ask_question,
    inputs="text",
    outputs="text",
    title="RAG Chatbot: Foundations of LLMs",
    description="Ask any question about the Foundations of LLMs book. Powered by DeepSeek-R1."
)

interface.launch()

The ask_question() function retrieves relevant context using the Chroma retriever and generates the final answer via DeepSeek-R1. The Gradio interface, built with gr.Interface(), enables users to ask questions interactively and receive contextually accurate, grounded answers.

Congrats! You now have a locally running chatbot ready to discuss anything related to LLMs.

Optimizations

The above demo covers a very basic implementation of RAG, which can be optimized further for efficiency. Here are a few things to try:

- Chunk size adjustment: Adjust the chunk_size and chunk_overlap parameters to balance performance and retrieval quality.

- Smaller model versions: If DeepSeek-R1 is too resource-heavy, you can use a different version (deepseek-r1:7b, deepseek-r1:8b, or deepseek-r1:14b) via Ollama.

- Scale using Faiss: For larger documents, consider integrating Faiss for faster retrieval.

- Batch processing: If embedding generation is slow, batch the chunks to improve efficiency.
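As one way to approach the batch processing idea, the chunks can be grouped into fixed-size batches so that each embedding request covers several texts. The batched helper below is a hypothetical sketch in plain Python, with made-up chunk texts. Passing each batch to an embed_documents-style call is an assumption about how it could be wired in, not something the tutorial code does.

```python
def batched(items, batch_size):
    """Yield consecutive slices of items, each with at most batch_size elements."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]


# Made-up chunk texts standing in for chunk.page_content values
chunk_texts = [f"chunk {i}" for i in range(7)]

batches = list(batched(chunk_texts, batch_size=3))
for batch in batches:
    print(len(batch), batch)

# Assumed wiring (not run here): embed each batch in a single request, e.g.
# vectors = [v for batch in batches for v in embedding_function.embed_documents(batch)]
```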

Conclusion

In this tutorial, we built a RAG-based local chatbot using DeepSeek-R1 and Chroma for retrieval, which ensures accurate, contextually rich answers to questions based on a large knowledge base.


I am a Google Developers Expert in ML(Gen AI), a Kaggle 3x Expert, and a Women Techmakers Ambassador with 3+ years of experience in tech. I co-founded a health-tech startup in 2020 and am pursuing a master's in computer science at Georgia Tech, specializing in machine learning.