
Change Data Capture (CDC) helps keep data pipelines efficient by capturing only inserts, updates, and deletes rather than reloading entire datasets. This reduces processing time and system load.

CDC is also essential in real-time data streaming, integrating with platforms like Apache Kafka to enable event-driven architectures.

Let’s dive into CDC and how it can improve your data projects!

What Is Change Data Capture (CDC)?

Change Data Capture is an approach that detects, captures, and forwards only the modified data from a source system into downstream systems such as data warehouses, dashboards, or streaming applications.

Rather than reprocessing entire datasets, CDC focuses solely on incremental changes, ensuring that target systems always have access to the most current data available.
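To make the contrast with a full reload concrete, here is a minimal Python sketch. The event shape (`op`, `key`, `row`) and the function name `apply_changes` are assumptions made for illustration; they are not a format defined by any particular CDC tool.

```python
from typing import Any

# Hypothetical change-event shape: {"op": "insert" | "update" | "delete", "key": ..., "row": {...}}
ChangeEvent = dict[str, Any]


def apply_changes(target: dict[int, dict], events: list[ChangeEvent]) -> dict[int, dict]:
    """Apply only the captured changes, in order, to a copy of the target."""
    result = dict(target)
    for event in events:
        if event["op"] in ("insert", "update"):
            result[event["key"]] = event["row"]
        elif event["op"] == "delete":
            result.pop(event["key"], None)
        else:
            raise ValueError(f"unknown operation: {event['op']}")
    return result


# The target holds three rows, but only two change events need to be processed.
target = {1: {"name": "Ada"}, 2: {"name": "Grace"}, 3: {"name": "Linus"}}
events = [
    {"op": "update", "key": 2, "row": {"name": "Grace H."}},
    {"op": "delete", "key": 3, "row": None},
]
synced = apply_changes(target, events)
print(synced)
```

The work done is proportional to the number of changes, not to the size of the dataset, which is the core idea behind CDC.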

In one real-time analytics project, I faced significant reporting delays due to batch updates. By switching to a log-based CDC solution, I dramatically reduced the data freshness lag from hours to seconds, making the analytics dashboard truly real-time.

With this personal experience underscoring CDC’s practical value, let’s now move on to the different methods used to implement CDC.

Methods for Implementing Change Data Capture

Before diving into specific techniques, it’s important to recognize that no single approach fits every scenario. Below are four common CDC methods, each with its own advantages and trade-offs.

Log-based CDC

Log-based CDC reads a database’s transaction log (the write-ahead log, or WAL, in PostgreSQL; the binary log in MySQL) to identify changes shortly after they are committed. This method is highly efficient because it operates at a low level and does not query the source tables, so it captures changes with minimal disruption to the production system.

- Advantages: Low system overhead and near‑real‑time performance make it ideal for high-volume environments.

- Disadvantages: It requires privileged access to transaction logs and depends on proper log retention settings.

For example, in PostgreSQL, you can use logical decoding to read changes from the WAL:

    -- Enable logical decoding (takes effect only after a server restart)
    ALTER SYSTEM SET wal_level = logical;

    -- Create a logical replication slot. test_decoding emits text that SQL can read;
    -- pgoutput is a binary format meant for subscribers and tools such as Debezium.
    SELECT pg_create_logical_replication_slot('cdc_slot', 'test_decoding');

    -- Fetch and consume the changes recorded since the slot was last read
    SELECT * FROM pg_logical_slot_get_changes('cdc_slot', NULL, NULL);

This approach allows a CDC tool (such as Debezium or AWS DMS, which we will explore later) to continuously stream database changes to a downstream system without relying on scheduled queries.
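The mechanics of reading a log from a remembered position can be sketched in a few lines of Python. This is a toy, in-memory stand-in and not how a real WAL is read: `LogEntry`, `LogReader`, and the integer `lsn` field are hypothetical names chosen for illustration, and the log is assumed to be ordered by `lsn`.

```python
from dataclasses import dataclass, field


@dataclass
class LogEntry:
    lsn: int     # log sequence number: position of the entry in the log
    op: str      # "insert", "update", or "delete"
    table: str
    data: dict


@dataclass
class LogReader:
    """Toy reader that remembers how far into the log it has read."""
    confirmed_lsn: int = 0
    delivered: list = field(default_factory=list)

    def read_new(self, log: list[LogEntry]) -> list[LogEntry]:
        # Only entries past the confirmed position are new to this reader.
        new_entries = [e for e in log if e.lsn > self.confirmed_lsn]
        for entry in new_entries:
            self.delivered.append(entry)
            self.confirmed_lsn = entry.lsn  # advance only after delivery
        return new_entries


log = [
    LogEntry(1, "insert", "orders", {"order_id": 123, "status": "new"}),
    LogEntry(2, "update", "orders", {"order_id": 123, "status": "shipped"}),
]
reader = LogReader()
first_batch = reader.read_new(log)

# A later write appends to the log; the next read returns only that entry.
log.append(LogEntry(3, "delete", "orders", {"order_id": 123}))
second_batch = reader.read_new(log)
```

Because the reader never queries the tables themselves, the cost of capture is independent of table size. This is also why log retention matters: entries removed before the reader reaches them are lost.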

Trigger-based CDC

Trigger-based CDC attaches database triggers to source tables so that every insert, update, or delete is recorded automatically as part of the statement that made the change.

- Advantages: Straightforward to implement on databases that support triggers and ensures immediate change capture.

- Disadvantages: It can add extra load to the database and may complicate schema changes if not managed carefully.

Trigger-based CDC offers immediacy, but at the potential cost of added overhead, so it is most suitable in environments with moderate transaction volumes.

In PostgreSQL, you can create a trigger to log changes from a customers table into a separate customers_audit table:

    -- Create an audit table to store changes
    CREATE TABLE customers_audit (
        audit_id SERIAL PRIMARY KEY,
        operation_type TEXT,
        customer_id INT,
        customer_name TEXT,
        modified_at TIMESTAMP DEFAULT now()
    );

    -- Create a function to insert change records
    CREATE OR REPLACE FUNCTION capture_customer_changes()
    RETURNS TRIGGER AS $$
    BEGIN
        IF TG_OP = 'INSERT' THEN
            INSERT INTO customers_audit (operation_type, customer_id, customer_name)
            VALUES ('INSERT', NEW.id, NEW.name);
        ELSIF TG_OP = 'UPDATE' THEN
            INSERT INTO customers_audit (operation_type, customer_id, customer_name)
            VALUES ('UPDATE', NEW.id, NEW.name);
        ELSIF TG_OP = 'DELETE' THEN
            INSERT INTO customers_audit (operation_type, customer_id, customer_name)
            VALUES ('DELETE', OLD.id, OLD.name);
        END IF;
        RETURN NULL; -- The return value of an AFTER row trigger is ignored
    END;
    $$ LANGUAGE plpgsql;

    -- Attach the trigger to the customers table (EXECUTE FUNCTION requires PostgreSQL 11+)
    CREATE TRIGGER customer_changes_trigger
    AFTER INSERT OR UPDATE OR DELETE ON customers
    FOR EACH ROW EXECUTE FUNCTION capture_customer_changes();

This trigger ensures that every INSERT, UPDATE, or DELETE on the customers table is logged into customers_audit in real time.
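If you want to try the same pattern without a PostgreSQL server, the sketch below reproduces it with Python's built-in `sqlite3` module. It is an approximation under stated assumptions: SQLite has no `TG_OP`, so it uses one trigger per event type, and the trigger names (`customers_ins`, `customers_upd`, `customers_del`) are made up for this example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
CREATE TABLE customers_audit (
    audit_id INTEGER PRIMARY KEY AUTOINCREMENT,
    operation_type TEXT,
    customer_id INTEGER,
    customer_name TEXT
);
-- SQLite needs a separate trigger for each event type.
CREATE TRIGGER customers_ins AFTER INSERT ON customers BEGIN
    INSERT INTO customers_audit (operation_type, customer_id, customer_name)
    VALUES ('INSERT', NEW.id, NEW.name);
END;
CREATE TRIGGER customers_upd AFTER UPDATE ON customers BEGIN
    INSERT INTO customers_audit (operation_type, customer_id, customer_name)
    VALUES ('UPDATE', NEW.id, NEW.name);
END;
CREATE TRIGGER customers_del AFTER DELETE ON customers BEGIN
    INSERT INTO customers_audit (operation_type, customer_id, customer_name)
    VALUES ('DELETE', OLD.id, OLD.name);
END;
""")

# Each write to customers fires the matching trigger.
conn.execute("INSERT INTO customers (id, name) VALUES (1, 'Ada')")
conn.execute("UPDATE customers SET name = 'Ada L.' WHERE id = 1")
conn.execute("DELETE FROM customers WHERE id = 1")

audit_rows = conn.execute(
    "SELECT operation_type, customer_id, customer_name "
    "FROM customers_audit ORDER BY audit_id"
).fetchall()
print(audit_rows)
```

Every write to `customers` now costs an extra write to `customers_audit`, which is the overhead the disadvantages above refer to.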

Polling-based CDC

Polling-based CDC periodically queries the source database to check for changes based on a timestamp or version column. While this method avoids the need for direct access to transaction logs or triggers, it can introduce latency because changes are only detected at fixed intervals.

- Advantages: Simple to implement when log access or triggers are unavailable.

- Disadvantages: It can delay the capture of changes and increase load if polling is too frequent.

This approach works well in circumstances where real-time access to logs is unavailable, though the trade-off is a slight delay in detecting changes.

Imagine a products table with a version_number column that increments on each update:

    SELECT *
    FROM products
    WHERE version_number > 1050
    ORDER BY version_number ASC;

Here, 1050 is the last processed version number from the previous polling cycle. This ensures that only new changes are fetched without relying on timestamps, making it more robust when system clocks are unreliable.
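A polling cycle built around that query might look like the following sketch, which uses `sqlite3` so it runs anywhere. The function name `poll_changes` and the sample rows are hypothetical, and the sketch assumes version numbers come from a single increasing counter shared by the whole table.

```python
import sqlite3


def poll_changes(conn: sqlite3.Connection, last_version: int) -> tuple[list[tuple], int]:
    """Return rows changed since last_version and the new high-water mark."""
    rows = conn.execute(
        "SELECT id, name, version_number FROM products "
        "WHERE version_number > ? ORDER BY version_number ASC",
        (last_version,),
    ).fetchall()
    # Advance the mark only if something was returned.
    new_version = rows[-1][2] if rows else last_version
    return rows, new_version


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE products (id INTEGER PRIMARY KEY, name TEXT, version_number INTEGER)")
conn.executemany(
    "INSERT INTO products VALUES (?, ?, ?)",
    [(1, "keyboard", 1049), (2, "mouse", 1050), (3, "monitor", 1051), (4, "webcam", 1052)],
)

changed, mark = poll_changes(conn, 1050)        # picks up versions 1051 and 1052
unchanged, same_mark = poll_changes(conn, mark)  # nothing new on the next cycle
```

In a real job the mark would be stored durably between runs, so that a restart resumes from the last processed version instead of from zero.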

Timestamp-based CDC

Timestamp-based CDC relies on a dedicated column that records the last modified time for each record. By comparing these timestamps, the system identifies records that have changed since the previous check.

This method is similar to polling-based CDC but is more structured, as it requires an explicit mechanism to track changes:

- Polling-based CDC is a broader approach that periodically queries for changes using any identifiable pattern (timestamps, version numbers, or other indicators).

- Timestamp-based CDC depends on a timestamp column that must be accurately maintained in the source database.

While it is easy to implement when the database automatically updates timestamps, it depends on the consistency of system clocks and the reliability of timestamp updates.

- Advantages: Simple approach when systems automatically update timestamps.

- Disadvantages: Accuracy depends on consistent clock synchronization and reliable timestamp updates.

Assume we have an orders table with a last_modified column that updates whenever a row is inserted or modified. The following SQL query fetches all changes since the last check:

    SELECT *
    FROM orders
    WHERE last_modified > '2025-02-25 12:00:00'
    ORDER BY last_modified ASC;

This method works well when the database maintains the timestamp automatically on every write, for example with MySQL’s ON UPDATE CURRENT_TIMESTAMP or a BEFORE UPDATE trigger in PostgreSQL. A column default such as PostgreSQL’s DEFAULT now() only sets the value on insert, so on its own it will not reflect later updates.
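One limitation worth seeing in code is that a physically deleted row has no timestamp left to compare. A common workaround is a soft delete, sketched below with `sqlite3`. The `deleted_at` column, the `changes_since` function, and the sample data are assumptions made for this example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders ("
    "order_id INTEGER PRIMARY KEY, status TEXT, "
    "last_modified TEXT, deleted_at TEXT)"
)
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?, ?)",
    [
        (1, "shipped", "2025-02-25 11:30:00", None),
        (2, "paid", "2025-02-25 12:15:00", None),
        # Soft delete: the row stays in place and both timestamps are set.
        (3, "cancelled", "2025-02-25 12:40:00", "2025-02-25 12:40:00"),
    ],
)


def changes_since(conn: sqlite3.Connection, watermark: str):
    """Split rows modified after the watermark into upserts and deletes."""
    rows = conn.execute(
        "SELECT order_id, status, last_modified, deleted_at FROM orders "
        "WHERE last_modified > ? ORDER BY last_modified ASC",
        (watermark,),
    ).fetchall()
    upserts = [r[0] for r in rows if r[3] is None]
    deletes = [r[0] for r in rows if r[3] is not None]
    new_watermark = rows[-1][2] if rows else watermark
    return upserts, deletes, new_watermark


upserts, deletes, watermark = changes_since(conn, "2025-02-25 12:00:00")
```

The timestamps are stored as ISO-formatted text here, which sorts correctly as strings; a production schema would normally use a native timestamp type.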

CDC methods comparison table

Here’s a comparison table highlighting the key differences between the four Change Data Capture (CDC) methods:

| Feature | Log-based CDC | Trigger-based CDC | Polling-based CDC | Timestamp-based CDC |
| --- | --- | --- | --- | --- |
| How it works | Reads database transaction logs (WAL, binlog, etc.) to capture changes in real time. | Uses database triggers to log changes in an audit table. | Periodically queries for changes using a version number or other criteria. | Compares timestamps in a column to detect changes. |
| Latency | Near real-time (low latency). | Immediate (triggers execute instantly). | Scheduled intervals (can introduce delays). | Depends on polling frequency (low to moderate latency). |
| System overhead | Low (does not require querying tables). | High (triggers run on every change). | Moderate (depends on polling frequency). | Low to moderate (relies on timestamps). |
| Implementation complexity | High (requires access to transaction logs and proper retention). | Medium to high (requires creating triggers and maintaining an audit table). | Low (relies on simple SQL queries). | Low (simple if timestamps are automatically managed). |
| Access requirements | Privileged access to transaction logs. | Requires DDL access to create triggers. | No special access needed (standard SQL). | No special access needed (standard SQL). |
| Supports deletes? | Yes (captured from logs). | Yes (if logged in the audit table). | Needs extra tracking (e.g., a separate delete table). | Only if soft deletes (deleted_at) are used. |
| Best use case | High-volume, real-time replication where minimal database load is crucial. | Small to medium workloads that need instant change capture. | When log-based CDC and triggers are unavailable, but periodic updates are acceptable. | When timestamps are automatically updated and frequent polling is feasible. |
| Common tools | Debezium, AWS DMS, StreamSets, Striim, HVR | Database-native triggers (PostgreSQL, MySQL, SQL Server, Oracle) | Apache Airflow, ETL scripts (Python, SQL) | ETL jobs, batch data pipelines |


Use Cases for Change Data Capture

Understanding the methods is only half the story; seeing how CDC delivers tangible value in real-world scenarios is equally important.

Real-time data warehousing

CDC enables continuous, incremental updates to data warehouses by propagating only the changes instead of reloading complete datasets. This approach ensures that business intelligence tools and dashboards display the most current data.

For example, a retail company can update its sales dashboard in near‑real‑time to quickly reveal emerging trends and insights.

Data replication

CDC replicates data across systems by ensuring that any change made in the source system is immediately mirrored in target databases. This is especially useful during data migration projects or when maintaining backups and replicas across hybrid environments.

For instance, replicating data from on-premises systems to cloud platforms such as AWS RDS or Snowflake helps keep data consistent across environments.

Data synchronization

In distributed systems—such as those involving microservices or multiple applications—CDC ensures that every component operates on the most up‑to‑date data by synchronizing changes in real time.

For example, synchronizing customer information across various platforms maintains a consistent user experience across the board.

Data auditing and monitoring

Finally, CDC provides detailed change logs that are essential for auditing purposes. By tracking who made changes and when these changes occurred, teams can meet regulatory requirements, troubleshoot issues, and perform in-depth forensic analyses.

Financial institutions, for example, rely on comprehensive CDC logs to audit customer data modifications and ensure compliance with stringent data governance policies.

Tools for Implementing Change Data Capture

After exploring the methods and use cases, let’s examine some popular tools that facilitate CDC implementations. The right choice depends on your use case—whether you need real-time streaming, cloud migration, or enterprise ETL solutions.

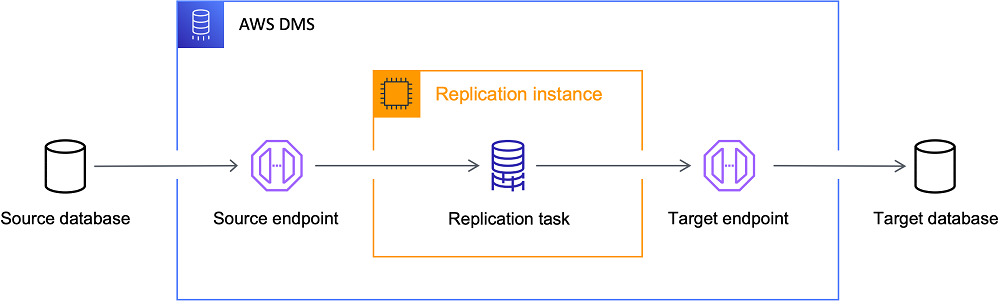

AWS Database Migration Service (DMS)

AWS DMS uses log-based CDC to continuously replicate data from on‑premises systems to the AWS cloud with minimal downtime, making it an excellent choice for migrations. AWS DMS is a robust solution if your goal is to move data to the cloud with reliable uptime.

Best for: Cloud migrations and AWS-based architectures.

The AWS DMS Architecture. Image source: AWS

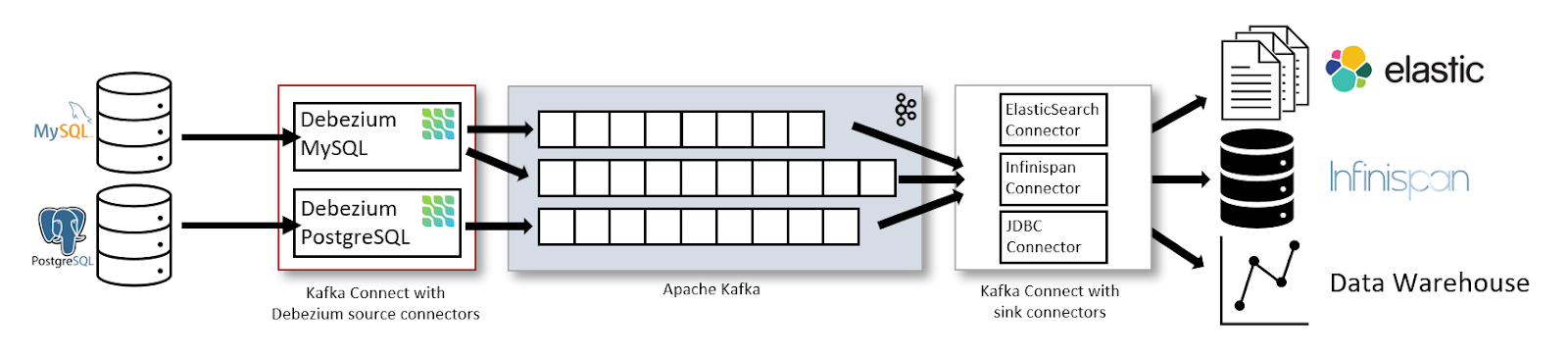

Debezium

Debezium is an open‑source CDC platform that captures and streams database changes into systems like Apache Kafka.

Personally, I’ve found Debezium extremely useful for streaming database changes into Kafka, especially in distributed environments where multiple services depend on real‑time updates. Its scalability and integration capabilities make it a standout option.

Best for: Real-time data streaming and event-driven architectures.

The Data Streaming Architecture with Kafka Connect and Debezium. Image source: Debezium

Apache Kafka

Apache Kafka isn't a CDC tool itself but serves as the backbone for processing CDC events when paired with tools like Debezium. Kafka enables reliable event-driven pipelines, real-time analytics, and data synchronization across multiple consumers.

Best for: Streaming CDC data to event-driven architectures.

To illustrate how CDC events can be sent to Kafka, consider the following Python snippet. The code initializes a Kafka producer and sends a CDC event (representing an update operation on an orders table) to a Kafka topic named cdc-topic:

    import json

    from kafka import KafkaProducer  # provided by the kafka-python package

    # Initialize the Kafka producer with bootstrap servers and a JSON serializer for values.
    producer = KafkaProducer(
        bootstrap_servers='localhost:9092',
        value_serializer=lambda v: json.dumps(v).encode('utf-8')
    )

    # Define a CDC event that includes details of the operation.
    cdc_event = {
        "table": "orders",
        "operation": "update",
        "data": {"order_id": 123, "status": "shipped"}
    }

    # Send the CDC event to 'cdc-topic' and block until the broker acknowledges it;
    # get() raises an exception if delivery fails.
    future = producer.send('cdc-topic', cdc_event)
    future.get(timeout=10)
    producer.flush()

    print("CDC event sent successfully!")

Talend and Informatica

Talend and Informatica are comprehensive ETL platforms offering built‑in CDC functionality to capture and process data changes, reducing manual configurations. They are especially advantageous in complex data transformation scenarios, where integrated solutions can simplify operations.

Best for: Enterprise-grade ETL solutions with built-in CDC.

Database-native CDC solutions

Several relational databases offer native CDC features, reducing the need for external tools:

- PostgreSQL logical replication: Captures changes in WAL and streams them to subscribers.

- SQL Server change data capture (CDC): Uses transaction logs to track changes automatically.

- MySQL binary log (binlog) replication: Logs changes for replication purposes.

Best for: Minimizing dependencies on external CDC tools.

Google Datastream and Azure Data Factory

Cloud providers also offer CDC solutions for their ecosystems:

- Google Datastream: A fully managed CDC and replication service for Google Cloud users.

- Azure Data Factory CDC: Enables change tracking and replication for Azure SQL, Azure Cosmos DB, and Synapse Analytics.

Best for: CDC within Google Cloud or Microsoft Azure environments.

Challenges and Limitations of CDC

While CDC offers significant benefits, it also comes with challenges that must be managed for a reliable implementation.

Handling data integrity issues

Maintaining data integrity can be challenging when dealing with network interruptions, delayed transactions, or system glitches. Robust error-handling protocols and regular reconciliations are vital to prevent discrepancies between the source and target systems. Addressing these issues early helps maintain a dependable data pipeline.
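A periodic reconciliation can be as simple as comparing keyed snapshots of the source and target. The sketch below is one possible shape for such a check; `row_fingerprint`, `reconcile`, and the sample snapshots are hypothetical, and a real job would read both sides from the actual systems, usually in batches.

```python
import hashlib
import json


def row_fingerprint(row: dict) -> str:
    """Hash a row so that key order does not affect the result."""
    canonical = json.dumps(row, sort_keys=True, default=str)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def reconcile(source: dict, target: dict) -> dict:
    """Compare two {primary_key: row} snapshots and report discrepancies."""
    missing = sorted(k for k in source if k not in target)
    extra = sorted(k for k in target if k not in source)
    mismatched = sorted(
        k for k in source
        if k in target and row_fingerprint(source[k]) != row_fingerprint(target[k])
    )
    return {"missing_in_target": missing, "extra_in_target": extra, "mismatched": mismatched}


source = {1: {"status": "shipped"}, 2: {"status": "paid"}, 3: {"status": "new"}}
target = {1: {"status": "shipped"}, 2: {"status": "pending"}, 4: {"status": "new"}}
report = reconcile(source, target)
print(report)
```

Each category points to a different failure: missing rows suggest dropped inserts, extra rows suggest dropped deletes, and mismatches suggest dropped or out-of-order updates.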

Performance overhead

Certain CDC methods—particularly those that rely on triggers or frequent polling—can introduce additional load on source databases. Balancing the need for near‑real‑time updates with the performance constraints of your production systems is key to a smooth operation.

Managing complex data transformations

Although CDC efficiently captures raw changes, additional downstream processing, such as data cleansing or transformation, may be required. Integrating transformation logic without delaying deliveries or introducing errors adds complexity to CDC implementation, so careful planning is necessary.

Best Practices for Implementing CDC

Putting theory into practice requires adherence to best practices. Here are actionable tips from my experience that helped me build robust CDC pipelines.

Choose the right implementation method

Select a CDC approach that aligns with your data volume, latency requirements, and system architecture. Log-based CDC is usually optimal for high-transaction environments, while trigger‑ or polling‑based methods might be more suitable for smaller applications. Evaluating your specific needs upfront can save time and resources later.

Monitor jobs

Implement comprehensive monitoring using real‑time dashboards and automated alerts. Regular log reviews and health checks are essential to ensure that every change is captured accurately and that any issues are promptly addressed.

A minor log-based CDC misconfiguration in one project went unnoticed for days, leading to silent data loss in downstream analytics. Implementing Grafana alerts helped catch missing updates instantly, preventing costly errors.
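The kind of check behind such an alert can be sketched as a lag calculation. The function names and the five-minute threshold below are assumptions chosen for illustration; in practice the two timestamps would come from your pipeline's own metrics and the alert rule would live in your monitoring system.

```python
from datetime import datetime, timedelta, timezone


def replication_lag(last_source_commit: datetime, last_applied_commit: datetime) -> timedelta:
    """How far the target trails the newest change committed at the source."""
    return max(last_source_commit - last_applied_commit, timedelta(0))


def should_alert(lag: timedelta, threshold: timedelta) -> bool:
    """True when the lag exceeds the configured threshold."""
    return lag > threshold


now = datetime(2025, 2, 25, 12, 0, 0, tzinfo=timezone.utc)
lag = replication_lag(
    last_source_commit=now,
    last_applied_commit=now - timedelta(minutes=7),
)
alert = should_alert(lag, threshold=timedelta(minutes=5))  # hypothetical threshold
```

A lag that keeps growing while the source is still receiving writes is the typical signature of a stalled or misconfigured capture job.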

Ensure data quality

Integrate data validation checkpoints within the CDC pipeline to ensure that only accurate and consistent changes are propagated.

From my experience, setting up automated validation checks in the CDC pipeline saved me from hours of debugging incorrect data propagation issues. Tools like dbt and Apache Airflow have been instrumental in enforcing consistency across multiple downstream systems.
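As an illustration of a validation checkpoint, the sketch below checks each change event before it is propagated. It assumes events use the same `table`, `operation`, and `data` fields as the Kafka example earlier in this article; the specific rules and the function names are hypothetical.

```python
REQUIRED_FIELDS = {"table", "operation", "data"}   # assumed event envelope
VALID_OPERATIONS = {"insert", "update", "delete"}


def validate_event(event: dict) -> list[str]:
    """Return a list of problems; an empty list means the event passes."""
    problems = []
    missing = REQUIRED_FIELDS - set(event)
    if missing:
        problems.append(f"missing fields: {sorted(missing)}")
    if event.get("operation") not in VALID_OPERATIONS:
        problems.append(f"unknown operation: {event.get('operation')!r}")
    if not isinstance(event.get("data"), dict) or not event.get("data"):
        problems.append("data must be a non-empty object")
    return problems


def split_valid(events: list[dict]) -> tuple[list[dict], list[tuple[dict, list[str]]]]:
    """Route clean events onward and set the rest aside with their reasons."""
    valid, rejected = [], []
    for event in events:
        problems = validate_event(event)
        if problems:
            rejected.append((event, problems))
        else:
            valid.append(event)
    return valid, rejected


events = [
    {"table": "orders", "operation": "update", "data": {"order_id": 123, "status": "shipped"}},
    {"table": "orders", "operation": "upsert", "data": {"order_id": 124}},
    {"table": "orders", "operation": "delete"},
]
valid, rejected = split_valid(events)
```

Keeping the rejected events together with their reasons, rather than dropping them, makes it possible to repair and replay them later.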

Test implementations before deployment

Before moving your CDC solution to production, thoroughly test it in a staging environment. Simulate real‑world workloads and failure scenarios and validate features such as rollback and time travel to ensure the system behaves as expected under all conditions. This rigorous testing is important for a smooth production rollout.
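One failure scenario worth testing is redelivery: after a crash, many pipelines resend events that were already applied. The sketch below checks that replaying events leaves the target in the same state. The event shape and the helper names are assumptions for illustration, not part of any specific tool.

```python
def apply_event(state: dict, event: dict) -> None:
    """Apply one change event; safe to apply more than once."""
    key = event["key"]
    if event["op"] == "delete":
        state.pop(key, None)             # deleting a missing row is a no-op
    else:
        state[key] = dict(event["row"])  # insert and update both overwrite


def replay(events: list[dict]) -> dict:
    """Build the target state by applying events in order."""
    state: dict = {}
    for event in events:
        apply_event(state, event)
    return state


events = [
    {"op": "insert", "key": 1, "row": {"status": "new"}},
    {"op": "update", "key": 1, "row": {"status": "shipped"}},
    {"op": "insert", "key": 2, "row": {"status": "new"}},
    {"op": "delete", "key": 2, "row": None},
]

clean_run = replay(events)
# Simulate a crash after the third event, then a restart that redelivers
# the last two events before continuing with the rest.
after_failure = replay(events[:3] + events[1:])
```

If `clean_run` and `after_failure` differ, the apply logic is not idempotent and a restart in production could corrupt the target.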

Handle schema evolution strategically

Your CDC system must adapt to schema changes without disruption as your datasets evolve. Use tools that support automatic schema evolution and maintain proper version control so that new fields are integrated smoothly. This strategic approach prevents unexpected errors and downtime when data structures change.
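To show what automatic schema evolution means at its simplest, the sketch below adds a column to the target when a change event carries a field the table does not have yet. It uses `sqlite3`; the function name is hypothetical, new columns are assumed to be nullable text, and table and column names are assumed to come from a trusted source because SQL identifiers cannot be passed as query parameters.

```python
import sqlite3


def apply_with_schema_evolution(conn: sqlite3.Connection, table: str, row: dict) -> list[str]:
    """Insert a row, adding any columns the target table does not have yet."""
    existing = {info[1] for info in conn.execute(f"PRAGMA table_info({table})")}
    added = [col for col in row if col not in existing]
    for col in added:
        # New columns are added as nullable TEXT; older rows read back as NULL.
        conn.execute(f'ALTER TABLE {table} ADD COLUMN "{col}" TEXT')
    columns = ", ".join(f'"{c}"' for c in row)
    placeholders = ", ".join("?" for _ in row)
    conn.execute(f"INSERT INTO {table} ({columns}) VALUES ({placeholders})", tuple(row.values()))
    return added


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")

added_first = apply_with_schema_evolution(conn, "customers", {"id": 1, "name": "Ada"})
# The second event carries a field the target has never seen.
added_second = apply_with_schema_evolution(
    conn, "customers", {"id": 2, "name": "Grace", "email": "grace@example.com"}
)
rows = conn.execute("SELECT id, name, email FROM customers ORDER BY id").fetchall()
```

Additive changes like this one are the easy case. Renamed or dropped columns and type changes usually need an explicit, versioned migration.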

Conclusion

By precisely capturing only the modified data, CDC minimizes system load and enables real‑time analytics and streaming applications to function properly. Whether you are implementing data replication, synchronization, or audit logging, selecting the appropriate CDC method and following best practices is key to building a reliable and efficient data pipeline.

For those who want to deepen their understanding, I encourage you to explore the following DataCamp courses:

- Introduction to Data Engineering – Learn foundational techniques for building robust data pipelines and integrating various data sources.

- Big Data Fundamentals with PySpark – Gain hands‑on experience with Apache Spark and PySpark to process and analyze large datasets.

- Data Engineer in Python – Build end‑to‑end data pipelines using Python, with practical exposure to tools like Apache Kafka for streaming data integration.

Happy coding—and here’s to building resilient, real‑time data systems!


FAQs

How does CDC enhance real-time analytics in modern data architectures?

CDC minimizes the need for bulk ETL jobs by capturing only incremental changes from source databases. This ensures that analytical dashboards and streaming applications always have access to the most recent data, reducing latency and system load.

What are practical steps to test a CDC implementation before full deployment?

Set up a staging environment to simulate production loads; monitor data integrity, latency, and error-handling; and perform rollback tests using time travel features if available, to validate recovery procedures.

How can I handle schema evolution when using CDC?

Incorporate schema versioning and automated validation steps in the CDC pipeline. Test schema changes in a controlled environment to ensure backward compatibility, and leverage CDC tools that support automatic schema evolution.

What monitoring tools or practices do you recommend for CDC pipelines?

Integrate dashboards (using tools like Grafana) and alerting systems to track CDC execution metrics. Regular log reviews and automated health checks can help detect performance bottlenecks or data quality issues early.

Which CDC method should be chosen for a high-volume, low-latency environment?

Log-based CDC is generally the best option for such environments, as it reads directly from the database transaction log (such as PostgreSQL’s WAL or MySQL’s binlog) and minimizes the impact on production workloads, providing near-real-time updates.

I’m a data engineer and community builder who works across data pipelines, cloud, and AI tooling while writing practical, high-impact tutorials for DataCamp and emerging developers.