We have all heard of CPUs (Central Processing Units) and GPUs (Graphics Processing Units), but do you know the differences in how they handle processing? While both are essential to modern computing, they’re designed for different purposes.

In this guide, I will break down the differences, use cases, and evolving roles of CPUs and GPUs.

What is a CPU?

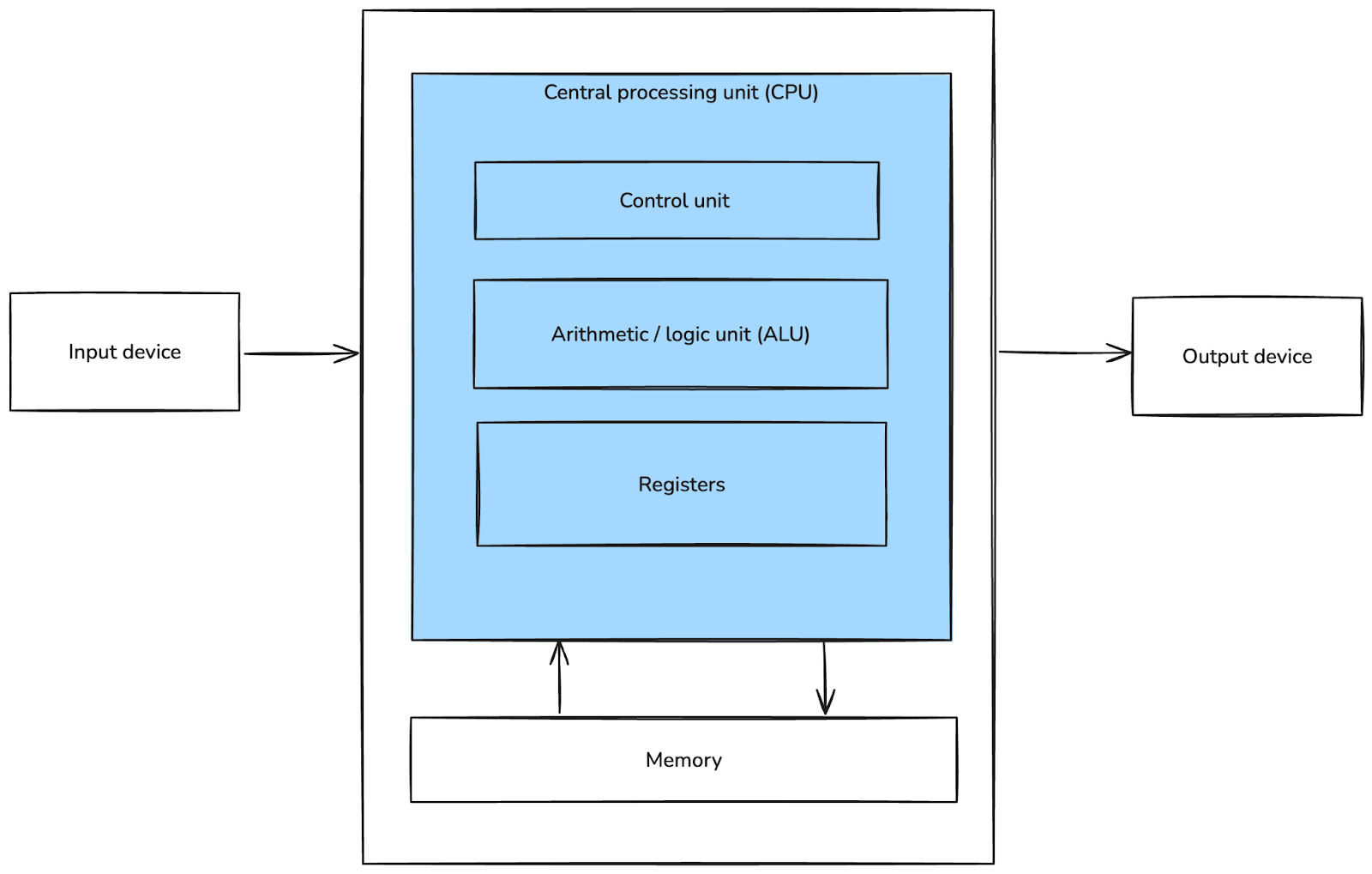

The CPU, often called the computer's "brain," is the central unit that executes general-purpose computing tasks. It is optimized for managing complex, sequential instructions and is involved in nearly every aspect of computing, from running the operating system to handling applications such as your browser, chat programs, and email.

CPUs are really good at tasks that demand precision and reliability, such as performing arithmetic operations, executing logic-based decisions, and controlling data flow.

Basic Von Neumann architecture. Image by Author.

Modern CPUs often feature multiple cores, allowing them to execute several tasks simultaneously (albeit at a smaller scale compared to GPUs). They also incorporate advanced technologies like hyper-threading and cache hierarchies to improve efficiency and reduce latency during task execution.

How a CPU works

Each CPU core processes instructions sequentially, following an instruction cycle that involves fetching, decoding, and executing each instruction, then storing the result. This step-by-step approach enables high precision and reliability, making CPUs well suited to tasks dominated by sequential, branch-heavy logic, such as parsing a database query or compiling a source file.
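The instruction cycle can be sketched as a toy interpreter. This is a minimal illustration, not a model of any real CPU: the four opcodes (`LOAD`, `ADD`, `STORE`, `HALT`) and the two registers are hypothetical names invented for the example.

```python
# Toy machine with a hypothetical 4-instruction set (not any real CPU's).
def run(program, memory):
    """Execute `program` one instruction at a time and return the final memory."""
    registers = {"r0": 0, "r1": 0}
    pc = 0  # program counter: index of the next instruction
    while pc < len(program):
        instruction = program[pc]          # fetch
        opcode, *operands = instruction    # decode
        pc += 1
        if opcode == "LOAD":               # execute: memory -> register
            reg, addr = operands
            registers[reg] = memory[addr]
        elif opcode == "ADD":              # execute: dst = dst + src
            dst, src = operands
            registers[dst] += registers[src]
        elif opcode == "STORE":            # store: register -> memory
            reg, addr = operands
            memory[addr] = registers[reg]
        elif opcode == "HALT":
            break
        else:
            raise ValueError(f"unknown opcode: {opcode}")
    return memory


program = [
    ("LOAD", "r0", 0),
    ("LOAD", "r1", 1),
    ("ADD", "r0", "r1"),
    ("STORE", "r0", 2),
    ("HALT",),
]
result = run(program, [2, 3, 0])
print(result)  # [2, 3, 5]
```

Each pass through the loop handles exactly one instruction, and the next instruction cannot start until the previous one has updated the registers or memory. That strict ordering is what the sequential model refers to.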

While modern CPUs can multitask to some extent, their strength actually lies in their ability to handle complex computations where accuracy and order are essential.

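CPUs also include error-handling mechanisms, such as hardware exceptions for invalid operations and, on many server-class parts, error-correcting code (ECC) protection, that help detect data corruption and logical faults.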

Characteristics of CPUs

CPUs are characterized by a few cores with high clock speeds. Each core focuses on one or a few threads at a time, making them highly efficient for tasks that require significant processing power but limited parallelism.

The core characteristics of CPUs include:

- Clock speed: Measured in GHz, this determines how many cycles a core can execute per second, directly impacting performance for single-threaded tasks.

- Cache memory: CPUs have integrated caches (L1, L2, and L3) that store frequently accessed data, reducing the time needed to fetch data from RAM.

- Instruction sets: CPUs use complex instruction sets (like x86) to handle a diverse range of tasks, from mathematical computations to multimedia processing.
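To make the cache point concrete, here is a small simulation of a direct-mapped cache. It is a sketch under simplifying assumptions: the cache size (`num_lines=8`), the line size (`line_size=4`), and the two access patterns are made-up values, and real CPU caches are far larger and usually set-associative.

```python
def hit_rate(addresses, num_lines=8, line_size=4):
    """Fraction of accesses served by a tiny direct-mapped cache."""
    lines = [None] * num_lines  # each slot remembers which memory block it holds
    hits = 0
    for addr in addresses:
        block = addr // line_size   # which line-sized block of memory this is
        slot = block % num_lines    # the one cache slot that block may occupy
        if lines[slot] == block:
            hits += 1
        else:
            lines[slot] = block     # miss: the block is fetched from RAM
    return hits / len(addresses)


sequential = list(range(64))            # neighbouring addresses
strided = [i * 32 for i in range(64)]   # every access lands in a new block
print(hit_rate(sequential))  # 0.75
print(hit_rate(strided))     # 0.0
```

Sequential access reuses each fetched line three more times, while the strided pattern never does, so every access goes to RAM. This is why data layout and access order matter for CPU performance.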

Despite their precision and versatility, CPUs are limited in their ability to handle massively parallel workloads efficiently, such as training large machine learning models or rendering high-resolution graphics. For such tasks, specialized processors like GPUs often take the lead. Let’s examine why that’s the case in the next section.

What is a GPU?

A GPU is a specialized processor optimized for parallel processing, that is, for working on many pieces of information simultaneously.

GPUs were initially developed to handle image rendering for video games and visual applications but have evolved into powerful tools used in various fields, including data science, machine learning, cryptocurrency mining, and scientific simulations. Their ability to process huge amounts of data in parallel has made them critical for high-performance computing and AI development.

How a GPU works

GPUs have thousands of smaller cores that can execute simple tasks independently, making them ideal for parallel workloads.

Unlike CPUs, which focus on precision and order, GPUs divide large problems into smaller tasks, process them in parallel, and aggregate the results. This architecture allows GPUs to excel at operations where the same instructions are applied repeatedly across large datasets.
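The divide, process, and aggregate pattern can be sketched in plain Python. Note the assumptions: CPU threads from the standard library stand in for GPU cores here, so this shows the shape of the computation rather than any real speedup, and `parallel_sum_of_squares` is a hypothetical helper written for this example.

```python
from concurrent.futures import ThreadPoolExecutor


def sum_of_squares(chunk):
    """The same small task, applied to one piece of the data."""
    return sum(x * x for x in chunk)


def parallel_sum_of_squares(data, workers=4):
    # 1. Divide: split the data into roughly equal chunks.
    size = max(1, -(-len(data) // workers))  # ceiling division
    chunks = [data[i:i + size] for i in range(0, len(data), size)]
    # 2. Process: run the same function on every chunk concurrently.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(sum_of_squares, chunks))
    # 3. Aggregate: combine the partial results.
    return sum(partials)


data = list(range(1000))
total = parallel_sum_of_squares(data)
print(total == sum(x * x for x in data))  # True
```

The key property is that no chunk needs results from another chunk, so the work can be spread across as many workers as are available.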

For instance, when rendering an image, a GPU assigns each pixel to a separate core for simultaneous processing, significantly speeding up the operation. In machine learning, this parallelism enables faster training by processing data batches and performing computations like matrix multiplications across many cores at once.
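The per-pixel idea can be illustrated with a tiny grayscale conversion. This is a conceptual sketch that runs on the CPU: `to_gray` plays the role of the small function a GPU would run once per pixel, and the simple channel average is an assumption chosen for brevity.

```python
def to_gray(pixel):
    """Per-pixel 'kernel': the result depends only on its own input pixel."""
    r, g, b = pixel
    return (r + g + b) // 3  # simple average, chosen for brevity


def render(image):
    # Every call to to_gray is independent of the others, so these iterations
    # could run in any order, or all at once on separate GPU threads.
    return [[to_gray(pixel) for pixel in row] for row in image]


image = [
    [(255, 0, 0), (0, 255, 0)],
    [(0, 0, 255), (30, 60, 90)],
]
gray = render(image)
print(gray)  # [[85, 85], [85, 60]]
```

Because no pixel's output depends on another pixel's output, the work scales naturally with the number of cores available.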

Modern GPUs also include features like Tensor Cores (in NVIDIA GPUs) or other specialized units designed to accelerate AI tasks, making them even more efficient for tasks like neural network training.

Characteristics of GPUs

In summary, these are the characteristics that distinguish GPUs:

- Core count: A GPU can have thousands of cores, enabling it to handle extensive parallel workloads. While these cores are less powerful individually than CPU cores, their combined output is immense.

- Clock speed: GPUs have lower clock speeds compared to CPUs. Running many simpler cores at lower clocks keeps power and heat manageable on a chip with thousands of cores, emphasizing throughput over single-threaded performance.

- Memory bandwidth: GPUs are equipped with high-bandwidth memory (like GDDR6 or HBM) to handle the data-intensive demands of parallel processing.

- Scalability: Modern GPUs support multi-GPU setups, where tasks are distributed across multiple GPUs for greater performance gains.

Differences Between CPUs and GPUs

Let’s now zoom into the specific differences between CPUs and GPUs. A major one we discussed was serial vs. parallel computation, but there are other important ones.

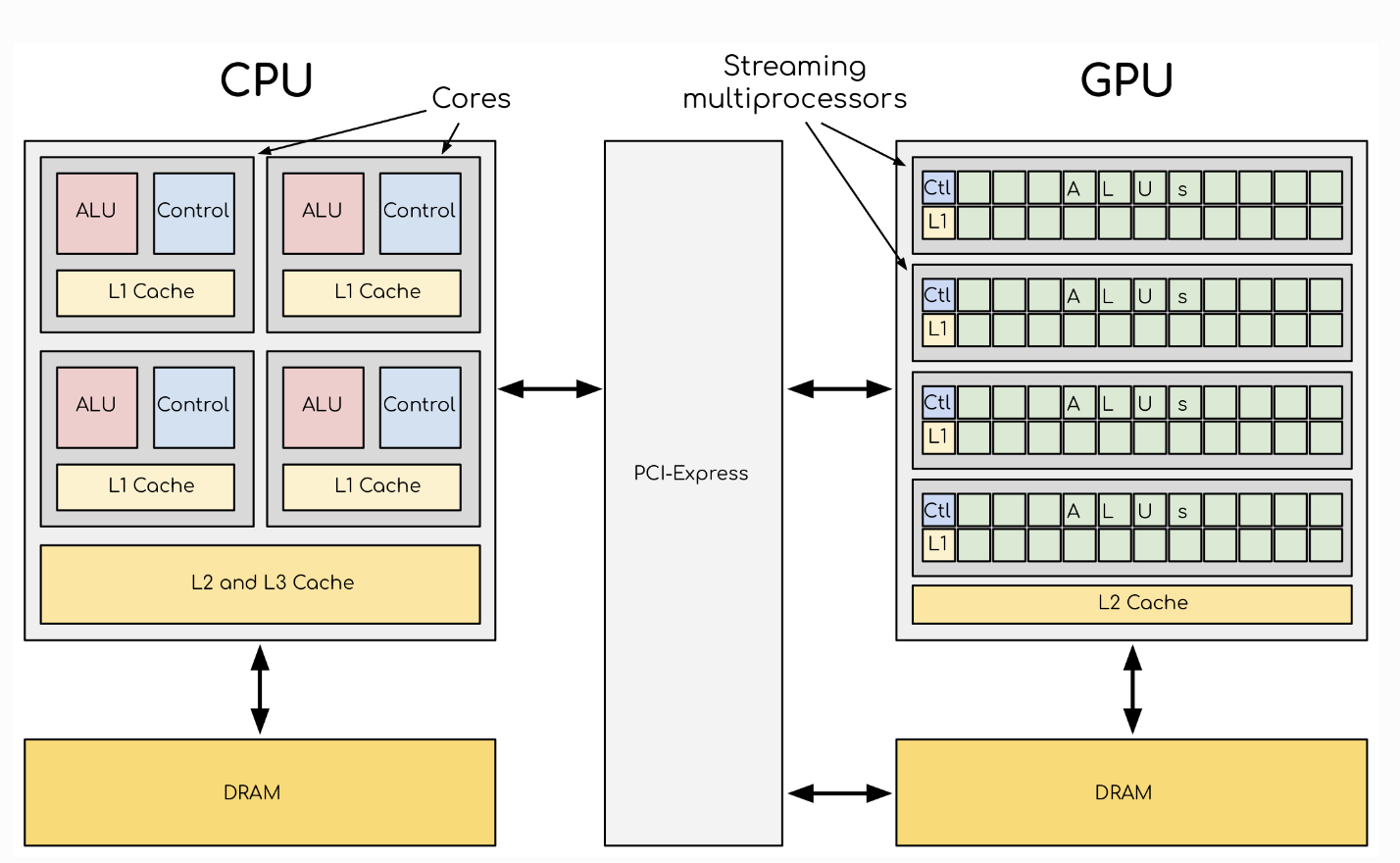

Architecture and design

CPUs are built with fewer, more powerful cores optimized for single-threaded and sequential processing. These cores feature advanced control units, larger caches (L1, L2, and sometimes L3), and support for sophisticated instruction sets like x86-64 or ARM.

In contrast, GPUs have a massive array of smaller cores designed to handle parallel tasks. These cores follow a Single Instruction, Multiple Data (SIMD) style of execution, where the same operation is applied across multiple data points simultaneously. NVIDIA describes its variant of this model as Single Instruction, Multiple Threads (SIMT).
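A rough way to picture the SIMD model is a group of lanes that all execute the same operation in lockstep. The sketch below is plain Python, not GPU code, and `simd_apply` and its `mask` parameter are hypothetical names used only for illustration.

```python
def simd_apply(op, lanes_a, lanes_b, mask=None):
    """Apply one operation to every lane; masked-off lanes keep their `a` value."""
    if mask is None:
        mask = [True] * len(lanes_a)
    return [op(a, b) if active else a
            for a, b, active in zip(lanes_a, lanes_b, mask)]


a = [1, 2, 3, 4]
b = [10, 20, 30, 40]

added = simd_apply(lambda x, y: x + y, a, b)
print(added)  # [11, 22, 33, 44]

# A data-dependent branch becomes a mask: only lanes holding even values add.
even = [x % 2 == 0 for x in a]
masked = simd_apply(lambda x, y: x + y, a, b, mask=even)
print(masked)  # [1, 22, 3, 44]
```

The masked case hints at why heavily branching code suits GPUs poorly: lanes that do not take a branch sit idle while the others work.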

A comparison of the GPU and CPU architecture. Image source.

Performance and efficiency

As you can imagine, the differences in architecture directly influence performance.

With their high clock speeds and advanced instruction handling, CPUs excel at low-latency tasks that require high precision and logical operations. Examples include operating system management, database queries, and running application threads. CPUs are also more versatile and capable of efficiently switching between diverse workloads due to their instruction decoding and branch prediction capabilities.

As mentioned, GPUs thrive in tasks where parallelism is key. Their ability to process multiple data streams simultaneously makes them indispensable for high-throughput operations, such as video encoding, scientific simulations, and neural network training. For instance, in deep learning, GPUs accelerate matrix multiplications and backpropagation, drastically reducing training times compared to CPUs.

The trade-off lies in the nature of the workload: CPUs outperform GPUs in sequential tasks, whereas GPUs dominate in data-heavy, parallel tasks.
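Amdahl's law gives a quick way to reason about this trade-off: the sequential part of a job limits the overall speedup no matter how many cores work on the parallel part. The sketch below evaluates the formula for a few illustrative parallel fractions; the worker count of 1000 is an arbitrary stand-in for "many GPU cores".

```python
def amdahl_speedup(parallel_fraction, workers):
    """Upper bound on speedup when only part of a job can be parallelized."""
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / workers)


for p in (0.5, 0.95, 0.99):
    print(p, round(amdahl_speedup(p, workers=1000), 1))
```

With 1000 workers, a job that is 50% parallel speeds up by only about 2x, while a job that is 99% parallel speeds up by roughly 91x. Workloads like matrix multiplication sit near the high end, which is why they benefit so much from GPUs.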

Power consumption

GPUs generally consume more power due to their high core count and intensive parallel processing demands, while CPUs tend to have lower power requirements.

A high-end GPU can consume several hundred watts under load, particularly during intensive operations like training machine learning models or rendering 4K graphics. Additionally, their high memory bandwidth requirements further increase power draw.

CPU features like dynamic voltage and frequency scaling (DVFS), low-power states (C-states), and efficient cooling mechanisms allow CPUs to effectively balance performance with power consumption. This makes CPUs more suitable for devices requiring longer battery life or lower thermal output, such as laptops and mobile devices.
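The reason DVFS is effective follows from the standard approximation for switching power, P ≈ C · V² · f: lowering the frequency usually allows a lower voltage, and voltage enters squared. The numbers below are hypothetical operating points chosen only to show the scaling, not measurements from any real chip.

```python
def dynamic_power(capacitance, voltage, frequency_hz):
    """Approximate switching power in watts: P = C * V^2 * f."""
    return capacitance * voltage ** 2 * frequency_hz


# Hypothetical operating points, chosen only to show the scaling.
C = 1e-9  # effective switched capacitance in farads (assumed)
high = dynamic_power(C, voltage=1.2, frequency_hz=4.0e9)
low = dynamic_power(C, voltage=0.9, frequency_hz=2.0e9)
print(round(high, 2), round(low, 2), round(low / high, 2))
```

In this made-up example, halving the clock and dropping the voltage from 1.2 V to 0.9 V cuts dynamic power to about 28% of the original, which is a larger saving than the 50% drop in frequency alone would suggest.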

Cost and availability

CPUs are widely available in consumer devices and are typically more affordable. Their broad utility and market saturation make them relatively inexpensive for consumers. Entry-level CPUs can cost under $100, while high-performance models for servers or workstations may range into the thousands.

GPUs, especially high-performance models, are more expensive and commonly found in workstations, gaming systems, and high-performance computing environments. Consumer-grade GPUs for gaming may range from $200 to $1,000, while specialized GPUs for data centers or scientific research, such as NVIDIA’s A100 or AMD’s Instinct series, can cost tens of thousands of dollars.

The following table offers a detailed comparison of CPUs vs GPUs, making it easier to see their differences at a glance:

| Feature | CPU (Central Processing Unit) | GPU (Graphics Processing Unit) |
| --- | --- | --- |
| Primary function | General-purpose processing for sequential tasks | Specialized for parallel processing and data-intensive tasks |
| Architecture | Fewer, powerful cores optimized for single-threaded performance | Thousands of smaller, simpler cores optimized for parallelism |
| Processing model | Serial execution: tasks processed one at a time | Parallel execution: multiple tasks processed simultaneously |
| Core count | Typically 4–16 cores in consumer-grade CPUs; 64 or more in server-grade CPUs | Can have thousands of cores in high-performance GPUs |
| Clock speed | Higher clock speeds (up to ~5 GHz) | Lower clock speeds (~1–2 GHz) |
| Strengths | Precision, sequential tasks, versatility, and logic operations | High throughput for large-scale operations like matrix math |
| Use cases | Running operating systems, application logic, databases | Graphics rendering, machine learning, scientific computing |
| Power consumption | Lower due to fewer cores and energy-efficient designs | Higher due to dense cores and memory bandwidth demands |
| Memory bandwidth | Lower, typically optimized for latency | Higher, optimized for throughput (e.g., GDDR6, HBM memory) |
| Cost | Relatively affordable and widely available | More expensive, especially for high-performance models |
| Applications | Laptops, desktops, servers, mobile devices | Gaming systems, workstations, HPC environments, AI workloads |
| Flexibility | Broad compatibility for diverse tasks | Optimized for specific workloads requiring parallelism |

CPU Use Cases

Let’s briefly discuss some more specific use cases for CPUs:

- General-purpose computing: CPUs are well suited to everyday tasks, such as browsing the internet and using productivity applications. Most of your computer’s general workload runs on the CPU.

- Single-threaded applications: Tasks whose steps must run in order, like certain financial calculations where each result depends on the previous one, are better suited for CPUs. Everyday applications such as word processors and music apps also depend mainly on single-thread performance.

- Operating system management: CPUs handle essential system processes, managing inputs, outputs, and background tasks that keep the system running smoothly.

GPU Use Cases

Let's briefly go over some GPU-specific use cases:

- Graphics and video processing: GPUs are designed to render high-quality graphics, making them indispensable in gaming, video editing, and graphics-intensive applications. This is possible because of their parallel processing capability, as I mentioned throughout the article. Many games with intensive graphics require a reasonably strong GPU to play without lagging or issues.

- Machine learning and AI: GPUs are often used to train machine learning models and process large datasets quickly. They are especially important for neural networks, where the sheer volume of calculations, most of which can run in parallel, would take far longer on a CPU.

- Data science and scientific computing: GPUs can accelerate large-scale computations and matrix operations in data science, making them valuable for data-intensive analyses and simulations. Workloads such as tensor operations, image analysis, and convolutional layers require massive amounts of computation. For example, Polars offers a GPU engine for query acceleration.

The Future of CPUs and GPUs

Both CPUs and GPUs continue to grow in power and complexity, and both are being used in a widening range of applications.

CPUs adapting to more cores

CPUs are increasing core counts to meet modern multitasking demands. More cores do not make a single thread faster, but they let the CPU run more threads at once, which adds a moderate degree of parallel computing.

Some trends in CPU development include:

- Increased core counts: Consumer-grade CPUs now often feature 8–16 cores, while server-grade processors can include 64 or more cores. Combined with simultaneous multithreading (SMT, which Intel markets as Hyper-Threading), this improves parallel computing capabilities.

- Heterogeneous architectures: Modern CPUs, such as Intel’s Alder Lake series or ARM’s big.LITTLE design, combine high-performance cores with energy-efficient cores to optimize both power consumption and task performance.

- Integration with specialized units: CPUs are increasingly integrating accelerators, such as AI inference engines or graphics processing units, directly onto the chip to enhance performance in specific workloads.

GPU development for general-purpose computing

GPUs are evolving to handle general-purpose tasks, making them increasingly versatile for data-heavy operations. Manufacturers like NVIDIA and AMD are investing in general-purpose GPU computing platforms (CUDA for NVIDIA hardware, ROCm for AMD hardware) to expand GPUs' applicability beyond graphics.

Many machine learning packages can now use GPUs. Frameworks like TensorFlow and PyTorch support GPU acceleration natively, which can dramatically reduce computation times.
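As a rough illustration of what this looks like in practice, the sketch below picks a device with PyTorch and runs a matrix multiplication on it. It assumes PyTorch may or may not be installed, so the import is guarded; `pick_device` is a hypothetical helper, and the 256×256 matrix size is arbitrary.

```python
try:
    import torch  # optional dependency; the sketch still runs without it
except ImportError:
    torch = None


def pick_device():
    """Return "cuda" when PyTorch can see a GPU, otherwise "cpu"."""
    if torch is not None and torch.cuda.is_available():
        return "cuda"
    return "cpu"


device = pick_device()
print("running on", device)

if torch is not None:
    # The same matrix multiplication runs on whichever device was picked.
    a = torch.randn(256, 256, device=device)
    b = torch.randn(256, 256, device=device)
    product = a @ b
    print(product.shape)
```

The code that does the math is identical on both devices. Only the `device` argument changes, which is a large part of why moving a model from CPU to GPU is often straightforward.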

Specialized processing units

The future of processing isn’t limited to just CPUs and GPUs. The rise of specialized processors, such as TPUs (Tensor Processing Units) and NPUs (Neural Processing Units), reflects a growing demand for task-specific hardware:

- Tensor Processing Units (TPUs): Developed by Google, TPUs are designed specifically for accelerating neural network computations. Unlike GPUs, TPUs focus on matrix-heavy operations and are optimized for large-scale AI tasks. If you have more questions, read the article on TPUs vs. GPUs.

- Neural Processing Units (NPUs): Found in mobile devices and edge computing, NPUs are built to handle AI-related tasks like image recognition, speech processing, and natural language understanding, all while consuming minimal power.

- FPGA and ASIC advances: Field-Programmable Gate Arrays (FPGAs) and Application-Specific Integrated Circuits (ASICs) are also gaining traction for their ability to be customized for specific applications, such as cryptocurrency mining, network packet processing, and real-time analytics.

Conclusion

CPUs and GPUs each bring unique strengths to computing: CPUs excel at general-purpose and precise tasks, while GPUs shine in parallel processing and data-heavy workloads. Understanding these differences helps data scientists, gamers, and professionals make informed hardware choices.

If you’re interested in exploring how GPUs power deep learning models, check out Introduction to Deep Learning with PyTorch. For a broader understanding of AI technologies and their applications, the AI Fundamentals track provides a comprehensive overview. To dive deeper into the mechanics behind machine learning, explore the course Understanding Machine Learning.

FAQs

Can a GPU replace a CPU for everyday computing tasks?

No, GPUs are not a replacement for CPUs. While GPUs excel in parallel processing tasks, they lack the versatility and single-threaded performance that CPUs provide for general-purpose tasks.

When should I use both a CPU and a GPU together?

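Use both when a workload mixes sequential control logic with large, data-parallel computations. In video editing and neural network training, for example, the CPU handles tasks like loading data and coordinating the work, while the GPU performs the bulk of the parallel calculations.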

Why are GPUs used in machine learning and AI instead of CPUs?

Machine learning and AI tasks often involve processing large datasets and running numerous calculations simultaneously. GPUs, with their high core count and parallel processing capabilities, can perform these tasks much faster than CPUs.

Are there specialized processors besides CPUs and GPUs for specific tasks?

Yes, specialized processors like TPUs (Tensor Processing Units) are designed to handle specific workloads like machine learning. TPUs are application-specific chips built around dedicated matrix-multiplication hardware rather than general-purpose cores, which optimizes them for neural network computations.

I am a data scientist with experience in spatial analysis, machine learning, and data pipelines. I have worked with GCP, Hadoop, Hive, Snowflake, Airflow, and other data science/engineering processes.