
When ChatGPT launched in November 2022, attracting a hundred million users in just two months, I honestly dismissed it as an overhyped chatbot. That assessment aged poorly. Today, I (and nearly everyone I work with) rely on AI coding tools daily, and the old debates from 2023 about whether a bot can write a decent email feel completely dated. So what is the future of AI?

The industry has moved beyond those initial questions to a completely different scale. Anthropic recently crossed $7 billion in recurring revenue, and tech giants are pouring money into data centers at levels that would have seemed absurd five years ago. Consequently, the question that matters now is no longer if AI works, but what happens when it stops waiting for prompts and starts deciding what to do next.

This article covers where the technology appears to be heading. I'll examine agentic AI, the heated AGI timeline debate, and the rise of smaller models you can run locally. We will also look at employment shifts, coding tools, healthcare applications, and the regulatory situation. Let’s dive in!

If you are not yet familiar with some of the AI concepts, I recommend our AI Fundamentals skill track, which covers all the basics you need to know.

From Generative to Agentic AI

The back-and-forth chatbot model is giving way to a more autonomous approach. This section covers what that shift looks like in practice, where the real implementation challenges are, and why most organizations still struggle to get agents into production.

The rise of autonomous agents

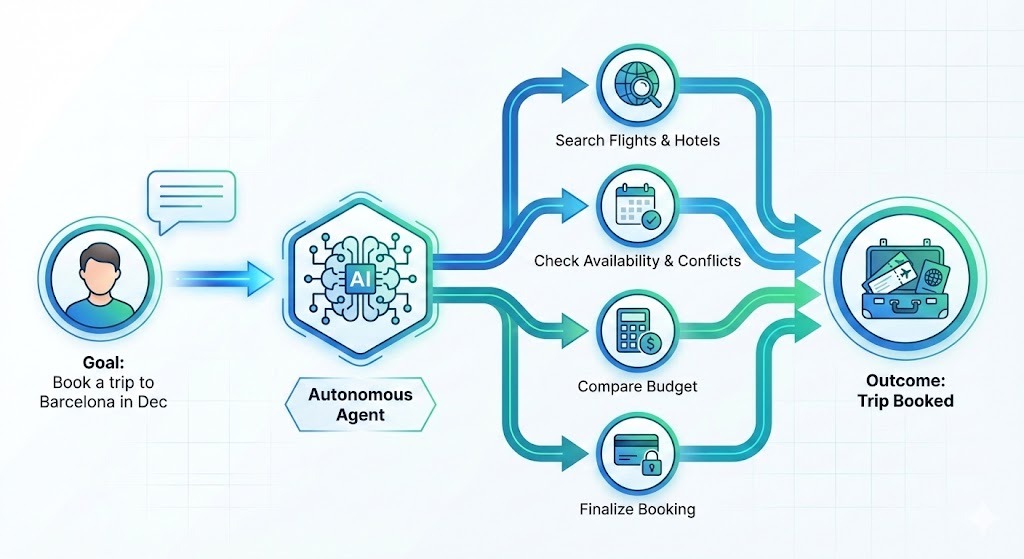

For the past couple of years, AI tools followed a predictable rhythm. You prompt, it answers. The human drove, and AI was along for the ride. That dynamic is shifting: Agentic AI systems don’t just answer questions; they formulate plans to achieve goals.

Say you want to book a trip to Barcelona in December. Instead of producing a list of hotels for you to research, an agent would:

- Search for options

- Filter by your preferences

- Cross-check pricing against your budget

- Check your calendar for conflicts

- Make the booking

You describe an outcome, and the system handles the steps to get you there.
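To make the steps above concrete, here is a minimal Python sketch of the same flow. Everything in it is an assumption for illustration: `search_hotels`, `calendar_is_free`, and `plan_trip` are hypothetical stand-ins for real search, calendar, and booking integrations, and the hotel data is made up. A real agent would typically let a model decide which step to take next rather than follow a fixed pipeline like this one.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Hotel:
    name: str
    price_per_night: float
    has_wifi: bool


# Hypothetical stand-in for a real hotel search integration (made-up data).
def search_hotels(city: str) -> list[Hotel]:
    return [
        Hotel("Casa Gracia", 140.0, True),
        Hotel("Hostal Sol", 75.0, False),
        Hotel("Hotel Mar", 110.0, True),
    ]


# Hypothetical stand-in for a calendar lookup.
def calendar_is_free(start: date, end: date, busy: list[tuple[date, date]]) -> bool:
    # The trip is free if it overlaps none of the busy date ranges.
    return all(end < b_start or start > b_end for b_start, b_end in busy)


def plan_trip(city, start, end, budget_per_night, busy):
    options = search_hotels(city)                                            # 1. search for options
    options = [h for h in options if h.has_wifi]                             # 2. filter by preferences
    options = [h for h in options if h.price_per_night <= budget_per_night]  # 3. check the budget
    if not calendar_is_free(start, end, busy):                               # 4. check the calendar
        return None
    if not options:
        return None
    choice = min(options, key=lambda h: h.price_per_night)
    return {"hotel": choice.name, "city": city, "status": "booked"}          # 5. make the booking


result = plan_trip("Barcelona", date(2025, 12, 10), date(2025, 12, 14), 120.0, busy=[])
print(result)
```

The point of the sketch is the shape of the interaction: the caller states a goal and constraints once, and the intermediate steps happen without further prompting.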

Corporate appetite for this is massive. Deloitte predicts 75% of companies will invest in agentic AI by late 2026. However, reality is currently lagging behind the vision. A mid-2025 UiPath survey found that while interest is high, only 30% of agent deployments actually reach production.

Bridging the gap with MCP

Why the gap? Integration, mostly. When researchers asked companies what blocked them, 46% pointed to connecting AI agents with existing systems. Writing an agent that can draft code is one challenge. Making it work reliably with your CRM, your ticketing platform, your internal APIs, without breaking things or creating new problems, turns out to be a different challenge entirely.

To solve this issue, the industry is rallying around open standards like Anthropic’s Model Context Protocol (MCP) and OpenAI's AGENTS.md specification. Both were contributed to the Agentic AI Foundation, which aims to provide neutral stewardship for this open infrastructure.

MCP, in particular, has gained a lot of traction. Think of it as "USB-C for AI": a standard connector that lets agents talk to different tools and platforms easily. It integrates with Claude, Cursor, Microsoft Copilot, VS Code, Gemini, and ChatGPT, to name a few.
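To give a feel for what a "standard connector" means, here is a toy sketch of the message shape. MCP is built on JSON-RPC 2.0, and clients discover and invoke tools through `tools/list` and `tools/call` requests. The dispatcher below is not the official SDK and leaves out most of the protocol (initialization, tool descriptions, input schemas, transports). The `get_ticket_status` tool and the `handle_request` function are hypothetical names invented for this example.

```python
import json

# Hypothetical tool registry; a real MCP server would register tools through an SDK.
TOOLS = {
    "get_ticket_status": lambda args: f"Ticket {args['ticket_id']} is open",
}


def handle_request(raw: str) -> str:
    """Answer a simplified MCP-style JSON-RPC request with a JSON-RPC response."""
    req = json.loads(raw)
    req_id = req.get("id")

    def error(code: int, message: str) -> str:
        return json.dumps({"jsonrpc": "2.0", "id": req_id,
                           "error": {"code": code, "message": message}})

    if req.get("method") == "tools/list":
        # Simplified: real tool listings also carry descriptions and input schemas.
        result = {"tools": [{"name": name} for name in TOOLS]}
    elif req.get("method") == "tools/call":
        params = req.get("params", {})
        tool = TOOLS.get(params.get("name"))
        if tool is None:
            return error(-32602, "Unknown tool")
        text = tool(params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": text}]}
    else:
        return error(-32601, "Method not found")
    return json.dumps({"jsonrpc": "2.0", "id": req_id, "result": result})


request = json.dumps({
    "jsonrpc": "2.0",
    "id": 1,
    "method": "tools/call",
    "params": {"name": "get_ticket_status", "arguments": {"ticket_id": "T-42"}},
})
response = json.loads(handle_request(request))
print(response["result"]["content"][0]["text"])
```

Because every tool is reached through the same request shape, an agent that speaks the protocol does not need custom glue code for each CRM or ticketing system it talks to.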

If you want to move beyond demos, check out our courses on Agentic AI for concepts and Designing Agentic Systems with LangChain for hands-on application.

Is Artificial General Intelligence (AGI) near?

The timeline to Artificial General Intelligence (AGI), meaning AI systems capable of performing tasks at a human level across domains, depends entirely on who you ask.

The CEOs of major AI labs are aggressive: Anthropic’s Dario Amodei has suggested 2026–2027 for systems that match Nobel laureates across fields ("a country of geniuses in a datacenter"). Similarly, Sam Altman and Demis Hassabis point to the next 3–5 years.

It is worth noting that these companies rely on investor enthusiasm to fund expensive research, so optimistic timelines serve a strategic purpose.

Skeptics offer a cooler take. Gary Marcus calls recent months "devastating" for AGI optimism, and former OpenAI researcher Andrej Karpathy places AGI at least a decade away. A broad 2023 survey of researchers pegged the median arrival date even further out, at 2047.

Ultimately, Stanford’s HAI offers the most useful framing: we are moving from "evangelism to evaluation." The most important question isn't when AGI arrives, but how well current systems handle specific tasks today vs. where they still require human oversight.

Emerging AI Technologies Beyond LLMs

Not everything interesting in AI involves making models bigger. Smaller models, quantum computing, and digital twins are reshaping what's possible at the edges of the field.

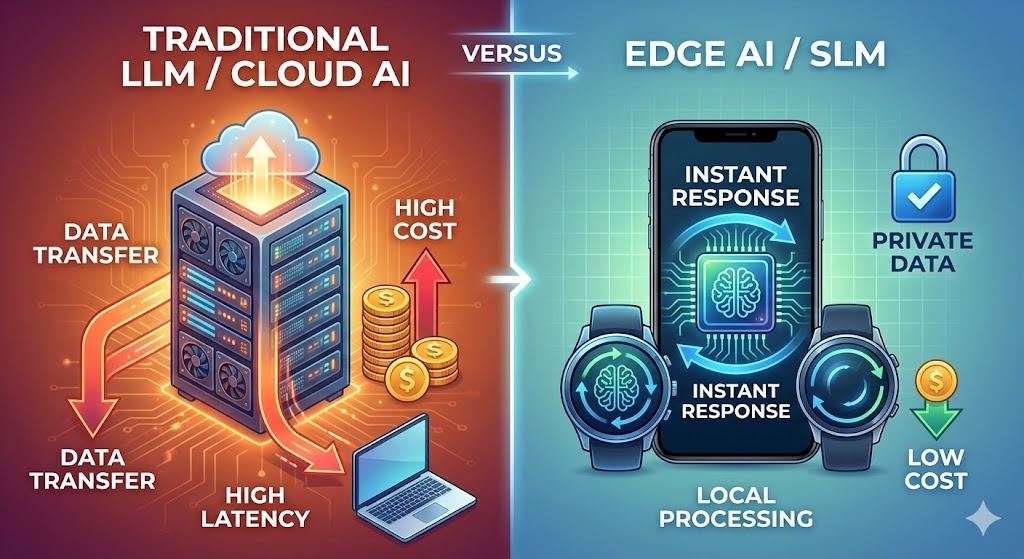

Small Language Models (SLMs) and edge AI

While everyone talks about larger and larger models, significant investment is going into smaller ones. Gartner predicts that organizations will deploy Small Language Models (SLMs), which are compact, task-specific models, at three times the rate of general-purpose large models by 2027.

The reasons are practical:

- Cost: Running massive models is expensive. OpenAI reportedly spent nearly double its revenue on inference in early 2025. SLMs cut these costs dramatically.

- Privacy: On-device models keep data local, a requirement for healthcare and finance. Consequently, the SLM market is projected to grow from $930 million in 2025 to $5.45 billion by 2032.

- Speed: Local inference eliminates network latency. For manufacturing quality control or fraud detection in banking, milliseconds matter. Cloud can't always deliver that.
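To put the market projection from the privacy point in perspective, you can convert it into an implied annual growth rate. The short calculation below uses only the two figures quoted above ($930 million in 2025 and $5.45 billion in 2032) and the standard compound annual growth rate formula. The `cagr` helper is just a name chosen for this example.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values over a number of years."""
    return (end_value / start_value) ** (1 / years) - 1


rate = cagr(930e6, 5.45e9, 2032 - 2025)
print(f"Implied annual growth: {rate:.1%}")
```

The result comes out a little under 30% per year, sustained for seven years, which is the assumption baked into that projection.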

NVIDIA researchers argue the next leap in AI won't be size, but specialization. Since tasks like email parsing or SQL queries don't require encyclopedic knowledge, the future architecture looks like model fleets: multiple specialized models working in concert rather than one massive generalist.
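The model fleet idea can be sketched as a simple router that sends each request to a specialist and falls back to a generalist only when no specialist fits. This is an illustration under loud assumptions: the three "models" are plain functions standing in for real models, and the keyword-based `classify` function is a placeholder for whatever routing logic a production system would use.

```python
from typing import Callable


# Hypothetical specialists; each function stands in for a small task-specific model.
def sql_model(prompt: str) -> str:
    return "sql-specialist: " + prompt


def email_model(prompt: str) -> str:
    return "email-specialist: " + prompt


# Hypothetical fallback standing in for a large general-purpose model.
def generalist_model(prompt: str) -> str:
    return "generalist: " + prompt


FLEET: dict[str, Callable[[str], str]] = {"sql": sql_model, "email": email_model}


def classify(prompt: str) -> str:
    # Keyword matching is a placeholder; a real router might be a small classifier.
    lowered = prompt.lower()
    if "select" in lowered or "sql" in lowered:
        return "sql"
    if "email" in lowered or "inbox" in lowered:
        return "email"
    return "other"


def route(prompt: str) -> str:
    # Use a specialist when one matches, otherwise fall back to the generalist.
    handler = FLEET.get(classify(prompt), generalist_model)
    return handler(prompt)


for prompt in ["Write a SQL query for monthly revenue",
               "Summarize this email thread",
               "Explain the causes of inflation"]:
    print(route(prompt))
```

The design choice worth noticing is that the expensive generalist becomes the exception path rather than the default.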

Google, Mistral, Microsoft, and Alibaba are all pushing hard here. Google AI Edge supports over a dozen models, including Gemma 3n, their first multimodal on-device model.

Comparing the approaches of SLMs and LLMs

The following table compares the two approaches. For a deeper dive, check out our article on SLMs vs LLMs.

| Aspect | Large Language Models | Small Language Models |
| --- | --- | --- |
| Where they run | Cloud servers | Devices, edge, local servers |
| Cost per request | Higher ($0.01-0.10 range) | Fractions of a cent |
| Response time | Network-dependent (100-500 ms) | Under 50 ms |
| Data handling | Leaves the device | Stays local |
| Customization | Resource-intensive | More accessible |
| Best for | Complex reasoning, creative work, broad knowledge | Specific tasks, reliability, speed |
| Energy use | Substantial | Modest |

Large models aren't becoming irrelevant. Complex problems still benefit from their power. The future likely involves both, with interesting questions about when to use each.

The convergence of quantum computing and AI

Quantum computing has been “five years away” for decades, but something shifted in late 2025, and it's worth paying attention to.

Google demonstrated a 13,000x speedup over the Frontier supercomputer using 65 qubits for physics simulations. IBM is targeting quantum advantage by 2026, and researchers have started using neural networks to predict and correct qubit errors in real time.

The field moved from theoretical promise to engineering problem.

The term gaining traction is "quantum utility." It is not about a full quantum advantage across all problems, but quantum systems solving specific problems faster or cheaper than classical alternatives. The targeted problems are math-heavy, involving so many variables that classical computers choke:

- Battery chemistry optimization

- Drug compound screening

- Portfolio risk modeling

JPMorgan Chase researchers recently achieved a milestone with a quantum streaming algorithm that processes large datasets with an exponential space advantage. That's not a press release. That's peer-reviewed work with measurable results.

That being said, the prediction range here is wide.

Manifold Markets, which tracks over 100 quantum-related forecasts, shows broad skepticism that quantum computers will outperform classical systems in cryptography or complex biological simulation by 2026. Most observers expect incremental engineering progress, not a breakthrough quantum advantage.

But the same markets show strong confidence that useful fault-tolerant quantum computers will arrive within the decade.

What does this mean practically?

The standalone quantum computer is a myth. The industry standard is now “heterogeneous compute”: hybrid architectures where quantum processors sit inside high-performance computing centers alongside GPU clusters, with AI systems managing the workflow.

For data scientists working in R&D sectors, this creates a new skill requirement. Quantum cloud APIs from IBM, Google, Amazon Braket, and Microsoft Azure Quantum let you run quantum subroutines without owning hardware. The learning curve isn't trivial, but it's more accessible than building your own quantum lab.

The shift in focus is subtle but important. While classical data science obsesses over "big data," quantum computing cares about "complex optimization." It tackles problems with combinatorial explosions, like molecular modeling, logistics, or financial risk with thousands of correlated factors. For these fields, quantum tools are moving from theoretical to relevant.

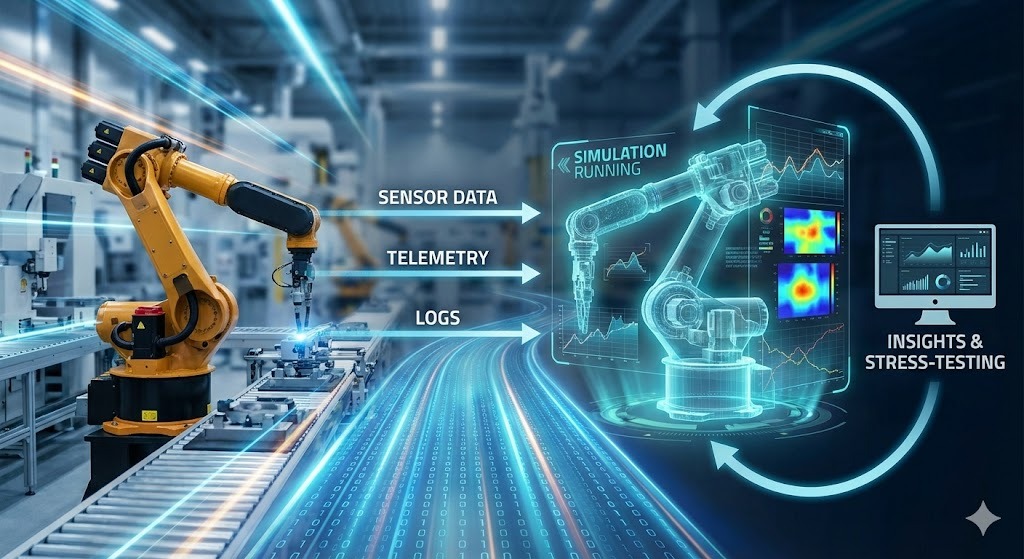

Digital twins and simulation

Digital twins are moving from buzzword to working infrastructure. The idea is straightforward: build a living virtual replica of a physical system (a factory line, a warehouse network, a power grid, a city district) and keep it synced with the real one through sensors, logs, and operational data. Then you use the twin to stress-test decisions before you touch reality.

Factories are the cleanest early case. A modern plant already produces the data a twin needs: PLC signals, machine telemetry, maintenance history, work orders, quality measurements, inventory movements. AI turns that flood into a model you can query. Not just dashboards, but counterfactuals. You can answer questions such as:

- What if a stamping press goes down for six hours on Tuesday morning?

- What if we swap supplier B for supplier C on this component, with a slightly different defect profile?

- What if we change the shift schedule so the experienced operators concentrate on the last two stations?

The value isn’t predicting the disruption. It’s tracing the downstream effects (missed windows, inventory gaps, cost of rerouting) so you can precompute solutions rather than improvising at 3 a.m.
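The first what-if question above, a press going down for six hours, can be illustrated with a toy simulation. This is a deliberately tiny sketch, not how a production twin is built: it assumes a two-station line (a press feeding an assembly station through a buffer), hourly time steps, and made-up rates. The `simulate_line` function and all its parameters are hypothetical.

```python
def simulate_line(hours, press_rate, assembly_rate, buffer_start, press_down=range(0)):
    """Return total units assembled when the press is offline during `press_down` hours."""
    buffer = buffer_start
    assembled = 0
    for hour in range(hours):
        if hour not in press_down:
            buffer += press_rate             # the press adds stamped parts to the buffer
        built = min(buffer, assembly_rate)   # assembly is limited by the parts available
        buffer -= built
        assembled += built
    return assembled


# Made-up numbers: 50 units per hour at both stations, 100 parts of buffer stock.
baseline = simulate_line(hours=24, press_rate=50, assembly_rate=50, buffer_start=100)
outage = simulate_line(hours=24, press_rate=50, assembly_rate=50, buffer_start=100,
                       press_down=range(8, 14))  # press offline from hour 8 to hour 13

print(f"Baseline: {baseline} units, with outage: {outage} units")
print(f"Lost output: {baseline - outage} units")
```

With these numbers the baseline produces 1,200 units and the outage scenario 1,000. The buffer absorbs the first two hours of the six-hour outage, so only four hours of output are lost. That downstream effect is the kind of answer a twin is meant to surface before the outage happens.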

The enabling shift is that AI is reducing the cost of building and maintaining these models. Modern ML models learn behavior directly from live data, detecting drift and keeping the twin aligned as the real system changes.

Small, specialized models also fit well here: one model for anomaly detection on a turbine, another for schedule optimization, another for demand forecasting, all feeding the same simulated environment.

If you want a sharper map of what’s next, consider reading 9 Open-Source LLMs for 2026 to run and fine-tune models without vendors and Top 13 AI Conferences for 2026, where the real implementation talk will happen.

The Future of AI in Work

Headlines about AI taking jobs tend to miss the nuance in the actual data. The picture that emerges from research is messier, more gradual, and probably less dramatic than either optimists or pessimists suggest.

Will AI replace jobs?

The numbers deserve more nuance than headlines typically provide.

The World Economic Forum surveyed over 1,000 leading employers for their 2025 Future of Jobs Report. They projected 170 million new roles created and 92 million displaced between 2025 and 2030, a net gain of 78 million jobs.

Although this is a net positive, the distribution matters considerably. In a study published in November 2025, MIT and Boston University researchers estimated AI will replace around two million manufacturing workers by 2026.

The distinction worth making is between tasks and roles. AI tends to automate specific activities within jobs more often than it eliminates whole positions at once. Your accountant isn't going anywhere, but the hours spent on data entry and reconciliation are shrinking.

MIT research from 2025 estimated that currently automatable tasks cover about 11.7% of U.S. workforce activity, which equates to roughly $1.2 trillion in wages. That doesn't mean 11.7% of workers lose their jobs, but it does mean significant chunks of what they do can now run as software.

Jobs facing more pressure include:

- Customer service, especially text-based support

- Data entry and processing

- Administrative work following predictable patterns

- Some retail cashier positions

- Routine legal research and paralegal tasks

Jobs involving the following features will face less pressure:

- Managing people

- Specialized judgment

- Ambiguous situations

- Physical presence in unpredictable environments

These patterns apply to healthcare, skilled trades, creative work, and strategic decision-making, among others.

Emerging career paths

New job categories are appearing, some of which didn't exist even five years ago:

- AI Systems Architect involves designing how AI components fit within organizations. You need to combine both technical understanding and knowledge of business processes.

- Prompt Engineer became an actual job title, though it's evolving toward what people call AI collaboration specialist. The role centers on understanding how to work effectively with these systems, not just writing clever inputs.

- AI Ethics and Compliance Manager covers regulatory requirements and auditing. Organizations need people who understand both the technical capabilities and the legal landscape to minimize risks related to AI systems.

- AI Trainer and Data Curator handles feedback loops, edge case annotation, and training data quality control.

The pattern I discovered is that domain expertise combined with AI fluency is what creates value. A nurse who understands AI diagnostic tools produces better outcomes than one who avoids them. A lawyer using AI for research while knowing its limits works faster without quality loss.

Employer surveys consistently rank analytical thinking as the most sought-after skill. Seven out of ten companies call it essential. Resilience, adaptability, and leadership follow.

Industry-Specific Changes

Some fields adopted AI tools faster than others, and the results vary more than you'd expect. Coding, healthcare, and finance each tell a different story about what works, what doesn't, and where the surprises show up.

Coding and data science

Software development adopted AI tools faster than most fields. The tools keep evolving.

According to the 2025 Stack Overflow survey, around 85% of developers use AI tools regularly. GitHub reports 41% of code created by Copilot users globally involves AI assistance. We're past autocomplete: Current systems understand entire repositories and make changes across multiple files.

Market leaders have emerged: GitHub Copilot holds about 42% of the market share, with over 20 million users and a presence in 90% of the Fortune 100 companies. Cursor came from nowhere to an 18% market share in eighteen months. They're reportedly doing $500 million in recurring revenue.

AI tools excel at certain types of coding work:

- Boilerplate, repetitive patterns

- Exploring unfamiliar APIs

- Deciphering legacy code

However, the gap between perception and reality matters.

In a July 2025 METR study, experienced developers used tools like Cursor Pro and Claude 3.5 Sonnet. Those developers completed tasks 19% slower than without AI assistance, yet they assumed they were working 20% faster.

The takeaway is that productivity gains depend on context. AI tools can actually slow you down when the type of work does not match their strengths and you understand the system better than the AI does.

The direction of travel is toward more autonomous capabilities. GitHub's Project Padawan represents a model where developers assign issues to AI systems, the AI implements, and humans review. The shift goes from AI suggesting code to AI completing features.

For data science, expect less time on preparation and more on interpretation. AI can take over handling SQL queries and data cleaning. This gives human data scientists the time to focus on asking the right questions and understanding what the results mean.

Healthcare

AI in healthcare sits in complicated territory: real potential, but cautious deployment, which is probably appropriate given the stakes.

AI-supported drug discovery is accelerating. AlphaFold and similar tools predict protein structures in hours instead of years. Compound screening happens at previously impractical scales. Some estimates suggest AI could compress drug development timelines by four or five years per medication.

Diagnostics have improved in specific areas, too, with radiology, pathology, and ophthalmology the furthest along. AI trained on medical imaging can find patterns human reviewers miss.

According to McKinsey, Mayo Clinic expanded its radiology staff by over 50% since 2016 while deploying hundreds of AI models. That combination is notable: AI augmented the work rather than replacing the people.

However, caution makes sense. Healthcare decisions carry consequences that social media recommendations don't: Wrong diagnoses can harm patients. The scientific and medical demand for understanding how models reach conclusions, not just whether they're accurate, creates real constraints.

Near-term reality is AI as a diagnostic assistant. Systems can flag potential issues, suggest tests, and catch things that might slip through in busy environments. But in the end, physicians make the final calls and maintain patient relationships.

Remote monitoring is accelerating as small language models move directly onto wearables. By processing data locally on the device, these systems minimize latency and ensure privacy, using AI to flag only specific anomalies for human review.
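The "process locally, escalate only anomalies" pattern can be sketched in a few lines. Note the assumptions: the example below uses a simple rolling z-score, not a language model, as a stand-in for whatever runs on the device. The heart-rate readings are made up, and `flag_anomalies`, the window size, and the threshold are hypothetical choices.

```python
from statistics import mean, stdev


def flag_anomalies(readings, window=5, threshold=3.0):
    """Return (index, value) pairs that deviate sharply from the preceding window."""
    flagged = []
    for i in range(window, len(readings)):
        recent = readings[i - window:i]
        mu, sigma = mean(recent), stdev(recent)
        # Compare the new reading against the spread of the previous `window` readings.
        if sigma > 0 and abs(readings[i] - mu) / sigma > threshold:
            flagged.append((i, readings[i]))
    return flagged


# Made-up heart-rate samples with one obvious spike.
heart_rate = [72, 74, 73, 75, 74, 73, 140, 74, 73, 72]
to_review = flag_anomalies(heart_rate)
print(to_review)  # only the spike at index 6 leaves the device
```

Of the ten readings, only one is escalated for human review. The other nine never need to leave the wearable, which is where the latency and privacy benefits come from.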

Finance

Financial services were early adopters of AI, having mastered high-speed fraud detection years ago, but agentic capabilities are pushing the sector into new territory.

While established tools handle fraud and routine customer service queries, the active frontier is now hyper-personalization. New systems can analyze individual risk tolerance and financial goals to offer sophisticated wealth management strategies to a much broader population.

However, this progress comes with a warning: regulators are increasingly scrutinizing these models to ensure that historical biases in lending data are not reproduced by the algorithms.

AI Challenges and Regulation

Technical limitations and policy gaps create real constraints on what AI can do responsibly. The black box problem, generated misinformation, and a fragmented regulatory landscape all shape what's actually deployable versus what's theoretically possible.

Technical and social issues

The black box problem hasn't been solved. When models make decisions, understanding why remains difficult. That's problematic when decisions affect loans, employment, and medical care.

Research focuses on interpretability. The Stanford HAI faculty expects increased attention to what they call "archaeology of high-performing neural nets" in 2026. Techniques like sparse autoencoders help identify which training features actually drive behavior.

Generated content creates further problems: Deepfakes and AI-produced misinformation get harder to detect by the day. Some estimates suggest AI-generated articles online may already exceed human-written content in volume.

Model collapse is a related concern for researchers. If models increasingly train on AI-generated content, which constitutes a growing share of available data, quality could degrade. Models end up consuming their own outputs in ways that compound errors.
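A toy simulation makes the compounding effect easier to see. The sketch below is not a language model. It repeatedly fits a Gaussian to samples drawn from the previous generation's fit, so each "model" learns only from synthetic data. The function name, sample size, generation count, and seed are all arbitrary choices for illustration.

```python
import random
import statistics


def simulate_collapse(generations=200, sample_size=10, seed=0):
    """Refit a Gaussian to its own samples and return the spread after each generation."""
    rng = random.Random(seed)
    mu, sigma = 0.0, 1.0                     # generation 0: the "real" data distribution
    spreads = [sigma]
    for _ in range(generations):
        # Each generation sees only samples produced by the previous generation's fit.
        samples = [rng.gauss(mu, sigma) for _ in range(sample_size)]
        mu = statistics.fmean(samples)
        sigma = statistics.pstdev(samples)
        spreads.append(sigma)
    return spreads


spreads = simulate_collapse()
print(f"Initial spread: {spreads[0]:.3f}")
print(f"Final spread after {len(spreads) - 1} generations: {spreads[-1]:.3e}")
```

Each small-sample fit tends to underestimate the spread slightly, and those small losses multiply across generations until the distribution has narrowed to almost nothing. Real model collapse involves far more complex dynamics, but the mechanism of errors compounding through self-training is the same in spirit.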

Regulatory landscape

Jurisdictions are taking different approaches.

The EU AI Act is the most wide-ranging AI rulebook currently in place. It’s built around a risk ladder: the higher the risk, the heavier the obligations. Systems used in employment and credit decisions sit in the “high-risk” bucket, which means stricter requirements around documentation, controls, and oversight.

The Act entered into force on 1 August 2024, but most obligations arrive on a staged timetable over the following years.

Energy shows up in a narrower, more practical way: providers of general-purpose AI models may need to document energy use (or provide estimates derived from compute when exact measurement isn’t feasible). Separately from the AI Act, EU data-centre and energy-efficiency reporting is tightening, which matters because AI workloads are a major new driver of electricity demand.

In practice, this drives teams toward a "good enough" architecture that prioritizes efficiency over raw power. By using smaller models and running inference at the edge, developers can process fewer tokens through expensive cloud systems, ultimately reducing compute costs, power consumption, and data transfer.
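A back-of-the-envelope calculation shows why this trade-off is attractive. The per-request prices below are hypothetical, chosen from within the ranges in the comparison table earlier in this article ($0.02 for a cloud call, a tenth of a cent for a local one). The `monthly_cost` function is just a name for this example.

```python
def monthly_cost(requests, edge_share, cloud_cost=0.02, edge_cost=0.001):
    """Blend per-request costs when `edge_share` of traffic runs on a small local model."""
    cloud_requests = requests * (1 - edge_share)
    edge_requests = requests * edge_share
    return cloud_requests * cloud_cost + edge_requests * edge_cost


all_cloud = monthly_cost(1_000_000, edge_share=0.0)
mostly_edge = monthly_cost(1_000_000, edge_share=0.8)
print(f"All cloud:   ${all_cloud:,.0f}")
print(f"80% at edge: ${mostly_edge:,.0f}")
```

Under these assumed prices, moving 80% of a million monthly requests to the edge cuts the bill from $20,000 to about $4,800. The exact figures will differ for any real workload, but the shape of the saving is what pushes teams toward smaller models.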

In the U.S., the situation is far less settled. Federal legislation remains deadlocked, and with the failure of 2025 efforts to override state-level rules, the regulatory environment is splintering. Consequently, builders through 2026 face a moving target of state-by-state compliance rather than a unified federal framework.

Preparing for the Future of AI

What actually helps you stay professionally relevant? To prepare, I suggest studying AI-related concepts, developing skills in technical areas, and getting hands-on experience.

Data and AI literacy

Data literacy is no longer optional. You don't need to be a data scientist, but you do need to understand how data moves, what makes it reliable, and how to interpret model outputs.

If you need a structured baseline, the Data Literacy Professional skill track covers all the essentials and offers you a certification to show what you’ve learned. For a more AI-oriented theoretical starter, I recommend enrolling in the AI Fundamentals skill track.

Technical skills

Basic technical skills remain the differentiator. AI tools make coding more accessible, but you still need to understand the logic to verify the output. Python and SQL are the "daily drivers" here.

A solid approach is to learn the language first, then the AI layer. Start with Introduction to Python to grasp the syntax. Follow that with Generative AI Concepts to understand how modern assistants behave—and where they fail.

Domain expertise and agents

The biggest shift is from chatbots to agents. Mere prompting is becoming a commodity. Designing workflows that integrate with real-world systems is where the value lies.

Domain expertise retains value. Knowing AI tools well, combined with deep field knowledge, produces more than either alone. The quickest way to make that combination real is to pick one domain workflow and learn how agents would plug into it.

To understand this architecture, enroll in our AI Agent Fundamentals skill track for the mental model. Once you’ve covered the theoretical part, take the Designing Agentic Systems with LangChain course to get hands-on experience in building a functioning system end-to-end. Seeing where these agents break is just as valuable as seeing them work.

Managing uncertainty

Finally, cultivate comfort with uncertainty. AI model outputs are probabilistic, so they're wrong sometimes. Understanding failure modes is crucial for anyone building customer-facing tools. You should be familiar with concepts like model collapse (how synthetic data degrades models) and the mechanics behind deepfakes.

To keep pace with the industry rather than the hype cycle, follow the practitioners. The Top 13 AI Conferences for 2026 list is a good place to find where engineers are comparing notes on what actually works in production.

Conclusion

Stanford faculty recently characterized the current moment as a shift from "evangelism to evaluation." That framing captures the reality perfectly. We are moving past the question of whether AI matters and into the gritty details of where it helps and how to deploy it responsibly.

While AGI timelines remain speculative and regulatory landscapes shift, the practical direction is clear: Agentic AI will enter enterprise workflows, and small models will handle routine tasks. The recurring theme is augmentation, not replacement. The most interesting future isn't one where AI does everything, but one where humans design the systems that amplify their own expertise.

The best strategy now is straightforward: Engage with the tools, maintain a healthy skepticism of both hype and doom, and treat learning as a continuous process. The changes are happening regardless of your readiness, so it is better to be in the driver's seat!

Future of AI FAQs

What is agentic AI, and why does it matter?

Unlike standard chatbots that answer one question at a time, agentic AI creates and executes a plan to achieve a goal. If you ask it to book a trip to Madrid, it doesn’t just list flights; it autonomously compares hotels, checks your calendar, and finalizes the booking. Deloitte predicts that 75% of companies will be investing in agentic systems by late 2026.

When will we reach Artificial General Intelligence (AGI)?

There is no consensus among experts. Optimistic estimates, like those discussed by Dario Amodei, suggest late 2026 or 2027 is possible if current trends hold (which he doubts). Others, like Demis Hassabis, view it as a 5-to-10-year journey, while skeptics like Gary Marcus believe it is much further away. A 2023 survey of researchers placed the median prediction around 2047.

Will AI take my job?

AI is unlikely to replace entire roles wholesale. The World Economic Forum predicts a "net positive" outcome through 2030, with 92 million jobs displaced but 170 million created. The shift will be in tasks rather than titles, with AI automating routine work. This puts pressure on customer service and data entry roles, while jobs requiring complex judgment and ambiguity should remain safe.

What are Small Language Models (SLMs)?

SLMs are compact versions of AI models designed for specific tasks rather than general knowledge. Because they are smaller, they can run directly on devices (like smartphones), offering lower costs and better data privacy. Gartner expects that by 2027, organizations will utilize these specialized models three times more often than massive general-purpose ones.

How is AI changing software development?

AI has become a standard companion for coders, with GitHub reporting that 41% of code in Copilot-assisted projects is AI-generated. Modern tools can now understand entire project repositories, not just single files. However, one study revealed that while experienced developers felt faster using AI, they actually completed tasks more slowly.

Josep is a freelance Data Scientist specializing in European projects, with expertise in data storage, processing, advanced analytics, and impactful data storytelling.

As an educator, he teaches Big Data in the Master’s program at the University of Navarra and shares insights through articles on platforms like Medium, KDNuggets, and DataCamp. Josep also writes about Data and Tech in his newsletter Databites (databites.tech).

He holds a BS in Engineering Physics from the Polytechnic University of Catalonia and an MS in Intelligent Interactive Systems from Pompeu Fabra University.