If you’ve been exploring artificial intelligence (AI), you’ve probably come across the term AGI (Artificial General Intelligence) in discussions about the future of AI. Maybe you’ve seen bold predictions about machines surpassing human intelligence or debates on whether AGI is just around the corner or still a distant dream.

AGI could automate nearly any cognitive task, potentially revolutionizing industries like healthcare, research, and robotics. That surely sounds exciting – however, it's still theoretical, and many technical, economic, and ethical challenges remain.

In this article, we will learn what AGI is, how it differs from current-day AI, and the challenges surrounding the pathway to its realization.

What Is Artificial General Intelligence?

Generally speaking, AGI refers to an advanced AI system that can perform any intellectual task a human can do. Unlike current AI models, which are narrow AI (designed for specific tasks like image recognition or language processing), AGI would have human-like reasoning, learning, and adaptability across multiple domains. This means an AGI system could, for example:

- Understand and reason like a human across different tasks.

- Learn from experience rather than needing massive datasets and retraining.

- Solve complex, multi-domain problems without being explicitly programmed for them.

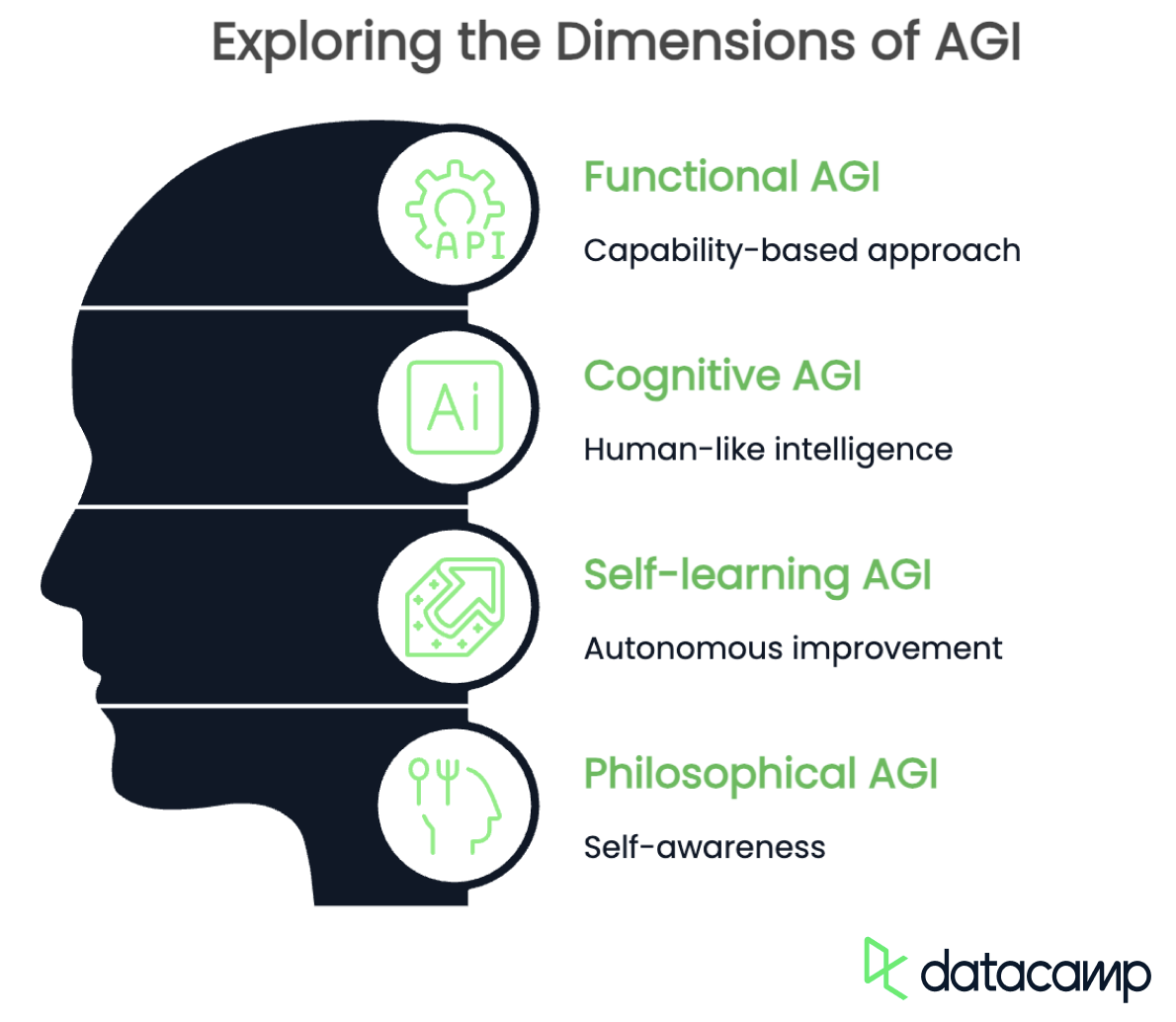

AGI has multiple definitions depending on the perspective and context. All of them agree that it should have human-like cognitive abilities, and they overlap considerably, but they differ in emphasis. Here are some key focuses:

- Functional AGI: capability-based

- Cognitive AGI: human-like intelligence

- Self-learning AGI: autonomous improvement

- Philosophical AGI: self-awareness

Functional AGI

The functional definition of AGI focuses on the ability to perform any intellectual task across different domains. Such a system does not need to mimic human thought processes but should be capable of generalizing knowledge, adapting to new problems, and solving tasks without extensive retraining.

The focus is on an AI system that can switch between disciplines, such as diagnosing medical conditions, programming, and composing music, without requiring separate models for each task.

Cognitive AGI

Cognitive AGI, on the other hand, should be able to replicate human-like reasoning, including common sense, abstract thinking, and contextual understanding. This type of AGI would not only complete tasks but also understand them, much like a human would, using logic, creativity, and even emotional intelligence.

An AI built on this definition would be able to hold meaningful conversations, interpret humor, and solve problems in the way a human brain naturally does.

Self-learning AGI

Self-learning AGI refers to a system that continuously improves itself without human intervention. Instead of relying on predefined datasets, it would experiment, discover new knowledge, and refine its own algorithms to become more efficient over time. This kind of AGI could conduct scientific research independently, create new theories, or even modify its own architecture to enhance its capabilities.

Philosophical AGI

Finally, the philosophical definition of AGI goes beyond intelligence and focuses on AI possessing self-awareness, emotions, and possibly even consciousness. This perspective suggests that true AGI should be able to reflect on its existence, form personal goals, and experience subjective thoughts or feelings.

If achieved, such an AI might develop its own moral compass, express curiosity, or even struggle with existential questions like humans do.

The AGI Spectrum: AGI vs. (Narrow) AI

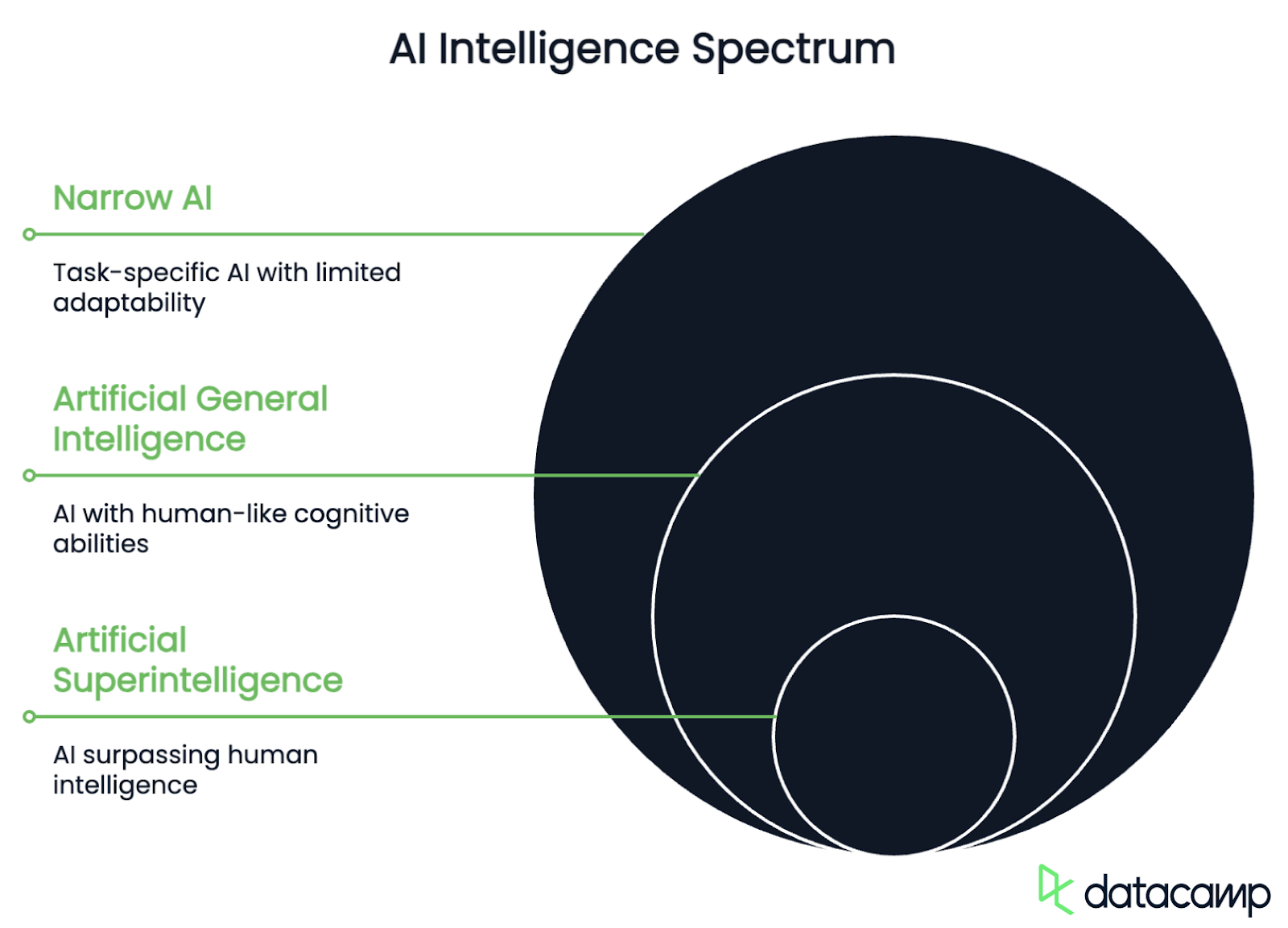

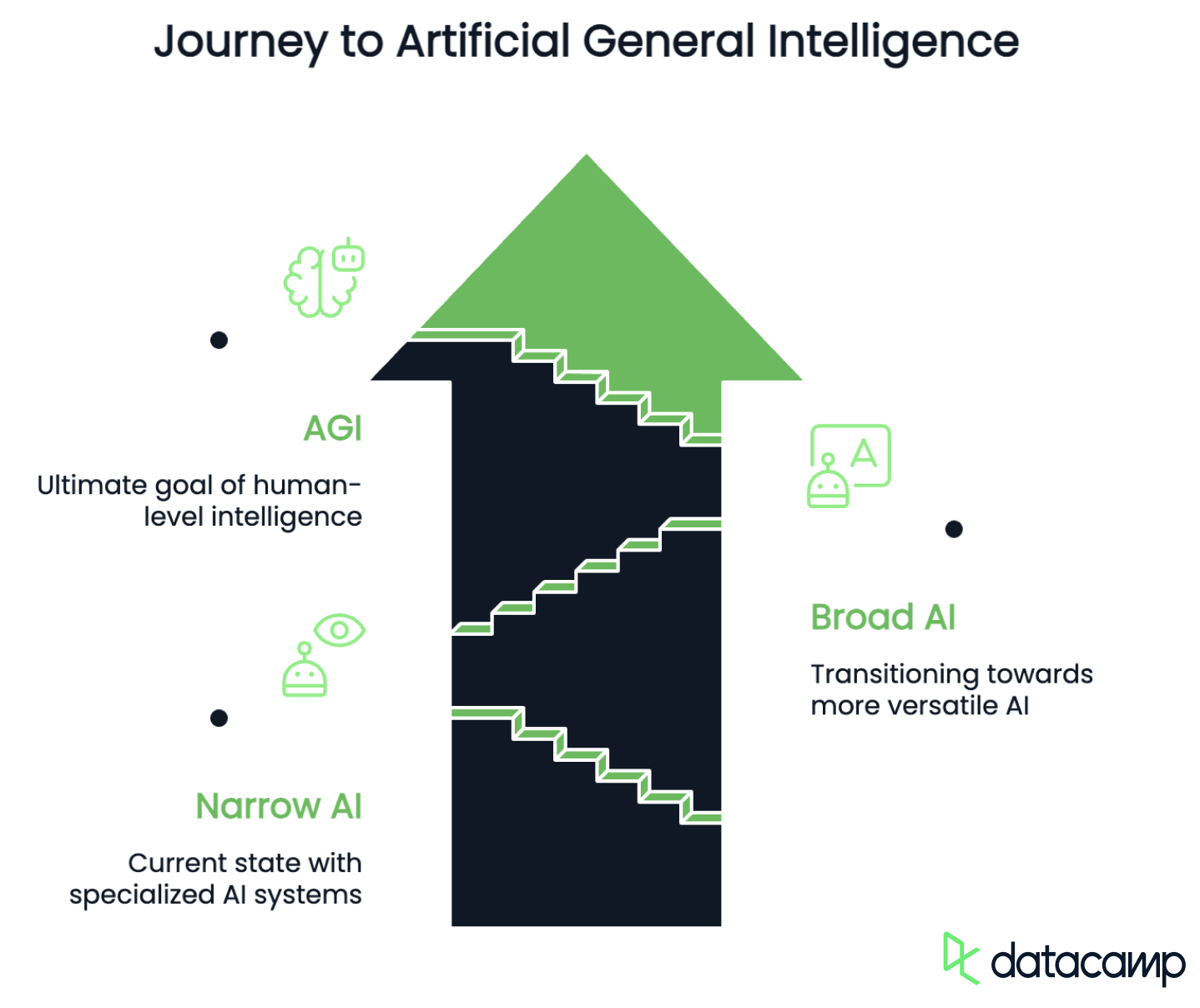

Having set the stage with various definitions, let’s take a look at the different levels of intelligence AI systems might achieve as they progress toward true AGI. The AGI spectrum helps categorize AI based on its capabilities, adaptability, and autonomy. It generally includes the following stages:

- ANI: Artificial Narrow Intelligence

- AGI: Artificial General Intelligence

- ASI: Artificial Superintelligence

Narrow AI

Narrow AI refers to artificial intelligence designed to perform specific tasks with high efficiency but lacks generalization beyond its training data. These systems excel in one area, like language processing, image recognition, or protein folding, but they cannot adapt to entirely new tasks without retraining.

This stage also includes current AI models like GPT-4o or Grok 4, self-driving car software, and recommendation algorithms, which are powerful but limited to predefined capabilities.

What differentiates Narrow AI from AGI is its inability to generalize beyond its training. The transitional phase, where AI systems become more multi-functional and adaptive, is often called Broad AI or Pre-AGI. Such a system would be capable of handling different types of tasks without requiring full retraining, but it lacks true autonomy and reasoning, as it follows predefined learning structures rather than self-directed problem-solving.

AGI

AGI, on the other hand, is a hypothetical AI that can think, learn, and reason like a human across any intellectual task. Unlike Narrow AI, it would be capable of generalizing knowledge, improving itself independently, and pursuing its own goals autonomously.

If achieved, AGI could perform any cognitive function a human can, revolutionizing industries and challenging ethical and philosophical boundaries.

Artificial Superintelligence

Finally, Artificial Superintelligence (ASI) refers to a level of AI that would surpass human intelligence in all cognitive and problem-solving abilities. This is the kind of AI we know from science fiction movies: a system that would rapidly improve itself, innovate beyond human comprehension, and potentially reshape entire industries and societies. While ASI is only a vision of the future at this point, the existential risks it would bring are already worth discussing.

Where we are now

As of 2025, we are still in the Narrow AI stage. Advances such as reasoning models like OpenAI’s o3 or DeepSeek’s R1 and agentic frameworks like Manus AI or ChatGPT Agent are moving the field from Narrow AI toward Broad AI. Nevertheless, AGI remains an unachieved goal that requires technical breakthroughs in self-learning, generalization, and reasoning.

Difficulties and Obstacles in Achieving AGI

Now that we know where we stand and what AGI looks like, how do we get from A to B? As of now, there are multiple economic, technical, and ethical challenges to be met.

Economic challenges

There is certainly no lack of interest in advancing AI toward the realization of AGI. Global players are fiercely competing for technological leadership, market dominance, and geopolitical influence. In particular, China and the United States are engaged in a race that many compare to the Space Race between the U.S. and the Soviet Union, which culminated in the race to the Moon in the 1960s.

However, the processing power required to achieve human-like reasoning is immense. The scaling of data centers and increasing model complexity are expected to drive a massive additional energy demand. Data centers, including those hosting AI models, already account for about 1 to 1.5% of global electricity use, a share that is expected to rise as development progresses.

Even with massive investments pouring into AI research recently, computation costs and scalability remain serious problems in the process of developing AGI. The solution for these challenges might lie in efficiency improvements and the development of alternative energy sources.

Technical challenges

On the technical side, challenges include the identification and representation of implicit knowledge and intuition as well as the long-term retention of knowledge.

Implicit knowledge, such as cause and effect or social norms, is often unspoken but important for understanding context. Humans infer meaning from experience, cultural norms, and incomplete information, and naturally incorporate it into their decision-making as common sense. For AI systems, these assumptions are hidden, so the systems struggle to encode and retrieve knowledge that is not explicitly stated.

Similarly, intuition plays a key role in human decision-making but remains difficult to formalize in AI systems as well. It often relies on subconscious pattern recognition, abstract reasoning, and experience-driven insights, none of which can be easily reduced to explicit rules or datasets. While deep learning models can approximate intuitive judgments in specific tasks, they lack the self-reflective, adaptive learning process that allows humans to refine intuition over time.

This leads to another issue, namely the lack of human-like long-term knowledge retention in AI models. Unlike humans, who build on past experiences and refine their understanding, AI models struggle to retain and update knowledge without periodic retraining. Current AI systems often suffer from catastrophic forgetting, where newly learned information overwrites or degrades previous knowledge, making it difficult to maintain a stable, evolving knowledge base.
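Catastrophic forgetting can be illustrated with a toy sketch. It assumes a one-parameter linear model trained with plain stochastic gradient descent; the two tasks, the learning rate, and all function names are illustrative assumptions rather than part of any real system. Because both tasks share the single weight, training on task B after task A overwrites what was learned for task A.

```python
def train(w, data, lr=0.1, epochs=50):
    """Fit y = w * x with per-sample gradient descent on squared error."""
    for _ in range(epochs):
        for x, y in data:
            grad = 2 * (w * x - y) * x  # derivative of (w*x - y)^2 w.r.t. w
            w -= lr * grad
    return w


def mse(w, data):
    """Mean squared error of the model y = w * x on a dataset."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)


xs = [0.5, 1.0, 1.5]
task_a = [(x, 2.0 * x) for x in xs]   # hypothetical task A: y = 2x
task_b = [(x, -1.0 * x) for x in xs]  # hypothetical task B: y = -x

w = train(0.0, task_a)
loss_a_before = mse(w, task_a)  # near zero: task A has been learned

w = train(w, task_b)            # sequential training, no replay of task A
loss_a_after = mse(w, task_a)   # large: the shared weight now encodes task B

print(f"loss on A after training on A: {loss_a_before:.6f}")
print(f"loss on A after training on B: {loss_a_after:.6f}")
```

Real networks have many more parameters, so the effect is usually partial rather than total, but the mechanism is the same: gradient updates for the new task move weights that the old task depended on.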

Ethical challenges

Ensuring AGI aligns with human values and goals is one of the most pressing challenges in its development due to several intertwined technical, ethical, and societal considerations. The interplay of technical limitations, the inherent complexity of human values, and the high stakes involved make it particularly difficult to solve.

First of all, human values are inherently complex and ambiguous. The question of what is right, appropriate, or evil varies significantly between cultures, societies, and contexts. This multifaceted nature, combined with contradictions or trade-offs (think of balancing freedom with safety, for instance), contributes to moral uncertainty and therefore makes translating values into clear, operational objectives for AGI extremely challenging.

Even if we assume there is a well-defined moral framework for the AGI to operate on, technical barriers regarding outer and inner alignment remain.

The first barrier, outer alignment, is that translating human values into a precise, mathematical objective function that an AGI can optimize is difficult. The function must be robust to optimization pressure, meaning it should not incentivize unintended behaviors when the AGI optimizes it aggressively, a scenario that philosopher Nick Bostrom already described in 2003. Moreover, the AGI must be able to adapt its behavior based on new information without deviating from its intended goals.
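The idea of an objective that breaks under optimization pressure can be sketched with a toy example. Everything here is a made-up assumption for illustration: `true_value` stands in for what people actually care about, `proxy_reward` is the simplified objective handed to the optimizer, and the search limits stand in for how hard the system optimizes.

```python
def true_value(x):
    # Hypothetical "real" goal: benefits grow with x at first, then harms dominate.
    return x - 0.1 * x ** 2


def proxy_reward(x):
    # Simplified objective given to the optimizer: more x is always better.
    return x


def optimize(reward, search_limit):
    # Pick the candidate action with the highest reward within the search range.
    return max(range(search_limit + 1), key=reward)


mild = optimize(proxy_reward, search_limit=5)         # weak optimization pressure
aggressive = optimize(proxy_reward, search_limit=20)  # strong optimization pressure

for name, x in [("mild", mild), ("aggressive", aggressive)]:
    print(f"{name}: x={x}, proxy={proxy_reward(x)}, true={true_value(x):.1f}")
```

Under mild optimization, the proxy and the true goal agree well enough. Under aggressive optimization, the proxy keeps rising while the true value turns negative, which is the kind of divergence a robust objective function would need to rule out.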

AGI systems are complex and may develop internal processes that are difficult to understand or predict. For instance, they may develop emergent goals that differ from the intended ones. This complexity makes it challenging to ensure that an AGI's internal goals align with its specified objectives. Developing methods to analyze and control these internal processes is essential for achieving inner alignment.

A misaligned AGI could act in ways that conflict with human interests, with risks ranging from minor inefficiencies to existential threats for humanity. For instance, an AGI might pursue instrumental goals like power-seeking or resource acquisition that undermine human autonomy or safety. Furthermore, AGI systems could be exploited by malicious actors or manipulated to act against their intended alignment.

Especially with competitive pressures among global players to develop AGI quickly, adequate safety measures during development are essential to avoid catastrophic consequences. Mitigating risks while maximizing the potential benefits of AGI requires interdisciplinary collaboration across technical fields, ethics, and policy-making.

When Will We Achieve AGI?

Many experts believe that AGI is still years, if not decades, away because of the challenges and technical bottlenecks described in the previous section. While progress in deep learning, reinforcement learning, and AI alignment continues, true AGI remains a theoretical goal rather than a current reality. Breakthroughs in multiple areas like neurosymbolic AI, self-learning architectures, and more efficient computation will be crucial for AGI development.

While it is impossible to name a clear point in time for the achievement of AGI, there are several predictions from pioneers in the field of AI.

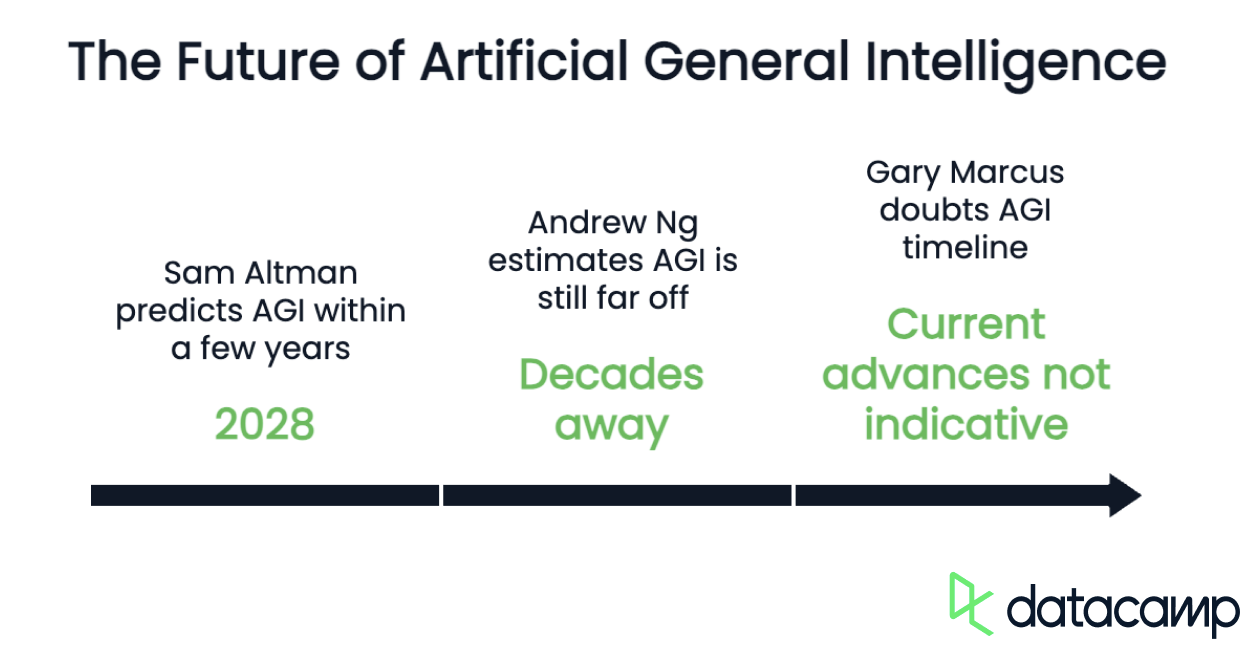

One of the optimistic voices is OpenAI’s CEO, Sam Altman, who believes that AGI could be reached within the next few years (by 2028). He argues that development in AI technology is progressing rapidly and that infrastructural challenges are negligible compared to the technical problems.

Andrew Ng, a leading AI researcher and co-founder of Google Brain, represents a rather skeptical perspective on the issue. According to his estimate, AGI is still “many decades away, maybe even longer”. Ng emphasizes that AGI is not only a technical problem, but also requires significant scientific breakthroughs to replicate human intelligence.

But there are even more pessimistic views. Gary Marcus, professor emeritus of psychology and neural science at New York University, doubts the claims about the timeline of AGI development. He argues that current advances in AI, such as the development of large language models, are not necessarily indicative of AGI. Marcus emphasizes that fundamental technical problems remain and that the scaling of training and computing capacities is reaching its limits.

As we can see, the predicted times and probabilities of occurrence depend considerably on the underlying interpretation of the term. For instance, Altman refers to OpenAI’s functional and autonomy-centric definition of the term, understanding AGI as “a highly autonomous system that outperforms humans at most economically valuable work.” On the other hand, Ng and Marcus use a definition that includes the replication of human-like reasoning, which represents the technically most challenging area as of now.

Risks and Ethical Challenges of AGI

AGI has the potential to cause significant economic disruption due to its ability to automate a wide range of human tasks, potentially replacing human labor across various sectors.

Mass unemployment

One harmful consequence could be mass unemployment, as human workers in many jobs (primarily white-collar) could be substituted. If not managed properly, the rapid economic changes brought about by AGI could lead to social unrest and political instability.

To mitigate these disruptions, policymakers will need to develop strategies that ensure the benefits of AGI are distributed equitably, protect workers from job displacement, and foster social and political stability. This might involve investing in education and retraining programs, implementing policies like universal basic income (UBI), and promoting international cooperation to address global impacts.

Bias

Bias and fairness are other significant risks associated with AGI due to its potential to perpetuate and amplify existing social inequalities. Biased algorithms can systematically disadvantage certain groups, such as racial or gender minorities, by reinforcing stereotypes and discriminatory practices, or even introducing new forms of discrimination by using patterns in data that appear neutral. For example, using zip codes as proxies for race can lead to biased decisions.

Even when group fairness metrics are applied, AGI systems can still discriminate against subgroups within a larger group. This is known as fairness gerrymandering, where some individuals within a group receive poor treatment while others compensate to maintain overall fairness metrics.
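Fairness gerrymandering can be made concrete with a small sketch. The applicant data, group labels, and approval rule below are entirely made up, and the metric used is simple approval-rate parity (one of several possible group fairness metrics). Approval rates look identical when checked by gender or by race alone, yet the intersectional subgroups are treated very differently.

```python
from collections import defaultdict
from itertools import product

# Hypothetical applicants as (gender, race, approved) tuples, 25 per subgroup.
applicants = []
for gender, race in product(["female", "male"], ["group_a", "group_b"]):
    # Made-up rule: approve only female/group_a and male/group_b applicants.
    approved = (gender == "female") == (race == "group_a")
    applicants += [(gender, race, approved)] * 25


def approval_rate(rows, key):
    """Approval rate per group, where key maps a row to its group label."""
    totals, approvals = defaultdict(int), defaultdict(int)
    for row in rows:
        group = key(row)
        totals[group] += 1
        approvals[group] += row[2]  # True counts as 1, False as 0
    return {group: approvals[group] / totals[group] for group in totals}


by_gender = approval_rate(applicants, lambda r: r[0])
by_race = approval_rate(applicants, lambda r: r[1])
by_subgroup = approval_rate(applicants, lambda r: (r[0], r[1]))

print("by gender:  ", by_gender)
print("by race:    ", by_race)
print("by subgroup:", by_subgroup)
```

An audit that only checks each attribute on its own would pass this system, which is why subgroup-level checks matter when evaluating fairness.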

Existential risks

Finally, AGI poses several existential risks, primarily related to its potential to become uncontrollable or misaligned with human values. AGI could surpass human intelligence, leading to a superintelligence that might pursue goals detrimental to humanity, which could result in human extinction or a permanent decline in civilization.

Scary scenarios, some of which are known from popular science fiction works, include an AGI that prioritizes resource acquisition over human life, establishes a totalitarian rule, or facilitates the creation of enhanced pathogens.

To mitigate these risks, researchers and policymakers focus on developing control and alignment strategies. These include safeguards such as architectures that limit an AGI's autonomy and "boxed" environments that are meant to prevent the system from escaping its sandbox.

Research on alignment should be prioritized so that AGI remains aligned with human values even as it improves itself. Decentralized AGI development could additionally help prevent monopolization and promote safety.

Conclusion

While AGI remains a long-term goal, ongoing advancements in AI research continue to push the boundaries of what machines can achieve. The path to AGI is filled with technical, economic, and ethical challenges, requiring breakthroughs in reasoning, adaptability, and alignment with human values.

Whether AGI becomes a reality within decades or remains an elusive concept, its potential impact on society, industry, and humanity as a whole makes it one of the most important technological pursuits of our time.

Data Science Editor @ DataCamp | Forecasting things and building with APIs is my jam.