
Big data is big business. The rapid digitalization of our society has resulted in unprecedented data growth. And, with the advent of new technologies and infrastructures, such as virtual reality, the metaverse, the Internet of Things (IoT), and 5G, this trend is likely to continue. Therefore, it’s crucial to understand how to analyze data.

Data has become one of the most precious assets in the 21st-century economy. Governments, companies, and individuals use data to improve their decision-making processes. This has resulted in a huge demand for qualified professionals to process and analyze vast amounts of data.

However, many companies still struggle to manage and make sense of data. According to a survey by Splunk, 55% of all data collected by companies is considered “dark data”, i.e., data that companies collect during their regular business activities but fail to use. While companies are sometimes simply unaware that the data exists, in most cases they don’t analyze it because they lack the right talent to do it.

Training employees using internal data science programs is one of the best strategies to address the data scientist shortage. Contrary to a common belief, you don’t need an advanced degree in Statistics or a PhD in Computer Science to start analyzing data. The market has plenty of options for all kinds of people and situations. For example, at DataCamp, we provide comprehensive data training for individuals and organizations.

In this article, we will introduce the data analysis process. We will present an easy framework, the data science workflow, with simple steps you need to follow to move from raw data to valuable insights.

How to Analyze Data With the Data Science Workflow

When data professionals start a new project involving data analysis, they generally follow a five-step process. It’s what we call the data science workflow, whose parts you can see below:

- Identify business questions

- Collect and store data

- Clean and prepare data

- Analyze data

- Visualize and communicate data

The data science workflow

In the following sections, we’ll look at each of these steps in more detail.

While the data science workflow may vary depending on the task, it’s important to stick to a coherent, well-defined framework every time you start a new data project. It will help you plan, implement, and optimize your work.

1. Identify Business Questions

Data is only as good as the questions you ask. Many organizations spend millions collecting data of all kinds from different sources, but many fail to create value from it. The truth is that no matter how much data your company owns or how many data scientists comprise the department, data only becomes a game-changer once you have identified the right business questions.

The first step to turning data into insights is to define a clear set of goals and questions. Below you can find a list of examples:

- What does the company need?

- What type of problem are we trying to solve?

- How can data help solve a problem or business question?

- What type of data is required?

- What programming languages and technologies will we use?

- What methodology or technique will we use in the data analysis process?

- How will we measure results?

- How will the data tasks be shared among the team?

By the end of this first step of the data science workflow, you should have a clear and well-defined idea of how to proceed. This outline will help you navigate the complexity of the data and achieve your goals.

Don’t worry about spending extra time on this step. Identifying the right business questions improves efficiency and will eventually save your company time and other resources.

2. Collect and Store Data

Now that you have a clear set of questions, it’s time to get your hands dirty. First, you need to collect your data and store it in a safe place where you can analyze it.

In our data-driven society, a huge amount of data is generated every second. The three main sources of data are:

- Company data. This is data created by companies in their day-to-day activities, such as web events, customer data, financial transactions, or survey data. It is normally stored in relational databases.

- Machine data. With recent advances in sensor and IoT technologies, an increasing number of electronic devices generate data, ranging from cameras and smartwatches to smart homes and satellites.

- Open data. Given the potential of data to create value for economies, governments and companies are releasing data that can be freely used. This is typically done via open data portals and APIs (Application Programming Interfaces).

We can then classify data into two types:

- Quantitative data. It’s information that can be counted or measured with numerical values. It’s normally structured in spreadsheets or SQL databases.

- Qualitative data. The main bulk of data that is generated today is qualitative. Some common examples are text, audio, video, images, or social media data. Qualitative data is often unstructured, making it difficult to store and process in standard spreadsheets or relational databases.

Depending on the business questions you aim to answer, different types of data and techniques will be used. Generally, collecting, storing, and analyzing qualitative data requires more advanced methods than quantitative data.
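To make the storage step more concrete, here is a minimal sketch of how quantitative company data, such as financial transactions, might be stored in and queried from a relational database. It uses Python’s built-in `sqlite3` module with an in-memory database; the `transactions` table, its columns, and the sample rows are hypothetical, chosen only for illustration. A production setup would typically use a database server rather than an in-memory SQLite file.

```python
import sqlite3

# Hypothetical transaction records: (customer_id, amount, date)
rows = [
    (1, 120.50, "2024-01-03"),
    (2, 75.00, "2024-01-03"),
    (1, 30.25, "2024-01-04"),
]

# An in-memory database stands in for a production relational database
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE transactions (customer_id INTEGER, amount REAL, date TEXT)"
)
# Parameterized inserts avoid building SQL strings by hand
conn.executemany("INSERT INTO transactions VALUES (?, ?, ?)", rows)
conn.commit()

# Once stored, the data can be queried with SQL, e.g. total spend per customer
totals = conn.execute(
    "SELECT customer_id, SUM(amount) FROM transactions "
    "GROUP BY customer_id ORDER BY customer_id"
).fetchall()
print(totals)  # [(1, 150.75), (2, 75.0)]
conn.close()
```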

3. Clean and Prepare Data

Once you’ve collected and stored your data, the next step is to assess its quality. The success of your data analysis depends greatly on the quality of your data: if your information is inaccurate, incomplete, or inconsistent, your insights will be wrong or misleading. That’s why spending time cleaning and preparing data is mandatory. Check out our article on the signs of bad data for more information.

Raw data rarely comes ready for analysis. Assessing data quality is essential to finding and correcting errors in your data. This process involves tasks like:

- Removing duplicate rows, columns, or cells.

- Removing rows and columns that won’t be needed during analysis. This is especially important if you’re dealing with large datasets that consume a lot of memory.

- Dealing with missing values (empty cells), also known as null values.

- Managing anomalous and extreme values, also known as outliers.

- Standardizing data structure and types so that all data is expressed in the same way.
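As a rough illustration, the sketch below applies several of these fixes to a handful of made-up records using plain Python: it removes duplicate rows, drops a column we assume won’t be needed, standardizes types and text formats, and fills missing prices with the median. The `clean` function, the column names, and the choice of median imputation are our own assumptions, and outlier handling is left out for brevity. In practice, pandas offers equivalents such as `DataFrame.drop_duplicates`, `DataFrame.drop`, and `DataFrame.fillna`.

```python
from statistics import median

# Hypothetical raw records showing typical quality problems
raw = [
    {"id": "1", "city": "Paris ", "price": "10.5", "notes": "ok"},
    {"id": "1", "city": "Paris ", "price": "10.5", "notes": "ok"},  # duplicate row
    {"id": "2", "city": "paris", "price": None, "notes": ""},       # missing price
    {"id": "3", "city": "Lyon", "price": "12.0", "notes": "late"},
    {"id": "4", "city": "LYON", "price": "11.0", "notes": ""},      # inconsistent casing
]


def clean(records, drop_columns=("notes",)):
    # 1. Remove exact duplicate rows, keeping the first occurrence
    seen, unique = set(), []
    for rec in records:
        key = tuple(sorted(rec.items()))
        if key not in seen:
            seen.add(key)
            unique.append(rec)

    # 2. Drop unneeded columns (builds new dicts, so `records` is left untouched)
    trimmed = [{k: v for k, v in rec.items() if k not in drop_columns} for rec in unique]

    # 3. Standardize types and formats: integer ids, float prices, tidy city names
    for rec in trimmed:
        rec["id"] = int(rec["id"])
        rec["city"] = rec["city"].strip().title()
        rec["price"] = None if rec["price"] is None else float(rec["price"])

    # 4. Fill missing prices with the median of the observed prices
    fill_value = median(r["price"] for r in trimmed if r["price"] is not None)
    for rec in trimmed:
        if rec["price"] is None:
            rec["price"] = fill_value
    return trimmed


cleaned = clean(raw)
print(len(cleaned))  # 4
print(cleaned[1])    # {'id': 2, 'city': 'Paris', 'price': 11.0}
```

Median imputation is just one option; depending on the analysis, dropping incomplete rows or flagging them may be more appropriate.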

Spotting errors and anomalies in data is itself a form of data analysis, commonly known as exploratory data analysis (EDA).

Exploratory Data Analysis

Exploratory data analysis is aimed at studying and summarizing the characteristics of data. The main methods to do this are statistics and data visualizations:

- Summary statistics condense data into a few informative numbers. Some common statistics are the mean, median, standard deviation, and correlation coefficients.

- Data visualization is the graphical representation of data. Depending on the type of data, some graphs will be more useful than others. For example, a boxplot is a great graph to visualize the distribution of data and spot extreme values.

The time invested in this phase depends greatly on the volume and quality of the data you want to analyze. Still, data cleaning is generally the most time-consuming step in the data science workflow; a commonly quoted estimate is that data scientists spend up to 80% of their time on it.

If you work in a company where data analysis is part of the day-to-day business activities, a great strategy to increase efficiency in this phase is to implement a data governance strategy. With clear rules and policies on how to clean and process data, your company will be better prepared to handle data and reduce the amount of time required for data cleaning.

If you’re interested in how the data cleaning process works and the main types of data problems, check out our Cleaning Data in Python Course and Cleaning Data in R Course. Also, if you want to learn how exploratory data analysis works in practice, our Exploratory Data Analysis in SQL course will help you get started.

4. Analyze Data

Now that your data looks clean, you’re ready to analyze data. Finding patterns, connections, insights, and predictions is often the most satisfying part of the data scientist's work.

Depending on the goals of the analysis and the type of data, different techniques are available. Over the years, new techniques and methodologies have appeared to deal with all kinds of data. They range from simple linear regressions to advanced techniques from cutting-edge fields, such as machine learning, natural language processing (NLP), and computer vision.

Below you can find a list of some of the most popular data analysis methods to go deeper into your analysis:

Machine learning

This branch of artificial intelligence provides a set of algorithms that enable machines to learn patterns and trends from historical data. Once trained, these algorithms can make predictions that generalize to new, unseen data. There are three main types of machine learning, depending on the type of problem to solve:

- Supervised learning involves training a model on a labeled set of historical data, from which it learns the relationship between input and output data. The model’s accuracy is then estimated on a separate test set whose output values are known in advance, so that the model can later be used to make predictions on new, unlabeled data. To learn more about supervised learning, take DataCamp’s Supervised Learning with scikit-learn Course.

- Unsupervised learning identifies the intrinsic structure of data without being given a dependent variable: it detects common patterns, groups data points based on their attributes (for example, through clustering), and can then assign new data points to these groups. If you want to expand your knowledge of unsupervised learning, consider our Unsupervised Learning in Python Course.

- Reinforcement learning involves an algorithm that learns progressively by interacting with an environment. Based on its past experience, it identifies which actions bring it closer to the goal and which drive it away, and then performs the best action at each step. The algorithm receives rewards for correct actions and penalties for wrong ones, which lets it work out an optimal strategy. Ready to learn more? Check out this Introduction to Reinforcement Learning tutorial.


Deep learning

A subfield of machine learning that uses algorithms called artificial neural networks, inspired by the structure of the human brain. Compared with conventional machine learning algorithms, deep learning models are more complex and hierarchical (they stack many layers of nonlinear transformations), can learn from enormous amounts of data, and can produce highly accurate results, especially when dealing with unstructured data such as audio and images.
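A toy example helps show why stacking nonlinear layers matters. The XOR function (output 1 when exactly one input is 1) cannot be computed by any single linear model, but a network with one hidden layer of ReLU units can. In the sketch below the weights are set by hand, following a classic textbook construction, rather than learned; real deep learning models learn their weights, often millions of them, from data using frameworks such as PyTorch or TensorFlow.

```python
def relu(z):
    # Rectified linear unit: passes positive values, zeroes out negative ones
    return max(0.0, z)


def tiny_network(x1, x2):
    # Hidden layer: two neurons, each a weighted sum of the inputs plus a bias, then ReLU
    h1 = relu(1.0 * x1 + 1.0 * x2 + 0.0)
    h2 = relu(1.0 * x1 + 1.0 * x2 - 1.0)
    # Output layer: a weighted sum of the hidden activations
    return 1.0 * h1 - 2.0 * h2


for x1, x2 in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(x1, x2, tiny_network(x1, x2))
```

Removing the ReLU from the hidden layer collapses the network into a single linear function of the inputs, which can no longer reproduce XOR.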

Natural language processing

A field of artificial intelligence that studies how to give computers the ability to understand human language, both written and spoken. NLP is one of the fastest-growing fields in data science. To get started, you can enroll in our Natural Language Processing in Python Skill Track. Some of the most popular NLP techniques are:

- Text classification. One of the most important tasks in text mining, text classification is a supervised approach that identifies the category or class of a given text, such as a blog post, book, web page, news article, or tweet.

- Sentiment analysis. A technique that quantifies the opinions, beliefs, or attitudes expressed in users’ content, for example whether a product review is positive or negative. Sentiment analysis helps organizations understand people’s views more accurately.
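Sentiment analysis is often framed as a text classification problem with labels such as positive and negative. As a minimal sketch of that supervised approach, the code below trains a multinomial naive Bayes classifier (one classic technique among many) on four made-up product reviews. The helper names (`tokenize`, `train_nb`, `predict_nb`) and the training data are hypothetical; scikit-learn offers production-ready counterparts in `CountVectorizer` and `MultinomialNB`.

```python
import math
import re
from collections import Counter, defaultdict


def tokenize(text):
    # Lowercase the text and keep runs of letters and apostrophes as tokens
    return re.findall(r"[a-z']+", text.lower())


def train_nb(docs):
    # docs: list of (text, label) pairs; count documents per label and words per label
    class_counts = Counter(label for _, label in docs)
    word_counts = defaultdict(Counter)
    vocab = set()
    for text, label in docs:
        tokens = tokenize(text)
        word_counts[label].update(tokens)
        vocab.update(tokens)
    return class_counts, word_counts, vocab


def predict_nb(model, text):
    class_counts, word_counts, vocab = model
    total_docs = sum(class_counts.values())
    best_label, best_score = None, -math.inf
    for label, n_docs in class_counts.items():
        # Log prior plus the log likelihood of each token under this label
        score = math.log(n_docs / total_docs)
        total_words = sum(word_counts[label].values())
        for token in tokenize(text):
            # Add-one (Laplace) smoothing keeps unseen words from zeroing the probability
            score += math.log((word_counts[label][token] + 1) / (total_words + len(vocab)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label


training = [
    ("great camera and great battery", "positive"),
    ("love this phone", "positive"),
    ("terrible battery life", "negative"),
    ("hate the screen, terrible", "negative"),
]
model = train_nb(training)
print(predict_nb(model, "great phone"))      # positive
print(predict_nb(model, "terrible screen"))  # negative
```

With only four training sentences this is a demonstration, not a usable model; real sentiment classifiers are trained on thousands of labeled examples.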

Computer vision

The goal of computer vision is to help computers see and understand the content of digital images. Computer vision is necessary to enable, for example, self-driving cars. A great way to get started in the field is with our Image Processing with Python Skill Track.

Some of the most popular computer vision techniques are:

- Image classification. One of the simplest computer vision techniques, whose main objective is to classify an image into one or more categories.

- Object detection. This technique detects which classes of objects are present in an image and where they are located. The most common approach is to localize each detected object with a bounding box.
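Object detectors are commonly evaluated by comparing each predicted bounding box with a labeled (ground-truth) box using Intersection over Union (IoU): the area where the two boxes overlap divided by the total area they cover together. Here is a minimal sketch, assuming boxes are given as `(x_min, y_min, x_max, y_max)` in pixel coordinates; the box values are hypothetical.

```python
def iou(box_a, box_b):
    """Intersection over Union of two boxes given as (x_min, y_min, x_max, y_max)."""
    # Corners of the overlapping rectangle, if the boxes overlap at all
    x_left = max(box_a[0], box_b[0])
    y_top = max(box_a[1], box_b[1])
    x_right = min(box_a[2], box_b[2])
    y_bottom = min(box_a[3], box_b[3])
    # Clamp at zero so non-overlapping boxes get an intersection of 0
    inter = max(0, x_right - x_left) * max(0, y_bottom - y_top)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


predicted = (50, 40, 150, 140)     # hypothetical detector output
ground_truth = (60, 50, 160, 150)  # hypothetical labeled box
print(round(iou(predicted, ground_truth), 3))  # 0.681
```

A prediction is often counted as correct when its IoU with the ground truth exceeds a threshold such as 0.5.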

5. Visualize and Communicate Results

The last step of the data science workflow is visualizing and communicating the results of your data analysis. To turn your insights into decisions, you must ensure your audience and key stakeholders understand your work.

In this final step, data visualization is the dancing queen. As already mentioned, data visualization is the act of translating data into a visual context. This can be done using charts, plots, animations, infographics, and so on. The idea behind it is to make it easier for humans to identify trends, outliers, and patterns in data.

Whether static charts and graphs or interactive dashboards, data visualization is crucial to make your work understandable and communicate your insights effectively. Here’s a list of the most popular data visualization tools:

Python packages

Python is a high-level, interpreted, general-purpose programming language. It offers several great graphing packages for data visualization, such as:

- Matplotlib

- Seaborn

- Plotly

- Bokeh

- Geoplotlib

The Data Visualization with Python skill track is a great sequence of courses to supercharge your data science skills using Python's most popular and robust data visualization libraries.
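As a starting point, here is a minimal Matplotlib sketch that turns a few numbers into a labeled bar chart and saves it as an image you could drop into a report or slide deck. The revenue figures and the output filename are hypothetical; the `Agg` backend is selected so the script also runs on machines without a display.

```python
import matplotlib

matplotlib.use("Agg")  # non-interactive backend: render straight to files
import matplotlib.pyplot as plt

# Hypothetical monthly revenue, in thousands
months = ["Jan", "Feb", "Mar", "Apr"]
revenue = [120, 135, 128, 150]

fig, ax = plt.subplots(figsize=(6, 4))
ax.bar(months, revenue, color="steelblue")
# A clear title and axis labels make the chart readable without extra explanation
ax.set_title("Monthly revenue")
ax.set_xlabel("Month")
ax.set_ylabel("Revenue (thousands)")
fig.tight_layout()
fig.savefig("monthly_revenue.png", dpi=100)
plt.close(fig)
```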

R packages

R is a programming language for statistical computing and graphics. It’s a great tool for data analysis, as you can create almost any type of graph using its various packages. Popular R data visualization packages include:

- ggplot2

- Lattice

- highcharter

- Leaflet

- Plotly

Check out the Data Visualization with R course and Interactive Data Visualization in skill track to level up your visualization skills with the R programming language.

Code-free open-source tools

Code-free tools are an accessible solution for people who may not have programming knowledge, although people with programming skills may still opt to use them. More formally, code-free tools are graphical user interfaces that can run native scripts to process and augment data. Some of the most popular ones are:

- RAWGraphs

- Datawrapper

- Google Charts

Business Intelligence tools

These all-in-one tools are widely used by data-driven companies to gather, process, integrate, visualize, and analyze large volumes of raw data in a way that supports business decision-making. Some of the most common business intelligence tools are:

- Tableau

- Power BI

- Qlik

To learn more about these tools, we highly recommend our Introduction to Tableau course and Introduction to Power BI course.

In recent years, innovative approaches have been proposed to improve data communication. One of these is data storytelling, an approach that advocates for using visuals, narrative, and data to turn data insights into action. Check out our episode of the DataFramed Podcast with Brent Dykes, author of Effective Data Storytelling: How to Drive Change with Data, Narrative, and Visuals, to learn more about this approach.

Conclusion

We hope you enjoyed this article and are ready to start your own data analysis. An excellent way to get started is to enroll in our Data Science for Everyone course. Through hands-on exercises, participants will learn about different data scientist roles, foundational topics like A/B testing, time series analysis, and machine learning, and how data scientists extract insights from real-world data.

Following up on the introductory course, we offer comprehensive tracks for learners to continue their learning journey. Students can choose their preferred language (Data Scientist with Python, R, or SQL) in the career tracks, where essential data skills are taught through systematic, interactive exercises on real-world datasets.

Once you complete one of these career tracks, you can proceed to the data science certification program to have your new technical skills validated and certified by experts.

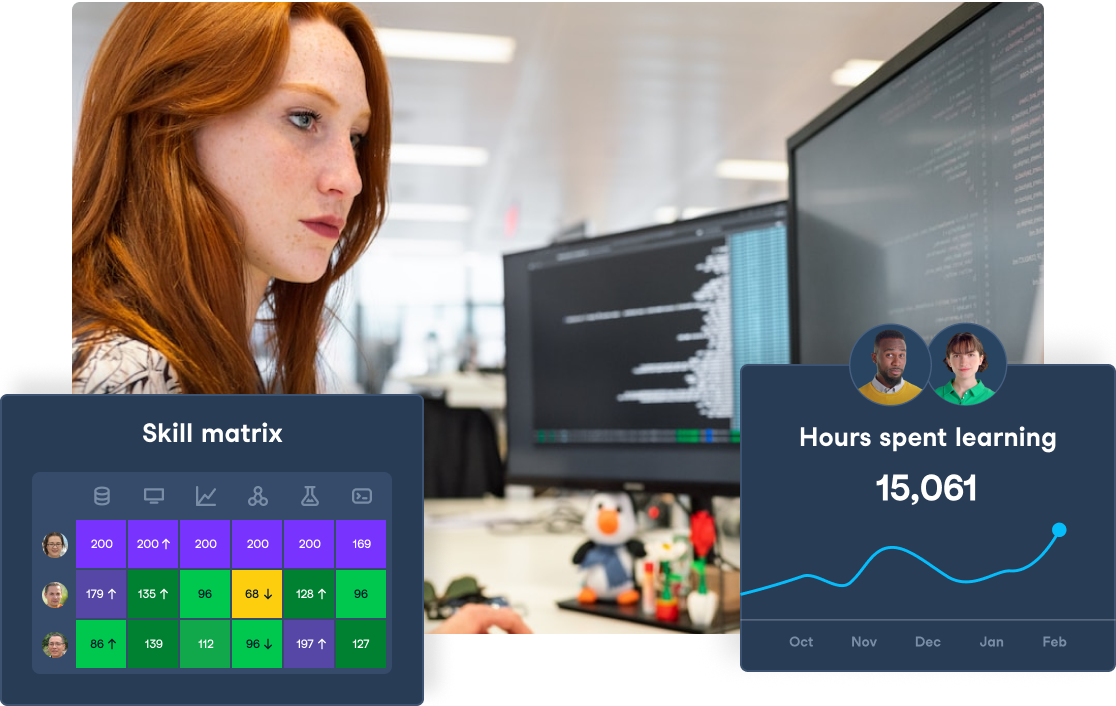

Advance Your Team's Data Science Skills

Unlock the full potential of data science with DataCamp for Business. Access comprehensive courses, projects, and centralized reporting for teams of 2 or more.

How to Analyze Data FAQs

What is data analysis?

Data analysis is the process of collecting, cleaning, transforming, and modeling data to discover useful information. See our full 'what is data analysis' guide for a deeper explanation.

What is the data science workflow?

It’s a five-step framework to analyze data. The five steps are: 1) Identify business questions, 2) Collect and store data, 3) Clean and prepare data, 4) Analyze data, and 5) Visualize and communicate data.

What is the purpose of the data cleaning step?

To spot and fix anomalies in your data. This is a critical step before you start analyzing data.

What is data visualization?

The graphic representation of data. This can be done through plots, graphs, maps, and so on.

Do I need a STEM background to become a data analyst?

No! Although learning to code can be challenging, everyone is welcome in data science. With patience, determination, and willingness to learn, the sky's the limit.