
Traditional operating systems (OS) like Windows, macOS, and Linux have long dominated the computing landscape. However, imagine a scenario where you type, “Organize my cluttered desktop and set a calming wallpaper,” and it happens within seconds.

This future isn’t far off, thanks to the development of large language model operating systems (LLM OS).

Unlike traditional OSs that rely on predefined commands and graphical interfaces, an LLM OS centers on an AI model that interprets and executes commands based on natural language.

This paradigm shift moves towards a more conversational and intuitive interaction model, where the OS acts more like an intelligent assistant than a tool requiring constant manual input. As a result, computers could become more accessible to a broader audience, regardless of technical proficiency.

In this article, you will learn about what LLM Operating Systems are, how they differ from traditional systems, the milestones that have shaped their development, and the challenges and ethical considerations that come with their adoption.


What Are LLM Operating Systems?

An LLM operating system (LLM OS) represents a significant evolution in how computers work, moving from traditional, rigidly structured systems to more fluid, AI-driven environments.

Put simply, an LLM OS is an operating system whose core functions, such as user interaction, task management, and system control, are powered by an LLM.

This means that instead of interacting with your computer through predefined commands or menus, you interact through natural language. You can speak or type instructions in everyday language, and the LLM interprets these instructions to carry out tasks, manage applications, and even automate complex workflows.

For example, you could say, “Install the latest version of Photoshop," and the LLM would handle the entire process, including finding the software, downloading it, and configuring it correctly.

Key Characteristics of LLM OS

Let’s explore the key characteristics of an LLM OS in more detail.

Natural language interface

The most prominent feature of an LLM OS is its ability to understand and execute commands delivered in natural language. This means that instead of needing to know specific commands or navigate through complex menus, users can simply instruct the system in the same way they would speak to another person.

Example: If you want to send an email, instead of opening an email client and manually typing everything, you could simply say, "Send an email to John saying I'll be late to the meeting," and the system would handle the rest.
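
To make this concrete, here is a minimal sketch of the first step: turning a sentence into a structured intent the system can act on. The `Intent` class and `parse_request` function are hypothetical names, and the regular expression is only a stand-in for the LLM, which in a real system would produce this structure for arbitrary phrasing.

```python
import re
from dataclasses import dataclass


@dataclass
class Intent:
    action: str
    params: dict


def parse_request(text: str) -> Intent:
    """Stand-in for the LLM: a real system would ask a model for this structure."""
    match = re.match(r"send an email to (\w+) saying (.+)", text.strip(), re.IGNORECASE)
    if match:
        return Intent("send_email", {"to": match.group(1), "body": match.group(2)})
    # Anything the stand-in parser does not recognize is flagged, not guessed.
    return Intent("unknown", {"text": text})


intent = parse_request("Send an email to John saying I'll be late to the meeting")
print(intent.action, intent.params)
```

Once the request is in this form, the rest of the system can validate it and hand it to an email tool instead of working with raw text.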

AI-driven task execution

The LLM not only interprets what you ask it to do but also decides the best way to execute those tasks. This involves understanding context, managing resources, and potentially handling multiple applications to achieve the desired outcome.

Example: A request like "Organize all my files by project and send the latest drafts to the team" could involve sorting files, creating folders, selecting relevant documents, and sending emails—all tasks managed smoothly by the LLM OS.
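
The request above breaks down into smaller steps that can be sketched in plain code. The example below assumes the system has already inferred a project and a modification time for each file (the `Draft` record is hypothetical) and shows only the sorting and selection steps, not the emailing.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Draft:
    project: str   # hypothetical metadata the system would infer
    name: str
    modified: int  # e.g. a Unix timestamp


def group_by_project(files):
    """Step 1: sort files into one bucket per project."""
    groups = defaultdict(list)
    for f in files:
        groups[f.project].append(f)
    return dict(groups)


def latest_drafts(groups):
    """Step 2: pick the most recently modified file in each project."""
    return {project: max(items, key=lambda f: f.modified).name
            for project, items in groups.items()}


files = [
    Draft("website", "home_v1.docx", 100),
    Draft("website", "home_v2.docx", 200),
    Draft("budget", "q3.xlsx", 150),
]
to_send = latest_drafts(group_by_project(files))
print(to_send)  # {'website': 'home_v2.docx', 'budget': 'q3.xlsx'}
```

In an LLM OS, the model's job would be to choose and order steps like these; the steps themselves can remain ordinary, testable code.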

Adaptability and flexibility

Unlike traditional systems that require manual customization and have limited adaptability, an LLM OS can learn from your interactions and adjust its behavior to better suit your preferences and workflows. Over time, it becomes more aligned to your needs, offering suggestions and automating repetitive tasks without explicit instructions.

Example: If you frequently work on reports every Monday, the LLM OS might start preparing draft reports for you automatically, based on the data and formats you’ve used in the past.
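
One simple way to approximate this kind of adaptation is to count how often a task recurs on the same weekday. The sketch below is an illustration under that assumption, not how any particular LLM OS learns; `recurring_tasks` and the `min_count` threshold are hypothetical.

```python
from collections import Counter
from datetime import date

WEEKDAYS = ("Monday", "Tuesday", "Wednesday", "Thursday",
            "Friday", "Saturday", "Sunday")


def recurring_tasks(history, min_count=3):
    """Return (weekday, task) pairs seen at least `min_count` times."""
    counts = Counter((WEEKDAYS[day.weekday()], task) for day, task in history)
    return [pair for pair, n in counts.items() if n >= min_count]


history = [
    (date(2024, 9, 2), "write report"),    # Monday
    (date(2024, 9, 9), "write report"),    # Monday
    (date(2024, 9, 16), "write report"),   # Monday
    (date(2024, 9, 11), "review budget"),  # Wednesday
]
print(recurring_tasks(history))  # [('Monday', 'write report')]
```

A pattern found this way could then trigger a suggestion such as preparing a draft report ahead of time.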

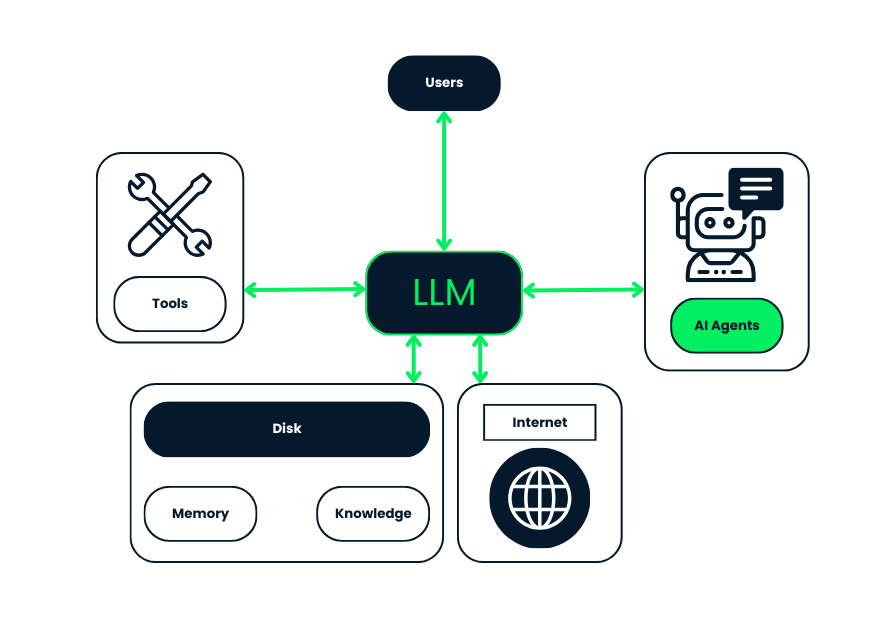

This diagram illustrates the architecture of an LLM OS, showing how the different components interact within the system.

- LLM: It serves as the core engine of the system. It processes and understands natural language inputs, giving the OS the ability to interpret user commands, manage tasks, and communicate with other components.

- Users: They can interact with the system by giving natural language commands. The LLM interprets these inputs to execute tasks.

- Tools: The LLM OS integrates with various software tools and applications, which it can access to perform specific tasks. The LLM coordinates with these tools to carry out the user's instructions efficiently.

- AI Agents: These can handle specific tasks autonomously. The LLM communicates with these AI agents to delegate tasks or gather information, improving the system's overall functionality and responsiveness.

- Disk: This represents the system's storage resources, including memory and knowledge bases. The LLM accesses these resources to maintain context, retrieve information, and learn from previous interactions, improving its responses over time.

- Internet: The system’s access to the internet allows the LLM to search for information, perform tasks requiring online connectivity, and integrate external data and resources into its operations.
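
The components above can be wired together in a few lines. The sketch below is a toy model of the diagram rather than a real implementation: `MiniLLMOS`, `keyword_router`, and the two registered tools are hypothetical, and the keyword router stands in for the LLM's decision about which tool to use.

```python
from typing import Callable, Dict, List


class MiniLLMOS:
    """Toy wiring of the components above; all names are hypothetical."""

    def __init__(self, choose_tool: Callable[[str], str]):
        self.choose_tool = choose_tool                    # stand-in for the LLM
        self.tools: Dict[str, Callable[[str], str]] = {}  # tools and AI agents
        self.memory: List[str] = []                       # "disk": interaction history

    def register(self, name: str, tool: Callable[[str], str]) -> None:
        self.tools[name] = tool

    def handle(self, request: str) -> str:
        name = self.choose_tool(request)
        tool = self.tools.get(name)
        result = tool(request) if tool else f"no tool for: {request}"
        self.memory.append(f"{request} -> {result}")  # keep context for later
        return result


def keyword_router(request: str) -> str:
    # A real system would let the model pick the tool; this is a placeholder.
    return "search" if "find" in request.lower() else "notes"


system = MiniLLMOS(keyword_router)
system.register("search", lambda q: "searched the web")
system.register("notes", lambda q: "saved a note")
print(system.handle("Find flights to Lisbon"))
print(system.handle("Remember to call Ana"))
```

Every request passes through the same path: the model chooses a tool, the tool does the work, and the exchange is written to storage so later requests can draw on it.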

In the next section, we will explore how LLM Operating Systems differ from traditional operating systems, diving deeper into their unique interaction paradigms and task execution capabilities.

LLM OS vs. Traditional OS

LLM operating systems differ from traditional ones in three key areas: interaction paradigm, task execution, and adaptability.

Interaction paradigm

The most significant difference between an LLM OS and traditional operating systems lies in the interaction paradigm. Traditional operating systems, such as Windows, macOS, and Linux, rely on GUIs and CLIs to facilitate user interaction. Users typically interact with these systems by clicking on icons, navigating menus, or entering specific commands via a terminal.

An LLM OS introduces a natural language interface at the core of the system, allowing users to interact through conversational commands.

This means you could simply instruct the system using everyday language—like telling it to "create a new document and summarize the latest meeting notes"—and the LLM OS would understand and execute these commands without needing to open specific applications or follow a series of manual steps. The AI-driven system interprets the intent behind the user’s words and carries out the appropriate actions.

Task execution

In traditional operating systems, task execution is typically linear and compartmentalized. Users perform tasks by opening specific applications designed for particular functions (e.g., using a word processor for writing, a browser for internet access). Each task requires explicit instructions and manual navigation through the operating system.

LLM OSs handle tasks in a more integrated and fluid way. The LLM interprets complex user requests and can execute multiple related tasks simultaneously or in sequence without requiring the user to manage each step individually.

For example, instead of separately opening a spreadsheet, email client, and calendar app to organize a project, you could simply ask the LLM OS to "prepare the project budget, schedule a team meeting, and send out the agenda," and it would perform all these tasks in the background, coordinating between different tools as needed.

Adaptability

Traditional operating systems offer customization options, but these are typically static and require manual input. For instance, users can change the layout of their desktop, customize themes, or install specific software, but the OS itself does not inherently adapt to the user's behavior over time.

In an LLM OS, adaptability is a core feature. The system learns from each interaction, gradually understanding the user’s preferences, routines, and workflow patterns. This ongoing learning process enables the LLM OS to offer increasingly personalized suggestions and automate repetitive tasks without needing explicit commands from the user.

Let’s summarize what we’ve covered in this section:

| Aspect | Traditional OS | LLM OS |
| --- | --- | --- |
| Interaction paradigm | Users interact through GUIs and CLIs, using icons, menus, and command lines. | Users interact through natural language, giving commands through conversation. |
| Task execution | Tasks are performed linearly and require explicit instructions in specific applications. | Tasks are managed together, with the LLM handling multiple related tasks at the same time or one after the other, without needing detailed instructions. |
| Adaptability | Customization is possible but static, requiring manual input and configuration. | The system learns and adapts over time, offering personalized suggestions and automating repetitive tasks based on user behavior. |

In the next section, we will explore the key milestones in the development of LLM Operating Systems, highlighting some of the pioneering projects and their contributions to this field.

From AIOS to MemGPT: Key Milestones in LLM OS Development

The development of LLM OS has been characterized by several key milestones that have significantly advanced these systems. Here’s a look at some key projects and innovations that have contributed to the evolution of the LLM OS.

AIOS: Pioneering the concept

AIOS (AI Operating System) is one of the earliest attempts to integrate large language models into the core of an operating system. Developed as a research project, AIOS was designed to demonstrate how LLMs could work as the brain of an OS, handling tasks that typically require human intervention or pre-programmed instructions.

AIOS focuses on embedding LLMs directly into the OS’s kernel, allowing them to manage resources, execute commands, and interact with applications more intelligently.

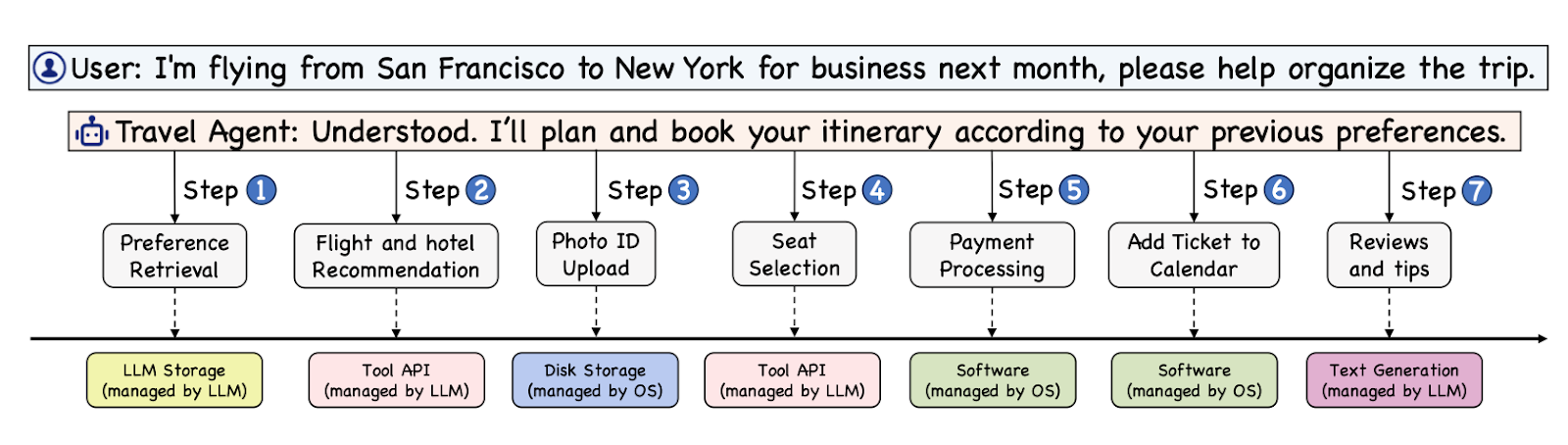

Illustrative example taken from the original research paper.

One of the standout features of AIOS is its LLM-specific kernel, which is optimized to handle the unique demands of LLM-based tasks, such as prioritizing agent requests and managing memory efficiently. The system is designed to address the complexities of running multiple LLM agents simultaneously, ensuring smooth operation without the typical bottlenecks associated with high-demand computational tasks.
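
To illustrate what prioritizing agent requests can look like, here is a small priority-queue scheduler. It is a generic sketch, not the AIOS kernel's actual scheduling code; `AgentScheduler`, `fake_llm`, and the agent names are hypothetical.

```python
import heapq
import itertools


class AgentScheduler:
    """Serve agent requests by priority, first come first served on ties."""

    def __init__(self):
        self._heap = []
        self._order = itertools.count()

    def submit(self, agent, prompt, priority=1):
        # Lower number = served earlier; the counter keeps FIFO order on ties.
        heapq.heappush(self._heap, (priority, next(self._order), agent, prompt))

    def run(self, llm):
        results = []
        while self._heap:
            _, _, agent, prompt = heapq.heappop(self._heap)
            results.append((agent, llm(prompt)))
        return results


def fake_llm(prompt):
    # Placeholder for a model call.
    return prompt.upper()


scheduler = AgentScheduler()
scheduler.submit("travel_agent", "find flights", priority=2)
scheduler.submit("mail_agent", "draft reply", priority=1)
scheduler.submit("math_agent", "sum invoices", priority=1)
print(scheduler.run(fake_llm))
```

Because a single model serves every agent, a queue like this decides who gets the model next, much as a CPU scheduler decides which process runs.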


BabyAGI: The autonomous agent

BabyAGI is another important project in the development of LLM OS, introducing the concept of autonomous agents powered by LLMs. This system extends the capabilities of LLMs by enabling them to perform tasks autonomously, such as navigating the web, gathering information, and executing multi-step processes without continuous user input.


BabyAGI’s significance lies in its ability to automate workflows and decision-making processes. For example, a user might instruct BabyAGI to research the best travel options and book a flight, and the system would autonomously handle each step—from searching for flights to completing the booking process.
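
The core idea of an autonomous task loop can be sketched as follows: take a task from a queue, execute it, and let the result generate follow-up tasks. This is a simplified illustration in the spirit of such agents, not BabyAGI's actual code; `run_agent` and both stub functions are hypothetical, and a real agent would call an LLM where the stubs are.

```python
from collections import deque


def run_agent(objective, execute, create_tasks, max_steps=10):
    """Work through a task queue, letting each result spawn new tasks."""
    tasks = deque([f"Plan how to: {objective}"])
    done = []
    while tasks and len(done) < max_steps:  # max_steps guards against endless loops
        task = tasks.popleft()
        result = execute(task)
        done.append((task, result))
        for new_task in create_tasks(task, result):
            already_done = any(new_task == t for t, _ in done)
            if new_task not in tasks and not already_done:
                tasks.append(new_task)
    return done


def stub_execute(task):
    return f"done: {task}"


def stub_create_tasks(task, result):
    # Only the planning step produces follow-up work in this toy example.
    if task.startswith("Plan"):
        return ["Search flights", "Compare prices"]
    return []


steps = run_agent("book a flight", stub_execute, stub_create_tasks)
for task, result in steps:
    print(task, "->", result)
```

The `max_steps` limit matters in practice: without it, an agent that keeps inventing new tasks would never stop.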


MemGPT: Bridging the gap between memory and intelligence

MemGPT represents a more recent advancement in LLM OS development, focusing on integrating long-term memory and improved reasoning capabilities into LLM-driven systems. One of the challenges with traditional LLMs is their limited context windows, which restrict how much information they can process at once. MemGPT addresses this by introducing a multi-level memory architecture that mimics traditional OS memory management techniques, such as virtual memory.


MemGPT’s architecture includes a "main context" (analogous to RAM) and "external context" (similar to disk storage), allowing the system to manage larger datasets and maintain context across longer interactions. This is particularly useful in applications requiring continuous, context-aware conversations or in-depth document analysis.
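
The two-tier idea can be sketched with a small class that keeps a bounded main context and pages older messages out to external storage. This is an illustration of the concept only, not MemGPT's API; `TieredMemory`, `main_limit`, and the keyword-based `recall` are hypothetical simplifications.

```python
class TieredMemory:
    """Bounded main context with overflow into searchable external storage."""

    def __init__(self, main_limit=3):
        self.main_limit = main_limit
        self.main = []      # "main context": what the model sees directly
        self.external = []  # "external context": everything paged out

    def add(self, message):
        self.main.append(message)
        while len(self.main) > self.main_limit:
            # Evict the oldest message, like paging memory out to disk.
            self.external.append(self.main.pop(0))

    def recall(self, keyword):
        """Search external storage for messages mentioning `keyword`."""
        return [m for m in self.external if keyword.lower() in m.lower()]


memory = TieredMemory(main_limit=2)
for msg in ["My name is Ana", "I like hiking", "Book a table for two"]:
    memory.add(msg)
print(memory.main)            # ['I like hiking', 'Book a table for two']
print(memory.recall("name"))  # ['My name is Ana']
```

Nothing is lost when the main context fills up; older information simply has to be looked up before the model can use it again.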


In the next section, we will look at the challenges and limitations that stand in the way of LLM OS adoption.

Challenges and Limitations of LLM OS Adoption

LLM operating systems bring exciting possibilities for more natural and powerful ways to interact with technology. However, there are important challenges that need to be solved before these systems can be widely used. These challenges, including technical, ethical, and practical issues, influence how effective and viable LLM OS can be in different scenarios.

Reliability and safety

One of the biggest concerns with LLM Operating Systems is making sure their outputs are reliable and safe. LLMs can sometimes generate output that sounds plausible but is factually wrong or nonsensical, a failure mode often called hallucination.

In an operating system, these errors can cause serious problems, especially if the LLM misinterprets commands or provides incorrect information during important tasks like financial transactions or processing medical data. Ensuring that LLM OS can consistently tell the difference between accurate and inaccurate information and make safe choices is a major challenge.
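
One common mitigation is to require explicit user confirmation before high-risk actions run. The sketch below shows the idea under simple assumptions; `RISKY_ACTIONS`, `guarded_execute`, and the action names are hypothetical, and a real system would need a far more careful policy.

```python
RISKY_ACTIONS = {"transfer_money", "delete_files", "share_medical_record"}


def guarded_execute(action, params, run, confirm):
    """Run `action`, but ask the user first if it is on the risky list."""
    if action in RISKY_ACTIONS and not confirm(action, params):
        return "cancelled"
    return run(action, params)


def run(action, params):
    # Placeholder for the code that would actually perform the action.
    return f"ran {action}"


def always_decline(action, params):
    # Simulates a user who answers "no" to every confirmation prompt.
    return False


print(guarded_execute("set_wallpaper", {}, run, always_decline))                  # ran set_wallpaper
print(guarded_execute("transfer_money", {"amount": 500}, run, always_decline))    # cancelled
```

A gate like this does not make the model more accurate, but it limits the damage a misinterpreted command can cause.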

Performance and efficiency

The performance of LLMs is a critical concern, especially when it comes to responding in real time. These models require significant computational power, particularly when handling complex commands or large amounts of data.

This demand can lead to delays, where the system takes longer than expected to respond, negatively affecting the user experience. Additionally, the high energy consumption needed by LLMs raises environmental concerns. Ensuring that LLM OS can scale to support multiple users and tasks simultaneously without major drops in performance is a key focus of ongoing research and development.

Privacy and security

Privacy and security are crucial in any operating system, and LLM OS faces specific challenges in these areas. These systems often need access to large amounts of personal and sensitive data to operate effectively.

It's essential to protect this data from unauthorized access and prevent the LLM from accidentally leaking sensitive information. Additionally, because LLMs can be vulnerable to attacks where malicious inputs manipulate the model's output, developing strong defenses against these threats is very important.

Ethical and legal considerations

The deployment of LLM OS also raises ethical and legal issues, particularly concerning bias and intellectual property.

LLMs can unintentionally propagate biases present in their training data, leading to outputs that are discriminatory or unfair. Moreover, because LLMs are trained on vast datasets that may include copyrighted content, their use in an OS environment could lead to legal challenges related to intellectual property rights. Addressing these ethical and legal challenges is important for the responsible adoption of LLM OS.

The Future of LLM Operating Systems

The future of LLM OSs is set to redefine how we interact with technology. Here’s what we can anticipate as these systems evolve.

Expansion of LLM capabilities

As LLMs become more advanced, they will likely be integrated into operating systems in ways that allow them to handle increasingly complex tasks. These systems could manage everything from basic user interactions to more sophisticated operations like automated content creation, data analysis, and even strategic decision-making.

This expansion of capabilities will enable LLM OS to serve as powerful assistants that not only execute commands but also offer insights and recommendations drawn from large datasets.

Specialized and smaller models

In addition to the advancements in LLM OS, we are also seeing a trend towards the development of small language models (SLMs). These models are more efficient and can be deployed on edge devices with limited processing power, such as smartphones or IoT devices.

SLMs offer the advantage of being more specialized, allowing for tailored solutions that are better suited to specific tasks or industries. This could lead to a more decentralized approach to AI, where different models manage various aspects of an operating system, all working together to provide a unified and smooth user experience.
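
A simple way to picture several models working together is a router that sends each request to the cheapest model likely to handle it. The heuristic below is deliberately crude and purely illustrative; the model names, keywords, and word limit are all hypothetical.

```python
REASONING_KEYWORDS = ("analyze", "plan", "compare")


def pick_model(request, small_limit=12):
    """Route short, simple requests to a small model and the rest to a large one."""
    words = len(request.split())
    needs_reasoning = any(k in request.lower() for k in REASONING_KEYWORDS)
    if needs_reasoning or words > small_limit:
        return "large-cloud-model"
    return "small-on-device-model"


print(pick_model("Set a calming wallpaper"))                # small-on-device-model
print(pick_model("Analyze last quarter's sales by region"))  # large-cloud-model
```

In practice the routing decision could itself be made by a small model, but the principle is the same: reserve expensive models for requests that need them.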

Impact on business and productivity

The integration of LLM OS into business environments is expected to drive significant productivity gains. These systems can automate routine tasks, improve operations, and provide real-time data-driven insights, allowing businesses to operate more efficiently and make better-informed decisions.

As LLMs continue to improve in areas like context understanding and natural language processing, their role in business strategy and customer interactions will likely become even more prominent, transforming traditional business models and workflows.

Conclusion

The development of LLM OSs represents a significant evolution in how we interact with technology, marking a shift towards more intuitive, efficient, and adaptive systems.

These operating systems use the capabilities of LLMs to offer users a more natural and responsive computing experience, transforming both personal and professional workflows.

FAQs

Can LLM operating systems run on mobile devices or low-power hardware?

Yes, LLM operating systems can run on mobile devices and low-power hardware, but there are challenges. Recent advancements have allowed smaller LLMs to be deployed on mobile devices through projects like MLC LLM, which supports iOS and Android. However, performance can be limited, especially on older devices, where models may generate output slowly because of the limited processing power available. Efforts are ongoing to optimize these systems, such as using dedicated processing units like NPUs in modern smartphones to improve performance.

How do LLM operating systems handle data privacy in comparison to traditional OS?

LLM OS can implement privacy techniques such as differential privacy, which adds calibrated statistical noise so that results reveal very little about any single individual's data. These systems could also adapt privacy settings based on user behavior, offering a more dynamic and proactive approach to safeguarding personal information than traditional operating systems. However, they also face challenges, such as ensuring that the AI does not inadvertently expose sensitive information through its outputs, so continued advances in privacy technology are crucial for the safe deployment of LLM OS.
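
As a small illustration of differential privacy, the sketch below answers a counting query with the Laplace mechanism: it adds noise scaled to `1 / epsilon`, so a smaller `epsilon` means more noise and stronger privacy. The function names and sample data are hypothetical, and this is a teaching example rather than a production-grade implementation.

```python
import random


def laplace_noise(scale, rng):
    # The difference of two exponential samples with mean `scale`
    # follows a Laplace distribution centered at 0 with that scale.
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(values, predicate, epsilon, rng):
    """Return a noisy count of the values matching `predicate`."""
    true_count = sum(1 for v in values if predicate(v))
    # A count changes by at most 1 when one record changes (sensitivity 1),
    # so the noise scale is sensitivity / epsilon = 1 / epsilon.
    return true_count + laplace_noise(1 / epsilon, rng)


rng = random.Random(0)  # seeded only to make the example repeatable
ages = [23, 35, 41, 29, 52, 38]
print(private_count(ages, lambda a: a > 30, epsilon=1.0, rng=rng))
```

The printed value is close to the true count of 4 but not exact, which is the point: the answer stays useful in aggregate while hiding whether any one person is in the data.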

How does an LLM OS manage software updates and compatibility with existing applications?

LLM operating systems manage updates and compatibility through a combination of backward compatibility mechanisms and modular design. These systems are being developed to seamlessly integrate with existing software environments while allowing for regular updates that enhance their capabilities. Compatibility layers or modules ensure that older applications can still function within an LLM OS, even as the core AI-driven components evolve.

How do LLM operating systems leverage cloud services, and what are the benefits?

LLM operating systems can use cloud services to improve their capabilities by offloading resource-intensive tasks such as complex computations and large-scale data processing to cloud-based infrastructure. This allows the OS to access more computational power and storage than what might be available locally on a user's device, enabling smoother performance and handling of larger datasets.

Can you use different types of LLMs in LLM operating systems?

Yes, you can use different types of LLMs in LLM operating systems, each tailored for specific tasks and functionalities. The flexibility to integrate different LLMs allows LLM Operating Systems to handle a broader range of tasks, from content creation to complex data interpretation, by using the strengths of each model type. This modularity enables the OS to provide a more tailored and efficient user experience.

Ana Rojo Echeburúa is an AI and data specialist with a PhD in Applied Mathematics. She loves turning data into actionable insights and has extensive experience leading technical teams. Ana enjoys working closely with clients to solve their business problems and create innovative AI solutions. Known for her problem-solving skills and clear communication, she is passionate about AI, especially generative AI. Ana is dedicated to continuous learning and ethical AI development, as well as simplifying complex problems and explaining technology in accessible ways.