Image by author

We have moved past making large language models (LLMs) better and are now focused on using them to create AI applications that help businesses. This is where large language model operations (LLMOps) tools come in, simplifying the process of creating fully automated systems for building and deploying LLM solutions into production.

In this article, we will look at different categories of tools: LLM APIs, fine-tuning frameworks, experiment tracking tools, LLM integration ecosystems, vector search tools, model serving frameworks, deployment platforms, and observability tools. Each tool is excellent at what it does and is designed to solve specific problems related to LLMs.

What is LLMOps?

LLMOps is an emerging field that focuses on the operational management of large language models in production environments. It is essentially MLOps (machine learning operations) specialized for language and other multimodal models.

Take the Master Large Language Models (LLMs) Concepts course to learn about LLM applications, training methodologies, ethical considerations, and the latest research.

LLMOps encompasses the entire lifecycle of large language models, including data collection, model training or fine-tuning, testing and validation, integration, deployment, optimization, monitoring and maintenance, and collaboration. By structuring projects and automating processes, LLMOps helps you reduce errors and efficiently scale AI applications, ensuring models are robust and capable of meeting real-world demands.

In short, LLMOps tools help you build an automated system that collects data, fine-tunes the model on it, tracks the model's performance, integrates it with external data, creates the AI app, deploys it to the cloud, and observes the model's metrics and performance in production.

The Developing Large Language Models skill track consists of 4 courses that will help you build a strong foundation on how LLMs work and how you can fine-tune pre-trained LLMs.

Now, let’s explore the top LLMOps tools available today.

API

Access language and embedding models using API calls. You don't have to deploy the model or maintain the server; you only have to provide an API key and start using the state-of-the-art models.

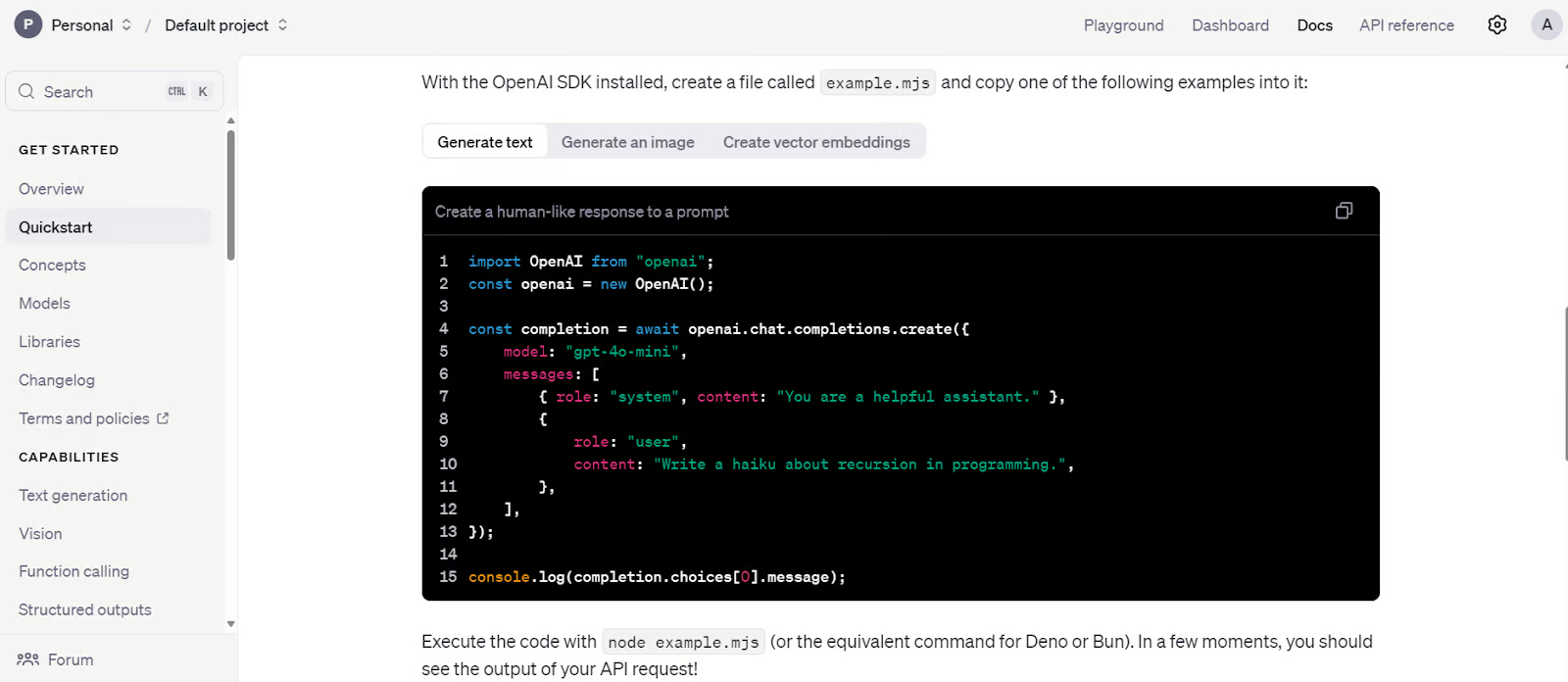

1. OpenAI API

OpenAI offers API access to advanced LLMs like GPT-4o and o1. These models can be used via a Python package or a cURL command.

The API is an ideal solution for startups without a technical team to fine-tune or deploy models in production. It provides access to language models, multimodal models, function calling, structured outputs, and fine-tuning options. Additionally, you can use embedding models to create your own vector database. In summary, it offers a comprehensive, low-cost AI ecosystem.
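To make the "API key and a request" idea concrete, here is a minimal sketch that builds a Chat Completions request using only the Python standard library. The helper names `build_chat_request` and `ask` are hypothetical, and the sketch assumes the key is stored in an `OPENAI_API_KEY` environment variable. The official `openai` package wraps the same HTTP call with a friendlier interface.

```python
import json
import os
import urllib.request

OPENAI_CHAT_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(prompt: str, api_key: str, model: str = "gpt-4o") -> urllib.request.Request:
    """Build (but do not send) a Chat Completions request. Hypothetical helper."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        OPENAI_CHAT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def ask(prompt: str) -> str:
    """Send the request and return the assistant's reply text."""
    # Assumption: the API key lives in the OPENAI_API_KEY environment variable.
    request = build_chat_request(prompt, os.environ["OPENAI_API_KEY"])
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

Calling `ask("Explain LLMOps in one sentence.")` sends a real, billable request, so it is left out of the sketch itself.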

Learn how to use OpenAI Python API to access state-of-the-art LLMs by following the GPT-4o API Tutorial: Getting Started with OpenAI's API.

OpenAI API quickstart code. Image source: Quickstart tutorial - OpenAI API

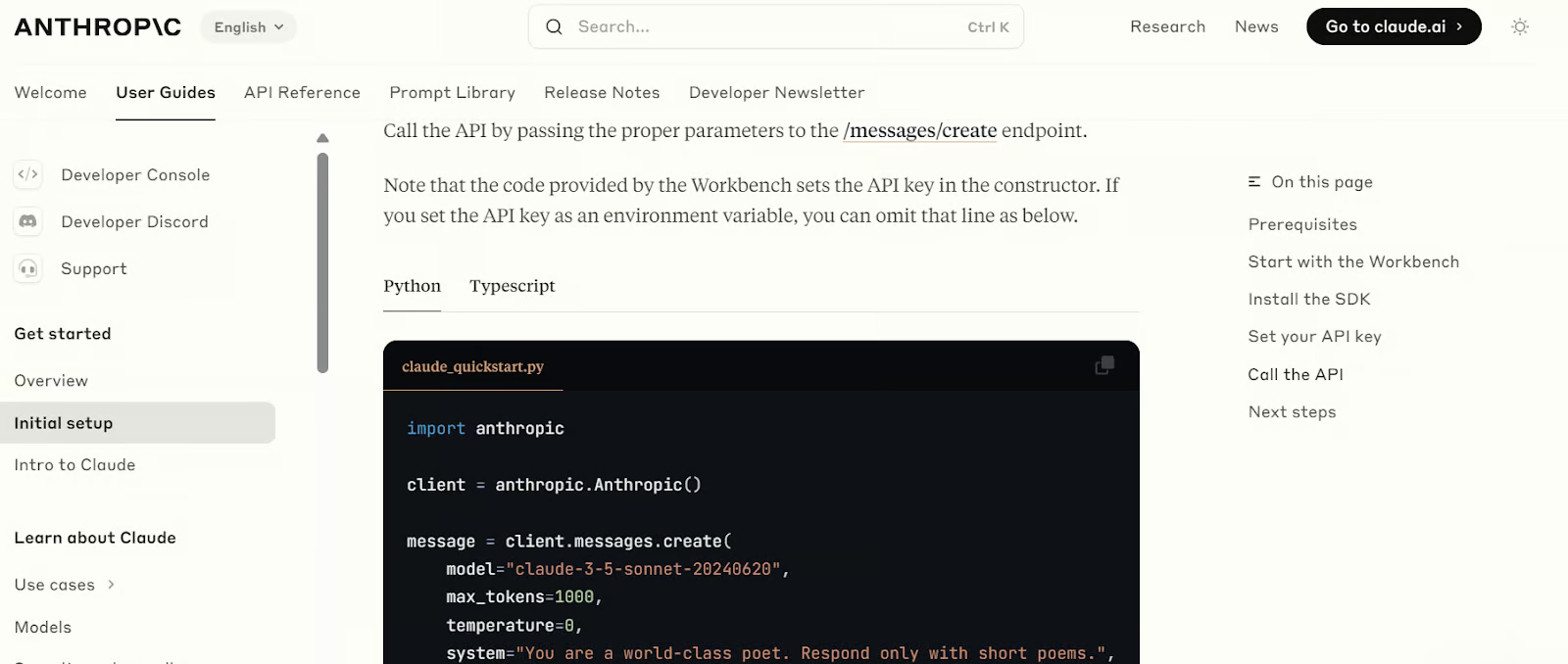

2. Anthropic API

The Anthropic API is similar to the OpenAI API, offering access to the Claude family of language models. These models can be used to create RAG applications, integrate tools, retrieve web pages, work with images, and develop AI agents. Over time, Anthropic aims to provide all the tools required to build and deploy fully functional AI applications.

Like the OpenAI API, it includes safeguards for security and evaluation tools to monitor model performance.
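As a rough illustration of how a request to the Messages endpoint is shaped, the sketch below builds one with the standard library instead of the official `anthropic` package. The helper name `build_message_request` is hypothetical, and the model identifier is left as a parameter because available model names change over time.

```python
import json
import urllib.request

ANTHROPIC_MESSAGES_URL = "https://api.anthropic.com/v1/messages"


def build_message_request(
    prompt: str, api_key: str, model: str, max_tokens: int = 256
) -> urllib.request.Request:
    """Build (but do not send) a Messages API request. Hypothetical helper."""
    payload = {
        "model": model,            # pass a model ID your account can access
        "max_tokens": max_tokens,  # required by the Messages API
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        ANTHROPIC_MESSAGES_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "x-api-key": api_key,
            "anthropic-version": "2023-06-01",
            "content-type": "application/json",
        },
        method="POST",
    )
```

Sending the request with `urllib.request.urlopen` returns JSON in which the reply text sits under `content[0]["text"]`. Note that the key travels in an `x-api-key` header rather than a bearer token.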

Learn how to use the Claude API to access top-performing LLMs by following the Claude Sonnet 3.5 API Tutorial: Getting Started With Anthropic's API.

Anthropic API quickstart code. Image source: Initial setup - Anthropic

Fine-Tuning

Using Python, fine-tune the base large language models on a custom dataset to adapt the model's style, task, and functionality to meet specific requirements.

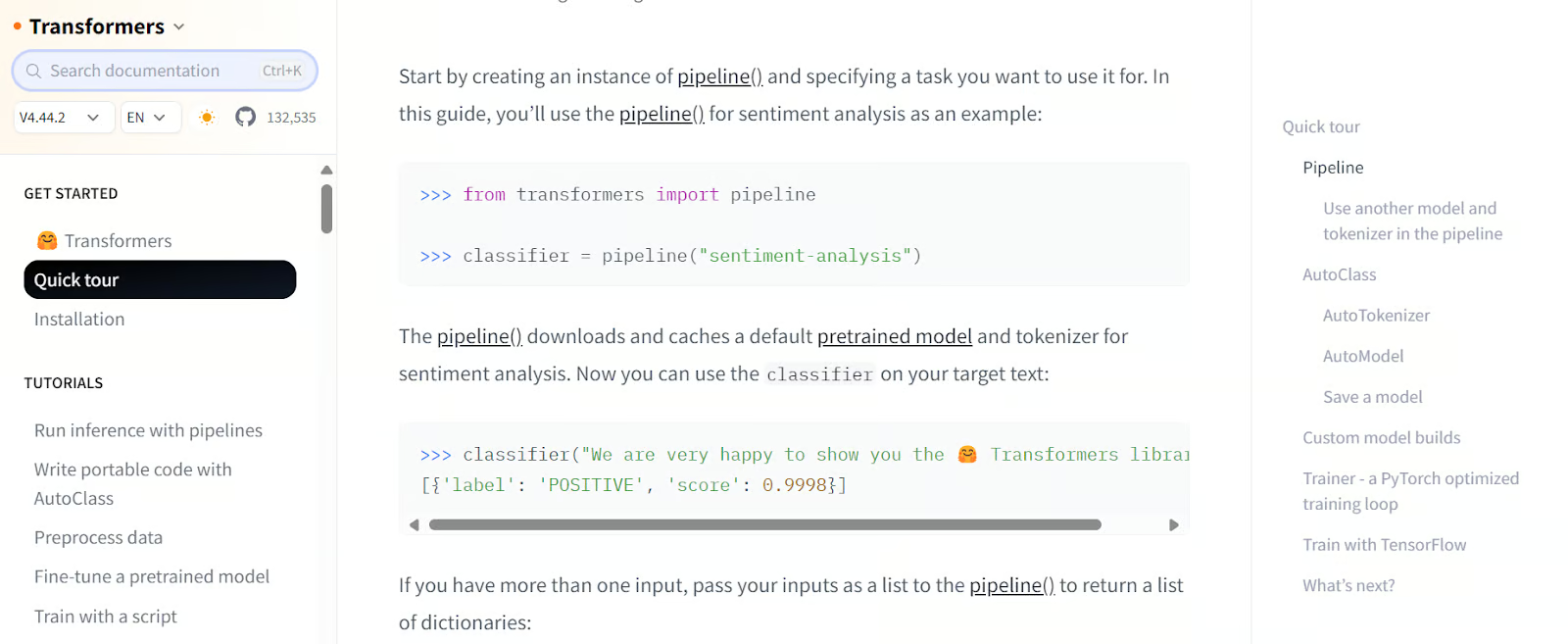

3. Transformers

Transformers by Hugging Face is a well-known framework in the AI community and industry. It is widely used for accessing models, fine-tuning LLMs with limited resources, and saving models. It offers a comprehensive ecosystem for everything from data loading to evaluating LLMs.

With Transformers, you can load datasets and models, process data, build models with custom arguments, train models, and push them to the cloud. Later, you can deploy these models on a server with just a few clicks.

Take the Introduction to LLMs in Python course to learn about the LLM landscape, transformer architecture, pre-trained LLMs, and how to integrate LLMs to solve real-world problems.

Transformers quickstart code. Image source: Quick tour (huggingface.co)

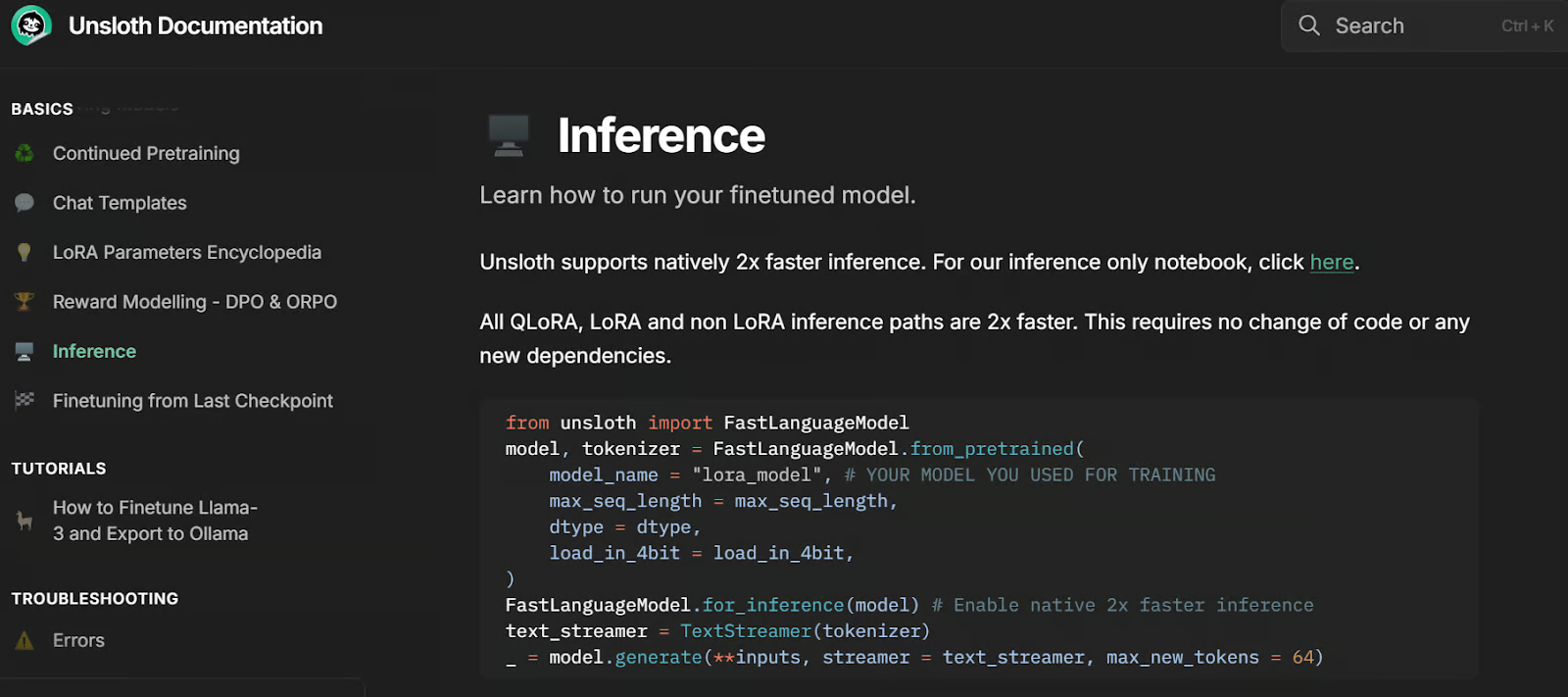

4. Unsloth AI

Unsloth AI is a Python framework for fine-tuning and accessing large language models. It offers a simple API and advertises fine-tuning that is about 2x faster than plain Transformers.

Built on top of the Transformers library, it integrates additional tools to simplify the fine-tuning of large language models with limited resources. A standout feature of Unsloth is the ability to save models in vLLM- and GGUF-compatible formats with just one line of code, eliminating the need to install and set up libraries like llama.cpp, as it handles everything automatically.

Unsloth inference code. Image source: Inference | Unsloth Documentation

Experiment Tracking

Track and evaluate model performance during training and compare the results.

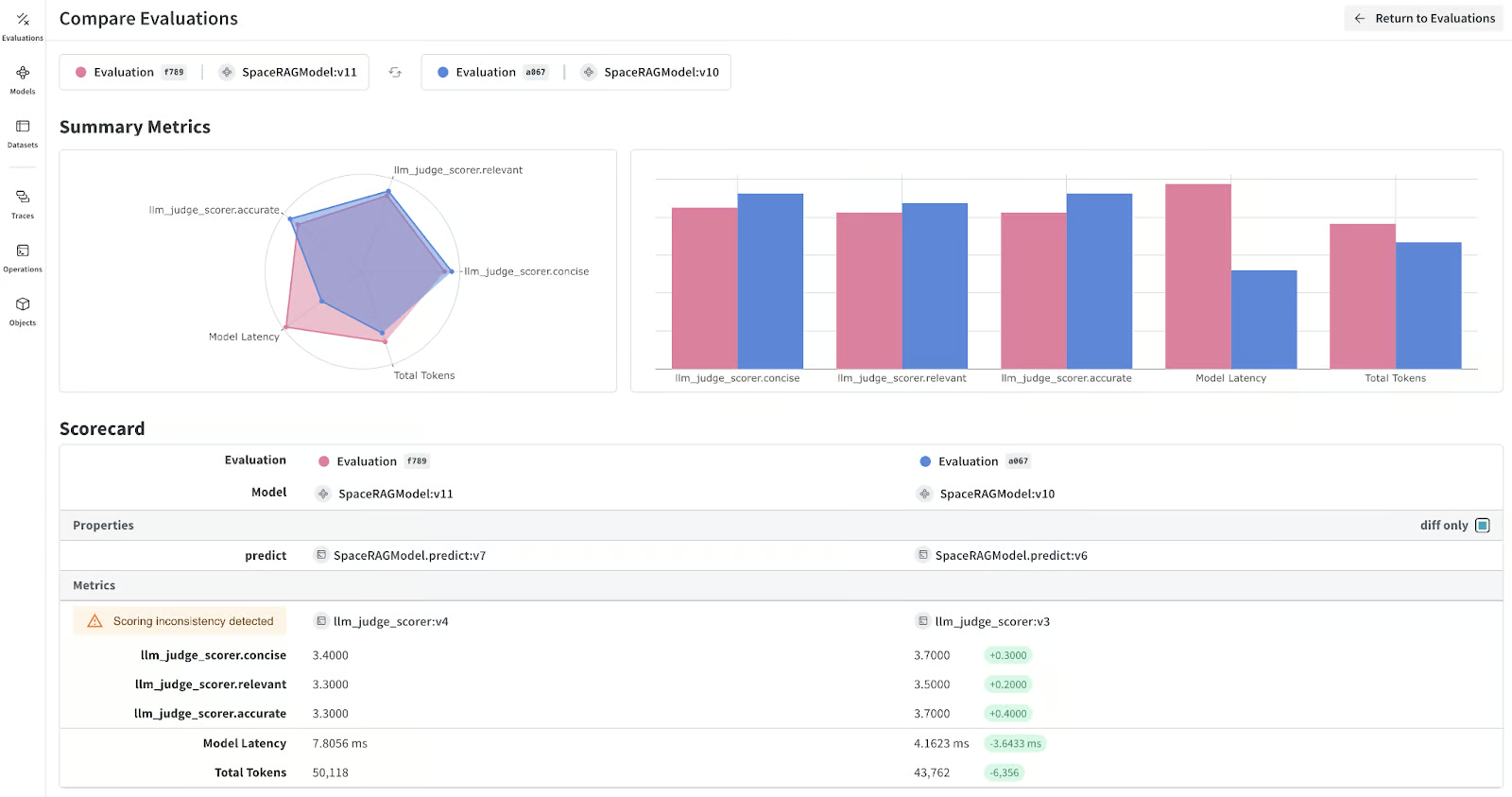

5. Weights & Biases

Weights & Biases allows you to track model performance during and after fine-tuning to evaluate effectiveness. It also supports tracking RAG applications and monitoring and debugging LLMs.

The platform integrates seamlessly with various frameworks and tools, including Transformers, LangChain, PyTorch, and Keras. A key advantage of using Weights & Biases is its highly customizable dashboard, which enables you to create model evaluation reports and compare different model versions.
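The core idea behind experiment tracking can be sketched in a few lines: each run stores its configuration plus a history of logged metrics, and runs are then ranked on a chosen metric. The `Run` class and `rank_runs` function below are hypothetical stand-ins written for illustration, not the Weights & Biases API, and the loss values are made up.

```python
from dataclasses import dataclass, field


@dataclass
class Run:
    """One training run: a name, its config, and the metrics logged per step."""
    name: str
    config: dict
    history: list = field(default_factory=list)

    def log(self, step: int, **metrics: float) -> None:
        """Record the metrics observed at a given training step."""
        self.history.append({"step": step, **metrics})

    def best(self, metric: str) -> float:
        """Lowest value seen for a metric (suitable for losses)."""
        return min(row[metric] for row in self.history if metric in row)


def rank_runs(runs: list, metric: str) -> list:
    """Return (run name, best value) pairs, best (lowest) first."""
    return sorted(((run.name, run.best(metric)) for run in runs), key=lambda item: item[1])


# Illustrative numbers only: two fine-tuning runs with different learning rates.
baseline = Run("lr-2e-4", {"learning_rate": 2e-4})
for step, loss in enumerate([1.90, 1.42, 1.31]):
    baseline.log(step, eval_loss=loss)

candidate = Run("lr-5e-5", {"learning_rate": 5e-5})
for step, loss in enumerate([1.95, 1.60, 1.48]):
    candidate.log(step, eval_loss=loss)

print(rank_runs([baseline, candidate], "eval_loss"))
# → [('lr-2e-4', 1.31), ('lr-5e-5', 1.48)]
```

A hosted tracker adds what this sketch lacks: persistent storage, dashboards, and sharing across a team.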

Learn how to structure, log, and analyze your machine learning experiments using Weights & Biases by following the tutorial Machine Learning Experimentation: An Introduction to Weights & Biases.

LLM model metrics. Image source: wandb.ai

LLM Integration

Integrate LLMs with external databases, private databases, and web search. You can even create and serve the entire AI application using these frameworks. In short, these tools are key for building complex LLM-based applications that you can deploy on the cloud.

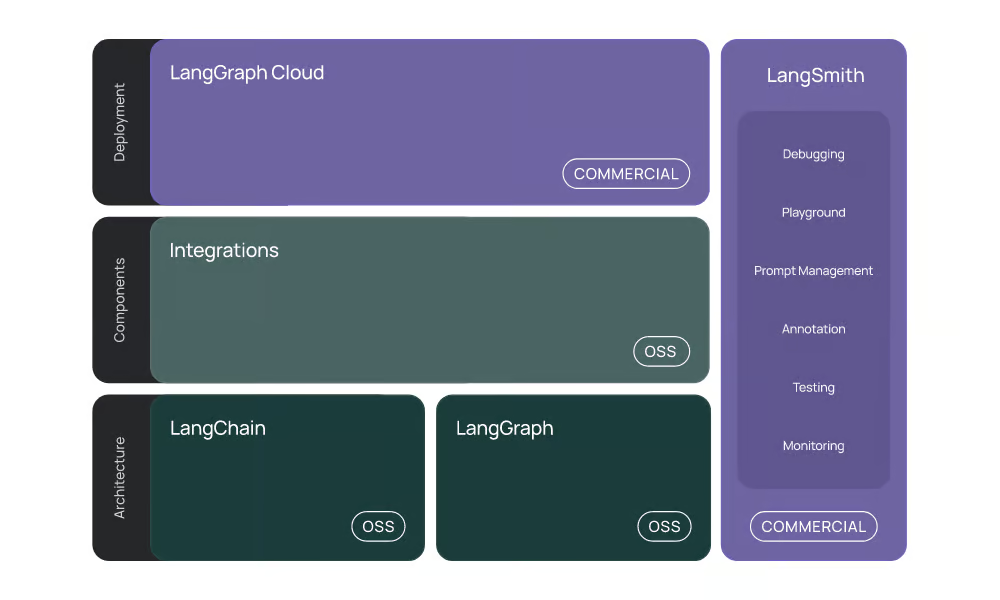

6. LangChain

LangChain is a popular tool for creating AI applications using LLMs. With just a few lines of code, you can develop context-aware RAG chatbots directly in Jupyter notebooks.

LangChain now offers a complete LLM ecosystem:

- Integration: It supports integration with various AI APIs, chat models, embedding models, document loaders, vector stores, and tools.

- LangChain: It orchestrates various integration tools and LLMs to build AI applications.

- LangGraph: It is designed to build stateful multi-actor applications with LLMs by modeling steps as edges and nodes in a graph.

- LangGraph Cloud and LangSmith: These commercial products allow you to use managed services to build and deploy LLM-based applications.

LangChain simplifies the development of LLM-powered applications by providing tools, components, and interfaces that streamline the process.
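The graph idea mentioned for LangGraph, where steps are nodes and transitions are edges over a shared state, can be illustrated with a toy runner. The `TinyGraph` class below is a hypothetical sketch written for this article and is not LangGraph's API. The "draft" and "review" nodes stand in for LLM calls.

```python
from typing import Callable

END = "__end__"  # sentinel node name that stops execution


class TinyGraph:
    """Toy stateful graph: nodes update a shared state dict, edges pick the next node."""

    def __init__(self) -> None:
        self.nodes: dict = {}
        self.edges: dict = {}

    def add_node(self, name: str, fn: Callable[[dict], dict]) -> None:
        self.nodes[name] = fn

    def add_edge(self, source: str, target: str) -> None:
        # A fixed edge always routes to the same target.
        self.edges[source] = lambda state: target

    def add_conditional_edge(self, source: str, router: Callable[[dict], str]) -> None:
        # A conditional edge inspects the state to choose the next node.
        self.edges[source] = router

    def run(self, start: str, state: dict, max_steps: int = 20) -> dict:
        current = start
        for _ in range(max_steps):
            state = {**state, **self.nodes[current](state)}  # merge the node's update
            current = self.edges[current](state)
            if current == END:
                return state
        raise RuntimeError("graph did not reach END within max_steps")


def draft(state: dict) -> dict:
    return {"attempts": state["attempts"] + 1}


def review(state: dict) -> dict:
    return {"approved": state["attempts"] >= 2}


graph = TinyGraph()
graph.add_node("draft", draft)
graph.add_node("review", review)
graph.add_edge("draft", "review")
graph.add_conditional_edge("review", lambda s: END if s["approved"] else "draft")

final_state = graph.run("draft", {"attempts": 0, "approved": False})
print(final_state)  # → {'attempts': 2, 'approved': True}
```

The conditional edge is what makes loops such as "revise until approved" possible, which a purely linear chain cannot express.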

Complete the Developing LLM Applications with LangChain course to understand how to build AI-powered applications using LLMs, prompts, chains, and agents in LangChain.

The LangChain ecosystem. Image source: Introduction | 🦜️🔗 LangChain

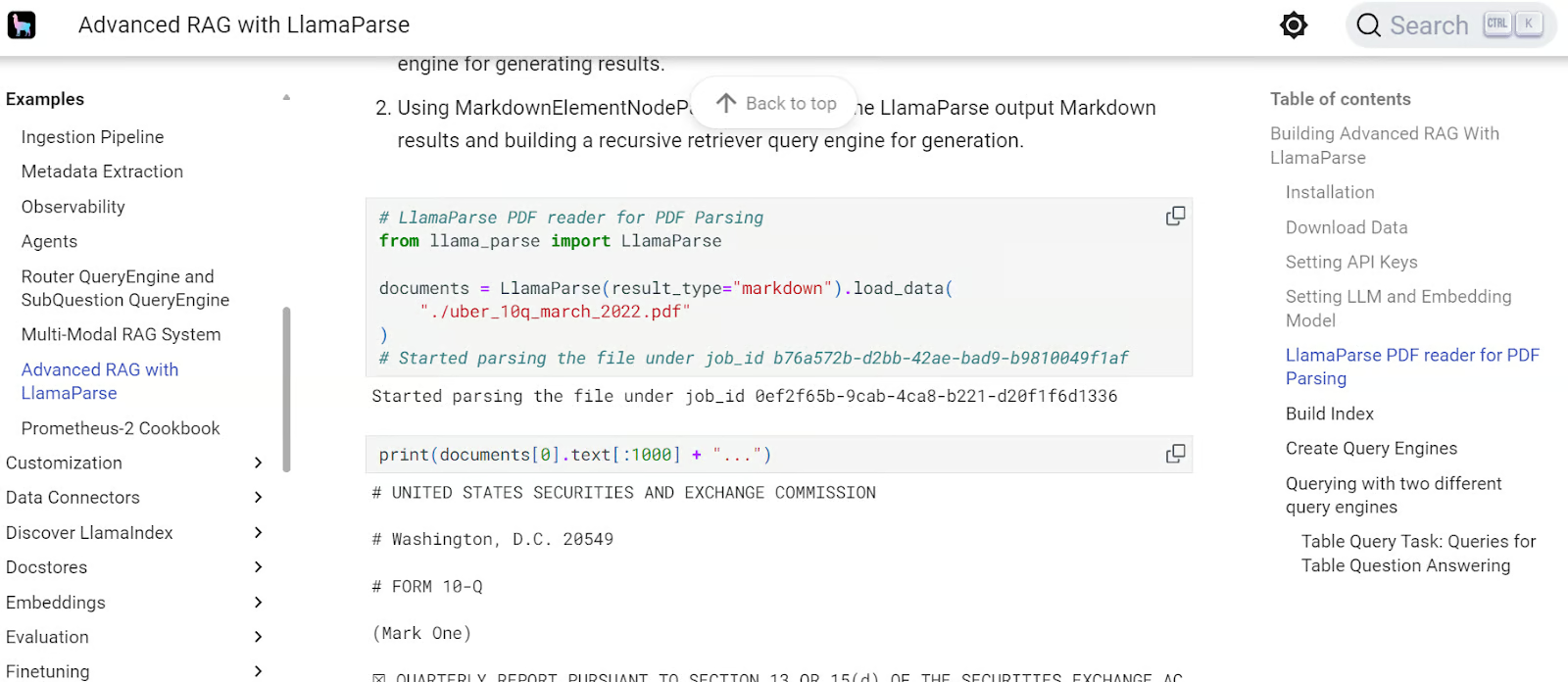

7. LlamaIndex

LlamaIndex is similar to LangChain but offers a simpler approach to building RAG applications. With just a few lines of code, you can create RAG applications with chat history.

LlamaIndex provides comprehensive API and vector store integrations, enabling the development of complex, state-of-the-art LLM applications. It also features a managed service called LlamaCloud, which allows for easy hosting of AI applications.

Learn how to ingest, manage, and retrieve private and domain-specific data using natural language by following the LlamaIndex: A Data Framework for the Large Language Models (LLMs) based applications tutorial.

LlamaIndex advanced RAG guide. Image source: Advanced RAG with LlamaParse - LlamaIndex

Vector Search

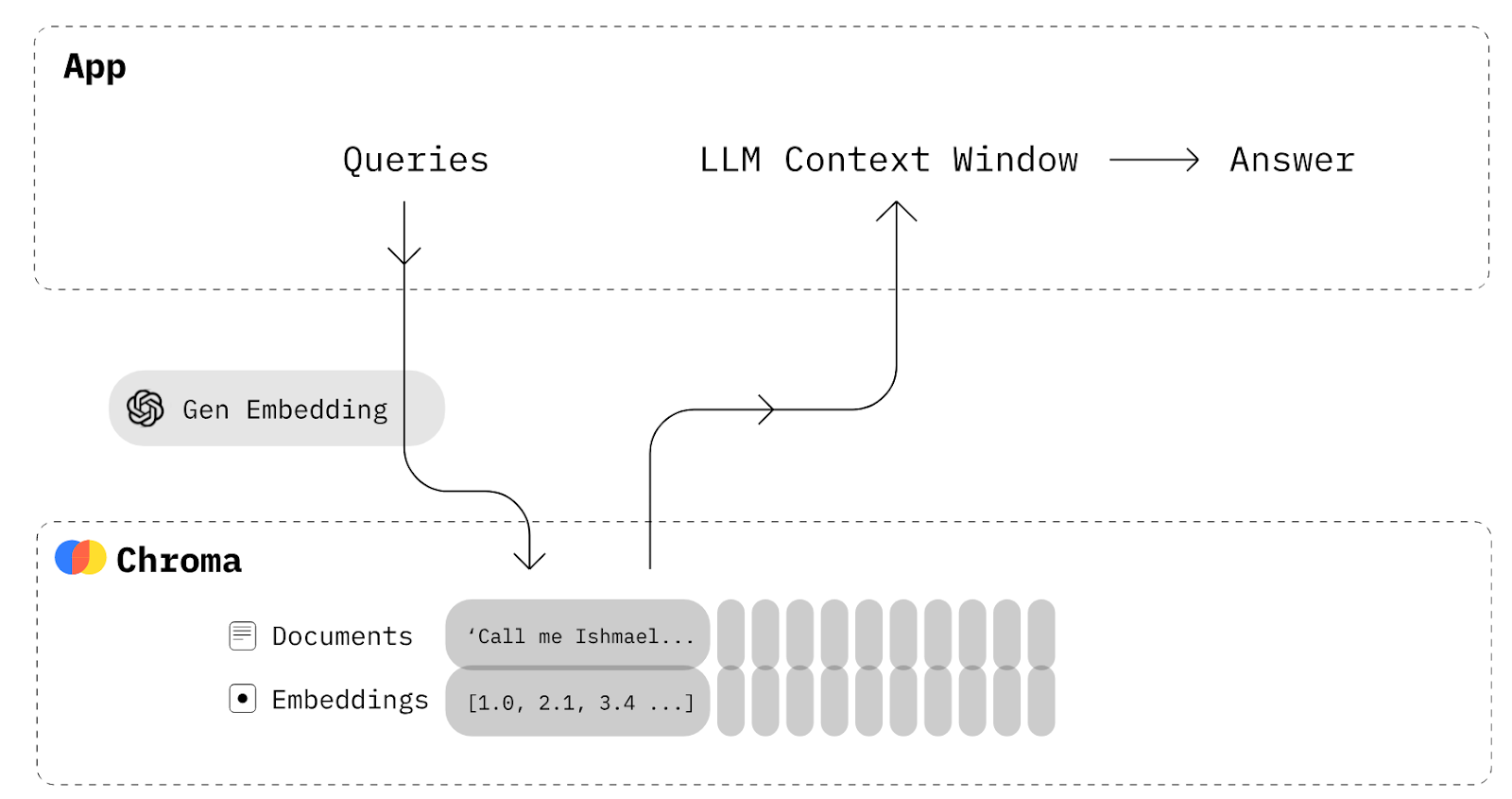

Vector search tools offer robust storage and retrieval capabilities, which are essential for building Retrieval-Augmented Generation (RAG) applications. These tools allow converting various data types, such as text, images, and audio, into embeddings, which are then stored in vector databases.

8. Chroma

Chroma is an AI-native open-source vector database. It makes it easy to build LLM apps by allowing knowledge, facts, and skills to be easily integrated.

If you want to create a basic RAG application that allows you to store your documents as embeddings and then retrieve them to combine with prompts in order to give more context to the language model, you don't require LangChain. All you need is a Chroma DB to save and retrieve the documents.
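The store-retrieve-combine flow described above can be sketched without any database at all. The example below is a toy in-memory stand-in, not Chroma's API: `TinyVectorStore` and `build_prompt` are hypothetical names, and the three-dimensional "embeddings" are hand-written numbers where a real application would use an embedding model.

```python
import math


def cosine_similarity(a: list, b: list) -> float:
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class TinyVectorStore:
    """Keeps (text, embedding) pairs in memory and ranks them by similarity."""

    def __init__(self) -> None:
        self.items: list = []

    def add(self, text: str, embedding: list) -> None:
        self.items.append((text, embedding))

    def query(self, embedding: list, k: int = 1) -> list:
        ranked = sorted(
            self.items,
            key=lambda item: cosine_similarity(embedding, item[1]),
            reverse=True,
        )
        return [text for text, _ in ranked[:k]]


def build_prompt(question: str, context: list) -> str:
    """Combine retrieved documents with the user question."""
    joined = "\n".join(f"- {doc}" for doc in context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {question}"


store = TinyVectorStore()
# Made-up embeddings; a real app would compute these with an embedding model.
store.add("Refunds are issued within 14 days.", [0.9, 0.1, 0.0])
store.add("Support is open 9am to 5pm.", [0.1, 0.9, 0.1])
store.add("Shipping is free over $50.", [0.0, 0.2, 0.9])

question = "How long do refunds take?"
question_embedding = [0.8, 0.2, 0.1]  # pretend embedding of the question
retrieved = store.query(question_embedding, k=1)
print(build_prompt(question, retrieved))
```

A vector database replaces the linear scan in `query` with an index that stays fast as the collection grows, and it persists the data between sessions.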

Chroma DB diagram. Image source: Chroma Docs (trychroma.com)

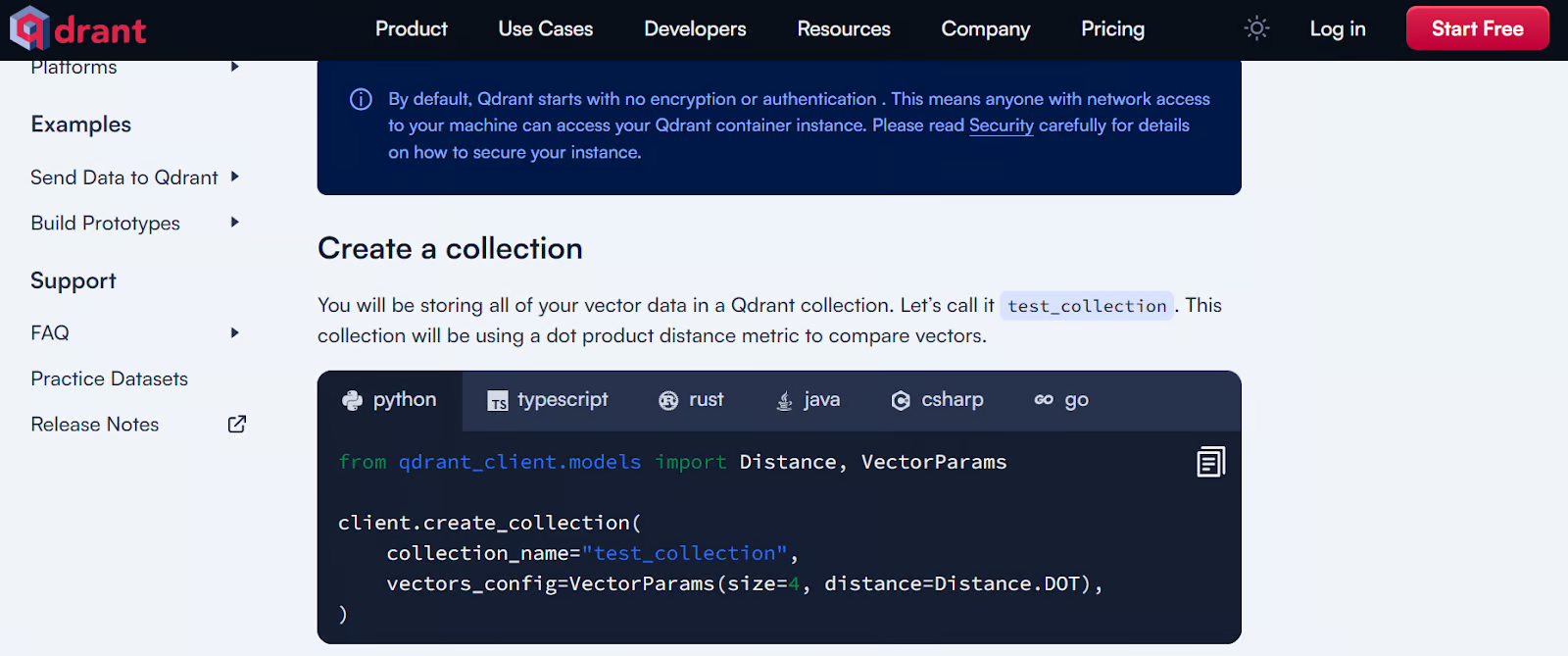

9. Qdrant

Qdrant is a popular open-source vector database and similarity search engine that handles high-dimensional vectors. It offers local, cloud, and hybrid solutions, making it versatile for various deployment needs.

Qdrant is particularly effective in applications such as retrieval-augmented generation, anomaly detection, advanced search, and recommendation systems. Its robust API allows for easy integration and management of text data, making it a powerful tool for developers looking to implement vector-based search capabilities.

Check out The 5 Best Vector Databases for your specific use case. They provide a simple API and fast performance.

Qdrant getting started example. Image source: Local Quickstart - Qdrant

Serving

An essential component of your application is a high-throughput inference and serving engine for LLMs that runs on a wide range of compute resources, including GPUs, TPUs, XPUs, and more. These tools also expose OpenAI-compatible servers, so you can use OpenAI's client libraries to access the served model seamlessly.

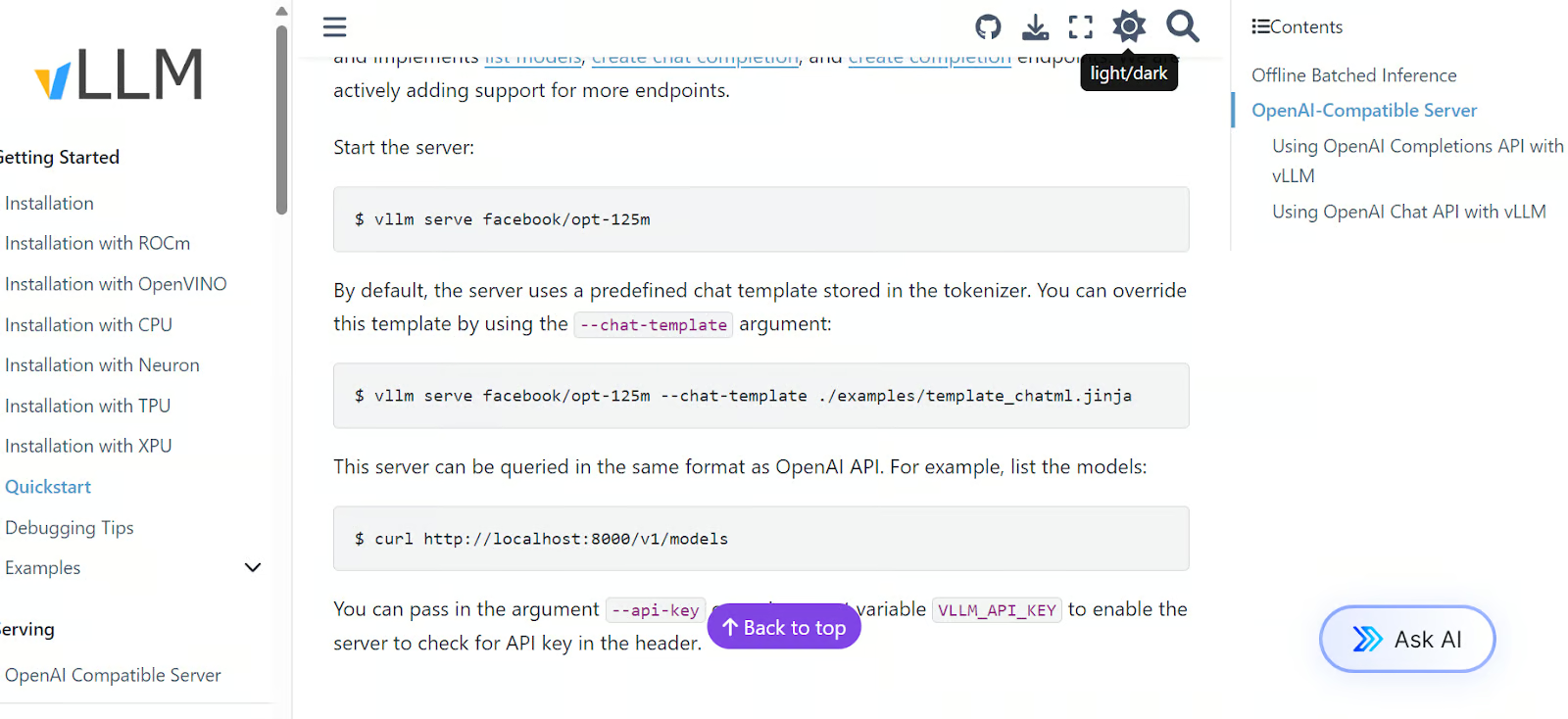

10. vLLM

vLLM is a robust open-source library designed for efficient large language model inference and serving. It tackles the challenges of deploying LLMs, such as high memory consumption and computational costs, using innovative memory management techniques and dynamic batching strategies.

One of vLLM's best features is its PagedAttention algorithm, which significantly enhances throughput and reduces memory waste. According to the vLLM team's own benchmarks, it delivers up to 24x higher throughput than Hugging Face Transformers.
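The memory-saving idea behind paged KV caching can be shown with simple bookkeeping: the cache is split into fixed-size blocks that are handed out on demand, and a per-sequence block table records which physical blocks a sequence owns. The sketch below is a simplified illustration under those assumptions, not vLLM's implementation. It tracks only slot counts and performs no attention computation. The class name, block size, and sequence lengths are all hypothetical.

```python
class PagedKVCache:
    """Toy allocator: each sequence gets KV-cache blocks only as its tokens arrive."""

    def __init__(self, num_blocks: int, block_size: int) -> None:
        self.block_size = block_size
        self.free_blocks = list(range(num_blocks))  # physical block ids
        self.block_tables: dict = {}                # seq_id -> list of physical block ids
        self.lengths: dict = {}                     # seq_id -> number of tokens stored

    def append_token(self, seq_id: str) -> None:
        length = self.lengths.get(seq_id, 0)
        table = self.block_tables.setdefault(seq_id, [])
        if length % self.block_size == 0:  # no block yet, or the last block is full
            if not self.free_blocks:
                raise MemoryError("no free KV blocks")
            table.append(self.free_blocks.pop())
        self.lengths[seq_id] = length + 1

    def free(self, seq_id: str) -> None:
        """Return a finished sequence's blocks to the free pool."""
        self.free_blocks.extend(self.block_tables.pop(seq_id, []))
        self.lengths.pop(seq_id, None)

    def wasted_slots(self) -> int:
        """Allocated but unused slots: at most one partial block per sequence."""
        return sum(
            len(table) * self.block_size - self.lengths[seq_id]
            for seq_id, table in self.block_tables.items()
        )


cache = PagedKVCache(num_blocks=64, block_size=16)
sequence_lengths = {"a": 5, "b": 40, "c": 100}
for seq_id, n_tokens in sequence_lengths.items():
    for _ in range(n_tokens):
        cache.append_token(seq_id)

max_seq_len = 2048  # what a contiguous allocator would reserve per sequence
contiguous_waste = sum(max_seq_len - n for n in sequence_lengths.values())
print(cache.wasted_slots(), contiguous_waste)  # → 31 5999
```

Because waste is bounded by one partially filled block per sequence, more sequences fit in the same memory, which is where the larger batches and higher throughput come from.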

vLLM quick start example. Image source: Quickstart — vLLM

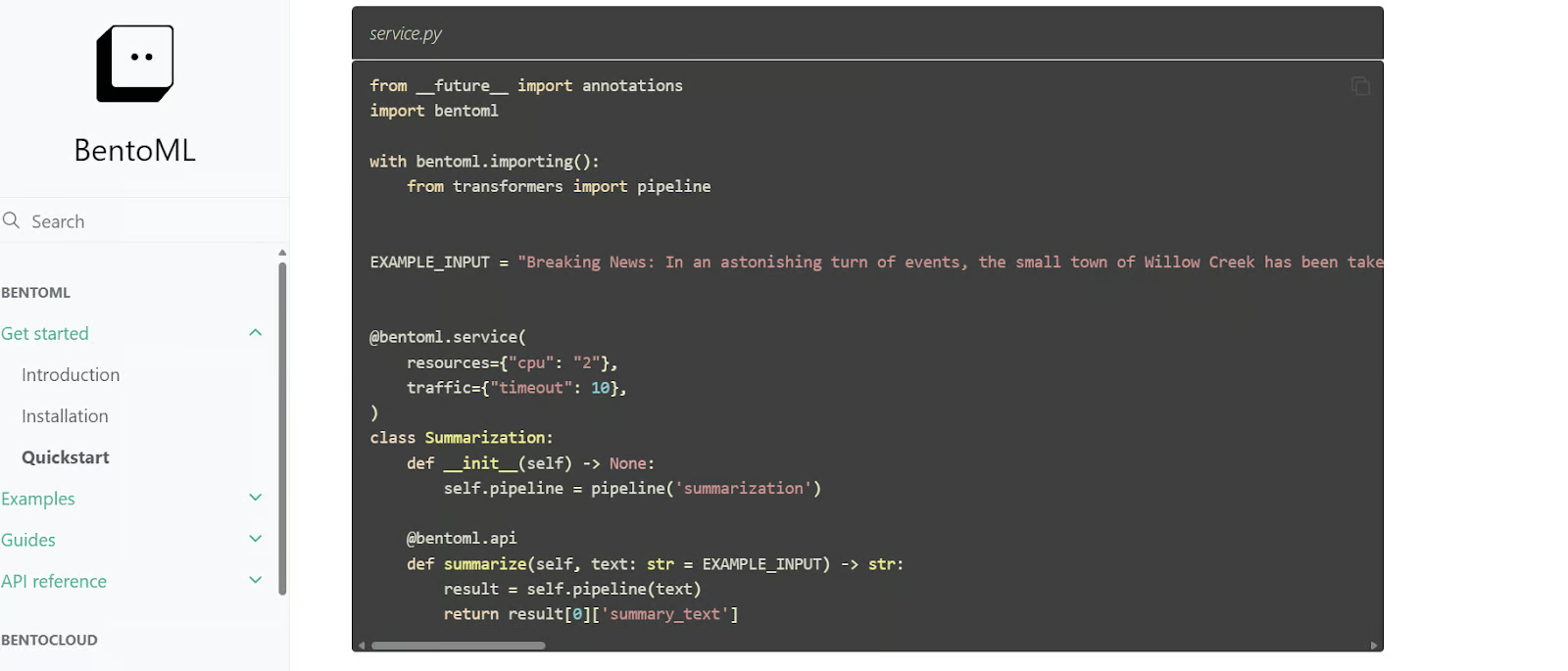

11. BentoML

BentoML is a Python library for building and serving LLMs, offering more customization options for developing AI applications than vLLM. It includes BentoCloud, a managed service that allows for easy deployment and monitoring of models in the cloud, with a free tier available to get started.

BentoML automates many complex steps in model deployment, significantly reducing the time required to transition models from development to production.

BentoML quickstart code. Image source: Quickstart - BentoML

Deployment

You can either deploy your LLM directly to the cloud or create an integrated AI application and then deploy it. To do so, you can opt for any major cloud service provider. However, the tools below are built specifically for LLM and AI deployment, offering an easier and more efficient deployment experience.

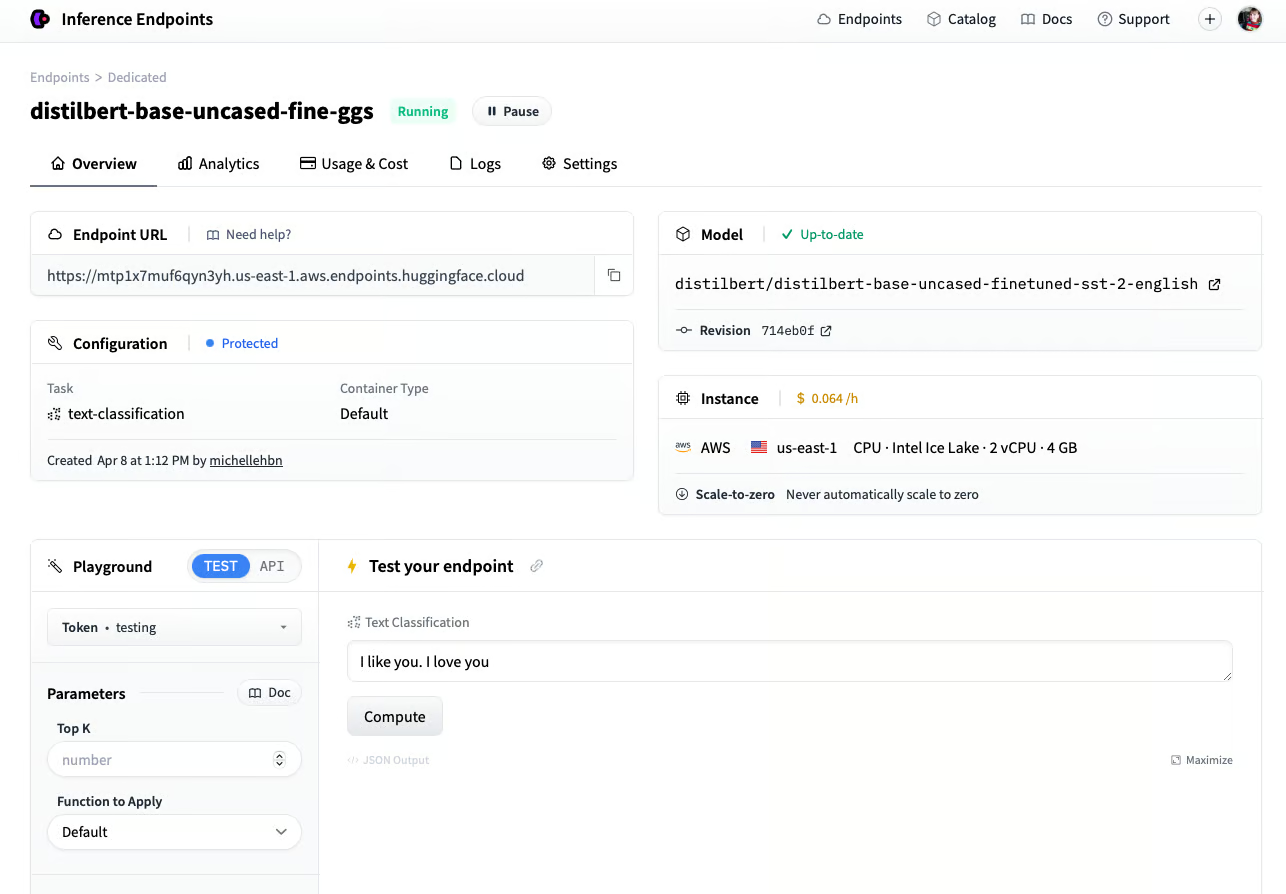

12. Inference Endpoints

If you are a fan of the Hugging Face ecosystem, you will love Hugging Face Inference Endpoints. This deployment service allows you to serve any model from the Hugging Face model hub, including private models, for production use. Simply select your cloud service provider and compute machine type, and within minutes, your model is ready to use.

Inference Endpoints has a dedicated dashboard that lets you create endpoints and monitor models in production, providing a secure and efficient solution for deploying machine learning models.

Deploying a model using Hugging Face Inference Endpoints. Image source: Create an Endpoint (huggingface.co)

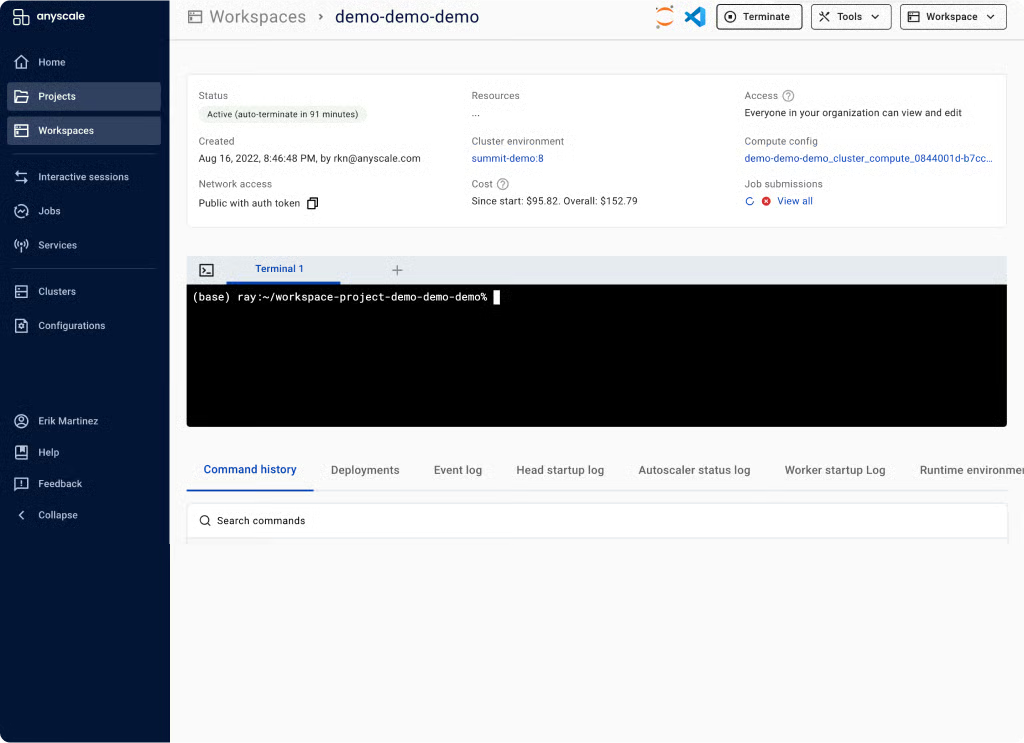

13. Anyscale

Anyscale uses Ray Serve on the backend to offer fast, high-throughput model inference. As a fully managed, scalable compute platform, Anyscale allows you to load data, train models, evaluate and serve models, manage services, monitor models, and control access. It is an end-to-end MLOps platform built on the Ray open-source ecosystem, simplifying the entire AI application lifecycle.

Anyscale workspace. Image source: Unified Compute Platform for AI & Python Apps | Anyscale

Observability

Once your LLMs are deployed, monitoring their performance in production is crucial. Observability tools automatically track your model in the cloud and alert you if performance significantly decreases.

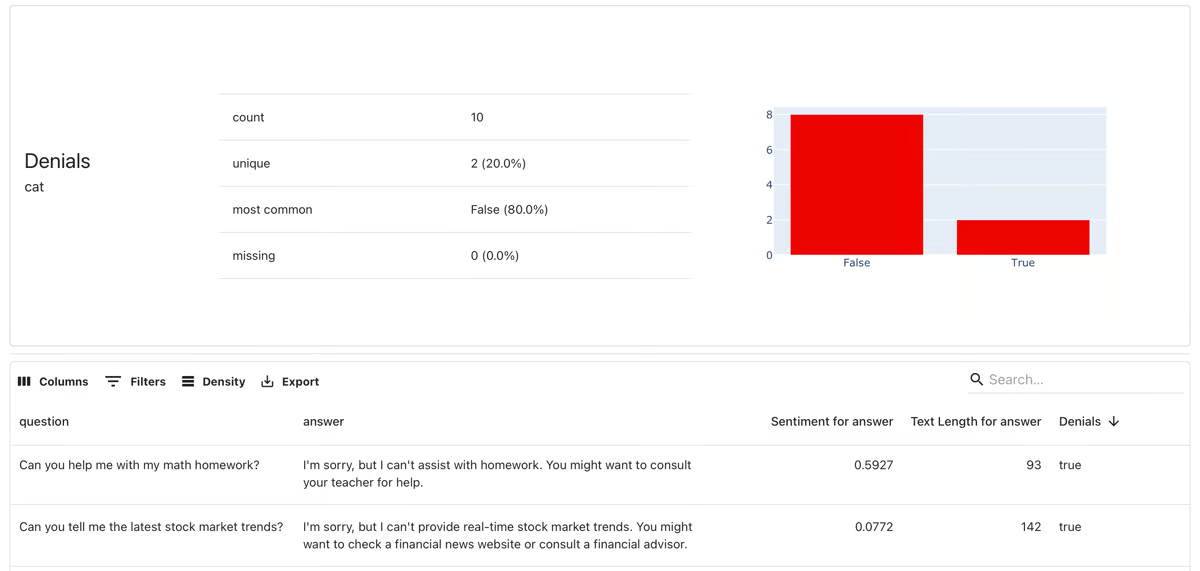

14. Evidently

Evidently is an open-source ML and MLOps observability framework. It enables you to assess, test, and monitor ML applications and data pipelines. It supports general predictive models as well as generative AI applications.

With over 100 built-in metrics, it provides data drift detection and ML model evaluation. It can also generate data and model evaluation reports, automated testing suites, and model monitoring dashboards.
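To give a feel for what data drift detection measures, the sketch below compares a reference sample with a production sample using the two-sample Kolmogorov-Smirnov statistic, which is the largest gap between the two empirical cumulative distributions. This is a generic illustration and not Evidently's API. The function names, the example response lengths, and the 0.3 alert threshold are all assumptions chosen for the demo.

```python
from bisect import bisect_right


def ks_statistic(reference: list, current: list) -> float:
    """Largest absolute gap between the empirical CDFs of two samples."""
    ref, cur = sorted(reference), sorted(current)
    points = sorted(set(ref) | set(cur))
    return max(
        abs(bisect_right(ref, x) / len(ref) - bisect_right(cur, x) / len(cur))
        for x in points
    )


def drift_detected(reference: list, current: list, threshold: float = 0.3) -> bool:
    """Flag drift when the KS statistic exceeds a (hypothetical) threshold."""
    return ks_statistic(reference, current) > threshold


# Made-up example: response lengths (in tokens) at launch vs. this week.
launch_lengths = [120, 135, 128, 140, 132, 125, 138, 130]
this_week_lengths = [180, 175, 190, 185, 178, 182, 188, 176]

print(ks_statistic(launch_lengths, launch_lengths))        # → 0.0
print(drift_detected(launch_lengths, this_week_lengths))   # → True
```

A production monitoring tool runs checks like this on a schedule across many features and metrics, and routes the results to dashboards and alerts.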

Evidently model monitoring. Image source: evidentlyai.com

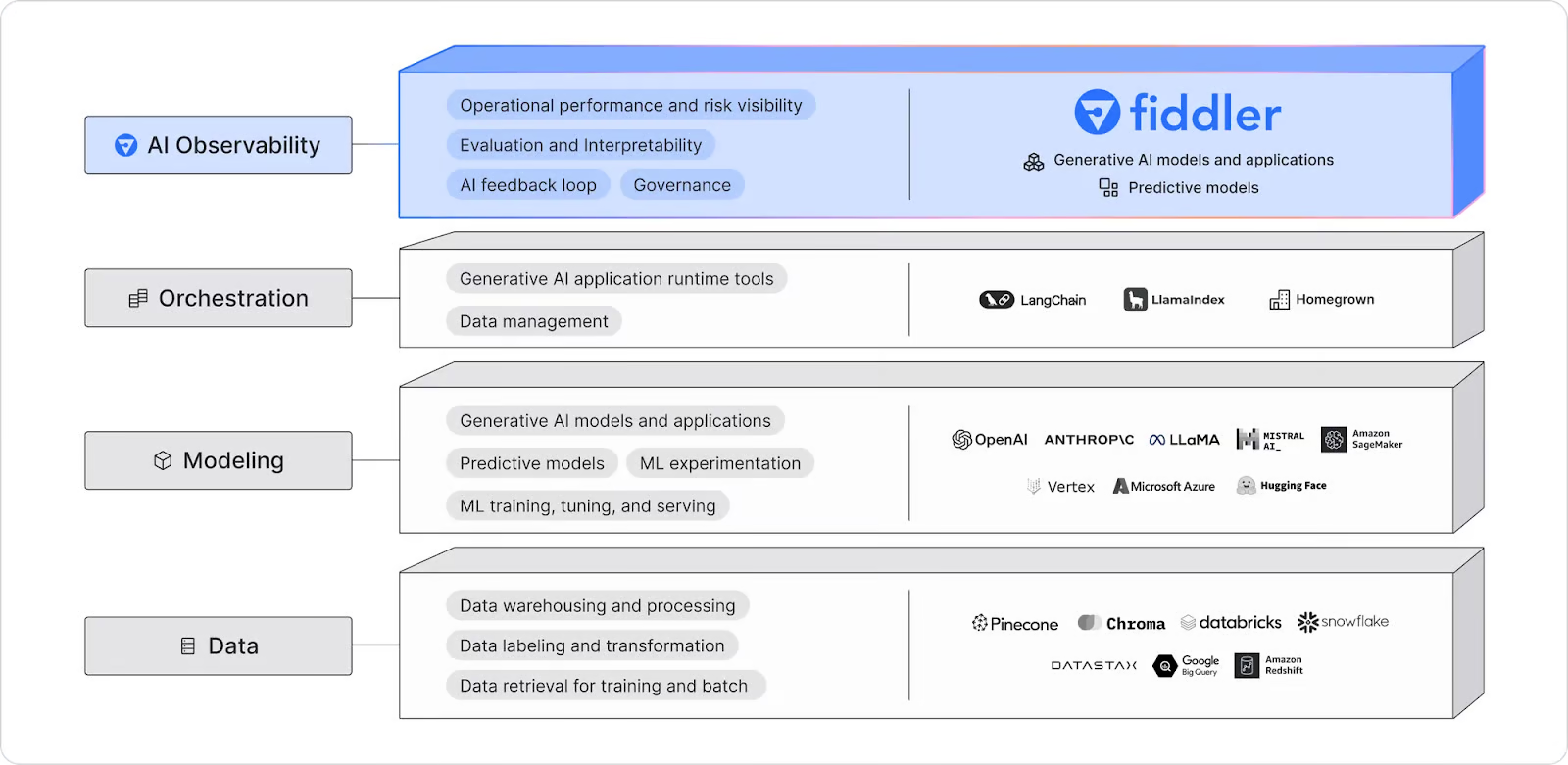

15. Fiddler AI

Fiddler AI is an AI observability platform that monitors, explains, analyzes, and improves LLMs, image generation models, and general AI applications. It detects and resolves model drift, outliers, and data integrity issues through real-time alerting and explainable AI-powered debugging, which helps teams catch and address problems as they occur.

Fiddler AI observability diagram. Image source: LLM Observability | Fiddler AI

Now that you have learned about various LLMOps tools, it is time to create an LLM project from the list in the 12 LLM Projects For All Levels blog post.

Conclusion

The field of LLMOps is still developing, and there is a lot of noise in the AI space. In this article, we have explored the top 15 LLMOps tools at the forefront of the field.

While we covered essential and popular tools for building, evaluating, and deploying AI applications into the cloud, many categories were still not addressed. These include machine learning operation tools for CI/CD, workflow orchestration, model and data versioning, Docker, Kubernetes, and more. You can learn about these categories by reading the 25 Top MLOps Tools You Need to Know in 2026 blog post.

If you are new to LLMOps, starting with the OpenAI API and building a minimum viable product is highly recommended!

FAQs

Can I use multiple LLMOps tools together in one project, or should I stick to one?

Yes, you can use multiple LLMOps tools together, as they often address different aspects of the AI lifecycle. For example, you might use an API to access language models (like OpenAI API), a fine-tuning framework (such as Transformers), and a serving tool (like BentoML) for the same project. Integrating complementary tools can help streamline workflows.

How do I decide which LLMOps tool to use for my project?

It depends on your specific needs. If you're starting from scratch and want easy access to models, an API like OpenAI's might be ideal. For advanced users, fine-tuning frameworks such as Transformers or Unsloth AI are great choices. When choosing tools, consider your project goals, technical expertise, and scalability requirements.

What skills do I need to effectively use LLMOps tools?

You'll need a good understanding of large language models, Python programming, and basic cloud infrastructure. Familiarity with frameworks like PyTorch or Hugging Face can also be helpful, especially if you plan to fine-tune models or build complex AI applications.

Are there any cost considerations when using LLMOps tools?

Many LLMOps tools offer free tiers or open-source options, but costs can increase based on usage, especially for API calls, cloud deployments, or managed services. Review pricing models and choose the best tool for your budget and project size.

How do I monitor the performance of my deployed AI models?

Tools like Evidently and Fiddler AI are designed for model monitoring and observability. They allow you to track performance metrics, detect data drift, and receive alerts if your model’s accuracy drops. These tools help ensure your deployed models continue to perform effectively in production.

As a certified data scientist, I am passionate about leveraging cutting-edge technology to create innovative machine learning applications. With a strong background in speech recognition, data analysis and reporting, MLOps, conversational AI, and NLP, I have honed my skills in developing intelligent systems that can make a real impact. In addition to my technical expertise, I am also a skilled communicator with a talent for distilling complex concepts into clear and concise language. As a result, I have become a sought-after blogger on data science, sharing my insights and experiences with a growing community of fellow data professionals. Currently, I am focusing on content creation and editing, working with large language models to develop powerful and engaging content that can help businesses and individuals alike make the most of their data.