Businesses and individuals generate vast amounts of textual data daily. However, extracting insights from this unstructured information poses a challenge for traditional data processing methods.

Natural language processing (NLP) encompasses a range of techniques for analyzing and understanding text, but the specific subfield of natural language understanding (NLU) focuses on machine comprehension of the meaning, context, and intent behind human language.

NLU enables the transformation of raw text into actionable insights, opening the way for applications like intelligent chatbots and sentiment analysis tools.

Despite significant advances, NLU remains one of the most challenging open problems in AI, with its complexity rooted in the nuances and ambiguities of natural language. This article will provide you with a solid foundation in NLU.

What Is NLU?

Natural language understanding (NLU) is a subfield of natural language processing (NLP) focused on enabling machines to comprehend and interpret human language.

Unlike other NLP tasks that might involve generating or translating text, NLU is specifically concerned with understanding the meaning, context, and intent behind the words and phrases people use.

At its core, NLU is about transforming unstructured language data into structured information that machines can work with. This involves tasks such as identifying entities in a sentence, determining the sentiment of a statement, or classifying the intent behind a user’s query.

For example, when someone says, "Book a flight to New York," an NLU system must recognize "book" as an action, "flight" as the object, and "New York" as the destination.
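To make the flight example concrete, here is a minimal rule-based sketch that turns the utterance into a structured record. The regular expression and the field names (`action`, `object`, `destination`) are illustrative assumptions, not part of any particular NLU library; real systems usually learn such mappings from labeled data instead of hard-coding them.

```python
import re
from typing import Optional

# Hypothetical pattern for one request shape: "<action> a <object> to <destination>".
REQUEST = re.compile(
    r"^\s*(?P<action>book|reserve)\s+(?:a\s+)?(?P<object>flight|train)\s+to\s+"
    r"(?P<destination>.+?)\s*[.!?]?\s*$",
    re.IGNORECASE,
)


def parse_request(utterance: str) -> Optional[dict]:
    """Turn an utterance into a structured record, or None if the rule does not apply."""
    match = REQUEST.match(utterance)
    if match is None:
        return None
    return {
        "action": match["action"].lower(),    # e.g. "book"
        "object": match["object"].lower(),    # e.g. "flight"
        "destination": match["destination"],  # e.g. "New York"
    }


print(parse_request("Book a flight to New York"))
# {'action': 'book', 'object': 'flight', 'destination': 'New York'}
```

A rule like this is brittle: it returns `None` for a paraphrase such as "I need to fly to New York", which is one reason modern NLU relies on trained models rather than hand-written patterns.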

How NLU Works

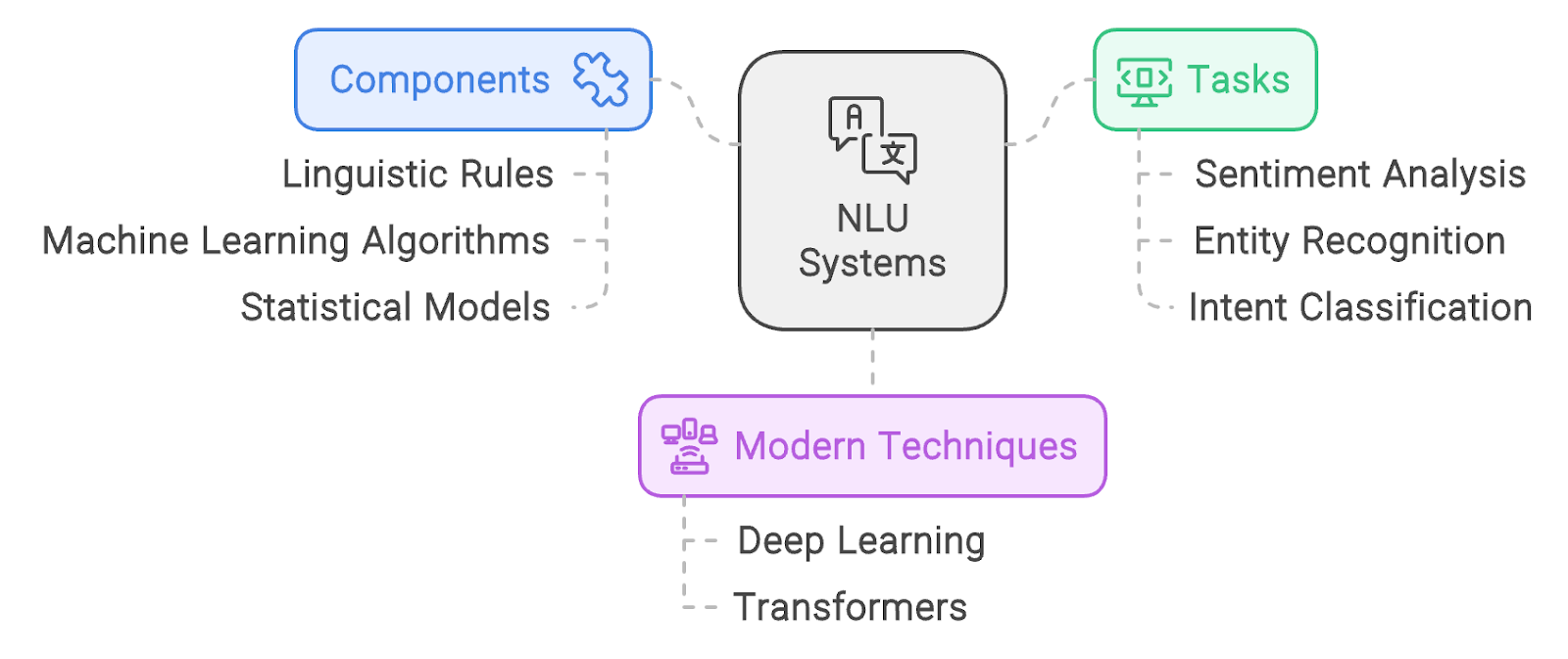

Natural language understanding (NLU) relies on a combination of linguistic rules, machine learning algorithms, and statistical models to interpret human language accurately.

NLU systems transform unstructured language data into structured information that machines can use to perform tasks such as sentiment analysis, entity recognition, and intent classification.

Modern NLU relies mostly on deep learning, particularly transformer models. Even general-purpose models like ChatGPT can parse most English sentences and extract their meaning, although they can still misinterpret ambiguous or unusual phrasing.

NLU vs NLP

It is important to clarify that natural language understanding (NLU) is not separate from natural language processing (NLP) but rather a critical component within it. While people often use the term "NLU vs. NLP," this framing is misleading because NLU is a subset of NLP.

The only meaningful comparison between these two would involve discussing NLP tasks that do not fall under NLU. However, that would shift the focus away from NLU, so we will not pursue this line of comparison.

NLP encompasses a wide range of tasks related to the processing and analysis of text, while NLU specifically focuses on comprehension. For instance, while NLP might involve tasks like speech recognition or text generation, NLU is about understanding the input text’s meaning—what the user is trying to say and why.

The table below highlights some of the key differences between the two:

| Aspect | NLP | NLU |
|---|---|---|
| Scope | Broad (includes all aspects of processing and generating human language) | Narrow (focused specifically on understanding meaning, intent, and context) |
| Example tasks | Speech recognition, text generation, machine translation | Entity recognition, intent classification, sentiment analysis |
| Focus | Both understanding and generation of language | Comprehension of language meaning and intent |

This table demonstrates that while NLP covers a broad range of tasks involving both the understanding and generation of human language, NLU is specifically focused on comprehension.

Applications of NLU

Natural language understanding (NLU) is at the core of many AI-driven applications. It enables machines (for example, chatbots) to interact with humans in ways that feel intuitive and natural. Below are some key areas where NLU is making a significant impact, alongside some examples of modern tooling for each.

Chatbots

One of the primary applications of NLU is in chatbots and virtual assistants. These systems rely on NLU to accurately interpret and respond to user requests.

For instance, when a user asks, "Can you find me a nearby coffee shop?" the NLU component helps the system understand that the user is looking for a coffee shop close to their current location, allowing the chatbot or assistant to provide a relevant response.

Beyond understanding individual queries, NLU is essential for maintaining the flow of a conversation. It enables chatbots and virtual assistants to keep track of context, so that a follow-up such as "What about one that's open late?" is interpreted as a refinement of the earlier coffee shop request rather than as a new, incomplete query. NLU can also help a chatbot recognize when a user is frustrated or angry so that it can adjust its response.
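Context tracking is often implemented as a dialogue state that accumulates slot values across turns. The sketch below is a minimal, hypothetical version: the `DialogueState` class, the intent names, and the slot names are invented for illustration, and the per-turn NLU output is written by hand rather than produced by a real parser. In the example, a user first asks for a nearby coffee shop and then follows up with "What about one that's open late?".

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class DialogueState:
    """Carries the active intent and its slot values across conversation turns."""

    intent: Optional[str] = None
    slots: dict = field(default_factory=dict)

    def update(self, intent: Optional[str], slots: dict) -> "DialogueState":
        # A different, explicit intent starts a fresh frame.
        if intent is not None and intent != self.intent:
            self.intent = intent
            self.slots = {}
        # New values override old ones; slots the user did not mention carry over.
        self.slots.update(slots)
        return self


state = DialogueState()
# Turn 1: "Can you find me a nearby coffee shop?" (hand-written NLU output)
state.update("find_place", {"category": "coffee shop", "near": "current_location"})
# Turn 2: "What about one that's open late?" has no explicit intent, so it refines turn 1.
state.update(None, {"open_late": True})
print(state.intent, state.slots)
```

Because the second turn carries no intent of its own, it inherits `find_place` and the earlier slots; a turn with a new intent (for example, booking a flight) would start a fresh frame instead.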

A popular tool for this is the Rasa framework, an open-source library for building chatbots with advanced NLU capabilities.

Sentiment analysis

Sentiment analysis is another critical application of NLU, and it is used extensively to gauge public opinion and customer feedback.

Businesses use NLU to analyze the sentiment expressed in customer reviews, social media posts, and other forms of feedback to determine whether the general sentiment is positive or negative.

One commonly used sentiment analysis tool I’d like to mention is VADER (Valence Aware Dictionary and sEntiment Reasoner). It is an open-source rule-based tool specifically designed to understand sentiment in social media.
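VADER scores text by looking up word valences in a lexicon and adjusting them with rules, for example for negations and intensifiers such as "very". The sketch below imitates that general idea with a tiny, made-up lexicon; it is not VADER's lexicon, rule set, or API, and the squashing constant is a choice made for this sketch.

```python
import math
import re

# Made-up word valences for illustration only (not VADER's lexicon).
LEXICON = {"love": 3.0, "great": 3.0, "good": 2.0, "fine": 1.0,
           "slow": -1.0, "bad": -2.0, "hate": -3.0, "terrible": -3.0}
NEGATIONS = {"not", "no", "never", "isn't", "wasn't", "don't", "didn't"}
INTENSIFIERS = {"very": 1.5, "really": 1.5, "extremely": 2.0}


def sentiment(text: str) -> float:
    """Return a score in (-1, 1): below 0 is negative, above 0 is positive."""
    tokens = re.findall(r"[a-z']+", text.lower())
    total = 0.0
    for i, token in enumerate(tokens):
        valence = LEXICON.get(token)
        if valence is None:
            continue
        # Check the two preceding tokens for intensifiers and negations.
        for prev in tokens[max(0, i - 2):i]:
            if prev in INTENSIFIERS:
                valence *= INTENSIFIERS[prev]
            elif prev in NEGATIONS:
                valence = -valence
        total += valence
    # Squash the unbounded sum into (-1, 1); 15 is a tuning constant chosen for this sketch.
    return total / math.sqrt(total * total + 15)


for review in ["I love this phone!", "The battery is not good", "Delivery was really slow"]:
    print(f"{sentiment(review):+.2f}  {review}")
```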

Text classification

NLU plays a vital role in text classification tasks such as spam filtering. By analyzing the content and intent behind emails or messages, NLU systems can effectively distinguish legitimate messages (often called "ham") from spam.
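A classic statistical approach to spam filtering is multinomial naive Bayes, which compares how likely a message's words are under each class. Here is a minimal from-scratch sketch with add-one (Laplace) smoothing; the class name `NaiveBayes` and the six training messages are invented toy examples, and a usable filter would be trained on a much larger labeled corpus.

```python
import math
import re
from collections import Counter, defaultdict


def tokenize(text):
    return re.findall(r"[a-z0-9']+", text.lower())


class NaiveBayes:
    """Multinomial naive Bayes text classifier with add-one (Laplace) smoothing."""

    def fit(self, docs, labels):
        self.class_counts = Counter(labels)
        self.word_counts = defaultdict(Counter)  # label -> word -> count
        for doc, label in zip(docs, labels):
            self.word_counts[label].update(tokenize(doc))
        self.vocab = {w for counts in self.word_counts.values() for w in counts}
        return self

    def predict(self, doc):
        n_docs = sum(self.class_counts.values())
        best_label, best_score = None, -math.inf
        for label, n_label in self.class_counts.items():
            total = sum(self.word_counts[label].values())
            # log P(label) + sum of log P(word | label); logs avoid numeric underflow
            score = math.log(n_label / n_docs)
            for word in tokenize(doc):
                if word not in self.vocab:
                    continue  # ignore words never seen in training
                count = self.word_counts[label][word]
                score += math.log((count + 1) / (total + len(self.vocab)))
            if score > best_score:
                best_label, best_score = label, score
        return best_label


# Invented toy training data.
docs = [
    "Win a free prize now",
    "Claim your free cash reward today",
    "Limited offer: win money now",
    "Are we still meeting for lunch tomorrow?",
    "Here are the notes from today's meeting",
    "Can you review my draft before Friday?",
]
labels = ["spam", "spam", "spam", "ham", "ham", "ham"]

model = NaiveBayes().fit(docs, labels)
print(model.predict("Win free cash now"))               # spam
print(model.predict("Notes for the meeting tomorrow"))  # ham
```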

Two popular tools for text classification are spaCy and NLTK (Natural Language Toolkit).

spaCy is an open-source Python library built for fast, efficient NLP. It offers pre-trained pipelines that can be extended and trained for various text classification tasks.

NLTK is another powerful Python library that provides easy-to-use tools for tasks like tokenization, part-of-speech tagging, and text classification. Both libraries can be used to build text classification pipelines.

Challenges of NLU

NLU is often referred to as an AI-hard problem, meaning it is one of the most complex and challenging areas in AI. The difficulty lies in the nuances of human language—ambiguities, idioms, context, and cultural differences all contribute to the complexity.

Ambiguity

One of the most significant challenges in NLU is handling the inherent ambiguity in human languages.

A single sentence can often be interpreted in multiple ways. For example, consider the sentence “I saw a man with binoculars.” It could mean that the speaker used binoculars to see the man, or that the man the speaker saw was holding binoculars.
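This kind of structural ambiguity shows up directly in parsing. The sketch below runs the CKY algorithm over a tiny, hypothetical context-free grammar in Chomsky normal form and counts the parse trees for the sentence. One tree attaches "with binoculars" to the verb phrase (the speaker used binoculars); the other attaches it to the noun phrase (the man had them). The grammar and lexicon cover only this example.

```python
from collections import defaultdict

# Toy grammar in Chomsky normal form (hypothetical, covers only this example).
BINARY_RULES = [
    ("S", "NP", "VP"),
    ("VP", "V", "NP"),
    ("VP", "VP", "PP"),  # PP attaches to the verb phrase: "saw ... with binoculars"
    ("NP", "Det", "N"),
    ("NP", "NP", "PP"),  # PP attaches to the noun phrase: "a man with binoculars"
    ("PP", "P", "NP"),
]
LEXICON = {"i": {"NP"}, "saw": {"V"}, "a": {"Det"}, "man": {"N"},
           "with": {"P"}, "binoculars": {"NP"}}


def count_parses(sentence: str, start: str = "S") -> int:
    """Count distinct parse trees for a sentence using the CKY algorithm."""
    words = sentence.lower().rstrip(".").split()
    n = len(words)
    # chart[i][j] maps a nonterminal to the number of ways it can span words[i:j].
    chart = [[defaultdict(int) for _ in range(n + 1)] for _ in range(n + 1)]
    for i, word in enumerate(words):
        for tag in LEXICON.get(word, ()):
            chart[i][i + 1][tag] += 1
    # Fill longer spans from pairs of shorter, already-completed spans.
    for length in range(2, n + 1):
        for i in range(n - length + 1):
            j = i + length
            for k in range(i + 1, j):
                for parent, left, right in BINARY_RULES:
                    ways = chart[i][k][left] * chart[k][j][right]
                    if ways:
                        chart[i][j][parent] += ways
    return chart[0][n][start]


print(count_parses("I saw a man with binoculars."))  # 2
print(count_parses("I saw a man."))                  # 1
```

The grammar alone cannot choose between the two trees; picking one requires knowledge from outside the sentence, such as context or world knowledge.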

Disambiguating such sentences requires an understanding of the context, something that is relatively straightforward for humans but remains a considerable challenge for machines.

Idioms and figurative language

Idiomatic expressions and figurative language add another layer of complexity to NLU. Phrases like "kick the bucket" or "spill the beans" have meanings that are not directly related to the literal interpretation of the words.

For NLU systems to interpret such expressions accurately, they must be trained on large amounts of data that capture these cultural and linguistic nuances, which can vary widely across different languages and regions.
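The simplest workaround, a hand-written lookup table that rewrites known idioms into literal paraphrases before further analysis, shows why coverage is the hard part. In this sketch the idiom table and the `literalize` helper are hypothetical; note that every inflected form (spill/spilled) needs its own entry, which is one reason learned models trained on varied data are preferred.

```python
import re

# Hypothetical idiom table; real coverage would need many entries per language.
IDIOMS = {
    "kick the bucket": "die",
    "kicked the bucket": "died",
    "spill the beans": "reveal the secret",
    "spilled the beans": "revealed the secret",
}

# Try longer idioms first and match only on word boundaries.
_PATTERN = re.compile(
    r"\b(" + "|".join(re.escape(k) for k in sorted(IDIOMS, key=len, reverse=True)) + r")\b",
    re.IGNORECASE,
)


def literalize(text: str) -> str:
    """Replace known idioms with literal paraphrases before further analysis."""
    return _PATTERN.sub(lambda m: IDIOMS[m.group(0).lower()], text)


print(literalize("He finally spilled the beans."))  # He finally revealed the secret.
print(literalize("She kicked the ball."))           # unchanged: not an idiom
```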

Cultural and linguistic diversity

Language is not uniform. It varies significantly across different cultures, regions, and even social groups. An NLU system that works well in one language or cultural context might fail in another.

For instance, slang, dialects, and regional expressions can be challenging for NLU systems to interpret correctly. Building systems that can handle this diversity requires extensive and diverse training data, which is often difficult to obtain.

Data bias

Bias in training data poses another significant challenge for NLU systems. If the data used to train an NLU model is biased, the model's predictions and interpretations will reflect that bias.

For example, if a model is trained on text from a particular demographic, it may not perform well when interpreting language from different demographics.
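One practical way to surface this kind of bias is to evaluate a model separately on each group instead of reporting a single overall score. The sketch below computes per-group accuracy and the gap between the best and worst group; the group names and predictions are made-up placeholders, not results from any real model.

```python
from collections import defaultdict


def accuracy_by_group(examples):
    """Return {group: accuracy} from (group, gold_label, predicted_label) tuples."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, gold, predicted in examples:
        total[group] += 1
        correct[group] += int(gold == predicted)
    return {group: correct[group] / total[group] for group in total}


# Hypothetical predictions from a sentiment model on text written by two groups.
results = [
    ("group_a", "positive", "positive"),
    ("group_a", "negative", "negative"),
    ("group_a", "positive", "positive"),
    ("group_a", "negative", "negative"),
    ("group_b", "positive", "negative"),
    ("group_b", "negative", "negative"),
    ("group_b", "positive", "positive"),
    ("group_b", "positive", "negative"),
]

scores = accuracy_by_group(results)
gap = max(scores.values()) - min(scores.values())
print(scores, f"gap={gap:.2f}")
```

A large gap between groups is a signal to collect more representative training data for the weaker group, even when the overall accuracy looks acceptable.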

Conclusion

Natural language understanding (NLU) is an important subfield of AI and NLP that focuses on enabling machines to accurately comprehend and interpret human language.

By understanding the principles and challenges of NLU, including its role within the broader context of NLP, you can appreciate the complexity and significance of natural language understanding.