With the release of QwQ-32B, Alibaba’s Qwen Team is proving once again that it’s a serious competitor in the AI space. This model achieves performance close to DeepSeek-R1, a leading reasoning model, but does so at a fraction of the size—32 billion parameters compared to DeepSeek’s 671 billion.

If QwQ-32B sounds familiar, that’s because it builds on QwQ-32B-Preview, which we tested in an earlier blog post. With this final release, Qwen has fine-tuned its approach, refining the model’s reasoning capabilities and making it widely available as an open-source model.

In this blog, I’ll cut through the noise and break down the essentials about QwQ-32B—how it works, how it compares to other models, and how you can access it.

What Is QwQ-32B?

QwQ-32B is not just a regular chatbot-style AI model—it belongs to a different category: reasoning models.

While most general-purpose AI models, like GPT-4.5 or DeepSeek-V3, are designed to generate fluid, conversational text on a wide range of topics, reasoning models focus on breaking down problems logically, working through steps, and arriving at structured answers.

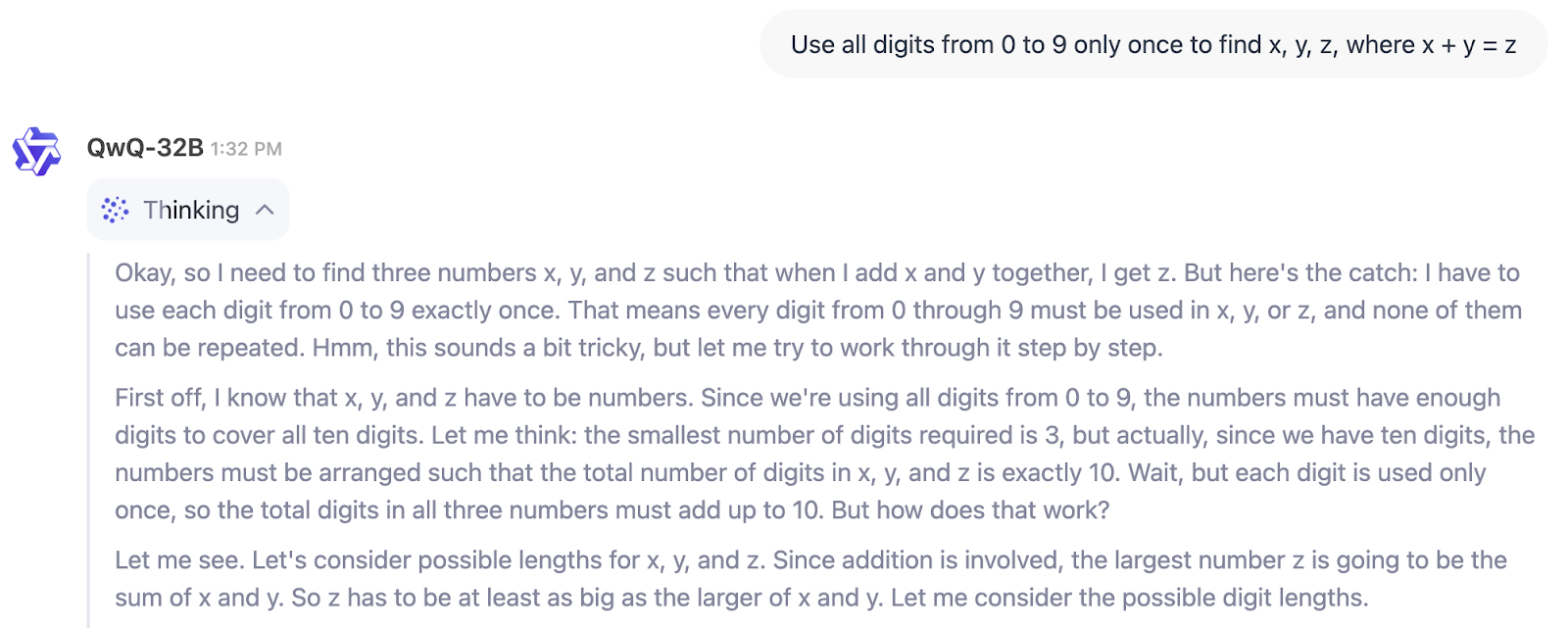

In the example below, we can directly see QwQ-32B’s thinking process:

So, who is QwQ-32B for? If you’re looking for a model to help with writing, brainstorming, or summarizing, this isn’t it.

But if you need something to tackle technical problems, verify multi-step solutions, or assist in domains like scientific research, finance, or software development, QwQ-32B is built for that kind of structured reasoning. It’s particularly useful for engineers, researchers, and developers who require AI that can handle logical workflows rather than just generating text.

There’s also a broader industry trend to consider. Similar to the rise of small language models (SLMs), QwQ-32B may mark the emergence of “small reasoning models” (I totally made this term up). Why am I saying this? Well, there’s a roughly 21-fold difference between the 671B parameters of DeepSeek-R1 and the 32B of QwQ-32B, yet QwQ-32B still gets close in performance (as we’ll see below in the section on benchmarks).

QwQ-32B Architecture

QwQ-32B is built to reason through complex problems, and a big part of that comes from how it was trained. Unlike traditional AI models that rely only on pretraining and fine-tuning, QwQ-32B incorporates reinforcement learning (RL), a method that allows the model to refine its reasoning by learning from trial and error.

This training approach has been gaining traction in the AI space, with models like DeepSeek-R1 using multi-stage RL training to achieve stronger reasoning capabilities.

How reinforcement learning improves AI reasoning

Most language models learn by predicting the next word in a sentence based on vast amounts of text data. While this works well for fluency, it doesn’t necessarily make them good at problem-solving.

Reinforcement learning changes this by introducing a feedback system: instead of just generating text, the model gets rewarded for finding the right answer or following a correct reasoning path. Over time, this helps the AI develop better judgment when tackling complex problems like math, coding, and logical reasoning.
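To make the idea of "getting rewarded for the right answer" concrete, here is a toy outcome-based reward function. It is a sketch under stated assumptions, not Qwen's training code: the `Answer:` marker, the function names, and the sample responses are all made up for illustration.

```python
import re
from typing import Optional

# Assumed convention: the model ends its response with "Answer: <number>".
ANSWER_PATTERN = re.compile(r"Answer:\s*(-?\d+(?:\.\d+)?)")

def extract_final_answer(response: str) -> Optional[float]:
    """Return the last number that follows an 'Answer:' marker, or None."""
    matches = ANSWER_PATTERN.findall(response)
    return float(matches[-1]) if matches else None

def outcome_reward(response: str, expected: float, tol: float = 1e-6) -> float:
    """Reward 1.0 if the final answer matches the reference, else 0.0."""
    answer = extract_final_answer(response)
    if answer is None:
        return 0.0  # no parseable answer earns no reward
    return 1.0 if abs(answer - expected) <= tol else 0.0

# Two hypothetical responses to "What is 17 * 3 + 9?"
good = "17 * 3 = 51, then 51 + 9 = 60.\nAnswer: 60"
bad = "17 * 3 = 41, then 41 + 9 = 50.\nAnswer: 50"
print(outcome_reward(good, 60.0), outcome_reward(bad, 60.0))
```

In an RL loop, a signal like this would nudge the model toward responses that end in verified answers. A real setup would need a far more robust checker than a regular expression.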

QwQ-32B takes this further by integrating agent-related capabilities, allowing it to adapt its reasoning based on environmental feedback. This means that instead of just memorizing patterns, the model can use tools, verify outputs, and refine its responses dynamically. These improvements make it more reliable for structured reasoning tasks, where simply predicting words isn’t enough.

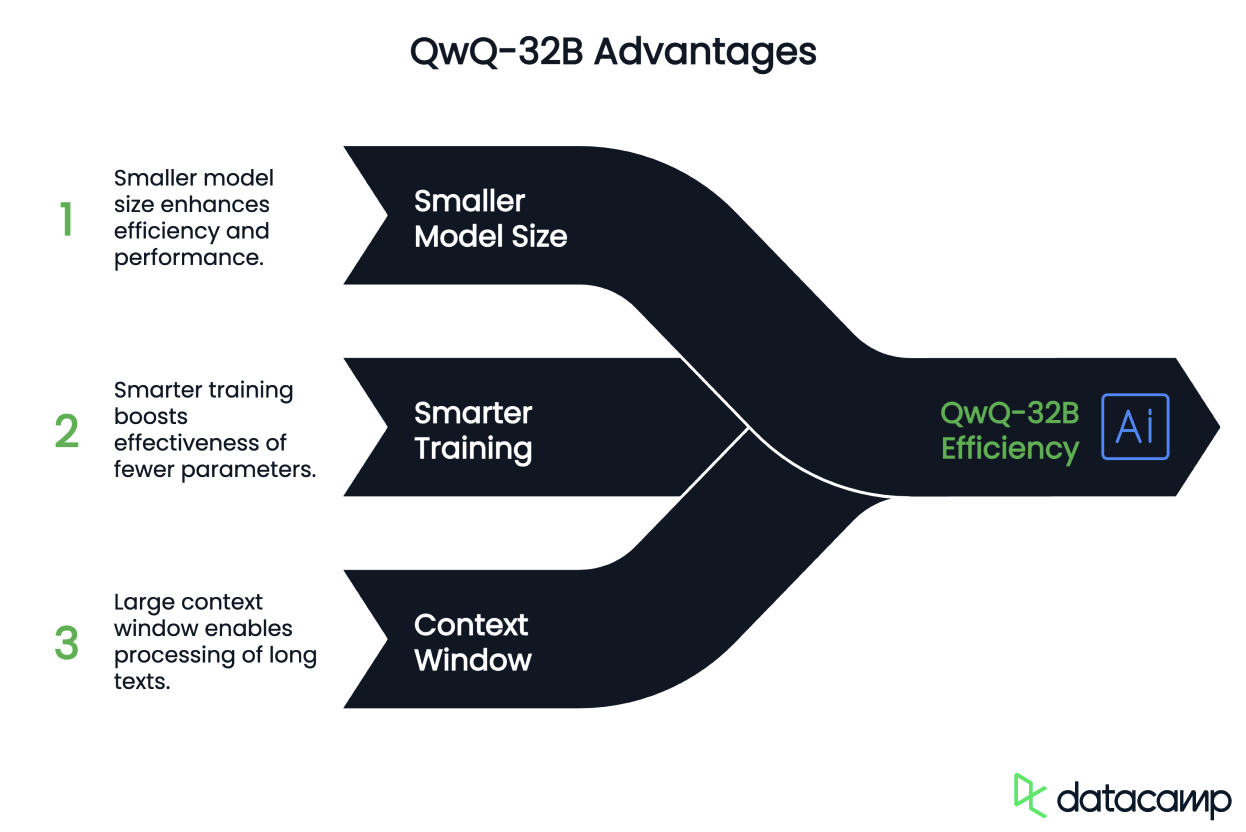

Smaller model, smarter training

One of the most impressive aspects of QwQ-32B’s development is its efficiency. Despite having only 32 billion parameters, it achieves performance comparable to DeepSeek-R1, which has 671 billion parameters (with 37 billion activated). This suggests that scaling up reinforcement learning can be just as impactful as increasing model size.
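The size gap is easy to check with a few lines of arithmetic. This sketch uses only the parameter counts quoted above; the variable and function names are illustrative.

```python
# Parameter counts quoted in the article, in billions.
QWQ_32B_PARAMS = 32
DEEPSEEK_R1_TOTAL = 671
DEEPSEEK_R1_ACTIVE = 37  # parameters activated per token

def ratio(a: float, b: float) -> float:
    """Return how many times larger a is than b."""
    return a / b

total_ratio = ratio(DEEPSEEK_R1_TOTAL, QWQ_32B_PARAMS)
active_ratio = ratio(DEEPSEEK_R1_ACTIVE, QWQ_32B_PARAMS)

print(f"Total parameters: DeepSeek-R1 is {total_ratio:.1f}x larger")
print(f"Active parameters: DeepSeek-R1 uses {active_ratio:.2f}x as many")
```

By total parameter count the gap is about 21x, but measured by parameters activated per token the two models are much closer, at about 1.16x.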

Another key aspect of its design is its 131,072-token context window, which allows it to process and retain information over long passages of text.
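Because a reasoning model's thinking steps and its final answer are generated tokens that share this window with the prompt, it can help to budget for them. Below is a minimal sketch of that bookkeeping; the function name and the prompt sizes are hypothetical.

```python
CONTEXT_WINDOW = 131_072  # tokens, as stated in the article (2**17)

def remaining_budget(prompt_tokens: int, context_window: int = CONTEXT_WINDOW) -> int:
    """Tokens left for the model's reasoning and final answer."""
    if prompt_tokens < 0:
        raise ValueError("prompt_tokens must be non-negative")
    return max(context_window - prompt_tokens, 0)

# Hypothetical prompt sizes, chosen only for illustration.
for prompt_tokens in (2_000, 100_000, 140_000):
    print(prompt_tokens, remaining_budget(prompt_tokens))
```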

QwQ-32B Benchmarks

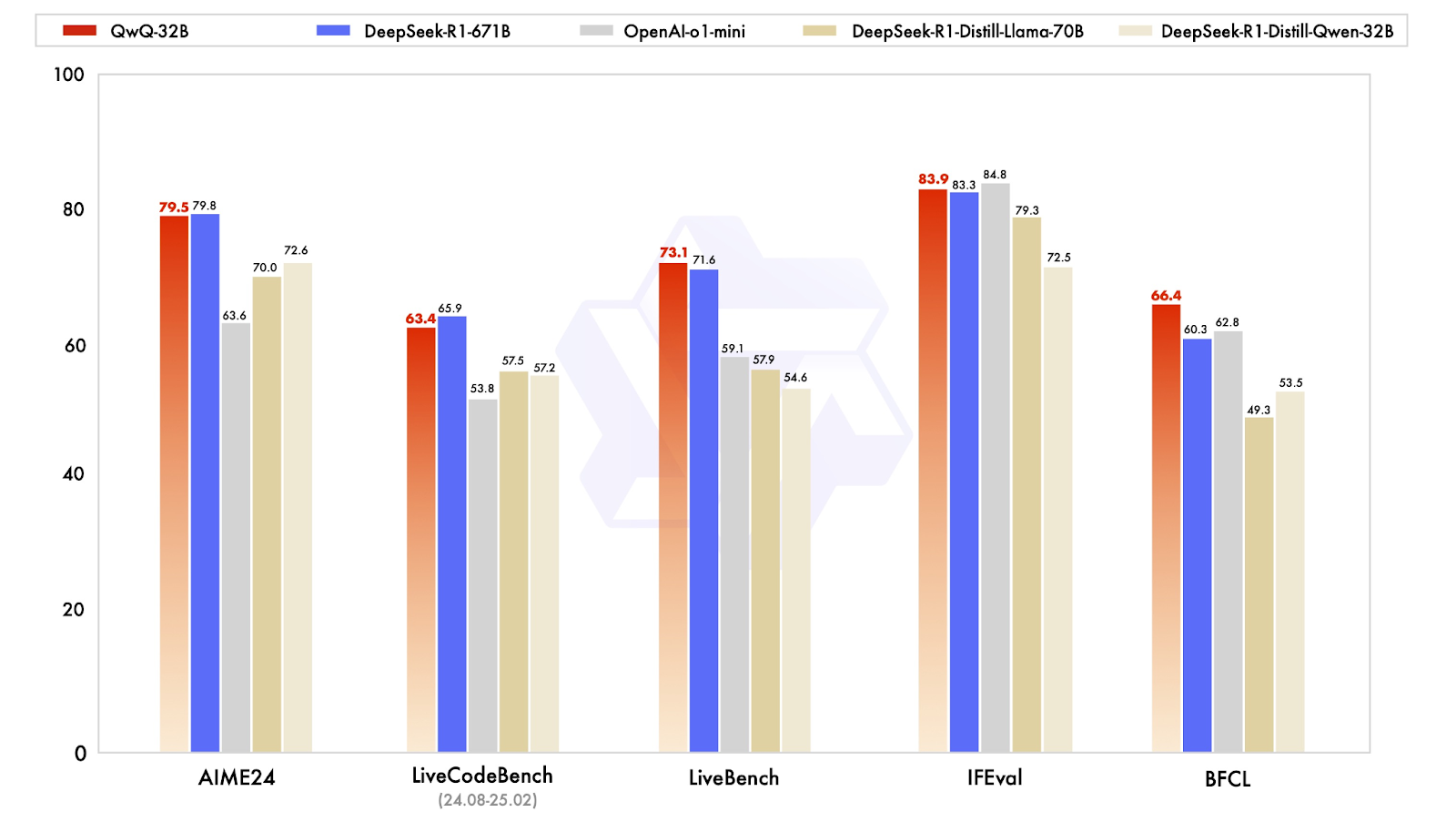

QwQ-32B is designed to compete with state-of-the-art reasoning models, and its benchmark results show that it gets surprisingly close to DeepSeek-R1, despite being much smaller in size. The model was tested on a range of benchmarks evaluating math, coding, and structured reasoning, where it often performed at or near DeepSeek-R1 levels.

Source: Qwen

Strong performance in math and logical reasoning

One of the most telling results comes from AIME24, a benchmark built from the 2024 American Invitational Mathematics Examination problems. QwQ-32B scored 79.5, just behind DeepSeek-R1 at 79.8 and well ahead of OpenAI’s o1-mini (63.6) and DeepSeek’s distilled models (70.0–72.6). This is particularly impressive given that QwQ-32B has just 32 billion parameters compared to DeepSeek-R1’s 671 billion.

Another key benchmark, IFEval, which tests how well a model follows explicit, verifiable instructions, also saw QwQ-32B perform at a competitive level, scoring 83.9, slightly above DeepSeek-R1. It’s only narrowly behind OpenAI’s o1-mini, which leads this category with a score of 84.8.

Coding capabilities and agentic behavior

For AI models meant to assist with software development, coding benchmarks are essential. In LiveCodeBench, which measures the ability to generate and refine code, QwQ-32B scored 63.4, slightly behind DeepSeek-R1 at 65.9 but significantly ahead of OpenAI’s o1-mini at 53.8. This suggests that reinforcement learning played a significant role in improving QwQ-32B’s ability to iteratively reason through coding problems rather than just generating one-off solutions.

QwQ-32B scored 73.1 on LiveBench, an evaluation of general problem-solving skills, slightly outperforming DeepSeek-R1’s score of 71.6. Both models scored significantly higher than OpenAI’s o1-mini, which achieved a score of 59.1. This supports the idea that small, well-optimized models can close the gap with much larger systems, at least in structured tasks.

QwQ-32B stands out in function calling

Perhaps the most interesting result is on BFCL (the Berkeley Function-Calling Leaderboard), a benchmark assessing how accurately a model calls functions and tools. Here, QwQ-32B achieved 66.4, surpassing DeepSeek-R1 (60.3) and OpenAI’s o1-mini (62.8). This suggests that QwQ-32B’s training approach, particularly its agentic capabilities and reinforcement learning strategies, gives it an edge in areas where problem-solving requires flexibility and adaptation rather than just memorized patterns.
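To see all the gaps in one place, the scores quoted in this section can be put into a small table and subtracted. This sketch uses only the numbers given in the article; where the article gives no exact figure (DeepSeek-R1 on IFEval), the entry is left as `None` rather than guessed. The names `SCORES` and `gap` are illustrative.

```python
from typing import Optional

# Scores as quoted in this article; None means no number is given here.
SCORES = {
    "AIME24":        {"QwQ-32B": 79.5, "DeepSeek-R1": 79.8, "o1-mini": 63.6},
    "IFEval":        {"QwQ-32B": 83.9, "DeepSeek-R1": None, "o1-mini": 84.8},
    "LiveCodeBench": {"QwQ-32B": 63.4, "DeepSeek-R1": 65.9, "o1-mini": 53.8},
    "LiveBench":     {"QwQ-32B": 73.1, "DeepSeek-R1": 71.6, "o1-mini": 59.1},
    "BFCL":          {"QwQ-32B": 66.4, "DeepSeek-R1": 60.3, "o1-mini": 62.8},
}

def gap(benchmark: str, other: str, base: str = "QwQ-32B") -> Optional[float]:
    """Base model's score minus the other model's score, or None if unknown."""
    row = SCORES[benchmark]
    if row[base] is None or row[other] is None:
        return None
    return round(row[base] - row[other], 1)

# Positive numbers mean QwQ-32B is ahead.
for name in SCORES:
    print(f"{name:<14} vs R1: {gap(name, 'DeepSeek-R1')}  vs o1-mini: {gap(name, 'o1-mini')}")
```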

How to Access QwQ-32B

QwQ-32B is fully open-source, making it one of the few high-performing reasoning models available for anyone to experiment with. Whether you want to test it interactively, integrate it into an application, or run it on your own hardware, there are multiple ways to access the model.

Interact with QwQ-32B online

For those who just want to try the model without setting anything up, Qwen Chat offers an easy way to interact with QwQ-32B. The web-based chatbot interface lets you test the model’s reasoning, math, and coding capabilities directly. While it’s not as flexible as running the model locally, it provides a straightforward way to see its strengths in action.

To try it, you need to access https://chat.qwen.ai/ and make an account. Once you’re in, start by selecting the QwQ-32B model in the model picker menu:

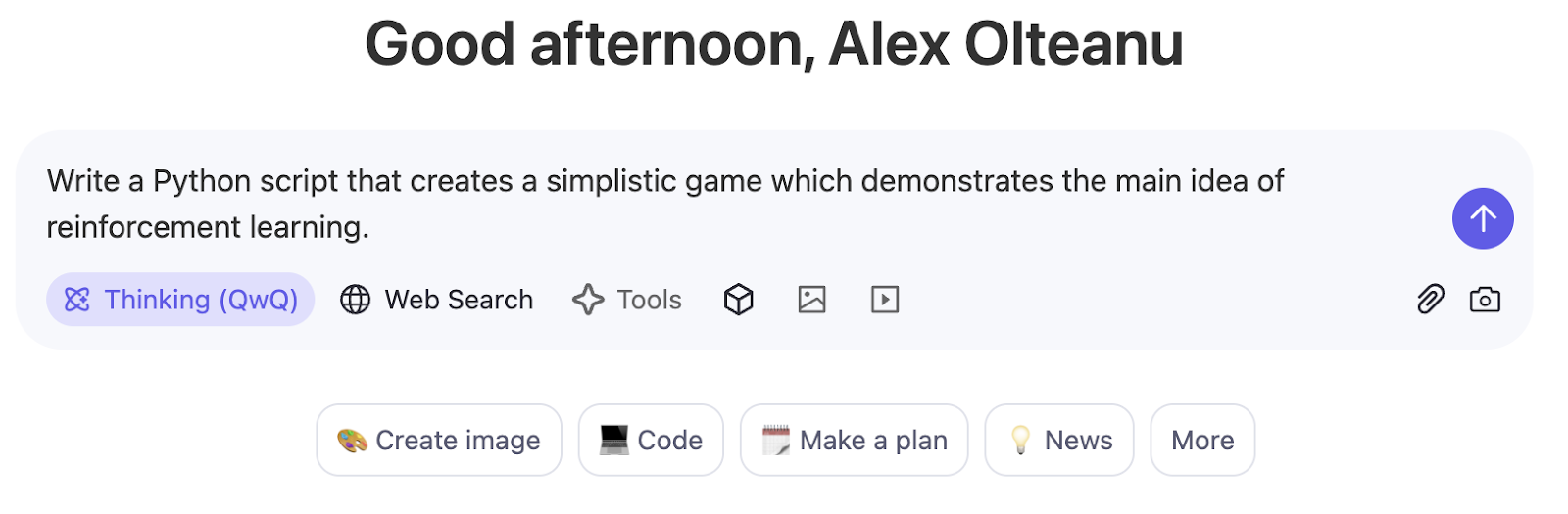

The Thinking (QwQ) mode is activated by default and can’t be turned off with this model. You can start prompting in the chat-based interface.

Download and deploy from Hugging Face and ModelScope

Developers looking to integrate QwQ-32B into their own workflows can download it from Hugging Face or ModelScope. These platforms provide access to the model weights, configurations, and inference tools, making it easier to deploy the model for research or production use.
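As a starting point, here is a minimal sketch of loading the model with the Hugging Face `transformers` library. It assumes the repository name `Qwen/QwQ-32B`, that `transformers`, `torch`, and `accelerate` are installed, and that enough GPU memory is available. The helper names `build_messages` and `generate_answer` are made up for illustration.

```python
from typing import Dict, List

MODEL_ID = "Qwen/QwQ-32B"  # assumed Hugging Face repository name

def build_messages(question: str) -> List[Dict[str, str]]:
    """Wrap a user question in the chat-message format."""
    return [{"role": "user", "content": question}]

def generate_answer(question: str, max_new_tokens: int = 2048) -> str:
    """Load the model and generate a response (needs substantial GPU memory)."""
    # Imported lazily so build_messages works without the heavy dependencies.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForCausalLM.from_pretrained(
        MODEL_ID, torch_dtype="auto", device_map="auto"
    )
    # Render the chat messages into the prompt string the model expects.
    text = tokenizer.apply_chat_template(
        build_messages(question), tokenize=False, add_generation_prompt=True
    )
    inputs = tokenizer([text], return_tensors="pt").to(model.device)
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # Drop the prompt tokens so only the newly generated text is decoded.
    new_tokens = output_ids[0][inputs.input_ids.shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)
```

Calling `generate_answer("How many prime numbers are there below 50?")` would download the weights on first use, so expect a long wait and a large download. Since reasoning models tend to produce long thinking traces, you may want a larger `max_new_tokens` than the default chosen here.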

Conclusion

QwQ-32B challenges the idea that only massive models can perform well in structured reasoning. Despite having far fewer parameters than DeepSeek-R1, it delivers strong results in math, coding, and multi-step problem-solving, showing that training techniques like reinforcement learning and long-context optimization can make a significant impact.

What stands out the most to me is its open-source availability. While many high-performing reasoning models remain locked behind proprietary APIs, QwQ-32B is accessible on Hugging Face, ModelScope, and Qwen Chat, making it easier for researchers and developers to test and build with.

FAQs

Is QwQ-32B free?

Yes, QwQ-32B is fully open-source, meaning you can access it for free through platforms like Hugging Face and ModelScope. However, running it locally or using it in production may require significant hardware resources, which could come with additional costs.

Can QwQ-32B be fine-tuned?

Yes, since QwQ-32B is open-weight, it can be fine-tuned for specific tasks. However, fine-tuning a model of this size requires powerful GPUs and a well-structured dataset. Some platforms, like Hugging Face, provide tools for parameter-efficient fine-tuning to reduce computational costs.

Can you run QwQ-32B locally?

Yes, but it depends on your hardware. Since QwQ-32B is a dense 32B model, it requires a high-end GPU setup, preferably multiple A100s or H100s, to run efficiently. For smaller setups, techniques like quantization can help reduce memory requirements, though with some trade-offs in performance.
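A quick back-of-the-envelope estimate shows why. The sketch below computes the memory needed for the weights alone at common precisions, using the rounded 32 billion parameter count from this article. It ignores activations and the attention cache, so real usage will be higher.

```python
PARAMS = 32e9  # parameter count as rounded in the article

# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"fp32": 4.0, "bf16/fp16": 2.0, "int8": 1.0, "int4": 0.5}

def weight_memory_gb(params: float, bytes_per_param: float) -> float:
    """Memory for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return params * bytes_per_param / 1e9

for precision, nbytes in BYTES_PER_PARAM.items():
    print(f"{precision:>10}: {weight_memory_gb(PARAMS, nbytes):.0f} GB")
```

At 16-bit precision the weights alone come to about 64 GB, while 4-bit quantization brings that down to about 16 GB.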

Can you use QwQ-32B via API?

Currently, there is no official API from Qwen for QwQ-32B, but third-party platforms may provide hosted API access. For direct use, you’ll need to download and run the model manually through Hugging Face or ModelScope.

Is QwQ-32B multimodal?

No, QwQ-32B is a text-only model focused on reasoning and problem-solving. Unlike models like GPT-4o, it does not process images, video, or audio.

I’m an editor and writer covering AI blogs, tutorials, and news, ensuring everything fits a strong content strategy and SEO best practices. I’ve written data science courses on Python, statistics, probability, and data visualization. I’ve also published an award-winning novel and spend my free time on screenwriting and film directing.