
Alibaba has just released Qwen2.5-Max, its most advanced AI model yet. This is not a reasoning model like DeepSeek R1 or OpenAI’s o1, meaning you can’t see its thinking process.

It’s better to think of Qwen2.5-Max as a generalist model and a competitor to GPT-4o, Claude 3.5 Sonnet, or DeepSeek V3.

In this blog, I’ll go over what Qwen2.5-Max is, how it was developed, how it stacks up against the competition, and how you can access it.


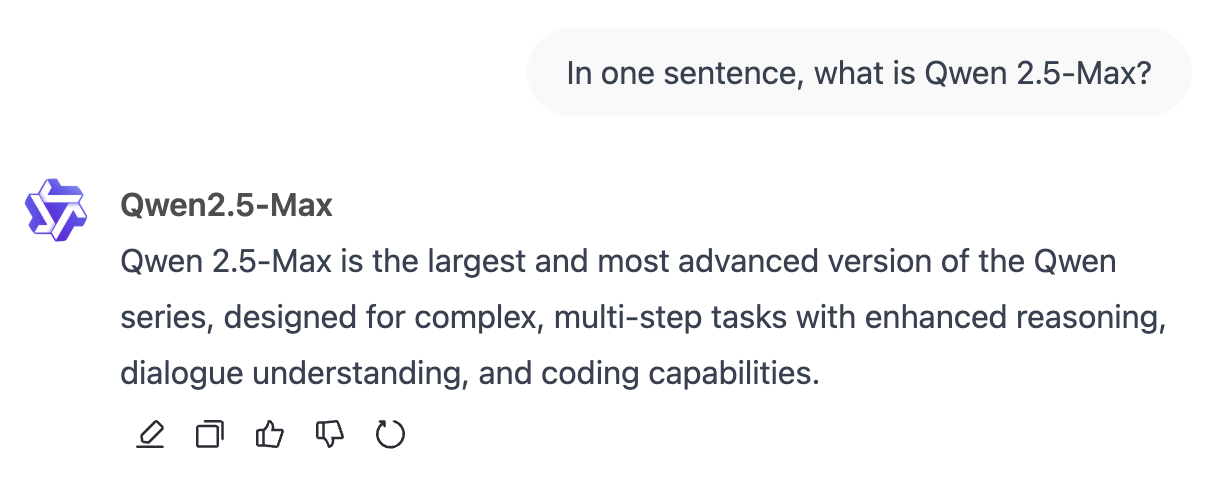

What Is Qwen2.5-Max?

Qwen2.5-Max is Alibaba’s most powerful AI model to date, designed to compete with top-tier models like GPT-4o, Claude 3.5 Sonnet, and DeepSeek V3.

Alibaba, one of China’s largest tech companies, is best known for its e-commerce platforms, but it has also built a strong presence in cloud computing and artificial intelligence. The Qwen series is part of its broader AI ecosystem, ranging from smaller open-weight models to large-scale proprietary systems.

Unlike some previous Qwen models, Qwen2.5-Max is not open-source, meaning its weights are not publicly available.

Trained on 20 trillion tokens, Qwen2.5-Max has a vast knowledge base and strong general AI capabilities. It is not a reasoning model like DeepSeek R1 or OpenAI’s o1, so it doesn’t explicitly show its thought process. Given Alibaba’s ongoing AI expansion, though, we may see a dedicated reasoning model in the future, possibly with Qwen 3.

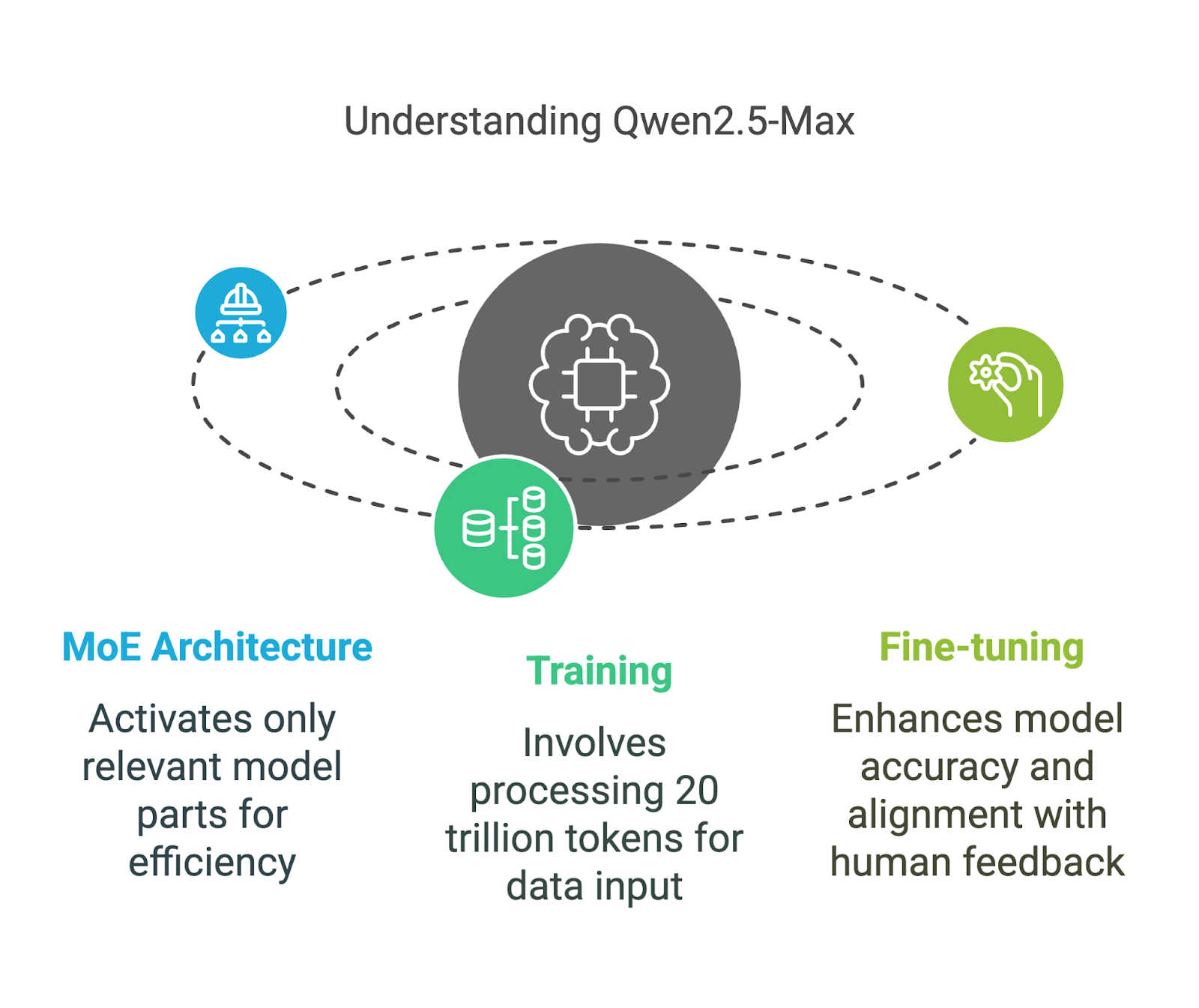

How Does Qwen2.5-Max Work?

Qwen2.5-Max uses a Mixture-of-Experts (MoE) architecture, a technique also employed by DeepSeek V3. This approach allows the model to scale up while keeping computational costs manageable. Let’s break down its key components in a way that’s easy to understand.

Mixture-of-Experts (MoE) architecture

Unlike traditional AI models that use all their parameters for every task, MoE models like Qwen2.5-Max and DeepSeek V3 activate only the most relevant parts of the model at any given time.

You can think of it like a team of specialists: if you ask a complex question about physics, only the experts in physics respond, while the rest of the team stays inactive. This selective activation means the model can handle large-scale processing more efficiently without requiring extreme amounts of computing power.

This method makes Qwen2.5-Max both powerful and scalable, allowing it to compete with models like GPT-4o and Claude 3.5 Sonnet while being more resource-efficient than a dense model of similar size. A dense model is one in which all parameters are activated for every input. (The architectures of GPT-4o and Claude 3.5 Sonnet have not been publicly disclosed.)
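To make the "team of specialists" idea concrete, here is a toy sketch of top-k expert routing in plain Python. It is only an illustration of the general MoE technique: the four experts, the gate scores, and the choice of `k=2` are made up for this example, and Alibaba has not published how Qwen2.5-Max's router actually works.

```python
import math

def softmax(scores):
    """Turn raw gate scores into probabilities that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route_top_k(gate_scores, k=2):
    """Pick the k highest-scoring experts and renormalize their weights."""
    probs = softmax(gate_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return {i: probs[i] / norm for i in top}

def moe_forward(x, experts, gate_scores, k=2):
    """Run only the selected experts and mix their outputs by gate weight."""
    weights = route_top_k(gate_scores, k)
    output = sum(w * experts[i](x) for i, w in weights.items())
    return output, sorted(weights)

# Four toy "experts"; in a real model each would be a neural network layer.
experts = [lambda x: x + 1, lambda x: 2 * x, lambda x: x * x, lambda x: -x]

# Hypothetical gate scores for one input; a real router computes these
# from the token's hidden representation.
gate_scores = [0.1, 2.0, 1.5, -1.0]

output, active = moe_forward(3.0, experts, gate_scores, k=2)
print("active experts:", active)
print("output:", round(output, 3))
```

Only two of the four experts run for this input, which is where the compute savings come from: the model's total parameter count can grow while the work done per token stays roughly constant.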

Training and fine-tuning

Qwen2.5-Max was trained on 20 trillion tokens, covering a vast range of topics, languages, and contexts.

To put 20 trillion tokens into perspective, that’s roughly 15 trillion words—an amount so vast it’s hard to grasp. For comparison, George Orwell’s 1984 contains about 89,000 words, meaning Qwen2.5-Max has been trained on the equivalent of 168 million copies of 1984.
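The arithmetic behind that comparison can be checked in a few lines of Python. The 0.75 words-per-token ratio is a rule of thumb implied by the figures above (20 trillion tokens is roughly 15 trillion words), not an exact property of Qwen's tokenizer.

```python
TOKENS = 20 * 10**12      # training tokens reported for Qwen2.5-Max
WORDS_PER_TOKEN = 0.75    # rough rule of thumb, an assumption
WORDS_IN_1984 = 89_000    # approximate word count of the novel

words = TOKENS * WORDS_PER_TOKEN
copies = words / WORDS_IN_1984

print(f"{words:.2e} words")
print(f"about {copies / 1e6:.1f} million copies of 1984")
```

The result lands a little above 168 million copies, which matches the figure quoted above.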

However, raw training data alone doesn’t guarantee a high-quality AI model, so Alibaba further refined it with:

- Supervised fine-tuning (SFT): Human annotators provided high-quality responses to guide the model in producing more accurate and useful outputs.

- Reinforcement learning from human feedback (RLHF): The model was trained to align its answers with human preferences, ensuring responses are more natural and context-aware.

Qwen2.5-Max Benchmarks

Qwen2.5-Max has been tested against other leading AI models to measure its capabilities across various tasks. These benchmarks evaluate both instruct models (which are fine-tuned for tasks like chat and coding) and base models (which serve as the raw foundation before fine-tuning). Understanding this distinction helps clarify what the numbers really mean.

Instruct model benchmarks

Instruct models are fine-tuned for real-world applications, including conversation, coding, and general knowledge tasks. Qwen2.5-Max is compared here to models like GPT-4o, Claude 3.5 Sonnet, Llama 3.1 405B, and DeepSeek V3.

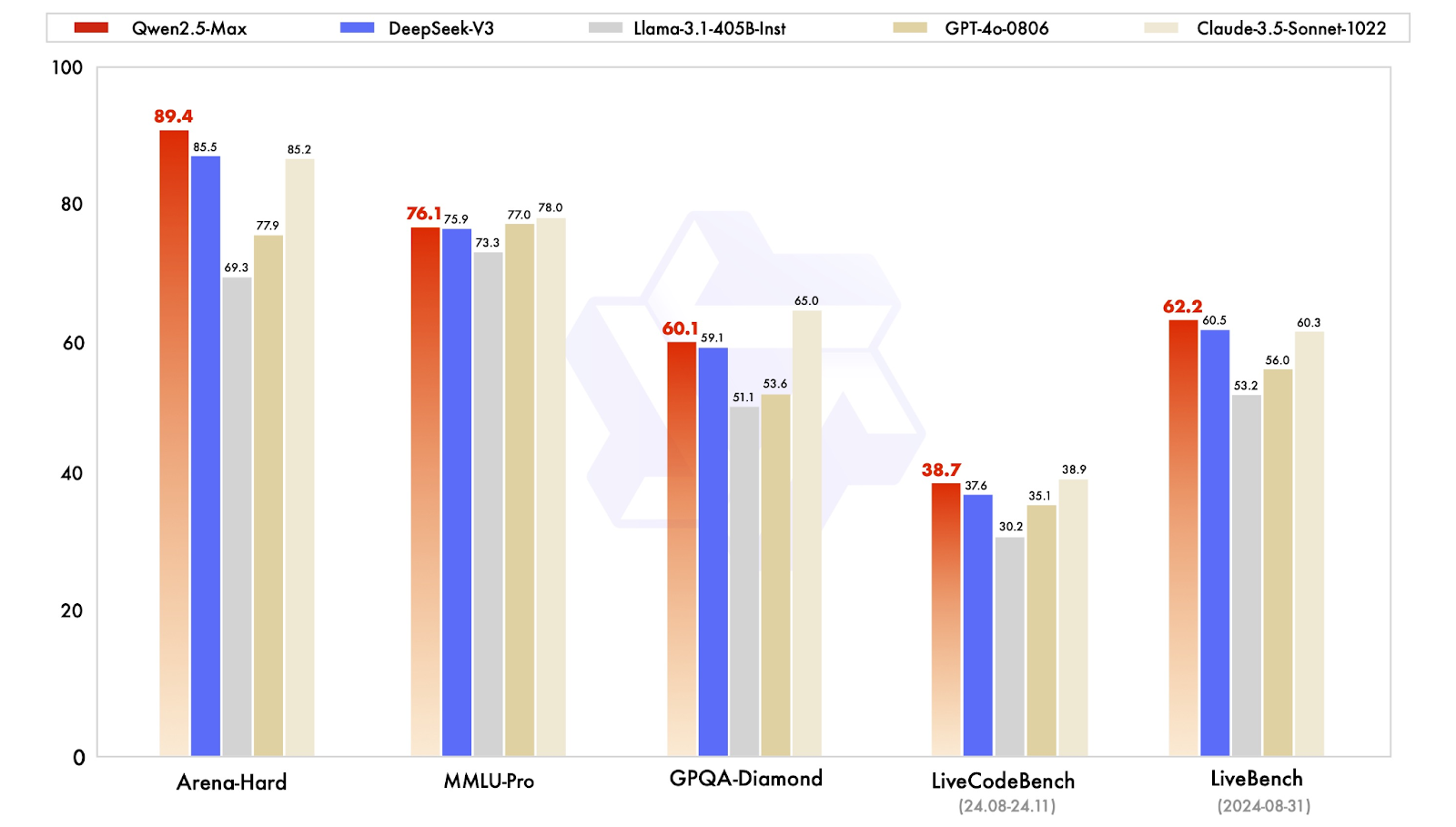

Comparison of the instruct models. Source: QwenLM

Let’s quickly break down the results:

- Arena-Hard (preference benchmark): Qwen2.5-Max scores 89.4, ahead of DeepSeek V3 (85.5) and Claude 3.5 Sonnet (85.2). This benchmark approximates human preference in AI-generated responses.

- MMLU-Pro (knowledge and reasoning): Qwen2.5-Max scores 76.1, slightly ahead of DeepSeek V3 (75.9) but behind both the leader, Claude 3.5 Sonnet (78.0), and the runner-up, GPT-4o (77.0).

- GPQA-Diamond (graduate-level science QA): Scoring 60.1, Qwen2.5-Max edges out DeepSeek V3 (59.1), while Claude 3.5 Sonnet leads at 65.0.

- LiveCodeBench (coding ability): At 38.7, Qwen2.5-Max is slightly ahead of DeepSeek V3 (37.6) and just behind Claude 3.5 Sonnet (38.9).

- LiveBench (overall capabilities): Qwen2.5-Max leads with a score of 62.2, surpassing DeepSeek V3 (60.5) and Claude 3.5 Sonnet (60.3), indicating broad competence in real-world AI tasks.

Overall, Qwen2.5-Max proves to be a well-rounded AI model, excelling in preference-based tasks and general AI capabilities while maintaining competitive knowledge and coding abilities.
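If you want to sanity-check these comparisons yourself, the short Python sketch below tabulates only the scores quoted in this section and reports the leader on each benchmark along with Qwen2.5-Max's gap to that leader. The helper names `leader` and `gap_to_leader` are just illustrative, and models whose scores aren't quoted above are left out.

```python
# Scores as quoted in this article (instruct models only).
scores = {
    "Arena-Hard": {"Qwen2.5-Max": 89.4, "DeepSeek V3": 85.5, "Claude 3.5 Sonnet": 85.2},
    "MMLU-Pro": {"Qwen2.5-Max": 76.1, "DeepSeek V3": 75.9,
                 "Claude 3.5 Sonnet": 78.0, "GPT-4o": 77.0},
    "GPQA-Diamond": {"Qwen2.5-Max": 60.1, "DeepSeek V3": 59.1, "Claude 3.5 Sonnet": 65.0},
    "LiveCodeBench": {"Qwen2.5-Max": 38.7, "DeepSeek V3": 37.6, "Claude 3.5 Sonnet": 38.9},
    "LiveBench": {"Qwen2.5-Max": 62.2, "DeepSeek V3": 60.5, "Claude 3.5 Sonnet": 60.3},
}

def leader(results):
    """Return the model with the highest score on one benchmark."""
    return max(results, key=results.get)

def gap_to_leader(results, model):
    """Points separating `model` from the top score (0.0 if it leads)."""
    return round(max(results.values()) - results[model], 1)

for name, results in scores.items():
    gap = gap_to_leader(results, "Qwen2.5-Max")
    print(f"{name}: leader = {leader(results)}, Qwen2.5-Max gap = {gap}")
```

Among the quoted scores, Qwen2.5-Max leads on Arena-Hard and LiveBench, and its gap on LiveCodeBench is only 0.2 points, which is small enough that the ranking there shouldn't be over-interpreted.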

Base model benchmarks

Since GPT-4o and Claude 3.5 Sonnet are proprietary models with no publicly available base versions, the comparison is limited to Qwen2.5-Max and open-weight models: DeepSeek V3, Llama 3.1-405B, and Qwen 2.5-72B. This provides a clearer picture of how Qwen2.5-Max stands against leading large-scale open models.

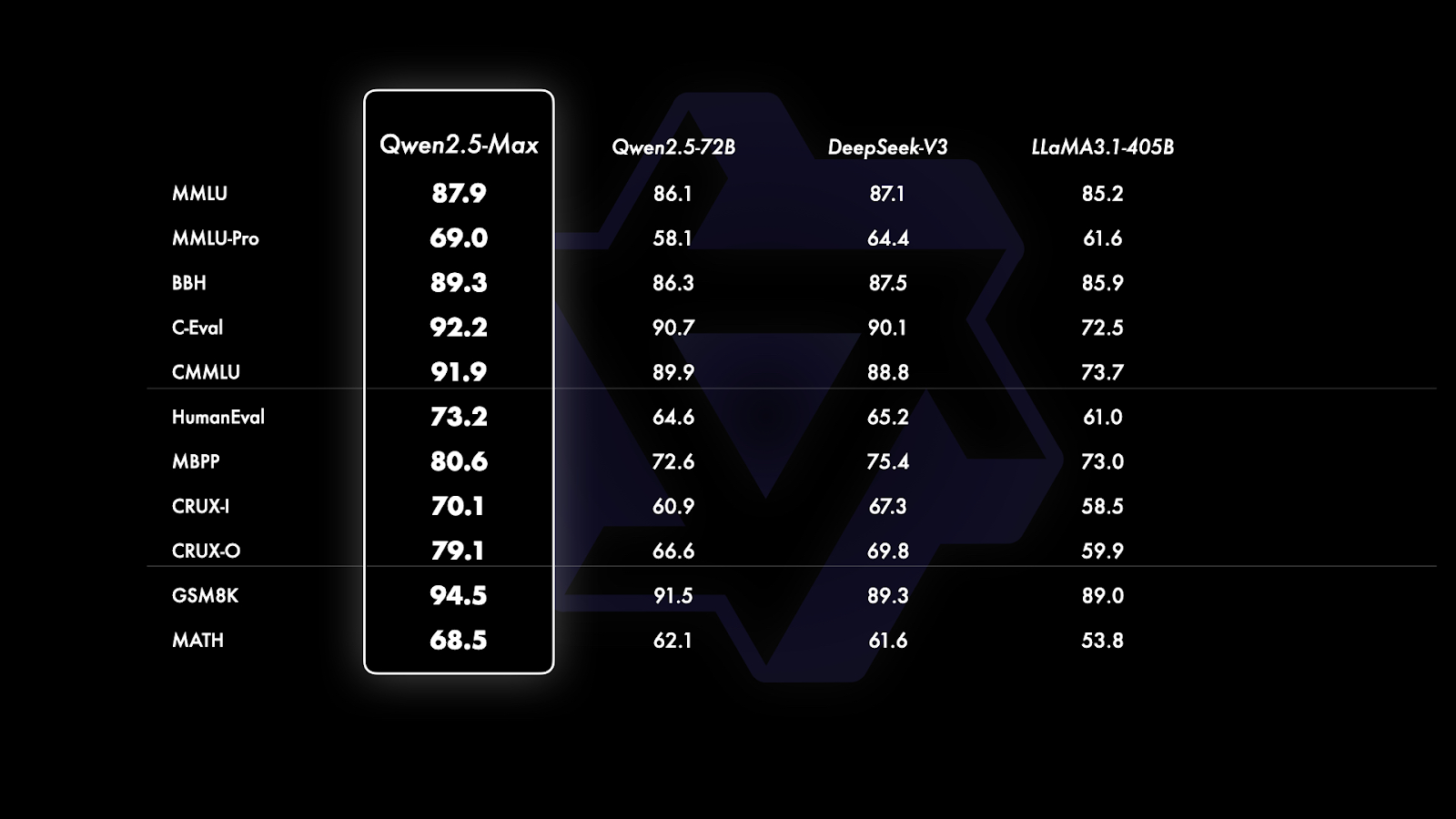

Comparison of the base models. Source: QwenLM

If you look closely at the graph above, it’s divided into three sections based on the type of benchmarks being evaluated:

- General knowledge and language understanding (MMLU, MMLU-Pro, BBH, C-Eval, CMMLU): Qwen2.5-Max leads across all benchmarks in this category, scoring 87.9 on MMLU and 92.2 on C-Eval, outperforming DeepSeek V3 and Llama 3.1-405B. These benchmarks focus on the breadth and depth of knowledge and the ability to apply that knowledge in a reasoning context.

- Coding and problem-solving (HumanEval, MBPP, CRUX-I, CRUX-O): Qwen2.5-Max also leads on every benchmark in this category, scoring 73.2 on HumanEval and 80.6 on MBPP, slightly ahead of DeepSeek V3 and significantly ahead of Llama 3.1-405B. These benchmarks measure coding skills, problem-solving, and the ability to follow instructions or generate solutions independently.

- Mathematical problem solving (GSM8K, MATH): Mathematical reasoning is one of Qwen2.5-Max’s strongest areas, achieving 94.5 on GSM8K, well ahead of DeepSeek V3 (89.3) and Llama 3.1-405B (89.0). However, on MATH, which focuses on more complex problem-solving, Qwen2.5-Max scores 68.5, slightly outperforming its competitors but leaving room for improvement.

How to Access Qwen2.5-Max

Accessing Qwen2.5-Max is straightforward, and you can try it for free without any complicated setup.

Qwen Chat

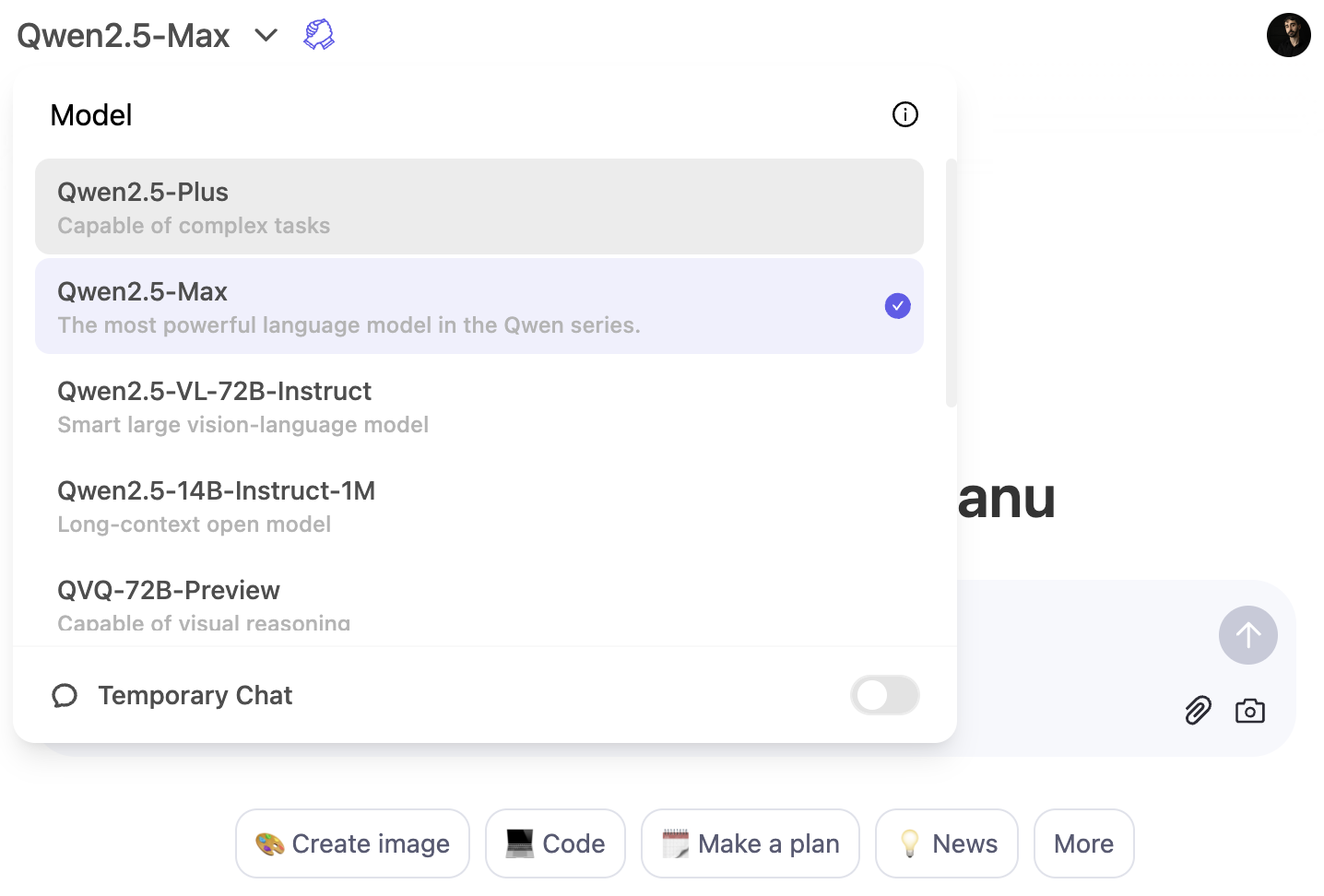

The quickest way to experience Qwen2.5-Max is through the Qwen Chat platform. This is a web-based interface that allows you to interact with the model directly in your browser—just like you’d use ChatGPT in your browser.

To use the Qwen2.5-Max model, click the model dropdown menu and select Qwen2.5-Max.

API access via Alibaba Cloud

For developers, Qwen2.5-Max is available through the Alibaba Cloud Model Studio API. To use it, you’ll need to sign up for an Alibaba Cloud account, activate the Model Studio service, and generate an API key.

Since the API follows OpenAI’s format, integration should be straightforward if you’re already familiar with OpenAI models. For detailed setup instructions, visit the official Qwen2.5-Max blog.
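As a rough idea of what an OpenAI-style request looks like, here is a minimal sketch that uses only Python's standard library. Treat the specifics as assumptions to verify against Alibaba Cloud's documentation: the base URL, the model identifier `qwen-max-2025-01-25`, and the `DASHSCOPE_API_KEY` environment variable name are my best understanding at the time of writing and may differ by region or change over time. The helper names `build_chat_request` and `ask` are just for illustration.

```python
import json
import os
import urllib.request

# Assumed values; check them against the official Model Studio documentation.
BASE_URL = "https://dashscope-intl.aliyuncs.com/compatible-mode/v1"
MODEL = "qwen-max-2025-01-25"

def build_chat_request(prompt, api_key, model=MODEL, base_url=BASE_URL):
    """Build an OpenAI-style chat completions request without sending it."""
    payload = {
        "model": model,
        "messages": [
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": prompt},
        ],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )

def ask(prompt, api_key):
    """Send the request and return the assistant's reply text."""
    request = build_chat_request(prompt, api_key)
    with urllib.request.urlopen(request, timeout=60) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]

if __name__ == "__main__":
    key = os.getenv("DASHSCOPE_API_KEY")  # assumed variable name
    if key:
        print(ask("Explain Mixture-of-Experts in one sentence.", key))
    else:
        print("Set DASHSCOPE_API_KEY to send a real request.")
```

If you already use the official `openai` Python client, the same idea should apply: point the client's `base_url` at the compatible endpoint and pass your Alibaba Cloud API key instead of an OpenAI key.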

Conclusion

Qwen2.5-Max is Alibaba’s most capable AI model yet, built to compete with top-tier models like GPT-4o, Claude 3.5 Sonnet, and DeepSeek V3.

Unlike some previous Qwen models, Qwen2.5-Max is not open-source, but it is available to test through Qwen Chat or via API access on Alibaba Cloud.

Given Alibaba’s continued investment in AI, it wouldn’t be surprising to see a reasoning-focused model in the future—possibly with Qwen 3.


FAQs

Can you set up Qwen2.5-Max locally?

No, Qwen2.5-Max is not available as an open-weight model, so you cannot run it on your own hardware. However, Alibaba provides access through Qwen Chat and the Alibaba Cloud API.

Can you fine-tune Qwen2.5-Max?

No, since the model is not open-source, Alibaba has not provided a way for users to fine-tune Qwen2.5-Max. However, they may offer fine-tuned variations in the future or allow limited customization through API settings.

Will Qwen2.5-Max be open-sourced in the future?

Alibaba has not indicated plans to release Qwen2.5-Max as an open-weight model, but given its history of releasing smaller open models, future iterations may include open-source versions.

Can Qwen2.5-Max generate images like DALL-E 3 or Janus-Pro?

No, Qwen2.5-Max is a text-based AI model focused on tasks like general knowledge, coding, and mathematical problem-solving. It does not support image generation.

I’m an editor and writer covering AI blogs, tutorials, and news, ensuring everything fits a strong content strategy and SEO best practices. I’ve written data science courses on Python, statistics, probability, and data visualization. I’ve also published an award-winning novel and spend my free time on screenwriting and film directing.