
I recently finished a contract with an AI company. Among other things, they help researchers post-train LLMs. As a PhD mathematician, I created math prompts that stumped frontier AI models. Trick prompts didn't count; the prompts had to expose reasoning errors.

During that work, I heard repeated references to "Humanity’s Last Exam." I learned that it was an AI benchmark designed to test reasoning in many academic fields. My curiosity led me to dig deeper into what HLE is and what it tells us about the current limits of AI reasoning.

If you’re new to AI and benchmarking, I recommend taking the AI Fundamentals skill track.

What Is “Humanity’s Last Exam”?

As LLMs have advanced, researchers have come to rely on collections of evaluation questions, known as benchmarks, to compare performance and track progress. Humanity's Last Exam (HLE) is a benchmark designed to measure an LLM's reasoning capabilities, not just its ability to pattern match. It aims to evaluate how well a model handles expert-level problems across many academic domains.

Why Do We Need a “Last Exam”?

With the many benchmarks already in existence, why yet another one? Benchmarks that once challenged LLMs, such as MMLU, are now saturated, with models often scoring above 90 percent. At this point, these benchmarks stop measuring meaningful differences between models.
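One way to see why saturation hides differences is to compare the gap between two models with the sampling noise of the benchmark itself. The sketch below uses the binomial standard error of an accuracy score. The question count and the accuracies are hypothetical, and the calculation assumes independent questions, so treat it as a rough illustration rather than a formal significance test.

```python
import math

def accuracy_std_error(accuracy: float, n_questions: int) -> float:
    """Binomial standard error of an accuracy measured on n_questions."""
    return math.sqrt(accuracy * (1.0 - accuracy) / n_questions)

def gap_in_std_errors(acc_a: float, acc_b: float, n_questions: int) -> float:
    """Gap between two accuracies, in units of their combined standard error."""
    combined = math.sqrt(
        accuracy_std_error(acc_a, n_questions) ** 2
        + accuracy_std_error(acc_b, n_questions) ** 2
    )
    return abs(acc_a - acc_b) / combined

# Hypothetical benchmark size and scores, chosen only for illustration.
N_QUESTIONS = 1000
saturated_gap = gap_in_std_errors(0.91, 0.92, N_QUESTIONS)
unsaturated_gap = gap_in_std_errors(0.10, 0.25, N_QUESTIONS)

print(f"Saturated benchmark gap:   {saturated_gap:.1f} standard errors")
print(f"Unsaturated benchmark gap: {unsaturated_gap:.1f} standard errors")
```

With these made-up numbers, the one-point gap near the top of the scale is smaller than the noise, while the gap on the harder benchmark stands well clear of it.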

HLE is a next-generation benchmark that raises the difficulty level by assembling expert-crafted questions that require multi-step reasoning, not merely recall or superficial pattern matching.

How Was HLE Developed?

In late 2024, the Center for AI Safety, a non-profit focused on AI safety, partnered with Scale AI, a data company, to develop a more difficult AI benchmark. Dan Hendrycks led the project.

The team crowdsourced graduate-level questions from multiple academic disciplines and offered significant prizes: $5,000 for each of the top 50 questions and $500 for each of the next 500.

The result was a large pool of expert-level questions across many subjects, such as mathematics, computer science, literature, music analysis, and history.

What’s Included in Humanity's Last Exam?

The HLE paper describes the benchmark as "...the final closed-ended benchmark for broad academic skills." Its questions require multi-step reasoning, which makes it hard for models to succeed by guessing or by reciting memorized answers.

HLE consists of 2,500 public questions and about 500 additional questions in a private holdout set.

Each question must be original, have a single correct answer, and resist a simple web search or database lookup. About 76% of the questions use the exact-match answer format and the remaining 24% use multiple choice. Roughly 14% of the questions are multimodal, involving both text and images.
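To make the two answer formats concrete, here is a minimal sketch of how each could be graded. The function names and the normalization rules are my own assumptions, not HLE's official grader, which may compare answers more leniently than a strict string match.

```python
import re

def normalize(text: str) -> str:
    """Lowercase, trim, collapse whitespace, and drop a trailing period."""
    collapsed = re.sub(r"\s+", " ", text.strip().lower())
    return collapsed.rstrip(".")

def grade_exact_match(model_answer: str, reference: str) -> bool:
    """Exact-match format: the normalized strings must be identical."""
    return normalize(model_answer) == normalize(reference)

def grade_multiple_choice(model_choice: str, correct_choice: str) -> bool:
    """Multiple-choice format: compare the selected option letters."""
    return model_choice.strip().upper() == correct_choice.strip().upper()

# Hypothetical answers, for illustration only.
print(grade_exact_match("  Euler's  Theorem. ", "euler's theorem"))
print(grade_multiple_choice(" b", "B"))
print(grade_exact_match("42", "43"))
```

Exact-match questions leave far less room for lucky guesses than multiple choice, which is one reason a benchmark might favor them.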

The HLE team had a stringent vetting process for the questions.

- To be accepted, questions had to stump a frontier LLM. About 70,000 questions fulfilled this criterion.

- Expert peer reviewers refined and filtered the total down to 13,000 questions.

- Organizers and expert reviewers manually approved 6,000 of these questions.

- Researchers split this pool of questions into a public set of 2,500 questions and a holdout set of approximately 500 questions.
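The funnel above is easier to appreciate as retention rates. This short sketch uses only the counts quoted in the list. The holdout size is approximate, so the final figures are rough, and the stage labels are my own shorthand.

```python
# Counts taken from the vetting steps described above; holdout is approximate.
stages = [
    ("stumped a frontier LLM", 70_000),
    ("kept after expert peer review", 13_000),
    ("manually approved", 6_000),
    ("final benchmark (public + holdout)", 2_500 + 500),
]

def retention_rates(stage_list):
    """Return (stage name, fraction kept from the previous stage) pairs."""
    rates = []
    for (_, previous_count), (name, count) in zip(stage_list, stage_list[1:]):
        rates.append((name, count / previous_count))
    return rates

rates = retention_rates(stages)
overall = stages[-1][1] / stages[0][1]

for name, rate in rates:
    print(f"{name}: {rate:.1%} kept")
print(f"overall: {overall:.1%} of the initial pool")
```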

Criticisms of Humanity’s Last Exam

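Early results showed that frontier models scored poorly on the questions, yet expressed high confidence in their answers. This gap between accuracy and stated confidence points to poor calibration: the models hallucinate answers rather than acknowledge uncertainty.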
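A common way to quantify a gap between confidence and accuracy is a binned calibration error. The sketch below computes a root-mean-square version in plain Python. The bin count and the sample data are hypothetical, and I am not claiming this matches the exact procedure used for HLE.

```python
import math

def rms_calibration_error(confidences, correct, n_bins=10):
    """Binned RMS calibration error.

    confidences: stated confidence per answer, each in [0, 1].
    correct: 1 if the corresponding answer was right, else 0.
    """
    bins = [[] for _ in range(n_bins)]
    for confidence, is_correct in zip(confidences, correct):
        index = min(int(confidence * n_bins), n_bins - 1)
        bins[index].append((confidence, is_correct))

    total = len(confidences)
    weighted_squared_error = 0.0
    for bucket in bins:
        if not bucket:
            continue
        mean_confidence = sum(c for c, _ in bucket) / len(bucket)
        accuracy = sum(k for _, k in bucket) / len(bucket)
        weight = len(bucket) / total
        weighted_squared_error += weight * (mean_confidence - accuracy) ** 2
    return math.sqrt(weighted_squared_error)

# Hypothetical model A: states 90% confidence but is right 1 time in 10.
overconfident = rms_calibration_error([0.9] * 10, [1] + [0] * 9)
# Hypothetical model B: states 10% confidence and is right 1 time in 10.
calibrated = rms_calibration_error([0.1] * 10, [1] + [0] * 9)

print(f"overconfident: {overconfident:.2f}, calibrated: {calibrated:.2f}")
```

Both hypothetical models have the same accuracy. Only the first one is badly calibrated, which is the pattern the early results describe.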

Independent groups also raised concerns. FutureHouse, a non-profit research lab, published a blog post titled "About 30% of Humanity’s Last Exam chemistry/biology answers are likely wrong."

Their analysis focused on the review protocol. Question writers asserted that their answers were correct, but reviewers were instructed to spend only five minutes checking each answer. FutureHouse argues that this process lets overly complex, contrived, or ambiguous answers slip through, and that these answers often conflict with the scientific literature.

HLE's maintainers responded to the post by commissioning a three-expert review of the disputed subset. As of September 16, 2025, they planned to announce a rolling review process for HLE.

The AI Benchmarks Landscape

HLE sits within a broader ecosystem of benchmarks that test different aspects of LLM capability.

Knowledge and reasoning

These benchmarks evaluate academic knowledge and reasoning.

- Massive Multitask Language Understanding (MMLU). MMLU tests zero-shot performance across fifty-seven different subjects.

- MMLU-Pro and MMLU-Pro+. Harder variants of MMLU with increased question complexity and a focus on higher-order reasoning.

- Graduate-Level Google-Proof Q&A (GPQA). A graduate-level STEM benchmark with 448 multiple-choice questions designed to resist a simple web search.

- Humanity's Last Exam (HLE). HLE uses expert-level curated problems that emphasize reasoning over recall.

Multimodal understanding

These benchmarks measure reasoning that involves both text and images.

- Massive Multi-discipline Multimodal Understanding and Reasoning (MMMU). About 11,500 multimodal, multi-disciplinary questions sourced from exams, quizzes, and textbooks.

- MMMU-Pro. Removes questions solvable by text-only models, adds more robust answer options, and introduces a vision-only setting in which the entire prompt is embedded within an image.

Software engineering and tool use

Still other benchmarks focus specifically on software engineering and tool use.

- SWE-bench. Built from real GitHub issues from twelve Python repositories. The model reads the codebase, interprets a description of the issue, and proposes a patch.

- SWE-bench Verified. An improved version of SWE-bench that fixes overly specific tests, clarifies vague issue descriptions, and stabilizes environment setup.

- SWE-bench-Live. A scalable, continuously updated version with 1,319 tasks across 93 repositories.
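To illustrate how a proposed patch might be scored in a SWE-bench-style evaluation, here is a minimal sketch. It assumes the common convention that a task is resolved only when the tests that previously failed now pass and the tests that previously passed still pass. The `TaskResult` record and its field names are hypothetical, not the benchmark's actual harness.

```python
from dataclasses import dataclass

@dataclass
class TaskResult:
    """Test outcomes after applying a model's patch (hypothetical record)."""
    task_id: str
    fail_to_pass: dict[str, bool]  # tests the fix should turn from fail to pass
    pass_to_pass: dict[str, bool]  # tests that must keep passing

def is_resolved(result: TaskResult) -> bool:
    """A task counts as resolved only if every tracked test passes."""
    return all(result.fail_to_pass.values()) and all(result.pass_to_pass.values())

def resolved_rate(results: list[TaskResult]) -> float:
    """Fraction of tasks whose patches resolved the issue."""
    if not results:
        return 0.0
    return sum(is_resolved(r) for r in results) / len(results)

# Hypothetical outcomes for two tasks.
results = [
    TaskResult("task-1", {"test_bug": True}, {"test_existing": True}),
    TaskResult("task-2", {"test_bug": True}, {"test_existing": False}),
]
print(f"resolved: {resolved_rate(results):.0%}")
```

The second task shows why regression tests matter: a patch that fixes the reported bug but breaks existing behavior does not count.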

Holistic, multi-metric evaluation frameworks

Stanford's Center for Research on Foundation Models (CRFM) developed Holistic Evaluation of Language Models (HELM) to support responsible AI evaluation.

HELM evaluates models on a battery of standardized scenarios, such as question answering, summarization, safety-critical queries, and social/ethical content. These scenarios are scored across multiple dimensions, not only accuracy, but also calibration, robustness, and toxicity.

HELM has grown into a family of related frameworks.

- HELM Capabilities. A general-purpose leaderboard for evaluating LMs.

- Audio. Evaluations for audio- and speech-related tasks.

- HELM Lite. A smaller, faster subset for quick comparisons.

- HELM Finance. HELM tailored to financial tasks.

- MedHELM. Evaluations for healthcare-related reasoning and safety.

Safety and dangerous-capability evaluations

Safety frameworks measure risk rather than intellectual competence.

- Model Evaluation & Threat Research (METR) is a nonprofit research organization that evaluates potentially dangerous capabilities, such as cyberattacks, attempts to avoid shutdown, or the ability to automate AI R&D. Its mission is to detect catastrophic risks early.

- Google DeepMind's Frontier Safety Framework. Defines critical capability levels (CCLs), monitors whether frontier models approach them, and implements mitigation plans when they do.

Where Do Current AI Models Score on AI Benchmarks?

Many public leaderboards track LLM performance across various metrics.

- Scale LLM Leaderboards. Includes leaderboards for agentic behavior, safety, and frontier performance across standardized benchmarks.

- LLM Stats. Covers image generation, video generation, text-to-speech, speech-to-text, and embeddings.

- Vellum LLM Leaderboard. Focuses on reasoning and coding.

- Artificial Analysis LLM Leaderboard. Ranks models on intelligence, price, speed, and latency.

- Hugging Face Open LLM Leaderboard. Includes detailed results for various models.

Leaderboards

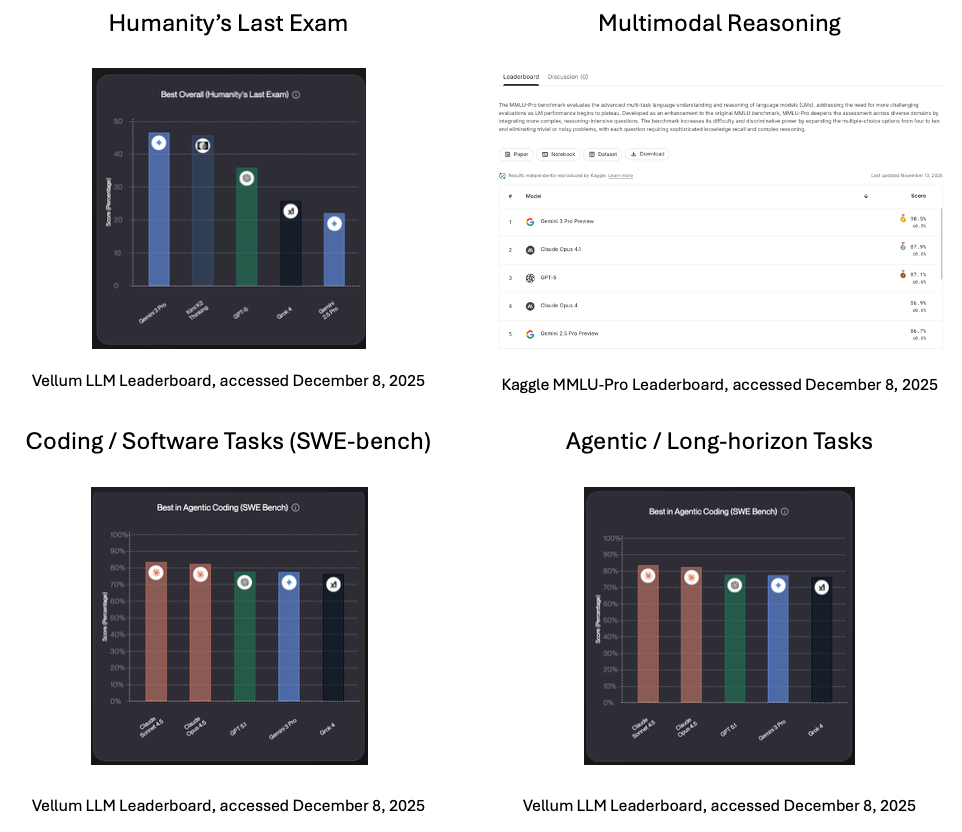

Here are some scores as of the time of this writing in December 2025.

- Humanity's Last Exam. According to the Vellum leaderboard, the current leader is Gemini 3 Pro, Google's flagship generative multimodal AI model.

- Multimodal Reasoning. Gemini 3 Pro, designed to unify modalities into a single system, also leads this category, according to the Kaggle MMLU-Pro leaderboard.

- Coding / Software Tasks (SWE-bench). Anthropic's Claude Sonnet 4.5, which prioritizes careful reasoning, takes the top spot in coding and real-world software tasks, according to the Vellum leaderboard.

- Agentic / Long-horizon Tasks. Claude Sonnet 4.5 also leads in agentic and long-horizon tasks, according to the Vellum leaderboard.

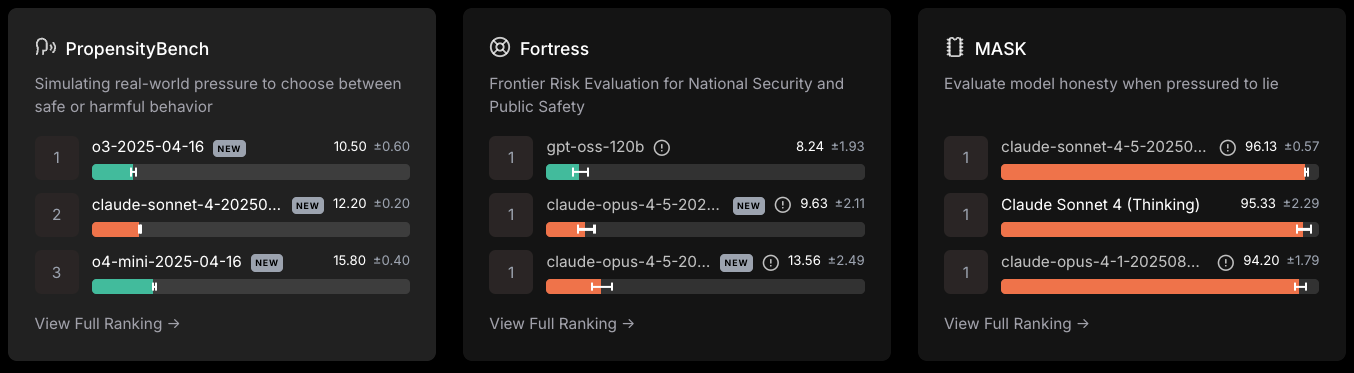

Safety/dangerous capabilities

Scale LLM Safety Leaderboard, accessed December 8, 2025

- OpenAI's o3-2025-04-16 led on PropensityBench, which measures whether a model chooses safe or harmful behavior.

- GPT-oss-120b led in the Frontier Risk Evaluation for National Security and Public Safety.

- Claude Sonnet 4.5 was the most resistant to pressure to lie.

How Humanity’s Last Exam Is Used

So far, I’ve outlined what HLE is and how it was developed. Let’s now look at how the test is used in practical terms.

For research teams

HLE provides a standardized evaluation method across domains. It highlights a model's strengths and weaknesses and reveals the gap between its performance and that of human experts. Teams can use these patterns to guide model development and targeted post-training.
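As a rough illustration of how a team might find weak domains, the sketch below groups graded answers by subject and ranks the subjects by accuracy. The record format and the sample results are hypothetical. A real analysis would use the benchmark's own subject labels and far more questions per subject.

```python
from collections import defaultdict

def accuracy_by_subject(records):
    """records: iterable of (subject, is_correct) pairs."""
    totals = defaultdict(int)
    correct = defaultdict(int)
    for subject, is_correct in records:
        totals[subject] += 1
        correct[subject] += int(is_correct)
    return {subject: correct[subject] / totals[subject] for subject in totals}

def weakest_subjects(records, k=2):
    """Return the k subjects with the lowest accuracy, weakest first."""
    scores = accuracy_by_subject(records)
    return sorted(scores, key=scores.get)[:k]

# Hypothetical graded answers, for illustration only.
records = [
    ("mathematics", True),
    ("mathematics", False),
    ("chemistry", False),
    ("chemistry", False),
    ("history", True),
]
print(accuracy_by_subject(records))
print(weakest_subjects(records))
```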

For policymakers

HLE provides a public, global metric of AI reasoning progress. It creates a shared reference point across countries and regulatory bodies and can anchor discussions about thresholds, oversight, and governance in reality, not hype.

Conclusion

AI benchmarks shape how we measure AI progress. As earlier benchmarks saturated, the need for a new benchmark focused on reasoning, not just recall or pattern matching, became apparent.

Humanity's Last Exam attempts to fill that gap by crowdsourcing graduate-level questions from experts all over the globe to expose the limitations of LLMs. It is not the final word, but it does clarify where AI stands today in relation to human expert reasoning.

For more information about LLMs and how they work, I recommend the following resources:

Humanity's Last Exam FAQs

What is Humanity's Last Exam (HLE)?

It is a benchmark designed to test an LLM's ability to reason using expert-level, closed-ended academic questions across many disciplines.

Why did researchers create HLE?

Earlier benchmarks became too easy for frontier models. HLE raises the difficulty so that researchers can measure improvements in reasoning.

What types of questions are in HLE?

HLE includes questions from a wide range of disciplines, including mathematics, computer science, history, and music analysis.

Who created HLE?

Dan Hendrycks, of the Center for AI Safety, led the team, which partnered with Scale AI.

How do researchers use HLE?

They compare models, track improvements over time, identify weak domains, and measure the gap between AI and human expertise.

Mark Pedigo, PhD, is a distinguished data scientist with expertise in healthcare data science, programming, and education. Holding a PhD in Mathematics, a B.S. in Computer Science, and a Professional Certificate in AI, Mark blends technical knowledge with practical problem-solving. His career includes roles in fraud detection, infant mortality prediction, and financial forecasting, along with contributions to NASA’s cost estimation software. As an educator, he has taught at DataCamp and Washington University in St. Louis and mentored junior programmers. In his free time, Mark enjoys Minnesota’s outdoors with his wife Mandy and dog Harley and plays jazz piano.