
The Bernoulli distribution is a fundamental concept in statistics and data science. Named after the Swiss mathematician Jacob Bernoulli, it is important in probability theory and serves as a building block for more complex statistical models, with applications ranging from predicting customer behavior to developing machine learning algorithms.

As we get started, consider our Introduction to Statistics course for a refresher on probability distributions. Also, consider our Foundations of Probability in Python course to study problems with two possible outcomes and practice with the scipy library. Our Statistical Thinking in Python (Part 1) course is another great option because it will teach you how to write your own Python functions to perform Bernoulli trials.

What is a Bernoulli Distribution?

A Bernoulli distribution is a discrete probability distribution that models a random variable with only two possible outcomes. These outcomes are typically labeled as "success" and "failure," or represented numerically as 1 and 0.

Bernoulli trials

Let’s start with Bernoulli trials. A Bernoulli trial is a random experiment with exactly two possible outcomes. Classic examples include:

- Flipping a coin (heads or tails)

- Answering a true/false question

- Determining if a customer will make a purchase (buy or not buy)

When Bernoulli trials are repeated, they are assumed to be independent, meaning the outcome of one trial does not affect the probability of success in subsequent trials, and that probability stays the same from trial to trial.
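To see what independence means in practice, here is a minimal pure-Python sketch. The function name `bernoulli_trial`, the value p = 0.3, and the seed are illustrative assumptions. It draws a long sequence of trials and compares the overall success rate with the success rate on trials that immediately follow a success; independence predicts the two should be about the same.

```python
import random

def bernoulli_trial(p, rng):
    """Return 1 (success) with probability p, else 0 (failure)."""
    return 1 if rng.random() < p else 0

rng = random.Random(42)   # fixed seed so the run is reproducible
p = 0.3                   # illustrative success probability
trials = [bernoulli_trial(p, rng) for _ in range(100_000)]

overall_rate = sum(trials) / len(trials)

# Success rate on the trial immediately following a success
after_success = [nxt for prev, nxt in zip(trials, trials[1:]) if prev == 1]
rate_after_success = sum(after_success) / len(after_success)

print(f"overall success rate:         {overall_rate:.3f}")
print(f"success rate after a success: {rate_after_success:.3f}")
```

If the trials were not independent (for example, if a success made the next success more likely), the two rates would differ noticeably.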

Bernoulli distributions

A Bernoulli distribution describes the probabilities of the two outcomes of a single Bernoulli trial. It is characterized by a single parameter, p, which represents the probability of success; the probability of failure is consequently 1 - p. Mathematically, we can express a Bernoulli distribution as follows, where X is the random variable representing the outcome of the Bernoulli trial.

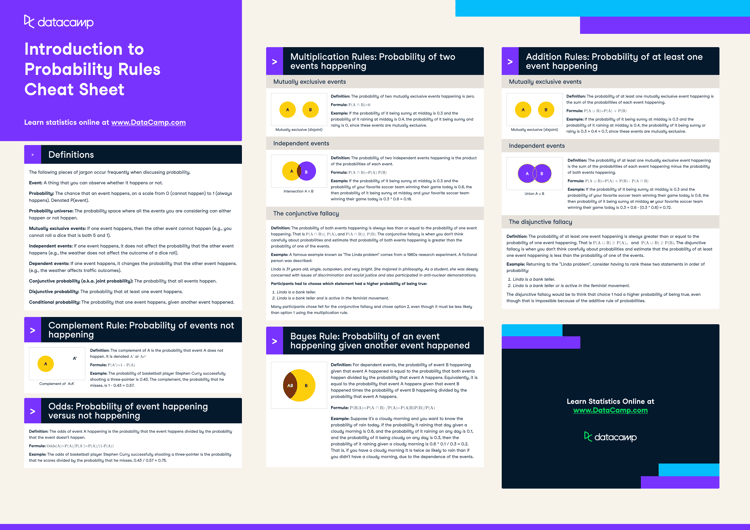

To illustrate how Bernoulli distributions change with different probabilities of success, let’s compare three examples.

Comparison of Bernoulli distributions for different values of p. Image by Author.

Here, each graph shows a different Bernoulli distribution.

- Left: p = 0.3 (30% chance of success)

- Center: p = 0.5 (50% chance of success)

- Right: p = 0.7 (70% chance of success)

As we can see, the probability mass function of a Bernoulli distribution always has two bars: one for the probability of failure (X = 0) and one for the probability of success (X = 1). The height of these bars changes based on the value of p, but they always sum to 1.
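The bar heights in each panel follow from the probability mass function P(X = x) = p^x (1 - p)^(1 - x) for x in {0, 1}, which gives 1 - p at x = 0 and p at x = 1. A small sketch that computes the same values for the three panels (the function name `bernoulli_pmf` is our own, not a library function):

```python
def bernoulli_pmf(x, p):
    """P(X = x) for a Bernoulli(p) variable; zero for any x outside {0, 1}."""
    if x not in (0, 1):
        return 0.0
    return p**x * (1 - p) ** (1 - x)

for p in (0.3, 0.5, 0.7):
    failure, success = bernoulli_pmf(0, p), bernoulli_pmf(1, p)
    print(f"p = {p}: P(X=0) = {failure:.1f}, P(X=1) = {success:.1f}, sum = {failure + success:.1f}")
```

If SciPy is installed, `scipy.stats.bernoulli.pmf(x, p)` returns the same probabilities.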

Properties of Bernoulli Distributions

Let's explore the key characteristics that define the Bernoulli distribution.

Binary outcomes

The most distinctive feature of a Bernoulli distribution is its binary nature. Each trial can result in only one of two possible outcomes:

- Success (usually denoted as 1)

- Failure (usually denoted as 0)

This binary property makes Bernoulli distributions particularly useful when we're interested in yes/no, true/false, or success/failure outcomes. Here are some key advantages of using Bernoulli distributions in data science and machine learning:

- Simplicity in Modeling: Binary outcomes allow for straightforward modeling of probabilities and odds.

- Ease of Interpretation: Results are often easily interpretable as probabilities or proportions.

- Versatility: Many complex scenarios can be broken down into a series of binary decisions or outcomes.

- Foundation for More Complex Distributions: Bernoulli trials form the basis for other important distributions, like the binomial distribution, which counts successes across multiple Bernoulli trials, and the geometric distribution, which tracks the number of Bernoulli trials until the first success.

Mean and variance

The mean (μ) and variance (σ²) of a Bernoulli distribution are directly related to the probability of success, p.

Mean

The mean is equal to the probability of success: μ = E[X] = 1 × p + 0 × (1 - p) = p. This implies that if you were to repeat a Bernoulli trial many times, the average outcome (the proportion of successes) would converge to p.
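A quick simulation illustrates this convergence (the law of large numbers). The sketch below assumes p = 0.7 and an arbitrary seed; the running proportion of successes moves closer to p as the number of trials grows.

```python
import random

rng = random.Random(0)
p = 0.7  # illustrative success probability

successes = 0
checkpoints = {10, 100, 1_000, 10_000, 100_000}
running_means = {}
for n in range(1, 100_001):
    successes += 1 if rng.random() < p else 0   # one Bernoulli(p) trial
    if n in checkpoints:
        running_means[n] = successes / n        # proportion of successes so far

for n, mean in running_means.items():
    print(f"after {n:>7,} trials: mean = {mean:.4f}")
```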

Variance

The variance is given by σ² = p(1 - p). Because X takes only the values 0 and 1, X² = X, so E[X²] = p and σ² = E[X²] - μ² = p - p² = p(1 - p). The variance reaches its maximum of 0.25 when p = 0.5 and decreases toward 0 as p approaches 0 or 1. This relationship provides insight into the spread of the data and the uncertainty associated with the outcomes. In terms of data spread, the variance in a Bernoulli distribution signifies:

- Predictability: A low variance (when p is close to 0 or 1) indicates more predictable outcomes, while a high variance (when p is close to 0.5) suggests more uncertainty.

- Informational Content: The closer p is to 0.5, the less predictable each outcome is, so each trial carries more information on average (its entropy is highest at p = 0.5).

- Sampling Considerations: The variance informs sample size decisions in experimental design. Higher variance typically requires larger sample sizes to achieve the same level of precision in estimates.

- Risk Assessment: In applications like finance or insurance, the variance can be interpreted as a measure of risk or volatility.
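The variance formula and its peak at p = 0.5 are easy to check numerically. The second part of this sketch uses the standard normal-approximation sample-size formula for estimating a proportion, n = z² p(1 - p) / E², where E is the desired margin of error and z ≈ 1.96 for 95% confidence; the margin of 0.03 and the p values are illustrative assumptions. It shows how a larger p(1 - p) translates directly into a larger required sample.

```python
import math

def bernoulli_variance(p):
    """Variance of a Bernoulli(p) variable: p * (1 - p)."""
    return p * (1 - p)

# Scan p from 0 to 1 in steps of 0.01 and find where the variance is largest
grid = [i / 100 for i in range(101)]
p_max = max(grid, key=bernoulli_variance)
print(f"variance is largest at p = {p_max} (σ² = {bernoulli_variance(p_max)})")

def required_sample_size(p, margin, z=1.96):
    """Normal-approximation sample size for estimating a proportion to within ±margin."""
    return math.ceil(z**2 * bernoulli_variance(p) / margin**2)

for p in (0.5, 0.2, 0.05):
    print(f"p = {p}: σ² = {bernoulli_variance(p):.4f}, n ≈ {required_sample_size(p, margin=0.03)}")
```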

Symmetry and asymmetry

The shape of a Bernoulli distribution depends on the value of p:

- When p = 0.5, the distribution is symmetric. One example is a fair coin toss, where the probabilities of success and failure are equal.

- When p ≠ 0.5, the distribution becomes asymmetric. As p approaches 0 or 1, the asymmetry increases.
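One standard way to quantify this asymmetry is the skewness, which for a Bernoulli(p) variable is (1 - 2p) / √(p(1 - p)). It is zero at p = 0.5, positive when p < 0.5 (the rare success forms the tail), negative when p > 0.5, and grows in magnitude as p approaches 0 or 1. A short sketch (the function name is our own):

```python
import math

def bernoulli_skewness(p):
    """Skewness of a Bernoulli(p) variable, defined for 0 < p < 1."""
    return (1 - 2 * p) / math.sqrt(p * (1 - p))

for p in (0.5, 0.3, 0.7, 0.1, 0.01):
    print(f"p = {p:<4}: skewness = {bernoulli_skewness(p):+.3f}")
```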

The symmetry or asymmetry of the distribution affects interpretation in several ways:

- Expectation Setting: In a symmetric distribution, neither outcome is more likely, which can inform decision-making processes where no bias toward one outcome exists, such as in a fair game.

- Bias Detection: Asymmetry in observed outcomes when symmetry is expected can indicate bias in the process or measurement.

- Threshold Determination: In classification tasks, the balance between the two outcomes can inform the choice of classification threshold; when one outcome is rare, the default cutoff of 0.5 on predicted probabilities is often not the best choice.

- Model Selection: The degree of asymmetry can influence the choice of statistical models or machine learning algorithms.

- Sampling Strategies: In highly asymmetric cases (p very close to 0 or 1), special sampling techniques might be needed to ensure rare events are adequately represented in the data.

Practical Applications of Bernoulli Distributions

Bernoulli distributions find widespread use in various fields, particularly in data science and statistics. Let's explore some of the most common applications:

Binary classification in machine learning

In machine learning, Bernoulli distributions play a central role in binary classification problems. These are scenarios where we need to categorize data into one of two classes. Examples include:

- Spam detection in emails (spam or not spam)

- Fraud detection in financial transactions (fraudulent or legitimate)

- Disease diagnosis based on symptoms (present or absent)

Algorithms that leverage Bernoulli distributions for binary classification include:

- Logistic Regression: Logistic regression assumes that the binary outcome follows a Bernoulli distribution. It models the log-odds (the logit) of the success probability as a linear function of the features, then applies the logistic function, the inverse of the logit, to map that linear score back to a probability between 0 and 1. The resulting output can be interpreted as the Bernoulli parameter p for each observation. Read our Understanding Logistic Regression in Python and Logistic Regression in R tutorials to learn the specifics.

- Bernoulli Naive Bayes: This variant of the Naive Bayes algorithm models each binary feature as a Bernoulli variable conditioned on the class, which makes it particularly suited for document classification tasks where features indicate word presence or absence.
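To make the logistic regression connection concrete, here is a minimal pure-Python sketch, not a full training routine: a linear score is passed through the logistic (sigmoid) function to obtain p, and the fit of those probabilities to observed 0/1 labels is measured by the Bernoulli log-likelihood, whose negative average is the log loss (binary cross-entropy). The weight, bias, and data points are hypothetical values chosen for illustration.

```python
import math

def sigmoid(z):
    """Logistic function: maps any real score to a probability in (0, 1)."""
    return 1 / (1 + math.exp(-z))

def bernoulli_log_likelihood(y, p):
    """log P(Y = y) for a Bernoulli(p) outcome y in {0, 1}."""
    return y * math.log(p) + (1 - y) * math.log(1 - p)

# Hypothetical one-feature model: score = w * x + b
w, b = 1.5, -0.5
xs = [-2.0, -0.5, 0.3, 1.0, 2.5]
ys = [0, 0, 1, 1, 1]

probs = [sigmoid(w * x + b) for x in xs]   # predicted Bernoulli parameter per observation
log_loss = -sum(bernoulli_log_likelihood(y, p) for y, p in zip(ys, probs)) / len(ys)

for x, y, p in zip(xs, ys, probs):
    print(f"x = {x:+.1f}  predicted p = {p:.3f}  observed y = {y}")
print(f"average log loss: {log_loss:.3f}")
```

Fitting logistic regression amounts to choosing w and b that minimize this average log loss, which is the same as maximizing the Bernoulli likelihood of the observed labels.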


Hypothesis testing

Bernoulli distributions are fundamental in hypothesis testing, particularly when dealing with proportions or success rates. Common applications include:

- A/B testing in Marketing: Comparing the success rates of two different marketing strategies. Learn more about A/B testing with our Customer Analytics and A/B Testing in Python course and also our A/B Testing in R code-along.

- Quality Control: Testing whether the defect rate in a manufacturing process exceeds a certain threshold.

- Medical trials: Assessing the efficacy of a new treatment compared to a placebo.

In these scenarios, the null hypothesis often assumes a specific value for the probability of success p, and the alternative hypothesis challenges this assumption based on observed data.
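As a sketch of the quality-control case, an exact one-sided test can be built directly from the Bernoulli model: under the null hypothesis each item is defective with probability p0, so the number of defects among n independent items is binomial, and the p-value is the probability of seeing at least as many defects as observed. The batch size, defect count, and 2% threshold below are hypothetical.

```python
from math import comb

def one_sided_binomial_p_value(k, n, p0):
    """P(X >= k) when X counts successes in n independent Bernoulli(p0) trials."""
    return sum(comb(n, i) * p0**i * (1 - p0) ** (n - i) for i in range(k, n + 1))

# Hypothetical batch: 200 inspected items, 9 defects, threshold defect rate 2%
n, k, p0 = 200, 9, 0.02
p_value = one_sided_binomial_p_value(k, n, p0)
print(f"observed rate = {k / n:.3f}, p-value = {p_value:.4f}")
print("reject H0 at the 5% level" if p_value < 0.05 else "insufficient evidence at the 5% level")
```

In recent SciPy versions, `scipy.stats.binomtest(k, n, p0, alternative="greater")` performs the same exact test.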

Simulation and modeling

Bernoulli distributions are handy in simulations and probabilistic modeling, especially for scenarios with binary outcomes. They are used in:

- Monte Carlo Simulations: Modeling complex systems with many binary decision points. Learn more with our Introduction to Monte Carlo Methods tutorial.

- Risk Analysis: Assessing the probability of success or failure in business scenarios.

- Population Genetics: Modeling the inheritance of traits in genetic studies.

By generating random samples from a Bernoulli distribution, researchers and data scientists can create realistic simulations of complex systems and processes.
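Here is a small Monte Carlo sketch in that spirit: a hypothetical project succeeds only if three independent stages all succeed, each modeled as a Bernoulli trial with its own made-up probability. Simulating many runs estimates the overall success probability, which in this simple case can be checked against the exact product of the stage probabilities.

```python
import math
import random

stage_probs = [0.9, 0.8, 0.6]   # hypothetical per-stage success probabilities
rng = random.Random(123)
n_runs = 100_000

def run_project(rng):
    """One simulated run: succeeds only if every stage's Bernoulli trial succeeds."""
    return all(rng.random() < p for p in stage_probs)

estimate = sum(run_project(rng) for _ in range(n_runs)) / n_runs
exact = math.prod(stage_probs)
print(f"Monte Carlo estimate: {estimate:.4f}, exact: {exact:.4f}")
```

Simulation earns its keep when the exact answer is hard to derive, for example when stages depend on one another or the project can recover from some failures.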

Performance Considerations

When working with Bernoulli distributions in data analysis and machine learning, there are several performance considerations to keep in mind:

Handling imbalanced data

In many real-world scenarios, the probability of success p in a Bernoulli distribution is not 0.5, leading to imbalanced datasets. This imbalance can pose challenges for machine learning algorithms, potentially biasing them towards the majority class. Strategies to address this include:

- Oversampling the Minority Class: Techniques like SMOTE (Synthetic Minority Oversampling Technique) can be used to generate synthetic samples of the minority class.

- Undersampling the Majority Class: Randomly removing instances from the majority class to balance the dataset.

- Adjusting Class Weights: Giving more importance to the minority class during model training.

- Using Ensemble Methods: Techniques like Random Forests or Gradient Boosting can often handle imbalanced data better than single models. Read our What is Boosting tutorial to learn all about boosting and ensemble methods more generally.

- Choosing Appropriate Evaluation Metrics: Accuracy alone can be misleading for imbalanced datasets. Consider metrics like F1-score, precision, recall, or area under the ROC curve.
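A small worked example shows why accuracy alone misleads. Assume a hypothetical label set where only 5% of cases are positive (p = 0.05) and a trivial model that always predicts the majority class. The sketch also computes inverse-frequency class weights with n_samples / (n_classes × class_count), the same heuristic scikit-learn uses for `class_weight="balanced"`.

```python
from collections import Counter

# Hypothetical imbalanced labels: 50 positives out of 1,000 (p = 0.05)
y_true = [1] * 50 + [0] * 950
y_pred = [0] * len(y_true)   # a trivial model that always predicts the majority class

pairs = list(zip(y_true, y_pred))
tp = sum(1 for t, pr in pairs if t == 1 and pr == 1)
fp = sum(1 for t, pr in pairs if t == 0 and pr == 1)
fn = sum(1 for t, pr in pairs if t == 1 and pr == 0)

accuracy = sum(t == pr for t, pr in pairs) / len(pairs)
# Precision is undefined when nothing is predicted positive; report 0.0 in that case
precision = tp / (tp + fp) if tp + fp else 0.0
recall = tp / (tp + fn) if tp + fn else 0.0
f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
print(f"accuracy={accuracy:.2f} precision={precision:.2f} recall={recall:.2f} F1={f1:.2f}")

# Inverse-frequency class weights: n_samples / (n_classes * count_of_class)
counts = Counter(y_true)
class_weights = {c: len(y_true) / (len(counts) * k) for c, k in counts.items()}
print(class_weights)
```

The model scores 95% accuracy while finding none of the positives, which recall and F1 expose immediately.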

Computational efficiency

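Computations involving Bernoulli distributions are cheap: sampling an outcome, evaluating the probability mass function, or estimating p requires only simple arithmetic per observation, which keeps them efficient even for large datasets in binary classification tasks. However, there are still considerations to keep in mind: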

- Vectorization: When implementing Bernoulli-based algorithms, use vectorized operations (e.g., NumPy in Python) for improved performance.

- Sparsity: In text classification tasks using the Bernoulli Naive Bayes classifier, leveraging sparse matrix representations can significantly reduce memory usage and computation time.

- Trade-offs in Model Complexity: While more complex models might offer slightly better performance, the computational cost may not always justify the marginal improvement. Consider the balance between model accuracy and processing time.
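Vectorization and sparsity come together in Bernoulli Naive Bayes. The sketch below assumes NumPy is installed, that X is a dense 0/1 NumPy array, and Laplace smoothing with alpha = 1; the function names and toy data are hypothetical. It fits per-class word probabilities and scores documents without a Python loop over words. Rewriting the per-word log-likelihood Σ [x log θ + (1 - x) log(1 - θ)] as one matrix product with X is what makes it vectorized, and it is also why sparse representations help: only the nonzero entries of X contribute to that product.

```python
import numpy as np

def fit_bernoulli_nb(X, y, alpha=1.0):
    """Fit Bernoulli Naive Bayes on a dense 0/1 matrix X (documents x words)."""
    classes = np.unique(y)
    masks = (y[None, :] == classes[:, None]).astype(float)   # (n_classes, n_docs)
    class_counts = masks.sum(axis=1)                          # documents per class
    word_counts = masks @ X                                   # docs per class containing each word
    # Laplace-smoothed P(word present | class)
    theta = (word_counts + alpha) / (class_counts[:, None] + 2 * alpha)
    log_prior = np.log(class_counts / class_counts.sum())
    return classes, log_prior, theta

def predict_bernoulli_nb(X, classes, log_prior, theta):
    """Pick the class with the highest joint log-probability for each row of X."""
    log_p, log_not_p = np.log(theta), np.log1p(-theta)
    # sum_j [x_j log θ_j + (1 - x_j) log(1 - θ_j)] rewritten as one matrix product
    scores = X @ (log_p - log_not_p).T + log_not_p.sum(axis=1) + log_prior
    return classes[np.argmax(scores, axis=1)]

# Hypothetical toy data: 4 documents, 3 vocabulary words, labels 1 = spam, 0 = not spam
X_train = np.array([[1, 1, 0], [1, 0, 0], [0, 0, 1], [0, 1, 1]])
y_train = np.array([1, 1, 0, 0])
model = fit_bernoulli_nb(X_train, y_train)
predictions = predict_bernoulli_nb(np.array([[1, 0, 0], [0, 0, 1]]), *model)
print(predictions)
```

For real workloads, scikit-learn's `sklearn.naive_bayes.BernoulliNB` implements this classifier and accepts sparse input.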

Common Misconceptions

To fully grasp Bernoulli distributions, it's important to address some common misconceptions:

Misinterpreting the probability of success

One frequent misconception is assuming that the probability of success p in a Bernoulli distribution is always 0.5. In reality, p can take any value between 0 and 1, inclusive. The value of p depends on the specific scenario or process being modeled.

For example:

- In a fair coin toss, p = 0.5.

- In a loaded die where rolling a 6 is considered success, p might be 1/4.

- In a quality control process where the defect rate is 1%, p would be 0.01 for a "defect" outcome.

Accurately estimating p from data is crucial for proper statistical inference and modeling. This often involves collecting a representative sample and calculating the proportion of successes.
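As a minimal sketch of that estimate, assume a hypothetical sample of 1,000 inspected items with 12 defects. The point estimate is the sample proportion, and its standard error, √(p̂(1 - p̂)/n), gives a rough sense of precision; the normal-approximation interval built from it is only a rough guide when p is close to 0 or 1 and n is small.

```python
import math

# Hypothetical sample: 1,000 inspected items, 12 defects ("success" = defect)
n, defects = 1_000, 12

p_hat = defects / n                                  # estimated defect probability
std_error = math.sqrt(p_hat * (1 - p_hat) / n)       # standard error of the proportion
low, high = p_hat - 1.96 * std_error, p_hat + 1.96 * std_error   # approximate 95% interval

print(f"p̂ = {p_hat:.3f}, SE = {std_error:.4f}, approx. 95% CI = ({low:.4f}, {high:.4f})")
```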

Bernoulli vs. binomial distribution

Bernoulli distributions are sometimes confused with binomial distributions. While related, they are distinct:

- A Bernoulli distribution models a single trial with two possible outcomes.

- A binomial distribution models the number of successes in a fixed number of independent Bernoulli trials.

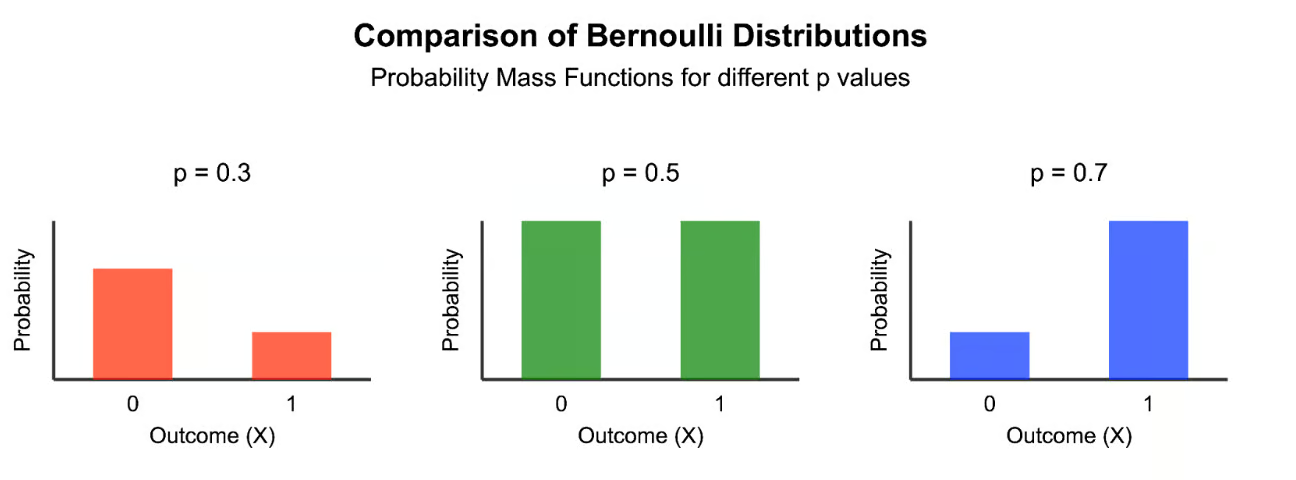

In other words, a binomial random variable is the sum of n independent Bernoulli random variables with the same probability of success p. For instance, if you flip a coin 10 times and count the number of heads, that count follows a binomial distribution, while each individual flip follows a Bernoulli distribution. Let's compare them visually:

Bernoulli distribution versus binomial distribution. Image by Author.

This comparison clearly shows the fundamental difference between these two distributions:

- The Bernoulli distribution (left) represents a single trial with only two possible outcomes: 0 (failure) or 1 (success). In this example, with p = 0.3, we see a 70% chance of failure and a 30% chance of success.

- The binomial distribution (right) represents the number of successes in multiple trials (in this case, 10 trials). It shows the probability of getting each possible number of successes, from 0 to 10. The shape of this distribution is determined by both n (the number of trials) and p (the probability of success in each trial).

While a Bernoulli distribution always has exactly two possible outcomes, a binomial distribution has n + 1 possible outcomes (0, 1, ..., n successes), where n is the number of trials.
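The sum relationship can be checked by simulation. The sketch below uses n = 10 and p = 0.3 to match the figure; the seed and number of repetitions are arbitrary. It sums 10 independent Bernoulli draws many times and compares the observed frequency of each count with the binomial probability C(n, k) p^k (1 - p)^(n - k).

```python
import math
import random
from collections import Counter

n, p = 10, 0.3
rng = random.Random(7)
repetitions = 100_000

# Each repetition: the number of successes in n independent Bernoulli(p) trials
sums = Counter(sum(rng.random() < p for _trial in range(n)) for _rep in range(repetitions))

def binomial_pmf(k, n, p):
    """Probability of exactly k successes in n independent Bernoulli(p) trials."""
    return math.comb(n, k) * p**k * (1 - p) ** (n - k)

for k in range(n + 1):
    simulated = sums[k] / repetitions
    print(f"k = {k:2d}: simulated {simulated:.4f}  binomial {binomial_pmf(k, n, p):.4f}")
```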

Alternatives to Bernoulli Distributions

While Bernoulli distributions are widely used, there are scenarios where alternative distributions might be more appropriate. Let's explore two common alternatives:

Binomial distribution

The binomial distribution is useful when you're dealing with multiple trials as opposed to a single event. This alternative is often considered when you're interested in the number of successes across several attempts rather than just one outcome.

Geometric distribution

The geometric distribution comes into play when you're interested in how many attempts it will take to achieve the first success. It's often applied in situations where the focus is on the waiting time or number of attempts before success happens.
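A short simulation makes the link to Bernoulli trials explicit: keep running independent Bernoulli(p) trials until the first success and record how many trials that took. Counted this way (the trial on which the first success occurs), P(K = k) = (1 - p)^(k - 1) p and the mean is 1/p. The value p = 0.2 and the seed are illustrative.

```python
import random

def trials_until_first_success(p, rng):
    """Run Bernoulli(p) trials until the first success; return the number of trials used."""
    count = 1
    while rng.random() >= p:   # this trial failed (probability 1 - p), so try again
        count += 1
    return count

p = 0.2
rng = random.Random(2024)
samples = [trials_until_first_success(p, rng) for _ in range(100_000)]

sim_mean = sum(samples) / len(samples)
p_first = samples.count(1) / len(samples)
print(f"simulated mean: {sim_mean:.3f}, theoretical mean 1/p: {1 / p:.3f}")
print(f"simulated P(K = 1): {p_first:.3f}, theoretical: {p:.3f}")
```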

Conclusion

Bernoulli distributions form the foundation of many statistical concepts and are essential components in data science. Their simple binary nature, combined with wide-ranging applications in machine learning, hypothesis testing, and simulation, makes them a key framework for analyzing and modeling binary outcomes.

Here are the takeaways:

- Bernoulli distributions model single trials with two possible outcomes.

- The probability of success p is the key parameter that defines the distribution.

- They have many applications in binary classification, hypothesis testing, and simulations.

- Understanding their properties and common misconceptions is useful for effective application.

- While powerful, alternatives like binomial and geometric distributions may be more appropriate in certain scenarios.

If you are interested in applying these concepts in specific programming environments, our Introduction to Statistics in Python course and Introduction to Statistics in R course offer practical, hands-on learning experiences. And if you are ready to look into more advanced topics, the Mixture Models in R course builds on these foundational concepts to explore more complex statistical modeling techniques. Finally, look at our Machine Learning in Production in Python skill track to bring your machine learning skills to production level and start deploying advanced models.


As an adept professional in Data Science, Machine Learning, and Generative AI, Vinod dedicates himself to sharing knowledge and empowering aspiring data scientists to succeed in this dynamic field.

Frequently Asked Questions

What is a Bernoulli distribution?

A Bernoulli distribution is a discrete probability distribution for a random variable that has only two possible outcomes: success (usually denoted as 1) or failure (usually denoted as 0). It is characterized by a single parameter p, which represents the probability of success.

What's the difference between a Bernoulli and a Binomial distribution?

A Bernoulli distribution models a single trial with two possible outcomes, while a binomial distribution models the number of successes in a fixed number of independent Bernoulli trials. You can think of a binomial random variable as the sum of multiple independent Bernoulli random variables with the same p.

Can Bernoulli distributions be used for hypothesis testing?

Yes, Bernoulli distributions are commonly used in hypothesis testing, especially for scenarios involving proportions or success rates. They're particularly useful in A/B testing, where you're comparing the success rates of two different strategies or treatments.

How do Bernoulli distributions relate to odds and log-odds?

The odds of success in a Bernoulli trial are p/(1-p), where p is the probability of success. The log-odds are the natural logarithm of the odds, log(p/(1-p)); the function that maps p to its log-odds is called the logit function. These concepts are commonly used in logistic regression and other statistical analyses.
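A quick numeric illustration, with p = 0.8 chosen arbitrarily: convert a probability to odds and log-odds, then recover it with the logistic function, which is the inverse of the logit. The function names are our own.

```python
import math

def odds(p):
    """Odds of success: p / (1 - p)."""
    return p / (1 - p)

def logit(p):
    """Log-odds: natural log of p / (1 - p)."""
    return math.log(odds(p))

def logistic(z):
    """Inverse of the logit: maps log-odds back to a probability."""
    return 1 / (1 + math.exp(-z))

p = 0.8
print(f"p = {p}, odds = {odds(p):.2f}, log-odds = {logit(p):.4f}, back to p = {logistic(logit(p)):.2f}")
```

Note that p = 0.5 corresponds to odds of 1 and log-odds of 0, and that log-odds are unbounded in both directions, which is why logistic regression can model them with a linear function.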

How can I estimate the parameter p of a Bernoulli distribution from data?

The most common method to estimate p is the maximum likelihood estimation (MLE). For a Bernoulli distribution, the MLE of p is simply the proportion of successes in your sample. For example, if you observe 7 successes out of 10 trials, your estimate of p would be 0.7.
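The claim can be checked numerically. For k successes in n trials, the Bernoulli log-likelihood is k log p + (n - k) log(1 - p) (the binomial coefficient is omitted because it does not depend on p). This sketch evaluates it on a grid of candidate p values for the 7-out-of-10 example and confirms that the maximum sits at the sample proportion.

```python
import math

def bernoulli_log_likelihood(p, k, n):
    """Log-likelihood of k successes in n independent Bernoulli(p) trials."""
    return k * math.log(p) + (n - k) * math.log(1 - p)

k, n = 7, 10
grid = [i / 1000 for i in range(1, 1000)]   # candidate p values, excluding 0 and 1
p_mle = max(grid, key=lambda p: bernoulli_log_likelihood(p, k, n))
print(f"grid-search MLE: {p_mle}, sample proportion: {k / n}")
```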