Apache Hadoop, often just called Hadoop, is a powerful open-source framework built to process and store massive datasets by distributing them across clusters of affordable, commodity hardware. Its strength lies in scalability and flexibility, enabling it to work with both structured and unstructured data.

In this post, I’ll walk through the key components that make up Hadoop and show how it stores, processes, and manages data at scale. By the end, you’ll have a clear picture of how this foundational technology fits into big data ecosystems.

Overview of Hadoop Ecosystem

Hadoop is not a single tool. It’s an ecosystem that includes multiple modules that work together to manage data storage, processing, and resource coordination.

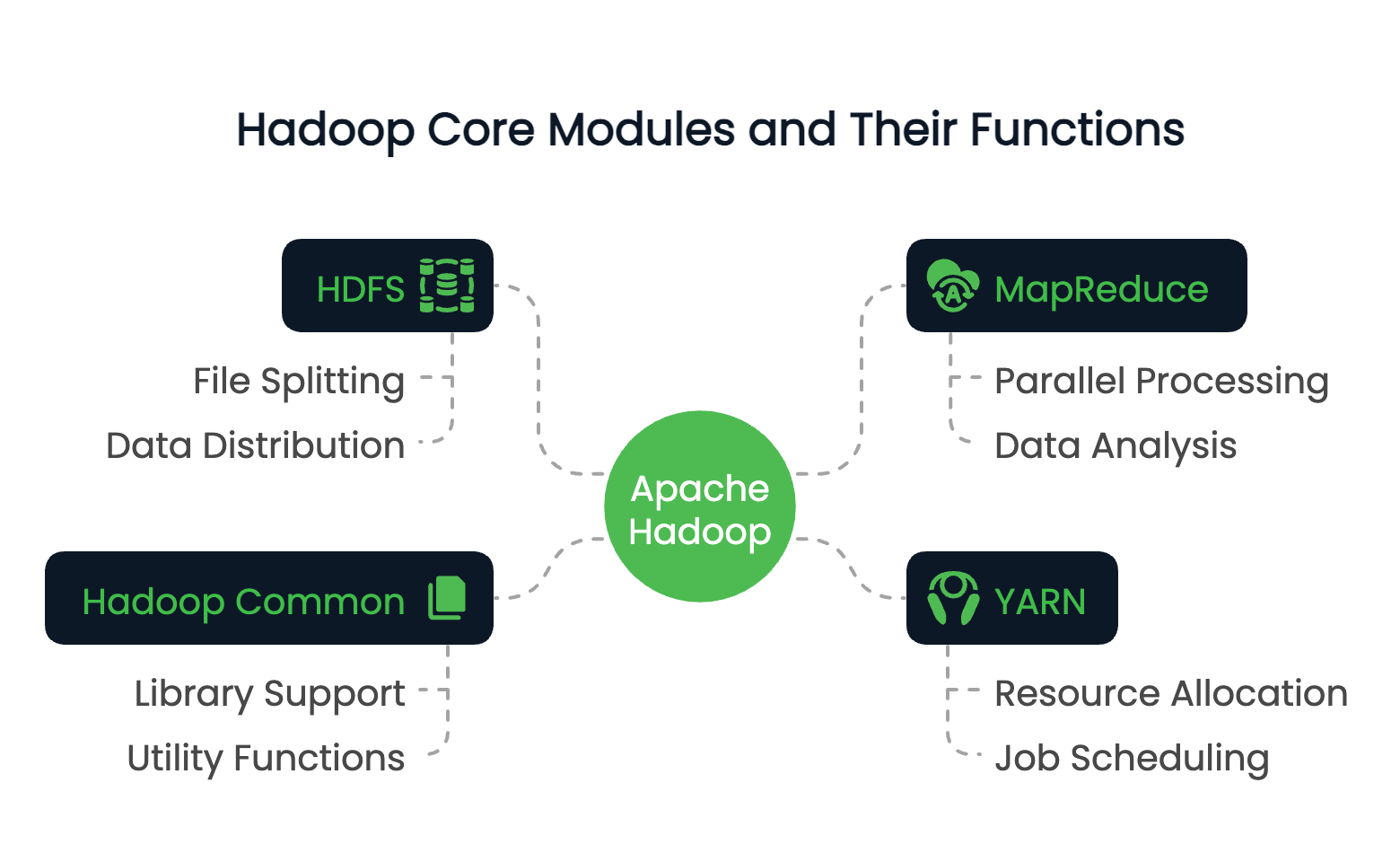

Core modules of Hadoop

Hadoop consists of four foundational modules:

- HDFS (Hadoop Distributed File System): A distributed storage system that splits files into blocks and distributes them across a cluster.

- MapReduce: A programming model for processing data in parallel across nodes.

- YARN (Yet Another Resource Negotiator): Manages resources and schedules jobs in the cluster.

- Hadoop Common: A set of shared libraries and utilities that support other modules.

These modules work together to create a distributed computing environment capable of handling petabytes of data.

To explore how distributed data processing compares across platforms, the Big Data Fundamentals with PySpark course provides a hands-on introduction that complements your understanding of Hadoop.

Role of Hadoop in big data

The primary role of Hadoop lies in its ability to address a core challenge in big data processing, namely, scalability.

Traditional systems often struggle with datasets that exceed the capacity of a single machine, both in storage and computing. Hadoop resolves this by distributing data across many nodes and executing computations in parallel.

As a result, Hadoop can process terabytes or even petabytes of data efficiently. Beyond scalability, Hadoop also provides fault tolerance through data replication and lets organizations build cost-effective data infrastructure on commodity hardware.

Key features of Hadoop

Now that we’ve discussed the role and structure of Hadoop, let’s look at some of its most valuable attributes. These include:

- Distributed storage and processing for storing and querying extremely large datasets.

- Horizontal scalability without requiring high-end machines.

- Data locality optimization, moving computation to where the data resides.

- Resilience to failure via replication and task re-execution.

These features make Hadoop well-suited for batch data processing, log analysis, and ETL pipelines. Hadoop is a classic case study in distributed computing, where computation happens across multiple nodes to increase efficiency and scale.

Now, let’s look at each of the core modules of Hadoop in detail.

Want to validate your Hadoop knowledge or prep for a data role? Here are 24 Hadoop interview questions and answers for 2025 to get you started.

Hadoop Distributed File System (HDFS)

Of the four main components, HDFS is Hadoop’s primary storage system. It is designed to reliably store the vast amounts of data discussed earlier across a cluster of machines. Its architecture favors high-throughput, sequential access to large datasets and is optimized for fault tolerance, scalability, and data locality.

Architecture and components

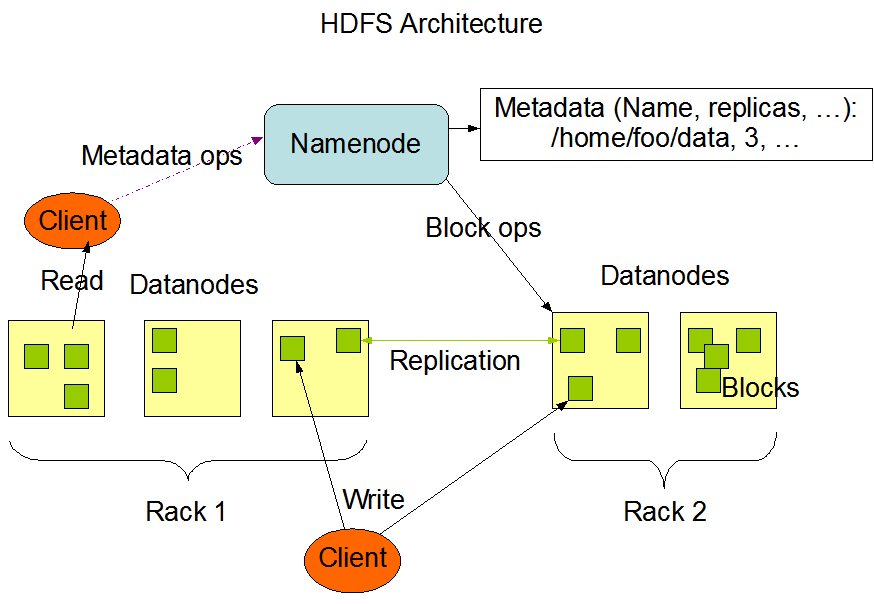

The HDFS architecture revolves around a master-slave model. At the top sits the NameNode, which manages metadata: essentially, the file system’s directory tree and information about each file’s location. It doesn’t store the actual data.

The DataNodes are the workhorses. They manage storage attached to the nodes and serve read/write requests from clients. Each DataNode regularly reports back to the NameNode with a heartbeat and block reports to ensure consistent state tracking.

Finally, HDFS includes a Secondary NameNode, which, despite its name, is not a failover node. Instead, it periodically checkpoints the NameNode’s metadata by merging the edit log into the file system image, which keeps the edit log small and shortens NameNode restart time.

The HDFS architecture. Image source: Apache Hadoop documentation

How data is stored in HDFS

When a file is written to HDFS, it’s broken into fixed-size blocks (128 MB by default in Hadoop 2 and later; 64 MB in Hadoop 1), with the final block holding only the remaining data. Each block is then distributed across different DataNodes. This distribution strategy supports parallelism during data access and boosts fault tolerance.

Data in HDFS isn’t just stored once. Each block is replicated (the default is three copies) and spread across the cluster. During a read operation, the system pulls data from the nearest available replica to maximize throughput and minimize latency. Writes are pipelined: the client sends a block to the first DataNode, which forwards it to the next replica, and so on, so durability does not depend on the client uploading every copy itself.

This block-level design also allows HDFS to scale horizontally. New nodes can be added to the cluster, and the system will rebalance data to use additional capacity.
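The block and replica arithmetic can be sketched in a few lines of Python. This is a simplified model, not HDFS code: the function name `plan_blocks` and the round-robin placement are assumptions made for illustration (real HDFS placement is rack-aware), and the block size is a parameter set here to 128 MB, the default in Hadoop 2 and later.

```python
import math

BLOCK_SIZE_MB = 128  # assumed block size for this sketch
REPLICATION = 3      # default replication factor mentioned above


def plan_blocks(file_size_mb, datanodes,
                block_size_mb=BLOCK_SIZE_MB, replication=REPLICATION):
    """Return a list of (block_index, size_mb, [DataNodes holding a replica])."""
    if replication > len(datanodes):
        raise ValueError("replication factor exceeds number of DataNodes")
    num_blocks = math.ceil(file_size_mb / block_size_mb)
    plan = []
    for i in range(num_blocks):
        # The last block holds only the remaining data; it is not padded.
        size = min(block_size_mb, file_size_mb - i * block_size_mb)
        # Round-robin placement is a simplification for illustration only.
        holders = [datanodes[(i + r) % len(datanodes)] for r in range(replication)]
        plan.append((i, size, holders))
    return plan
```

For a 300 MB file on four DataNodes, `plan_blocks(300, ["dn1", "dn2", "dn3", "dn4"])` yields three blocks of 128, 128, and 44 MB, each held by three different nodes, so the cluster stores 900 MB of raw data in total.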

For comparison with other big data formats, explore how Apache Parquet optimizes columnar storage for analytics workloads.

Fault tolerance in HDFS

HDFS is built with failure in mind. In large distributed systems, node failures are expected, not exceptional. HDFS ensures data availability through its replication mechanism. If a DataNode goes down, the NameNode detects the failure via missed heartbeats and schedules the replication of lost blocks to healthy nodes.

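In addition, the system constantly monitors block health and initiates replication as needed. The redundant storage strategy, combined with proactive monitoring, ensures that the loss of a single DataNode does not compromise data integrity or access. The NameNode is a separate concern: without a high-availability setup, it remains a single point of failure for the file system’s metadata.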
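The detect-and-repair loop described above can be modeled roughly as follows. This is a toy simulation under stated assumptions: the function names, the 30-second timeout, and the "pick the first eligible live node" policy are all hypothetical and do not reflect HDFS defaults or its actual placement logic.

```python
HEARTBEAT_TIMEOUT_S = 30  # hypothetical timeout for this sketch, not an HDFS default


def find_dead_nodes(last_heartbeat, now, timeout=HEARTBEAT_TIMEOUT_S):
    """Return the DataNodes whose last heartbeat is older than the timeout."""
    return {node for node, ts in last_heartbeat.items() if now - ts > timeout}


def re_replicate(block_map, dead_nodes, live_nodes, target=3):
    """Drop replicas on dead nodes and copy under-replicated blocks to live nodes."""
    repaired = {}
    for block, holders in block_map.items():
        alive = [n for n in holders if n not in dead_nodes]
        # Candidates are live nodes that do not already hold this block.
        candidates = [n for n in sorted(live_nodes) if n not in alive]
        missing = max(0, target - len(alive))
        repaired[block] = alive + candidates[:missing]
    return repaired
```

If `dn3` stops sending heartbeats, every block it held drops to two replicas, and `re_replicate` restores the third copy on a live node that does not already hold that block.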

MapReduce Framework

Next up is Hadoop’s processing engine, MapReduce. It allows for distributed computation across large datasets by breaking down tasks into smaller, independent operations that can be executed in parallel.

The model simplifies complex data transformations and is especially suited for batch processing in a distributed environment.

Overview of MapReduce

MapReduce follows a two-phase programming model: the Map and Reduce phases.

During the Map phase, the input dataset is divided into smaller chunks, and each chunk is processed to produce key-value pairs. These intermediate results are then shuffled and sorted before being passed to the Reduce phase, where they are aggregated or transformed into final outputs.

This model is highly effective for tasks like counting word frequencies, filtering logs, or joining datasets at scale.

Developers implement custom logic through map() and reduce() functions, which the framework orchestrates across the cluster.
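To make the two phases concrete, here is a single-process Python sketch of a word count. It only mimics the model: the helper names `map_fn`, `shuffle`, `reduce_fn`, and `run_job` are made up for this example, and a real Hadoop job would run the map and reduce steps on different nodes.

```python
from collections import defaultdict


def map_fn(line):
    """Map: emit a (word, 1) pair for every word in one input line."""
    for word in line.lower().split():
        yield word, 1


def shuffle(pairs):
    """Shuffle and sort: group all values by key, ordered by key."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return sorted(groups.items())


def reduce_fn(word, counts):
    """Reduce: aggregate the values collected for one key."""
    return word, sum(counts)


def run_job(lines):
    """Run map, shuffle, and reduce in sequence on an in-memory input."""
    intermediate = [pair for line in lines for pair in map_fn(line)]
    return dict(reduce_fn(key, values) for key, values in shuffle(intermediate))
```

Calling `run_job(["big data", "Big clusters process data"])` returns `{"big": 2, "clusters": 1, "data": 2, "process": 1}`. The shuffle step is what guarantees that every count for a given word reaches the same reduce call.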

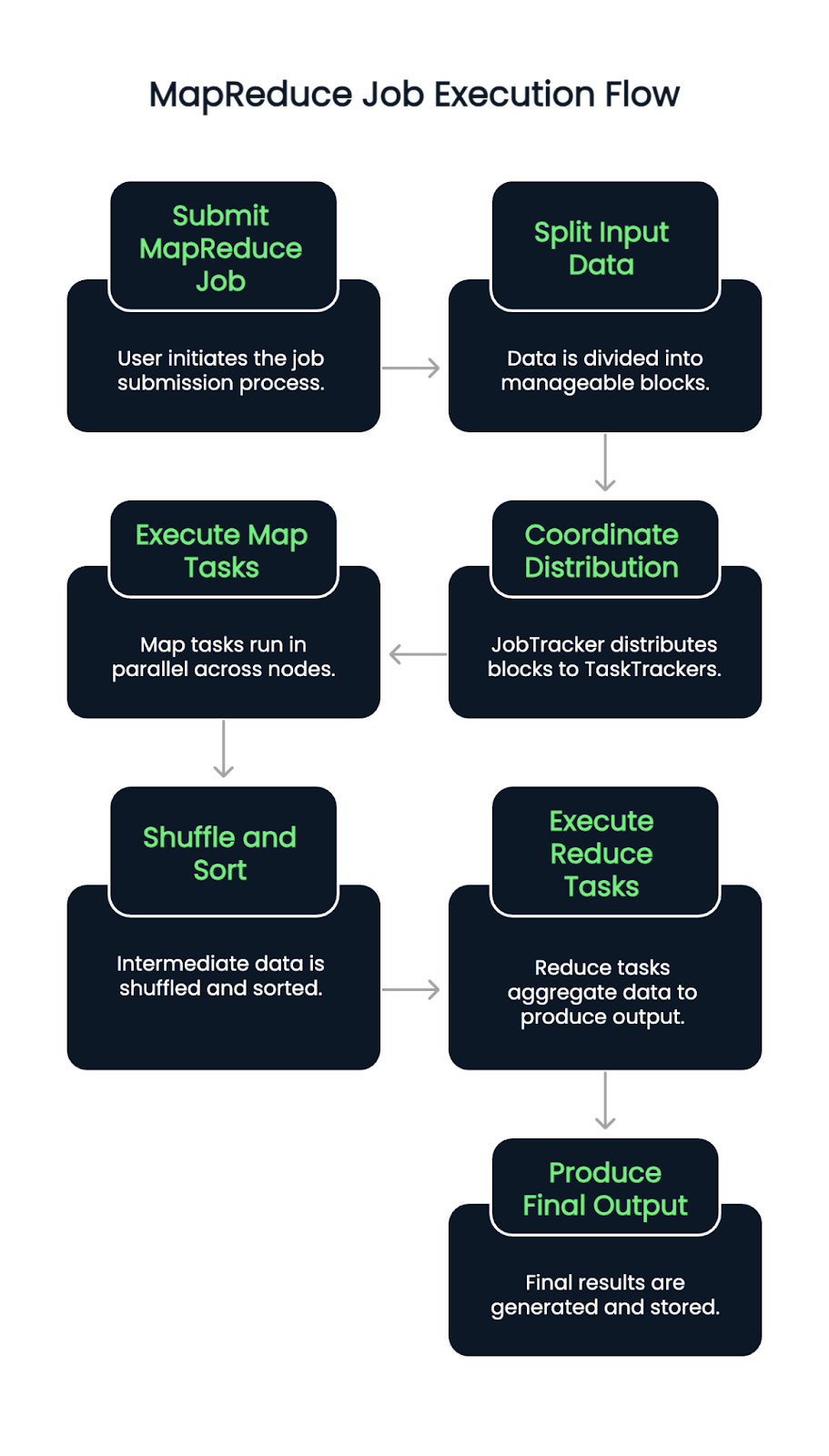

Execution flow of a MapReduce job

When a MapReduce job is submitted, the input data is first divided into input splits, which typically correspond to HDFS blocks. A JobTracker (or ResourceManager in YARN-enabled versions) then coordinates the assignment of these splits to map tasks running on available TaskTrackers or NodeManagers.

The Map tasks execute in parallel across nodes, reading input splits and emitting intermediate key-value pairs. These pairs are then shuffled and sorted automatically. Once this phase is complete, the Reduce tasks begin, pulling in grouped data and applying aggregation logic to produce the final output.

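The scheduler favors data locality by assigning tasks as close as possible to where the data resides, which minimizes network congestion and improves throughput. Locality is best-effort: when no local slot is free, a task runs elsewhere and reads its data over the network. Task failures are detected and reassigned automatically, contributing to system resilience.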
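One way to picture locality-aware scheduling is a scheduler that prefers a node already holding the split and falls back to any free node otherwise. The sketch below is a greedy illustration with hypothetical names (`assign_map_tasks`, slot counts per node); actual Hadoop schedulers are more elaborate and also consider rack-level locality.

```python
def assign_map_tasks(split_locations, free_slots):
    """Assign each input split to a node, preferring nodes that hold its data.

    split_locations: {split_id: [nodes holding a replica of the split]}
    free_slots: {node: number of free task slots}
    Returns {split_id: (node, is_local)}.
    """
    slots = dict(free_slots)  # copy so the caller's dict is not mutated
    assignment = {}
    for split, holders in split_locations.items():
        local = [n for n in holders if slots.get(n, 0) > 0]
        if local:
            node, is_local = local[0], True
        else:
            # No local capacity: run remotely and read the data over the network.
            remote = [n for n, free in slots.items() if free > 0]
            if not remote:
                raise RuntimeError("no free slots in the cluster")
            node, is_local = remote[0], False
        slots[node] -= 1
        assignment[split] = (node, is_local)
    return assignment
```

With three splits stored on `dn1` but only two free slots there, the first two tasks run locally and the third is placed on another node with `is_local` set to `False`.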

MapReduce benefits and limitations

MapReduce offers several clear advantages:

- Excellent parallelism for large-scale batch processing

- Scalability across thousands of nodes

- Strong fault tolerance through task re-execution and intermediate results persisted to disk

However, it also comes with trade-offs:

- The model is latency-heavy, so it's less ideal for real-time processing

- Custom MapReduce jobs can be laborious to write and difficult to debug

- It lacks the interactivity and flexibility of newer tools like Apache Spark

Deciding between Hadoop and Spark? Read our guide on which Big Data framework is right for you.

YARN: Yet Another Resource Negotiator

YARN serves as Hadoop’s resource management layer. It separates job scheduling and resource allocation from the processing model, helping Hadoop support multiple data processing engines beyond MapReduce.

Role in Hadoop architecture

YARN acts as the central platform for managing compute resources across the cluster. It allocates available system resources to several applications and coordinates execution. This separation of concerns helps Hadoop scale efficiently and opens the door to support other frameworks like Apache Spark, Hive, and Tez.

YARN key components

YARN introduces three components:

- ResourceManager: The master daemon (background program) that manages all resources and schedules applications.

- NodeManager: A per-node agent that monitors resource usage and reports back to the ResourceManager.

- ApplicationMaster: A per-application process that negotiates resources from the ResourceManager and coordinates task execution with NodeManagers.

Each application (like a MapReduce job) has its own ApplicationMaster, which allows for better fault isolation and job tracking.

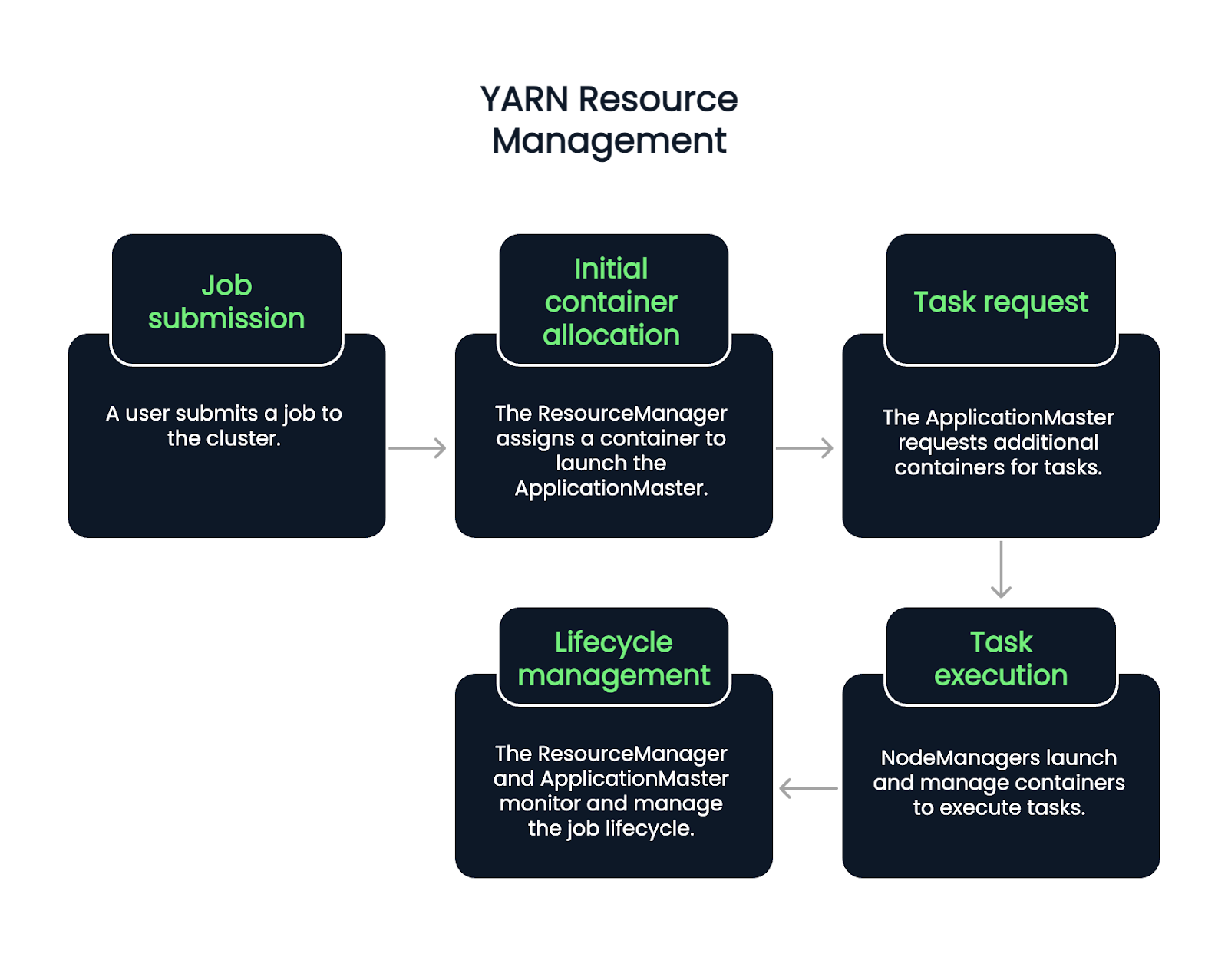

YARN resource management process

The flow begins when a user submits a job. The ResourceManager accepts the job and assigns a container to start the ApplicationMaster. From there, the ApplicationMaster requests more containers to execute tasks, based on the current workload and availability.

NodeManagers launch and manage these containers, which run individual tasks. Throughout the job lifecycle, the ResourceManager and ApplicationMaster handle monitoring, failure recovery, and completion reporting. In short, the process has five steps:

- Job submission: A user submits a job to the cluster.

- Initial container allocation: The ResourceManager accepts the job and assigns a container to launch the ApplicationMaster.

- Task request: The ApplicationMaster requests additional containers to run specific tasks, based on workload and available resources.

- Task execution: NodeManagers launch and manage these containers, which carry out the tasks.

- Lifecycle management: The ResourceManager and ApplicationMaster work together to monitor job progress, handle failures, and confirm task completion.
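The five steps can be traced with a small simulation. This is a conceptual sketch only: the `ResourceManager` class and `run_application` function below are stand-ins written for this example, not the YARN API, and containers are modeled as simple per-node counters.

```python
class ResourceManager:
    """Tracks free containers per node and grants them on request."""

    def __init__(self, capacity):
        self.free = dict(capacity)  # {node_name: free container count}

    def allocate(self, count):
        """Grant up to `count` containers; returns the nodes hosting them."""
        granted = []
        for node in self.free:
            while self.free[node] > 0 and len(granted) < count:
                self.free[node] -= 1
                granted.append(node)
        return granted

    def release(self, nodes):
        """Return finished containers to the pool."""
        for node in nodes:
            self.free[node] += 1


def run_application(rm, tasks):
    """Walk the five steps for one job; returns {task: node it ran on}."""
    # Steps 1-2: the job is submitted and one container starts the ApplicationMaster.
    am_container = rm.allocate(1)
    if not am_container:
        raise RuntimeError("no capacity to start the ApplicationMaster")
    results = {}
    pending = list(tasks)
    while pending:
        # Step 3: the ApplicationMaster requests containers for pending tasks.
        containers = rm.allocate(len(pending))
        if not containers:
            raise RuntimeError("no capacity to run tasks")
        # Step 4: each granted container runs one task on its node.
        batch, pending = pending[:len(containers)], pending[len(containers):]
        for task, node in zip(batch, containers):
            results[task] = node
        # Step 5: completed containers are reported and released.
        rm.release(containers)
    rm.release(am_container)
    return results
```

On a cluster with capacity `{"nm1": 2, "nm2": 1}`, one container goes to the ApplicationMaster, so three tasks run in two waves: two tasks first, then the third once containers are released.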

Hadoop Common and Utilities

The glue that holds all of Hadoop’s components together is Hadoop Common. It’s a collection of libraries, configuration files, and utilities required by all modules.

What is Hadoop Common

Hadoop Common provides the foundational code and tools that allow HDFS, YARN, and MapReduce to communicate and coordinate. This includes Java libraries, file system clients, and APIs used across the ecosystem.

It also ensures consistency and compatibility between modules, allowing developers to build on a shared set of primitives.

Importance of configuration and tools

Proper configuration of Hadoop is critical. Hadoop Common houses configuration files like core-site.xml, which define default behaviors such as filesystem URIs and I/O settings.
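As an illustration of what such a file contains, the sketch below embeds a small core-site.xml fragment and reads it with Python's standard library. The properties `fs.defaultFS` and `io.file.buffer.size` are real Hadoop settings, but the hostname, port, and buffer value are placeholder assumptions, and `load_properties` is a helper written for this example rather than a Hadoop tool.

```python
import xml.etree.ElementTree as ET

# Example values only: the hostname, port, and buffer size are placeholders.
CORE_SITE_XML = """<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://namenode.example.com:9000</value>
  </property>
  <property>
    <name>io.file.buffer.size</name>
    <value>131072</value>
  </property>
</configuration>"""


def load_properties(xml_text):
    """Parse Hadoop-style <property> entries into a {name: value} dict."""
    root = ET.fromstring(xml_text)
    return {p.findtext("name"): p.findtext("value") for p in root.iter("property")}
```

Here `load_properties(CORE_SITE_XML)["fs.defaultFS"]` returns the filesystem URI that clients use to locate the NameNode. Values are read as strings, so numeric settings such as the buffer size need an explicit `int()` conversion.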

Command-line tools and scripts included in Hadoop Common support tasks like starting services, checking node health, and managing jobs. System administrators and developers rely on most of these tools for day-to-day cluster operations.

Real-World Applications of Hadoop

Wherever a workload involves massive datasets, distributed processing, or batch analytics, Hadoop is a likely candidate. It is used across various industries to process massive volumes of structured and unstructured data. Its distributed design and open-source flexibility make it versatile for data-intensive tasks.

Industries leveraging Hadoop

Organizations in finance, healthcare, telecommunications, e-commerce, and social media routinely use Hadoop. It helps banks detect fraud patterns across large transaction histories, supports genomic research in healthcare, and enables recommendation engines in online retail.

Example use cases

- Log processing: Aggregating and analyzing log data from servers and applications.

- Recommendation systems: Building models that suggest content or products.

- Fraud detection: Identifying suspicious behavior patterns across transactions.

These scenarios benefit from Hadoop’s ability to handle large datasets with fault tolerance and parallelism.

For a modern, cloud-native alternative to Hadoop, try the Introduction to Databricks course, which supports Spark and other scalable tools.

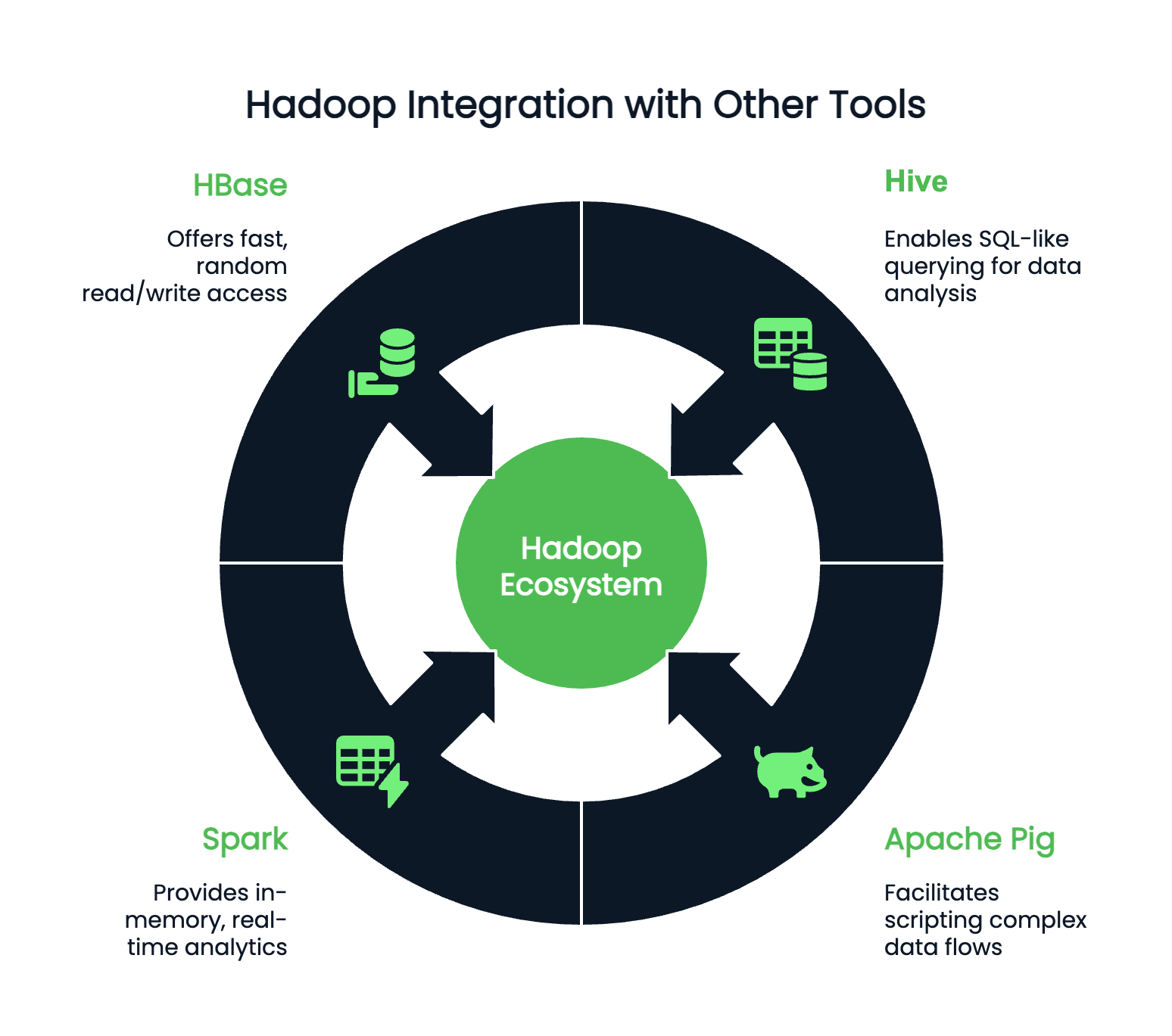

Integration with other tools

Hadoop rarely works in isolation and integrates with a broad ecosystem of companion tools. Depending on your preferences and coding environment, you might pair Hadoop with:

- Hive: For SQL-like querying

- Apache Pig: For scripting complex data flows

- Spark: For in-memory, real-time analytics

- HBase: For fast, random read/write access to large datasets

Conclusion

While Hadoop may not be the buzzword it once was, it remains a foundational technology in the big data landscape. Understanding Hadoop is still incredibly valuable for any data professional working with large-scale systems. Its core components—HDFS for storage, MapReduce for processing, YARN for resource management, and Hadoop Common for essential utilities—continue to shape how data workflows are designed and scaled.

Before jumping into deployment or optimization, take the time to understand how data is distributed and configured within a Hadoop cluster. That knowledge simplifies troubleshooting and performance tuning and paves the way for integrating powerful tools like Apache Spark and Hive for more sophisticated analytics.

For more on big data processing, check out:

Big Data with PySpark

FAQs

Is Hadoop a database?

No, Hadoop is not a database; it’s a software framework for storing and processing large datasets across clusters of computers.

What are the four main components of Hadoop?

The main Hadoop architecture is composed of Hadoop Distributed File System (HDFS), Yet Another Resource Negotiator (YARN), Hadoop Common, and MapReduce.

What programming language does Hadoop use?

Hadoop mainly uses Java as its programming language.

Can Hadoop integrate with other big data tools?

Yes, Hadoop can integrate with multiple big data tools such as Hive, Spark, and HBase for better data querying and processing.

Does anyone still use Hadoop?

Yes, Hadoop is still used for big data processing and analytics. However, due to its complexity, many teams now prefer other frameworks, such as Apache Spark.

What is the difference between Hadoop and Spark?

Hadoop uses batch-oriented MapReduce processing, while Spark offers in-memory processing for faster, iterative tasks. Spark is generally better for real-time analytics and machine learning workflows.

Can Hadoop work with cloud platforms like AWS or Azure?

Yes, Hadoop is cloud-compatible. AWS EMR and Azure HDInsight offer managed Hadoop services that simplify deployment and scaling in cloud environments.

What languages are supported for writing Hadoop MapReduce jobs?

Java is the primary language. Hadoop Pipes provides a C++ interface, and Hadoop Streaming lets developers use any language that reads from stdin and writes to stdout, such as Python or Ruby.

What is the role of ZooKeeper in the Hadoop ecosystem?

Apache ZooKeeper manages distributed configuration and synchronization. It is often used with Hadoop tools like HBase to manage coordination between nodes.

Ashlyn Brooks is a Technical Writer specializing in cloud platforms (Azure, AWS), API documentation, and data infrastructure. With over seven years of experience, she excels at translating complex concepts into clear, user-friendly content that enhances developer experience and product adoption.