Building a solid data warehouse architecture is crucial for handling large and complex datasets. As data continues to grow, businesses need a structured approach to store, manage, and analyze information efficiently.

Without the right architecture in place, you might run into slow queries, inconsistent data, and challenges that make decision-making more complicated than it should be.

In this post, I’ll walk you through the key components of a data warehouse, different architecture types, and best practices to help you design a system that’s both scalable and efficient. Whether you’re setting up a new data warehouse or optimizing an existing one, understanding these principles will set you up for success.

Components of a Data Warehouse Architecture

A well-designed data warehouse is built on a series of interconnected components. These components work together to process data from various sources, transform it for analysis, and make it available to users in a structured format. Below, we explore the essential building blocks of a data warehouse architecture.

Data sources

A data warehouse collects information from various sources, including structured data from relational databases and unstructured data like logs or text files. Common data sources include:

- Transactional databases (e.g., MySQL, PostgreSQL)

- Cloud storage (e.g., Amazon S3, Google Cloud Storage)

- External APIs (e.g., third-party services, web applications)

By integrating these diverse sources into a single system, businesses gain a complete and unified view of their operations.

ETL layer

The ETL (or ELT) process is a key component of data warehouse architecture. It involves:

- Extracting raw data from various sources

- Transforming it through cleaning, formatting, and structuring

- Loading it into the data warehouse for analysis

In ETL, data is transformed before loading, while in ELT, raw data is loaded first and then transformed within the warehouse. ELT is often preferred for modern cloud-based warehouses due to its scalability and performance advantages.
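To make the difference in ordering concrete, here is a minimal sketch that runs both approaches against an in-memory SQLite database standing in for the warehouse. The table names, the `RAW_ORDERS` sample, and the `clean` helper are all hypothetical and only serve as an illustration.

```python
import sqlite3

# Hypothetical raw records, as they might arrive from a source system.
RAW_ORDERS = [
    {"order_id": 1, "amount": " 19.50 ", "country": "us"},
    {"order_id": 2, "amount": "5.00", "country": "DE"},
]


def clean(row):
    """Transform step: parse the amount and normalize the country code."""
    return (row["order_id"], float(row["amount"].strip()), row["country"].upper())


def run_etl(conn):
    """ETL: transform in application code, then load the cleaned rows."""
    conn.execute("CREATE TABLE orders_etl (order_id INTEGER, amount REAL, country TEXT)")
    conn.executemany(
        "INSERT INTO orders_etl VALUES (?, ?, ?)", [clean(r) for r in RAW_ORDERS]
    )


def run_elt(conn):
    """ELT: load raw rows as-is, then transform with SQL inside the warehouse."""
    conn.execute("CREATE TABLE orders_raw (order_id INTEGER, amount TEXT, country TEXT)")
    conn.executemany(
        "INSERT INTO orders_raw VALUES (:order_id, :amount, :country)", RAW_ORDERS
    )
    conn.execute(
        """
        CREATE TABLE orders_elt AS
        SELECT order_id,
               CAST(TRIM(amount) AS REAL) AS amount,
               UPPER(country) AS country
        FROM orders_raw
        """
    )


conn = sqlite3.connect(":memory:")  # stand-in for a real warehouse
run_etl(conn)
run_elt(conn)
etl_rows = conn.execute("SELECT * FROM orders_etl ORDER BY order_id").fetchall()
elt_rows = conn.execute("SELECT * FROM orders_elt ORDER BY order_id").fetchall()
```

Both paths end with the same cleaned rows. The difference is where the transformation runs and whether the raw copy (`orders_raw`) remains available in the warehouse afterwards.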

Staging area

The staging area is an optional, temporary storage space where raw data is held before being processed and loaded into the data warehouse. This layer acts as a buffer to allow data transformations to occur without directly affecting the main data storage.

Data storage layer

At the core of the data warehouse lies the storage layer, where data is organized into fact and dimension tables:

- Fact tables store quantitative data (e.g., sales figures)

- Dimension tables store descriptive information (e.g., customer or product details)

Data is typically arranged in a star or snowflake schema to optimize it for query performance and analysis.

Metadata layer

The metadata layer manages and maintains the structure and relationships within the data warehouse. At its core, metadata provides information about data sources, schema, and transformations. This allows users and systems to understand the context, lineage, and usage of the data within the warehouse.
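As a rough illustration of what such metadata can look like, the sketch below models a tiny catalog in plain Python and walks it to answer a lineage question. The `TableMetadata` class, the `CATALOG` entries, and the source names such as `crm.orders` are hypothetical. Real warehouses usually expose this information through a dedicated catalog tool or system tables.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class TableMetadata:
    """A hypothetical catalog entry: schema, upstream sources, transformation."""
    name: str
    columns: dict                                  # column name -> declared type
    upstream: list = field(default_factory=list)   # tables this one is derived from
    transformation: str = ""


CATALOG = {
    "stg_orders": TableMetadata(
        "stg_orders", {"order_id": "INTEGER", "amount": "TEXT"},
        upstream=["crm.orders"], transformation="raw copy of the source table",
    ),
    "dim_product": TableMetadata(
        "dim_product", {"product_key": "INTEGER", "category": "TEXT"},
        upstream=["erp.products"], transformation="deduplicate product codes",
    ),
    "fact_sales": TableMetadata(
        "fact_sales", {"product_key": "INTEGER", "revenue": "REAL"},
        upstream=["stg_orders", "dim_product"],
        transformation="cast amounts and look up product keys",
    ),
}


def lineage(table, catalog):
    """Return every upstream table, nearest first, without duplicates."""
    seen = []
    queue = deque(catalog[table].upstream)
    while queue:
        name = queue.popleft()
        if name not in seen:
            seen.append(name)
            if name in catalog:  # external sources have no catalog entry
                queue.extend(catalog[name].upstream)
    return seen


fact_sales_lineage = lineage("fact_sales", CATALOG)
```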

Data access and analytics layer

This layer allows users to retrieve and analyze data from the warehouse. It typically includes:

- Business intelligence (BI) tools – Dashboards, reports, and visualizations (e.g., Tableau, Power BI)

- Direct access – SQL queries or APIs for data retrieval

- Advanced analytics – Machine learning models, predictive analytics, and in-depth reporting

While basic reporting and dashboards fall under traditional data access, more complex machine learning and predictive analytics workflows are sometimes categorized separately as an analytics layer due to their advanced computational needs.

Types of Data Warehouse Architecture

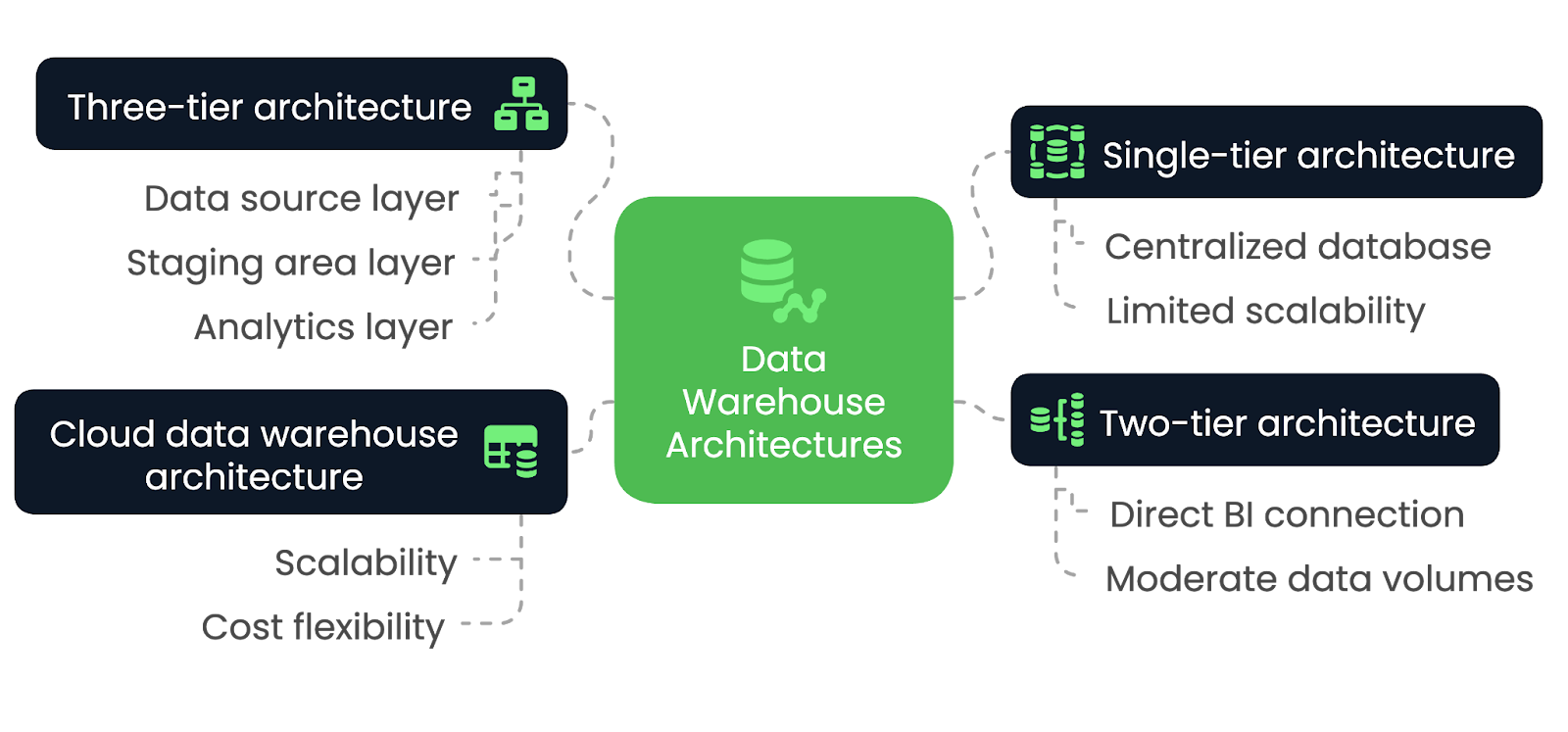

Choosing the right data warehouse architecture is essential for meeting your organization's performance, scalability, and integration needs. Each architecture comes with its own advantages and trade-offs, so let’s explore them in this section.

Single-tier architecture

In a single-tier architecture, the data warehouse is built on a single, centralized database that consolidates all data from various sources into one system. This architecture minimizes the number of layers and simplifies the overall design, leading to faster data processing and access. However, it lacks the flexibility and modularity found in more complex architectures.

The single-tier architecture best suits small-scale applications and organizations with limited data processing needs. It's ideal for businesses that prioritize simplicity and quick implementation over scalability. However, as data volume increases or more advanced analytics are required, this architecture may struggle to meet those demands effectively.

Two-tier architecture

In a two-tier architecture, the data warehouse connects directly to BI tools, often through an OLAP system. While this approach provides faster access to data for analysis, it may face challenges in handling larger data volumes, as scaling becomes difficult due to the direct connection between the warehouse and BI tools.

The two-tier architecture is best suited for small to medium-sized organizations that need faster data access for analysis but don't require the scalability of larger, more complex architectures. It's ideal for businesses with moderate data volumes and relatively simple reporting or analytics needs, as it allows for direct integration between the data warehouse and business intelligence tools.

However, as data grows or analytical requirements become more sophisticated, this architecture may struggle to scale and handle increasing workloads efficiently.

Three-tier architecture

The three-tier architecture is the most common and widely used model for data warehouses. It separates the system into distinct layers: the data source layer, the staging area layer, and the analytics layer. This separation enables efficient ETL processes, followed by analytics and reporting.

The three-tier architecture is ideal for large-scale enterprise environments that require scalability, flexibility, and the ability to handle massive data volumes. It allows businesses to manage data more efficiently and supports advanced analytics, machine learning, and real-time reporting. Separating layers enhances performance, making it suitable for complex data environments.

Cloud data warehouse architecture

In cloud data warehouse architecture, the entire infrastructure is hosted on platforms like Amazon Redshift, Google BigQuery, or Snowflake. Cloud-based architectures offer virtually unlimited scalability, with the ability to handle large datasets without the need for on-premises hardware. They also provide cost flexibility through pay-as-you-go models, which makes them accessible to a broader range of businesses.

Cloud data warehouse architecture suits organizations of all sizes that want a flexible, scalable solution, since storage and compute resources can be scaled dynamically.

Data warehouse architectures compared—image by Author.

Data Warehouse Architecture Design Patterns

There are several data warehouse design patterns, each catering to different needs depending on the complexity of the data and the types of queries being executed. Let’s explore the most common ones and the scenarios where each works best.

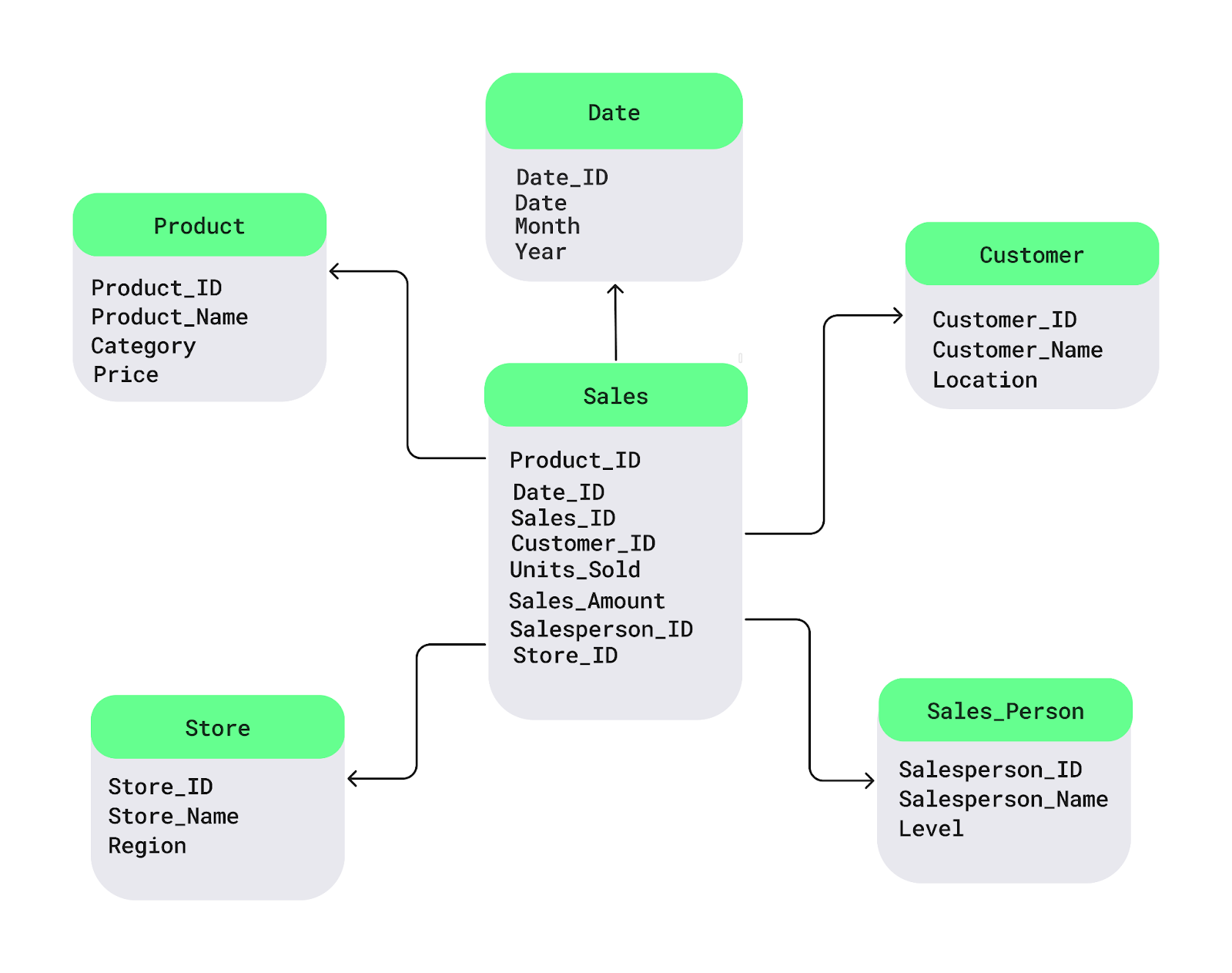

Star schema

The star schema is one of the most commonly used data warehouse design patterns. It structures data into:

- A central fact table – Stores quantitative data, such as:

  - Sales revenue

  - Units sold

- Surrounding dimension tables – Contain descriptive attributes, including:

  - Product details (e.g., product name, category)

  - Customer information (e.g., customer ID, demographics)

  - Store locations

  - Time periods

In a retail scenario, the fact table holds transactional sales data, while dimension tables provide context on products, customers, stores, and time. This schema improves query performance, making it ideal for environments with frequent, straightforward reporting needs.
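The retail scenario can be sketched with an in-memory SQLite database. The table and column names (`fact_sales`, `dim_product`, `dim_store`) and the sample rows are illustrative assumptions, not a prescribed layout.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    -- Dimension tables: descriptive context
    CREATE TABLE dim_product (
        product_key INTEGER PRIMARY KEY, product_name TEXT, category TEXT
    );
    CREATE TABLE dim_store (store_key INTEGER PRIMARY KEY, city TEXT);

    -- Fact table: one row per sale, pointing at the dimensions
    CREATE TABLE fact_sales (
        product_key INTEGER REFERENCES dim_product(product_key),
        store_key   INTEGER REFERENCES dim_store(store_key),
        units_sold  INTEGER,
        revenue     REAL
    );

    INSERT INTO dim_product VALUES (1, 'Espresso beans', 'Coffee'), (2, 'Green tea', 'Tea');
    INSERT INTO dim_store VALUES (1, 'Lisbon'), (2, 'Oslo');
    INSERT INTO fact_sales VALUES (1, 1, 3, 30.0), (1, 2, 1, 10.0), (2, 1, 2, 8.0);
    """
)

# A typical star-schema query: one join from the fact table to a dimension.
revenue_by_category = conn.execute(
    """
    SELECT p.category, SUM(f.revenue) AS revenue
    FROM fact_sales AS f
    JOIN dim_product AS p ON p.product_key = f.product_key
    GROUP BY p.category
    ORDER BY p.category
    """
).fetchall()
```

Each dimension is exactly one join away from the fact table, which is what keeps reporting queries like this one short.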

Star schema example. Image source: DataCamp.

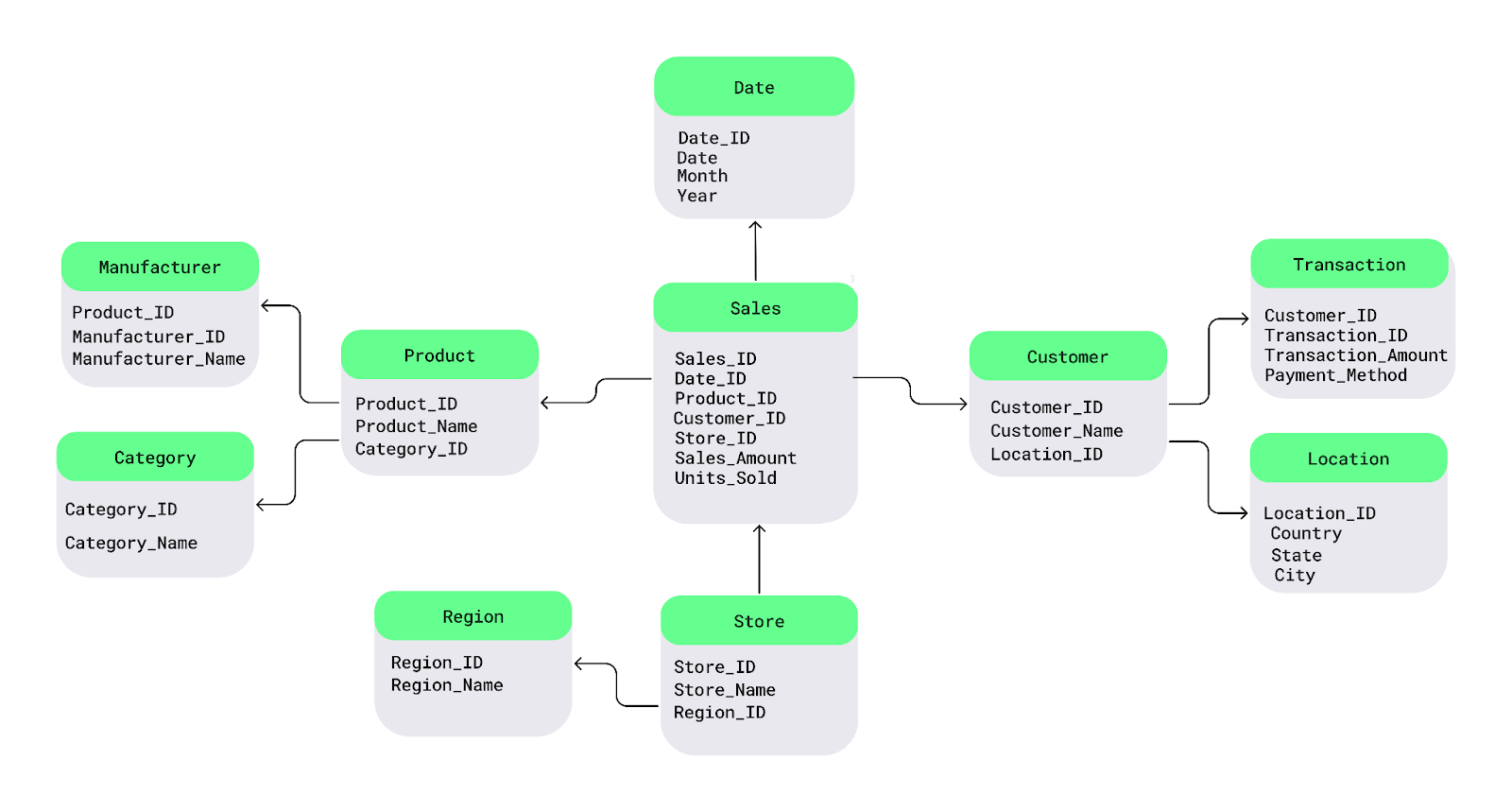

Snowflake schema

The snowflake schema is an extension of the star schema, introducing additional normalization to dimension tables. Key characteristics include:

- Normalized dimension tables – Dimension tables are split into sub-dimensions to reduce redundancy.

- Improved storage efficiency – Less duplicate data compared to the star schema.

- More complex queries – Joins between multiple tables can slow down query performance.

This schema is ideal for:

- Storage optimization – Useful when reducing redundancy is a priority.

- Complex datasets – Beneficial for data with many attributes that require normalization.

- Cost-conscious environments – Preferred when storage costs are high.

- Detailed analytical queries – Supports in-depth analysis at the expense of query speed.

While the snowflake schema conserves storage, its heavily normalized structure means queries need more joins and tend to be more intricate.
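The following sketch shows the trade-off on a small scale, again using in-memory SQLite with hypothetical table names. The product dimension no longer repeats the category name on every row. Instead, it points to a separate `dim_category` table, so the same report needs one more join.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    -- The category is normalized out of the product dimension.
    CREATE TABLE dim_category (category_key INTEGER PRIMARY KEY, category_name TEXT);
    CREATE TABLE dim_product (
        product_key  INTEGER PRIMARY KEY,
        product_name TEXT,
        category_key INTEGER REFERENCES dim_category(category_key)
    );
    CREATE TABLE fact_sales (
        product_key INTEGER REFERENCES dim_product(product_key),
        revenue     REAL
    );

    INSERT INTO dim_category VALUES (1, 'Coffee'), (2, 'Tea');
    INSERT INTO dim_product VALUES
        (1, 'Espresso beans', 1), (2, 'Filter blend', 1), (3, 'Green tea', 2);
    INSERT INTO fact_sales VALUES (1, 30.0), (2, 10.0), (3, 8.0);
    """
)

# Two joins are needed to reach the category name from the fact table.
snowflake_revenue = conn.execute(
    """
    SELECT c.category_name, SUM(f.revenue) AS revenue
    FROM fact_sales AS f
    JOIN dim_product  AS p ON p.product_key  = f.product_key
    JOIN dim_category AS c ON c.category_key = p.category_key
    GROUP BY c.category_name
    ORDER BY c.category_name
    """
).fetchall()
```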

Snowflake schema example. Image source: DataCamp.

Data vault modeling

Data vault modeling is a more recent design pattern focusing on flexibility, scalability, and historical data tracking. It splits the data warehouse into three core components:

- Hubs (which store key business entities)

- Links (which represent relationships between entities)

- Satellites (which hold descriptive attributes)

This approach is highly adaptable to business process changes, making it well-suited for agile development environments.

The data vault modeling design pattern has been growing in popularity due to its ability to handle evolving data environments, accommodate changes in data sources, and support long-term scalability. It has become an ideal solution for organizations that require detailed historical tracking, frequent schema changes, or a highly scalable architecture.
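Here is a minimal sketch of how hubs, links, and satellites might fit together, using in-memory SQLite. The table names, the `hash_key` helper, and the choice of SHA-256 for key derivation are assumptions made for illustration. Real data vault implementations follow stricter conventions for keys, load dates, and record sources.

```python
import hashlib
import sqlite3


def hash_key(*business_key_parts):
    """Derive a deterministic key from one or more business key values."""
    joined = "|".join(business_key_parts)
    return hashlib.sha256(joined.encode("utf-8")).hexdigest()


conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    -- Hubs: one row per business entity
    CREATE TABLE hub_customer (customer_hk TEXT PRIMARY KEY, customer_id TEXT, load_date TEXT);
    CREATE TABLE hub_product  (product_hk TEXT PRIMARY KEY, product_code TEXT, load_date TEXT);

    -- Link: a relationship between hubs
    CREATE TABLE link_purchase (
        purchase_hk TEXT PRIMARY KEY,
        customer_hk TEXT REFERENCES hub_customer(customer_hk),
        product_hk  TEXT REFERENCES hub_product(product_hk),
        load_date   TEXT
    );

    -- Satellite: descriptive attributes, versioned by load date
    CREATE TABLE sat_customer (
        customer_hk TEXT REFERENCES hub_customer(customer_hk),
        load_date   TEXT,
        email       TEXT,
        PRIMARY KEY (customer_hk, load_date)
    );
    """
)

customer_hk = hash_key("C-100")
product_hk = hash_key("P-7")
conn.execute("INSERT INTO hub_customer VALUES (?, ?, ?)", (customer_hk, "C-100", "2024-01-01"))
conn.execute("INSERT INTO hub_product VALUES (?, ?, ?)", (product_hk, "P-7", "2024-01-01"))
conn.execute(
    "INSERT INTO link_purchase VALUES (?, ?, ?, ?)",
    (hash_key("C-100", "P-7"), customer_hk, product_hk, "2024-01-02"),
)
# Attribute changes append new satellite rows instead of updating in place.
conn.executemany(
    "INSERT INTO sat_customer VALUES (?, ?, ?)",
    [
        (customer_hk, "2024-01-01", "ana@old.example"),
        (customer_hk, "2024-03-15", "ana@new.example"),
    ],
)

current_email = conn.execute(
    "SELECT email FROM sat_customer WHERE customer_hk = ? ORDER BY load_date DESC LIMIT 1",
    (customer_hk,),
).fetchone()[0]
history_length = conn.execute(
    "SELECT COUNT(*) FROM sat_customer WHERE customer_hk = ?", (customer_hk,)
).fetchone()[0]
```

Because the satellite only ever receives new rows, the full history of the customer's attributes stays queryable, which is where the pattern's historical tracking comes from.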

Best Practices for Building a Data Warehouse Architecture

Implementing best practices early on is essential to building a robust architecture. This section covers practices to follow when constructing a high-performance data warehouse.

Plan for scalability

Data volumes and business requirements will inevitably grow over time, so it’s essential to ensure the architecture you select can handle increasing workloads. You can do this by choosing scalable storage, such as a cloud-based platform, and by partitioning large tables for better performance.

Optimize ETL processes

Streamline the ETL pipeline by minimizing unnecessary data transformations, leveraging incremental loading strategies, and parallelizing ETL tasks when possible. This ensures that data is ingested, transformed, and loaded quickly without bottlenecks.
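One common way to implement incremental loading is to track a "watermark", the latest change timestamp already loaded, and copy only rows that changed after it. The sketch below assumes each source row carries an `updated_at` ISO timestamp and an `id`. Both field names, and the dictionary standing in for the warehouse table, are hypothetical.

```python
def incremental_load(source_rows, target, last_watermark):
    """Upsert only rows changed after last_watermark; return the new watermark."""
    new_rows = [row for row in source_rows if row["updated_at"] > last_watermark]
    for row in new_rows:
        target[row["id"]] = row  # insert or overwrite by primary key
    # If nothing changed, keep the previous watermark.
    return max((row["updated_at"] for row in new_rows), default=last_watermark)


source = [
    {"id": 1, "status": "shipped", "updated_at": "2024-05-01T09:00:00"},
    {"id": 2, "status": "pending", "updated_at": "2024-05-02T10:30:00"},
]
warehouse = {}

# First run: everything is newer than the initial watermark.
first_watermark = incremental_load(source, warehouse, "1970-01-01T00:00:00")

# Later run: only order 2 changed, so only that row is reloaded.
source[1] = {"id": 2, "status": "shipped", "updated_at": "2024-05-03T08:00:00"}
second_watermark = incremental_load(source, warehouse, first_watermark)
```

ISO 8601 timestamps in a single, consistent format compare correctly as strings, which is why plain `>` is enough here.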

Ensure data quality and consistency

Maintaining high data quality is fundamental to a data warehouse's value. Implement strong data validation and deduplication procedures to ensure the data entering the warehouse is accurate and consistent. Regular audits and quality checks should be part of the ETL pipeline to prevent issues that could lead to incorrect analyses.
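As a simple illustration, the function below applies two checks before rows reach the warehouse: basic validation and deduplication on a key. The rules shown (a non-null `order_id`, a non-negative numeric `amount`) are example assumptions. Real pipelines define their checks from business requirements.

```python
def validate_and_dedupe(rows):
    """Split rows into accepted rows and (row, reason) rejections."""
    seen_keys = set()
    accepted, rejected = [], []
    for row in rows:
        key = row.get("order_id")
        amount = row.get("amount")
        if key is None:
            rejected.append((row, "missing order_id"))
        elif not isinstance(amount, (int, float)) or amount < 0:
            rejected.append((row, "invalid amount"))
        elif key in seen_keys:
            rejected.append((row, "duplicate order_id"))
        else:
            seen_keys.add(key)
            accepted.append(row)
    return accepted, rejected


incoming = [
    {"order_id": 1, "amount": 20.0},
    {"order_id": 1, "amount": 20.0},     # duplicate
    {"order_id": 2, "amount": -5.0},     # negative amount
    {"order_id": None, "amount": 3.0},   # missing key
    {"order_id": 3, "amount": 7.5},
]
accepted, rejected = validate_and_dedupe(incoming)
rejection_reasons = [reason for _, reason in rejected]
```

Keeping the rejected rows together with a reason, rather than silently dropping them, gives the regular audits mentioned above something to work with.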

Focus on data security and compliance

Data security should be a top priority – especially when dealing with sensitive or regulated information. There are three essential measures you must take:

- Apply encryption for data at rest and in transit.

- Implement role-based access controls to limit data access to authorized users.

- Ensure the architecture meets regulatory standards (e.g., GDPR, HIPAA, industry-specific requirements).
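The role-based access control idea from the list above can be sketched in a few lines. The roles, table names, and the `ROLE_GRANTS` mapping are hypothetical. In practice, warehouses enforce this through their own grant and role mechanisms rather than application code.

```python
# Hypothetical mapping from role to the tables that role may read.
ROLE_GRANTS = {
    "analyst": {"fact_sales", "dim_product"},
    "finance": {"fact_sales", "dim_product", "dim_customer"},
}


def can_read(role, table):
    """Return True only if the role has been granted access to the table."""
    return table in ROLE_GRANTS.get(role, set())


def readable_tables(role):
    """List the tables a role may query, in a stable order."""
    return sorted(ROLE_GRANTS.get(role, set()))


analyst_sees_customers = can_read("analyst", "dim_customer")
finance_sees_customers = can_read("finance", "dim_customer")
```

Unknown roles fall back to an empty set, so access is denied by default.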

Monitor performance and usage

To keep the data warehouse operating efficiently, regularly monitor the following:

- Query performance

- User access patterns

- Storage utilization

Performance-tracking tools can help you identify bottlenecks and make proactive adjustments where necessary.

Cloud vs. On-Premises Data Warehouse Architecture

Should you opt for a cloud-based data warehouse or keep everything on-premises? What are the key benefits and trade-offs of each approach? And is a hybrid solution the best of both worlds?

In this section, we’ll explore these questions and help you determine the right architecture for your needs.

Cloud-based architecture

Cloud-based data warehouses provide unparalleled scalability and flexibility. These platforms allow businesses to scale storage and compute resources on demand, making them ideal for handling large, dynamic data volumes without upfront infrastructure costs.

The pay-as-you-go pricing model also makes cloud solutions cost-efficient, particularly for businesses with fluctuating workloads. However, cloud environments may raise concerns about data governance and compliance, especially for highly regulated industries.

Popular cloud vendors include:

- Amazon Web Services (Redshift)

- Google Cloud (BigQuery)

- Microsoft Azure (Azure Synapse Analytics)

On-premises architecture

On-premises data warehouses are best suited for organizations requiring strict data control. With on-premises architecture, companies maintain complete control over their hardware and data, which is essential for industries like finance, healthcare, and government, where sensitive information must be protected.

But here’s the catch. While on-premises systems can offer robust performance, they often come with high upfront costs for hardware and ongoing maintenance. Scaling can also be challenging as it requires manual upgrades and hardware procurement, which is less flexible than cloud solutions.

Hybrid architecture

Hybrid data warehouse architectures combine cloud and on-premises components, offering greater flexibility for organizations that need to balance security, compliance, and scalability. For example, sensitive data can be stored on-premises, while less critical data or analytics workloads can be processed in the cloud.

Hybrid architectures are particularly useful for businesses that need to transition to the cloud gradually or have specific data privacy requirements. This model provides the best of both worlds but requires careful orchestration to ensure seamless data integration between environments.

Summary table: Cloud vs. on-premises vs. hybrid data warehouse architecture

| Feature | Cloud-based Architecture | On-premises Architecture | Hybrid Architecture |
| --- | --- | --- | --- |
| Scalability | Highly scalable, on-demand resource allocation | Limited by on-site hardware, requires manual upgrades | Combines scalable cloud resources with on-premises control |
| Cost | Pay-as-you-go pricing, lower upfront costs | High upfront investment in hardware and ongoing maintenance | Hybrid costs, balancing cloud savings and on-premises expenses |
| Flexibility | Extremely flexible, ideal for dynamic workloads | Less flexible, constrained by physical infrastructure | Flexible, combining cloud agility with on-premises control |
| Security & Compliance | May raise concerns for highly regulated industries | Complete control over data security and regulatory compliance | Ensures compliance for sensitive data while leveraging cloud |
| Performance | Can vary based on the cloud provider and configuration | High performance but dependent on hardware investments | Balanced performance based on workload distribution |
| Maintenance | Minimal maintenance, managed by the cloud provider | Requires ongoing internal IT maintenance | Hybrid approach with cloud services handling some maintenance |
| Use Cases | Best for businesses with large, fluctuating data volumes | Best for organizations with strict security and compliance needs | Ideal for organizations transitioning to the cloud or with mixed needs |

Popular Cloud Data Warehouse Platforms

Here’s an overview of some of the most popular data warehouse platforms used in the cloud.

Amazon Redshift

Amazon Redshift is a fully managed cloud data warehouse solution for large-scale data analytics. Its architecture is based on a massively parallel processing system, which enables users to query vast datasets quickly. With its ability to scale up and down based on workload requirements, Redshift is well-suited for organizations that need cost-effective scalability and integration with other AWS services.

Google BigQuery

Google BigQuery is a serverless, highly scalable data warehouse platform built for fast, real-time analytics. Its unique architecture decouples storage and compute, which enables users to query petabytes of data without managing infrastructure. BigQuery’s ability to process large-scale analytics with minimal overhead makes it ideal for organizations with heavy data workloads that demand fast, complex queries.

Snowflake

Snowflake offers a multi-cluster, shared-data architecture that separates compute and storage, providing flexibility in independently scaling resources. Snowflake’s cloud-native approach allows businesses to dynamically scale workloads, thus making it an attractive option for organizations that need high flexibility and workload management across multiple cloud platforms.

Microsoft Azure Synapse

Microsoft Azure Synapse Analytics is a hybrid data management platform that combines data warehousing and big data analytics. Its architecture integrates with big data frameworks like Apache Spark to provide a unified environment for managing data lakes and data warehouses. Azure Synapse offers seamless integration with other Microsoft services and is ideal for businesses with diverse data analytics needs.

Summary table: Data warehouse platforms

| Platform | Architecture | Key Features | Use Cases |
| --- | --- | --- | --- |
| Amazon Redshift | MPP architecture, AWS ecosystem | Scalable, fast queries, AWS integration | Large-scale analytics, cloud-native applications |
| Google BigQuery | Serverless, decoupled storage and compute | Real-time analytics, low infrastructure | Fast analytics, real-time data processing |
| Snowflake | Multi-cluster, shared-data architecture, cross-cloud (AWS, Azure, GCP) | Compute-storage separation, dynamic scaling | Flexible scaling, cross-cloud platform workloads |
| Azure Synapse | Hybrid, big data integration | Unified analytics, Spark integration | Hybrid data management, integration with Microsoft tools |

Challenges in Data Warehouse Architecture

While data warehouses provide powerful capabilities for organizations to analyze and manage vast amounts of data, they also come with inherent challenges.

Here are some of the most significant challenges and solutions to consider when designing and maintaining a data warehouse architecture.

Data integration from diverse sources

Organizations collect data from multiple sources, each with different formats, schemas, and structures, making integration a complex challenge. Key considerations include:

- Mixing structured and unstructured data – Combining relational database records with logs, social media streams, or sensor data requires careful transformation.

- Extensive data transformation and cleansing – Raw data often needs cleaning, formatting, and standardization before it can be used effectively.

- Hybrid environments – Synchronizing data between on-premises systems and cloud platforms adds another layer of complexity.

To address these challenges, businesses need flexible ETL processes and data management tools that support diverse data formats and seamless integration across platforms.

Performance at scale

As data warehouses grow, maintaining high query performance becomes a challenge. Large-scale operations must efficiently process millions—or even billions—of rows while avoiding slow queries, high costs, and inefficient resource usage.

Key optimization strategies include:

- Data indexing – Speeds up data retrieval by creating structured lookup paths.

- Partitioning – Organizes data into smaller, manageable segments for faster querying.

- Materialized views – Stores precomputed query results to reduce processing time.

- Columnar storage formats (e.g., Parquet, ORC) – Enhances read performance for analytical queries.

- Query optimization engines – Improve execution plans for faster performance.
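To show why partitioning helps, here is a small pure-Python sketch of partition pruning: rows are grouped by month, and a query for one month only scans that month's group. The `sale_date` and `revenue` fields are hypothetical, and a real warehouse does this at the storage level rather than in application code.

```python
from collections import defaultdict


def partition_by_month(rows):
    """Group rows into partitions keyed by 'YYYY-MM'."""
    partitions = defaultdict(list)
    for row in rows:
        partitions[row["sale_date"][:7]].append(row)
    return partitions


def revenue_for_month(partitions, month):
    """Scan only the requested partition instead of every row."""
    return sum(row["revenue"] for row in partitions.get(month, []))


sales = [
    {"sale_date": "2024-01-05", "revenue": 10.0},
    {"sale_date": "2024-01-20", "revenue": 15.0},
    {"sale_date": "2024-02-01", "revenue": 7.5},
]
partitions = partition_by_month(sales)
january_revenue = revenue_for_month(partitions, "2024-01")
rows_scanned = len(partitions["2024-01"])  # 2 of the 3 rows
```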

Workload management

As more users access the data warehouse, efficient resource allocation becomes critical. Key considerations include:

- Concurrency management – Prevents performance bottlenecks when multiple queries run simultaneously.

- Dynamic scaling – Cloud-based warehouses automatically adjust resources to meet demand.

- Resource contention handling – On-premises or hybrid systems must carefully manage CPU, memory, and disk resources to avoid slowdowns.

By implementing these strategies, organizations can ensure that their data warehouse scales effectively and maintains high performance as data volume and user activity grow.

Data governance and security

As data warehouses store increasing amounts of sensitive information, strong governance and security measures are essential to prevent breaches, ensure compliance, and maintain data integrity.

- Enforcing consistent governance policies

  - Implement role-based access controls (RBAC) to restrict data access.

  - Maintain data catalogs to track data lineage, ownership, and usage.

- Regulatory compliance (GDPR, CCPA, HIPAA, etc.)

  - Protect personal data with anonymization and masking techniques.

  - Implement data retention policies to meet legal requirements.

- Encryption and security best practices

  - Encrypt data at rest and in transit to prevent unauthorized access.

  - Maintain detailed audit logs to track access to sensitive data.

- Security in hybrid and cloud environments

  - Manage encryption keys effectively across on-premises and cloud systems.

  - Secure network traffic between cloud and on-prem systems.

  - Ensure compliance with the security policies of third-party providers.
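Masking and pseudonymization, mentioned in the list above, can be illustrated with Python's standard library. The two helpers below are a sketch under simple assumptions: `mask_email` hides most of the local part for display, and `pseudonymize` replaces a value with a keyed hash so records can still be joined without exposing the original. The secret key shown is a placeholder, and whether a given technique satisfies a particular regulation is a legal question this example does not settle.

```python
import hashlib
import hmac


def mask_email(email):
    """Keep the first character and the domain; hide the rest of the local part."""
    local, _, domain = email.partition("@")
    return f"{local[:1]}***@{domain}"


def pseudonymize(value, secret_key):
    """Replace a value with a keyed SHA-256 digest (stable for the same key)."""
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()


SECRET_KEY = b"replace-with-a-managed-secret"  # placeholder, not a real key

masked = mask_email("ana@example.com")
token_a = pseudonymize("ana@example.com", SECRET_KEY)
token_b = pseudonymize("ana@example.com", SECRET_KEY)
```

The same input always maps to the same token under one key, so joins and counts still work on the pseudonymized column.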

Conclusion

In this article, we explored key components of data warehouse architecture, common challenges, and strategies for overcoming them. Ultimately, a well-designed data warehouse does more than store data—it empowers organizations to make informed, data-driven decisions that drive growth and innovation.

Want to dive deeper into data architecture and best practices? Check out these resources:

- Understanding Data Engineering – Learn the fundamentals of data engineering and its role in modern data ecosystems.

- Data Warehousing Concepts – Gain insights into core data warehousing principles and design.

- Understanding Modern Data Architecture – Explore how data architectures have evolved in the cloud era.

- Database Design – Master the foundations of designing efficient and scalable databases.

- ETL and ELT in Python – Learn how to build effective ETL/ELT pipelines using Python.

FAQs

How does a data warehouse differ from a database?

A database is designed for transactional processing (OLTP), handling real-time operations like inserting and updating records. A data warehouse, on the other hand, is optimized for analytical processing (OLAP), enabling complex queries and historical data analysis.

How does a data warehouse support real-time analytics?

Traditional data warehouses process batch updates, but modern cloud-based architectures (e.g., Snowflake, BigQuery) enable real-time analytics by integrating streaming data via tools like Apache Kafka, Amazon Kinesis, or Google Cloud Pub/Sub.

What role does metadata play in a data warehouse?

Metadata provides essential information about the structure, lineage, and definitions of data stored in the warehouse. It ensures consistency, improves data governance, and helps users locate the right datasets efficiently.

Can a data warehouse handle unstructured data?

Data warehouses primarily store structured data, but they can integrate semi-structured formats (e.g., JSON, XML, Parquet). However, for purely unstructured data (e.g., images, videos, raw text), organizations often use data lakes in combination with a data warehouse.

What are surrogate keys, and why are they used in data warehouse design?

Surrogate keys are system-generated unique identifiers used in fact and dimension tables instead of natural keys (like customer ID or product code). They improve performance, consistency, and flexibility in schema design.
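A minimal sketch of the idea: the class below hands out sequential integer keys and remembers which natural key each one belongs to. The `SurrogateKeyMap` name and the `(source_system, natural_key)` lookup are illustrative assumptions. Warehouses typically generate surrogate keys with sequences or identity columns.

```python
import itertools


class SurrogateKeyMap:
    """Assign a stable integer key to each (source system, natural key) pair."""

    def __init__(self):
        self._counter = itertools.count(1)
        self._keys = {}

    def key_for(self, source_system, natural_key):
        lookup = (source_system, natural_key)
        if lookup not in self._keys:
            self._keys[lookup] = next(self._counter)
        return self._keys[lookup]


customer_keys = SurrogateKeyMap()
crm_key = customer_keys.key_for("crm", "C-100")
shop_key = customer_keys.key_for("webshop", "C-100")   # same ID, different system
crm_key_again = customer_keys.key_for("crm", "C-100")
```

The example also shows one reason surrogate keys add consistency: two source systems can reuse the same natural ID without colliding in the warehouse.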

How do materialized views improve data warehouse performance?

Materialized views store precomputed query results, reducing processing time for repetitive queries. This significantly improves performance, especially in aggregated or complex analytical queries.
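The sketch below emulates the behavior with in-memory SQLite. SQLite has no native materialized views, so a summary table rebuilt by a hypothetical `refresh_monthly_revenue` function stands in for one. Platforms that support materialized views natively handle the refresh differently.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE fact_sales (sale_date TEXT, revenue REAL);
    INSERT INTO fact_sales VALUES
        ('2024-01-05', 10.0), ('2024-01-20', 15.0), ('2024-02-01', 7.5);
    """
)


def refresh_monthly_revenue(conn):
    """Recompute the aggregate once and store the result as a table."""
    conn.executescript(
        """
        DROP TABLE IF EXISTS mv_monthly_revenue;
        CREATE TABLE mv_monthly_revenue AS
        SELECT substr(sale_date, 1, 7) AS month, SUM(revenue) AS revenue
        FROM fact_sales
        GROUP BY substr(sale_date, 1, 7);
        """
    )


def read_monthly_revenue(conn):
    """Readers query the precomputed table, not the fact table."""
    return conn.execute(
        "SELECT month, revenue FROM mv_monthly_revenue ORDER BY month"
    ).fetchall()


refresh_monthly_revenue(conn)
initial = read_monthly_revenue(conn)

# New facts do not appear in the stored result until the next refresh.
conn.execute("INSERT INTO fact_sales VALUES ('2024-02-10', 2.5)")
stale = read_monthly_revenue(conn)
refresh_monthly_revenue(conn)
fresh = read_monthly_revenue(conn)
```

The stale read in the middle illustrates the trade-off: precomputed results are fast to query but only as current as the last refresh.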

How does a data warehouse integrate with AI and machine learning?

Many modern warehouses support ML and AI workloads by offering:

- Built-in ML functions (e.g., BigQuery ML, Redshift ML)

- Integration with ML frameworks (e.g., TensorFlow, PyTorch, Scikit-learn)

- Feature stores that allow analysts to serve structured data to ML models efficiently

What are Slowly Changing Dimensions (SCD) in a data warehouse?

SCDs track historical changes in dimension tables. The most common types include:

- SCD Type 1 – Overwrites old data with new data.

- SCD Type 2 – Keeps historical data by adding new records.

- SCD Type 3 – Maintains limited history using additional columns.
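Type 2 is the one that usually needs the most explanation, so here is a minimal sketch of it. The dimension is a plain list of dictionaries, and the column names (`valid_from`, `valid_to`, `is_current`) are a common convention assumed for this example rather than a fixed standard.

```python
from datetime import date


def apply_scd2(dimension, customer_id, new_city, change_date):
    """Apply an SCD Type 2 change: expire the current row, append a new one."""
    current = next(
        (row for row in dimension
         if row["customer_id"] == customer_id and row["is_current"]),
        None,
    )
    if current is not None:
        if current["city"] == new_city:
            return  # nothing changed, so no new version is needed
        current["valid_to"] = change_date
        current["is_current"] = False
    dimension.append({
        "customer_id": customer_id,
        "city": new_city,
        "valid_from": change_date,
        "valid_to": None,
        "is_current": True,
    })


dim_customer = []
apply_scd2(dim_customer, "C-100", "Lisbon", date(2023, 1, 1))
apply_scd2(dim_customer, "C-100", "Porto", date(2024, 6, 1))   # customer moved
apply_scd2(dim_customer, "C-100", "Porto", date(2024, 7, 1))   # no change
```

After these calls the dimension holds two rows for the customer: an expired Lisbon row and a current Porto row. A Type 1 change would instead overwrite the city on the single existing row.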