For weeks, the arrival of Claude Sonnet 5 was awaited. Instead, Anthropic first released Claude Opus 4.6, and now followed up with an update on its Sonnet model family.

Claude Sonnet 4.6 brings connectors, skills, and context compaction to all users, tops the GDPval-AA benchmark, and offers flagship-level performance at an affordable price.

While the development seemingly focused on agentic skills, it promises “a full upgrade of the model’s skills” across pretty much all relevant domains.

Can the model live up to its claims? In this tutorial, I’ll show you the key features of Anthropic’s new model and put it to the test.

Make sure to also check out our guide on Qwen3.5, Alibaba's new flagship model.

What Is Claude Sonnet 4.6?

Claude Sonnet 4.6 is Anthropic’s latest large language model (LLM). It focuses heavily on agentic coding, computer use, and other agentic capabilities, and is the lighter model compared to the recently published flagship model, Claude Opus 4.6.

The incremental-sounding version number might have been a surprise not too long ago, but it is in line with the recent Opus 4.6 release. My interpretation of the versioning is that Claude Sonnet 4.6 might not introduce many new standalone features, but instead brings recently introduced features to the Sonnet model family.

In addition to making former paid-only features available to all users, Claude Sonnet 4.6 performs significantly better than its predecessor across the board, while still keeping the API pricing rate of Claude Sonnet 4.5 ($3/$15 per million input/output tokens). It is available immediately via both the Claude web chat interface and the API.

Claude Sonnet 4.6 Key Features

Anthropic’s approach to the new release seemed to be offering Opus-level flagship performance at a Sonnet price. While this sounds ambitious, the benchmark results indicate that this goal was achieved, as we will examine in more detail later.

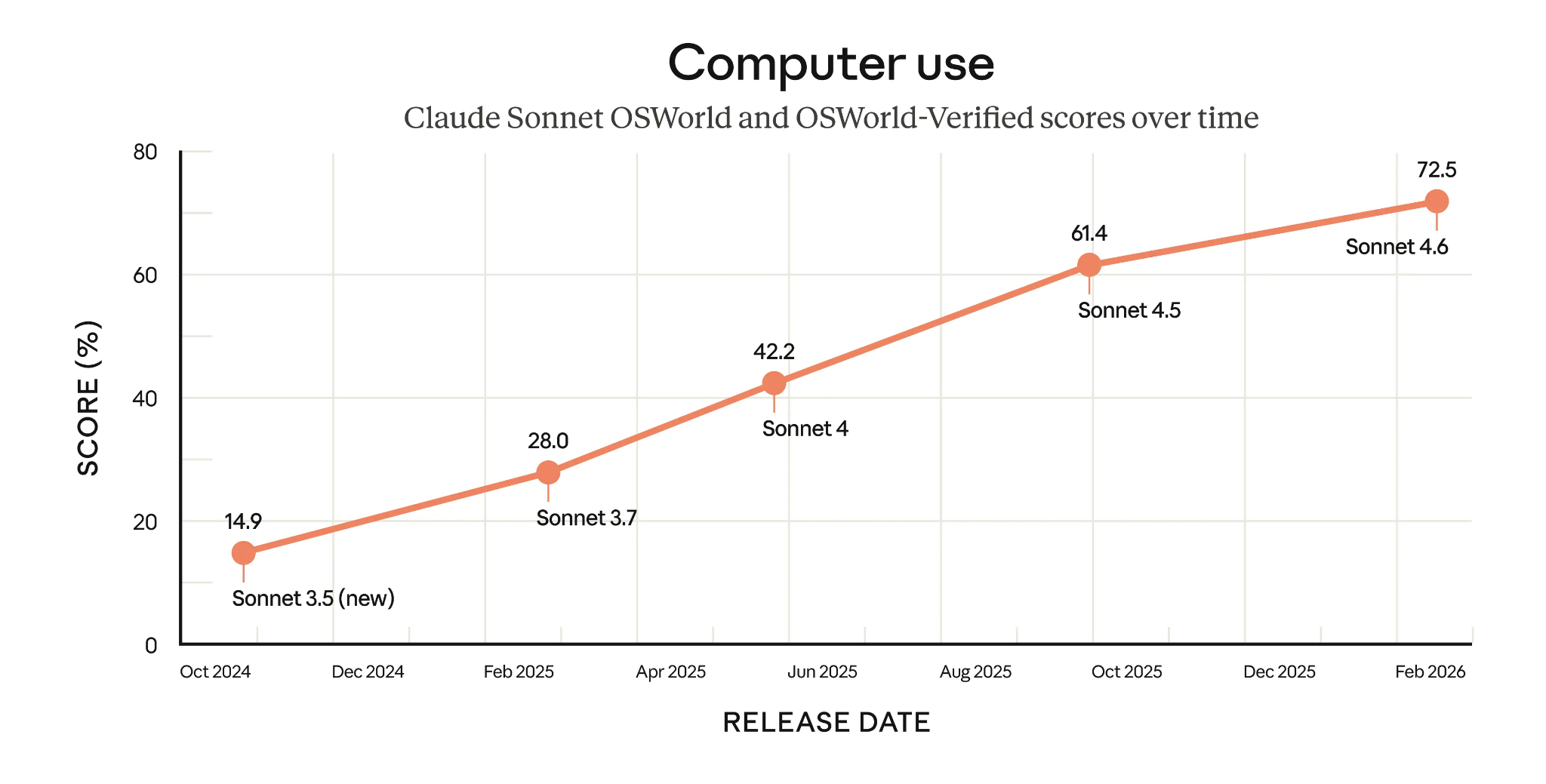

One example that stands out is the agentic computer use skills of Claude Sonnet 4.6, which scores a very impressive 72.5% in OSWorld-Verified. As we can see from the graphic below, the Sonnet models have come a long way and have more than doubled this score in less than one year.

OSWorld-Verified scores of Claude Sonnet models over time (Source: Anthropic)

Let’s look at a few notable features of the new model:

Near-Opus intelligence for coding and reasoning

Claude Sonnet 4.6 delivers a full skills upgrade across a wide range of tasks, including:

- Coding

- Long-horizon reasoning

- Agent planning

- Knowledge-related work

- Design

According to the release notes, Anthropic found that beta testers preferred Sonnet 4.6 over Opus 4.5 about 59% of the time. Opus 4.5 was Anthropic’s flagship model until only two weeks ago.

They cited better instruction following, fewer hallucinations, and more reliable multi-step problem solving as reasons for their preference.

Frontier-level agentic skills with stronger safety

The model shows human-level capability on many real software tasks, such as:

- Navigating complex spreadsheets

- Multi-step web forms

- Multi-tab workflows

This becomes apparent, for instance, in the strong OSWorld-Verified score and in some domain-related benchmarks that we will discuss later.

Another focus in the model development was on safety, which is especially relevant in the shift towards agentic AI. Anthropic claims that Claude Sonnet 4.6 has significantly improved its resistance to prompt injections compared to Sonnet 4.5, and is on par with Opus 4.6 in this regard.

Long-horizon planning

Arguably the catchiest claim concerns the extended context window, which now spans 1 million tokens. This extension lets Sonnet 4.6 ingest even larger codebases, lengthy contracts, or large research bundles in a single request, and reason effectively across that context. The expanded context window puts Sonnet 4.6 on a par with Google’s Gemini 3.

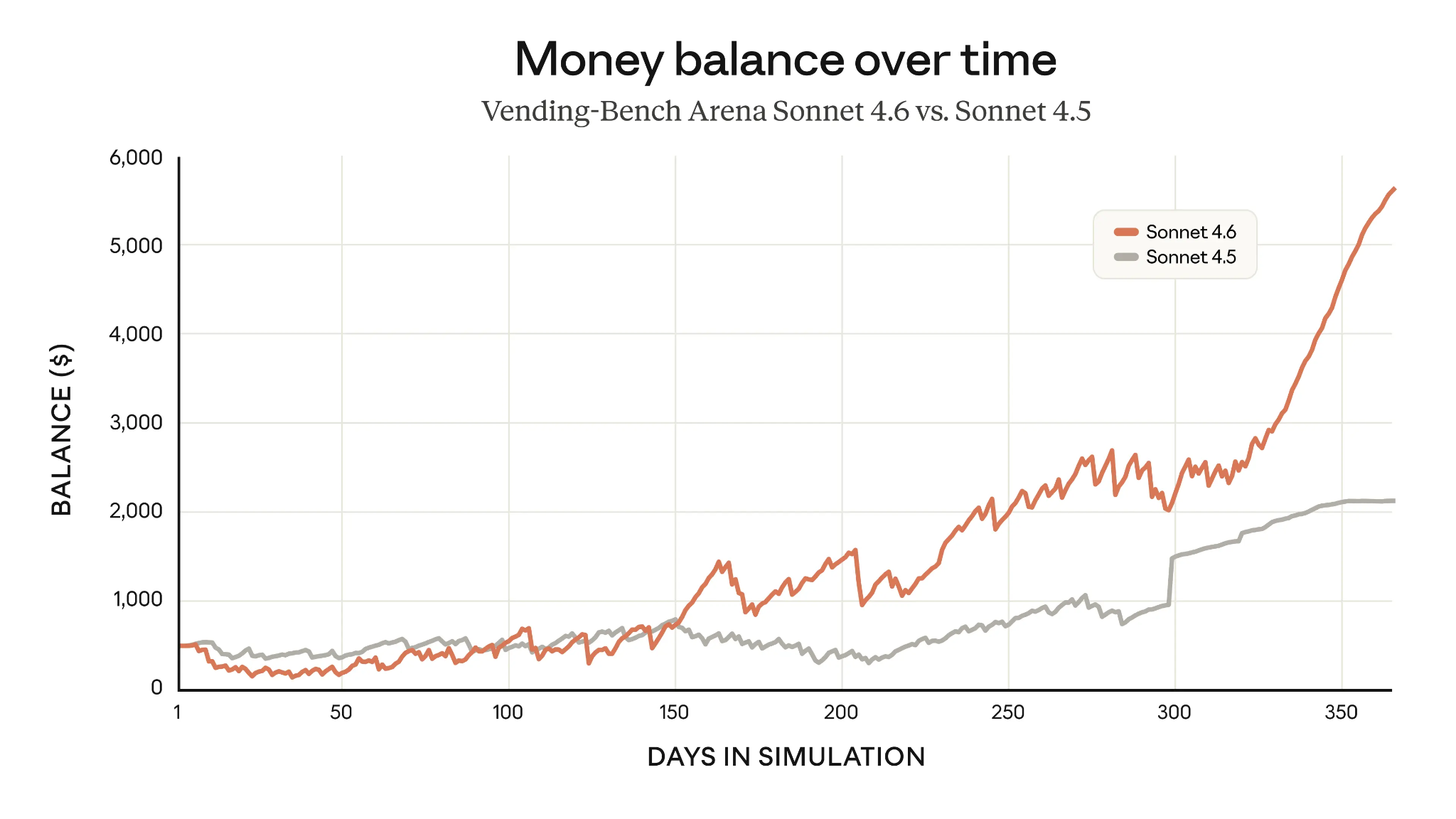

One example of improved long-term planning is the Vending-Bench Arena, which tests a model’s ability to run a simulated business over time, with an element of competition between models. By heavily investing in infrastructure in the beginning and capitalizing on it later on, Sonnet 4.6 was able to almost triple Sonnet 4.5’s average gains after one year.

Vending-Bench scores of Claude Sonnet 4.6 vs Sonnet 4.5 (Source: Anthropic)

Advanced workflow enhancements

On the Claude platform and API, Sonnet 4.6 makes a few features previously restricted to Opus models or paid tiers available for free.

Adaptive thinking

One neat feature that was introduced with the release of Claude Opus 4.6 is adaptive thinking. It lets Claude decide automatically when and how much to reason before answering. In the API, it can be enabled by setting thinking: {type: "adaptive"}. In the web chat interface, it is enabled automatically for Sonnet 4.6 and Opus 4.6.
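As a rough sketch of what that setting might look like in a request body, the snippet below only assembles the payload and sends nothing. The thinking value and the model ID claude-sonnet-4-6 are the ones quoted in this article; the helper name build_request, the max_tokens value, and the prompt are assumptions made for illustration, so check the current API reference before relying on the exact shape.

```python
import json


def build_request(prompt: str, max_tokens: int = 2048) -> dict:
    """Assemble a request body with adaptive thinking enabled.

    Hypothetical helper: it only builds a dictionary and makes no API call.
    """
    return {
        "model": "claude-sonnet-4-6",      # model ID as quoted in this article
        "max_tokens": max_tokens,          # assumed value, adjust as needed
        "thinking": {"type": "adaptive"},  # setting described in this article
        "messages": [{"role": "user", "content": prompt}],
    }


payload = build_request("Find the 6th prime number.")
print(json.dumps(payload, indent=2))
```

Keeping the payload in a plain dictionary makes it easy to inspect or log before handing it to whichever client you use.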

Connectors

Connectors offer MCP-based integrations, which means they provide direct access to external apps such as Google Workspace and Slack. This way, Sonnet 4.6 can fetch and act on real-time data from these tools within chats. Originally, connectors were a paid-tier-only feature, but with Sonnet 4.6 being the new default model, they are available for free users, too.

Testing Claude Sonnet 4.6

I thought it would be interesting to see how well Claude Sonnet 4.6 performs on the same tasks used for testing in our Claude Opus 4.6 guide, so we can directly compare the two. The tests were designed to involve multi-step tasks and probe mathematical and code-related reasoning.

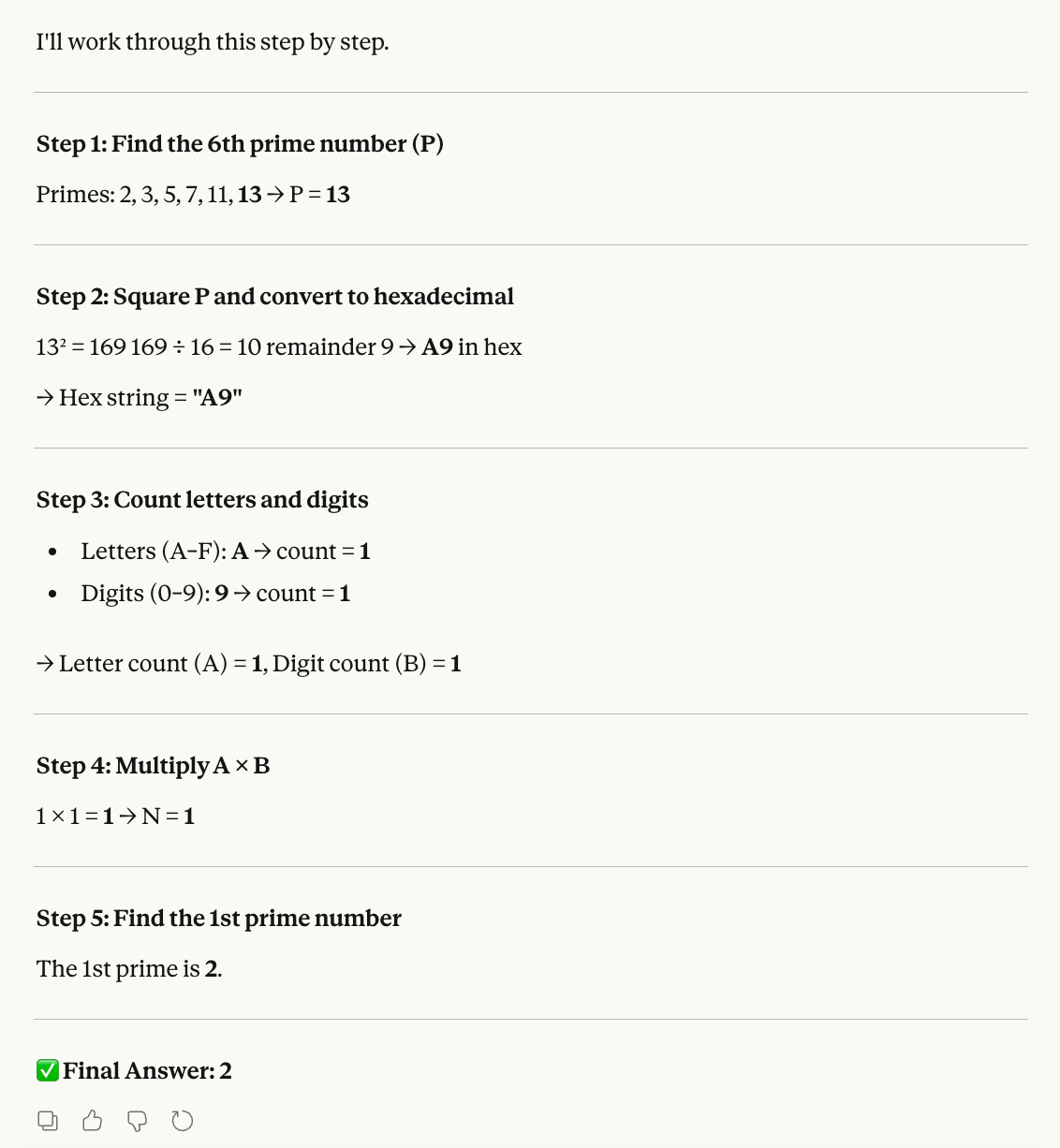

Test 1: Hex-to-decimal logical puzzle

The first test targets mathematical skills:

Step 1: Find the 6th prime number. Let this be P.

Step 2: Convert the square of P into hexadecimal.

Step 3: Count the letters (A–F) and digits (0–9) in that hex string. Let these be A and B.

Step 4: Multiply A × B. Let this be N.

Step 5: Find the Nth prime number.

Let’s calculate the result ourselves, so we can check whether the model’s answer is correct:

- The 6th prime is 13.

- 13 squared is 169.

- 169 is A9 in hex, which has 1 letter and 1 digit.

- 1 × 1 is 1.

- The first prime is 2.
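The manual calculation above can be double-checked with a few lines of Python. This is a minimal sketch; the helper nth_prime is a name introduced here for illustration and uses simple trial division, which is fine for numbers this small.

```python
def nth_prime(n: int) -> int:
    """Return the n-th prime (1-indexed) using trial division."""
    count, candidate = 0, 1
    while count < n:
        candidate += 1
        # A number is prime if no divisor up to its square root divides it.
        if all(candidate % d for d in range(2, int(candidate ** 0.5) + 1)):
            count += 1
    return candidate


p = nth_prime(6)                                   # Step 1
hex_string = format(p ** 2, "X")                   # Step 2
letters = sum(ch.isalpha() for ch in hex_string)   # Step 3 (A)
digits = sum(ch.isdigit() for ch in hex_string)    # Step 3 (B)
n = letters * digits                               # Step 4
answer = nth_prime(n)                              # Step 5

print(p, hex_string, letters, digits, n, answer)   # 13 A9 1 1 1 2
```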

The idea behind this test was to confuse the model with tasks requiring different counting logics and number formats. Like Opus 4.6, Sonnet 4.6 successfully tackles each individual step and comes to the right conclusion:

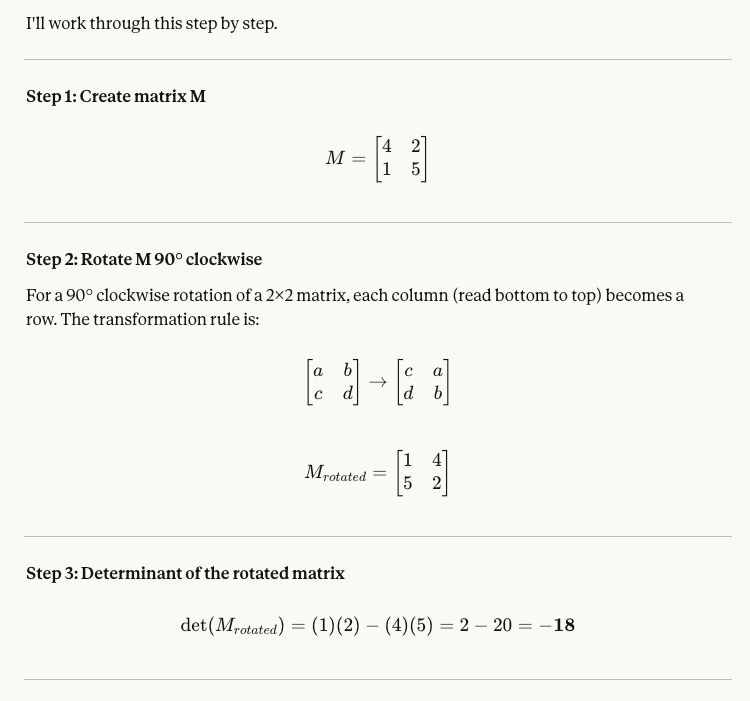

Test 2: Rotating a matrix

The next test is aimed at spatial reasoning, a notorious weak point of many LLMs:

Step 1: Create a 2×2 matrix M with top row [4, 2] and bottom row [1, 5].

Step 2: Rotate M 90 degrees clockwise.

Step 3: Calculate the determinant of the rotated matrix.

Step 4: Cube that determinant.

Step 5: Subtract the 13th Fibonacci number from the result.

The correct answer is -6,065:

- The initial matrix is [[4, 2], [1, 5]].

- The rotated matrix is [[1, 4], [5, 2]].

- The determinant is the difference of both diagonal products, which is -18 in this case.

- If we cube -18 we get -5,832.

- -5,832 - 233 is -6,065.
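The same steps can be verified in plain Python. This is a small sketch with helper names chosen for illustration; it assumes the Fibonacci sequence starts with F(1) = F(2) = 1, which is the convention that makes the 13th number 233.

```python
def rotate_clockwise(matrix):
    """Rotate a square matrix 90 degrees clockwise."""
    # Reverse the row order, then transpose.
    return [list(row) for row in zip(*matrix[::-1])]


def det2(m):
    """Determinant of a 2x2 matrix."""
    return m[0][0] * m[1][1] - m[0][1] * m[1][0]


def fibonacci(n):
    """Return the n-th Fibonacci number, with F(1) = F(2) = 1."""
    a, b = 0, 1
    for _ in range(n):
        a, b = b, a + b
    return a


M = [[4, 2], [1, 5]]
rotated = rotate_clockwise(M)        # [[1, 4], [5, 2]]
determinant = det2(rotated)          # -18
result = determinant ** 3 - fibonacci(13)

print(rotated, determinant, result)  # [[1, 4], [5, 2]] -18 -6065
```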

Sonnet 4.6 doesn’t have any problem with the task. It is able to grasp the spatial context of the matrix and rotate it correctly, and dealing with negative numbers does not cause any problems either:

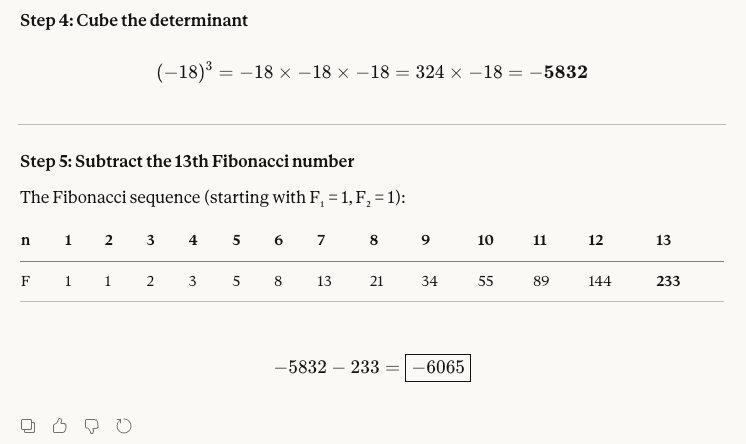

Test 3: Code debugging

Finally, let’s target code debugging, one of the supposed strengths of Sonnet 4.6. The test is designed to check how context-aware the model is when confronted with a specific bug.

A developer wrote this Python function to compute a running average:

    def running_average(data, window=3):
        result = []
        for i in range(len(data)):
            start = max(0, i - window + 1)
            chunk = data[start:i + 1]
            result.append(round(sum(chunk) / window, 2))
        return result

When called with running_average([10, 20, 30, 40, 50]), the first two values in the output seem wrong. Why? Please help me fix what is wrong!

The issue in this code snippet is that the function always divides by window (3), even when the chunk at the start of the list contains fewer than 3 elements. The output of the buggy code is [3.33, 10.0, 20.0, 30.0, 40.0], but the first two values should be 10.0 and 15.0, since those chunks contain only 1 and 2 elements, respectively, and should be divided by those numbers. Therefore, the fix is to divide by len(chunk) instead of window.
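For reference, here is the function with the fix described above applied. The only change is the divisor; everything else matches the prompt.

```python
def running_average(data, window=3):
    """Running average that handles the shorter chunks at the start."""
    result = []
    for i in range(len(data)):
        start = max(0, i - window + 1)
        chunk = data[start:i + 1]
        # Divide by the actual chunk length, not the nominal window size.
        result.append(round(sum(chunk) / len(chunk), 2))
    return result


fixed = running_average([10, 20, 30, 40, 50])
print(fixed)  # [10.0, 15.0, 20.0, 30.0, 40.0]
```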

This test is neat because it targets one weak spot of LLMs: they often trace the loop flawlessly yet still accept the output as correct. The reason is that they see the calculations executed step by step without error, but don’t consider what the function is supposed to do. Only if the model can connect the function’s purpose to its execution can it spot the bug.

Again, the model passes the test. Of course, this was just a small selection of tests you could run with the model, but at least in those examples, Sonnet 4.6 performs on par with Opus 4.6.

Claude Sonnet 4.6 Benchmarks

With the high frequency of models dropping left and right lately, we are already used to a lot of movement in the first spots of each benchmark’s leaderboard. Still, the first results of Claude Sonnet 4.6 across multiple LLM benchmarks don’t fail to impress, especially considering it’s not Anthropic’s flagship model.

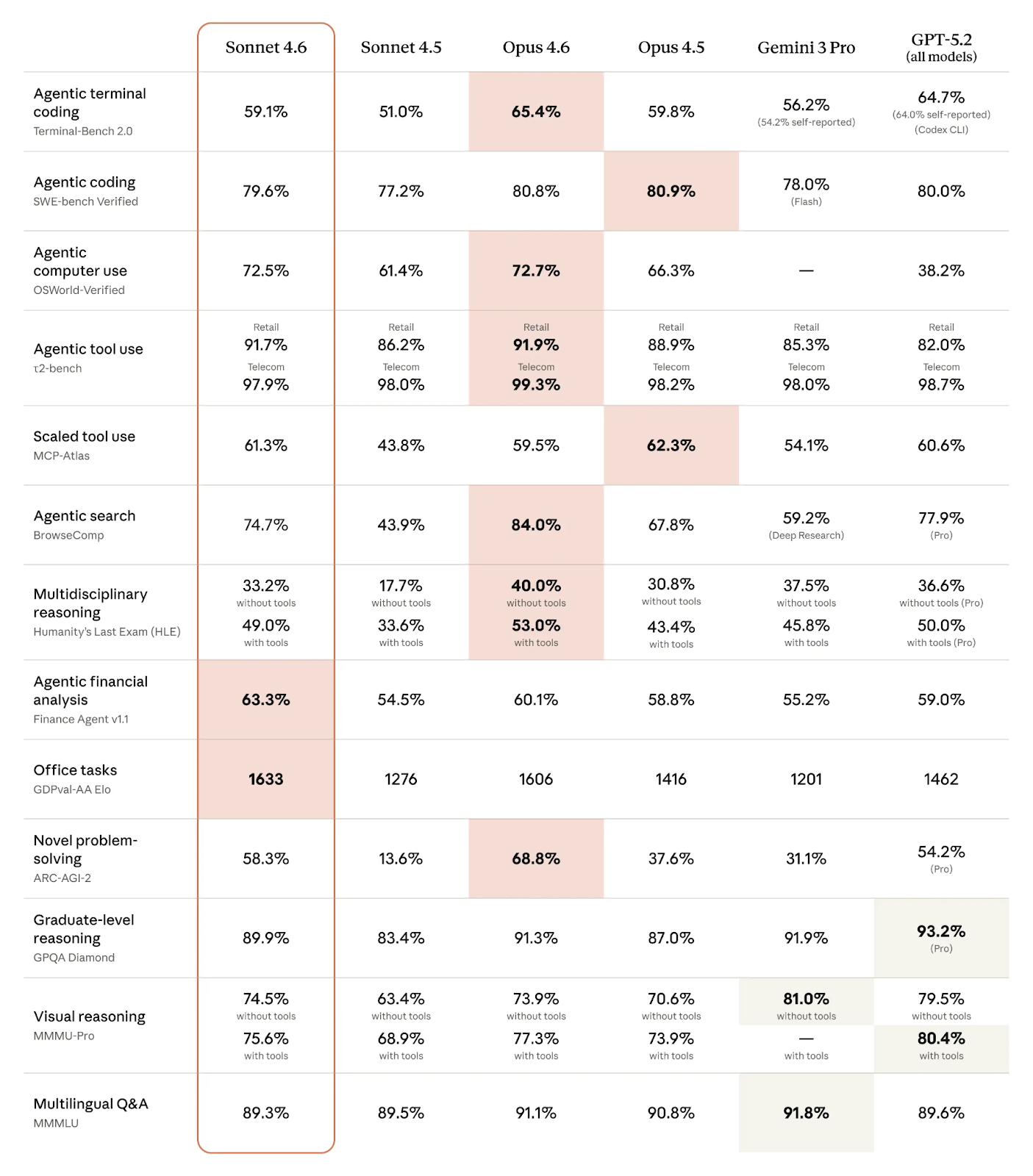

Benchmark scores of Claude Sonnet 4.6 and competitors (Source: Anthropic)

As we can see from the table, Claude Sonnet 4.6 does really well in agentic benchmarks:

- Agentic computer use: With an OSWorld-Verified score of 72.5%, it takes second spot only very slightly behind Claude Opus 4.6 (72.7%), while significantly outperforming OpenAI’s new flagship model GPT-5.3 Codex (64.7%).

- Agentic coding: Claude Sonnet 4.6 reaches 79.6% on SWE-bench Verified. All recent Claude and competitor models are roughly on par, since they all gravitate towards a score of around 80%.

- Agentic terminal coding: A significant improvement over Sonnet 4.5 (59.1% instead of 51% in Terminal-Bench 2.0), but a bit behind Opus 4.6 (65.4%) and well behind GPT-5.3 Codex (75.1%).

What’s especially notable is that Anthropic seems to outrun the competition in specific domain-related agentic tasks:

- Agentic financial analysis: Here, Claude Sonnet 4.6 takes the top spot with 63.3% in Finance Agent v1.1, even outperforming Opus 4.6 (60.1%).

- Office tasks: Another benchmark where Sonnet 4.6 takes the first place with an Elo of 1633 in GDPval-AA, again putting Opus 4.6 in second place (1606).

How to Access Claude Sonnet 4.6

You can use Claude Sonnet 4.6 now through multiple channels. Here’s how to access it:

Chat access

Sonnet 4.6 is available through the Claude.ai web chat interface, its iOS and Android apps, and the macOS desktop app with Claude Cowork.

Across all these platforms, it is the new default model, even for the free tier. That means file creation, connectors, skills, and context compaction are now available for all users.

API access

Developers can use Claude Sonnet 4.6 via the Anthropic API with the model ID claude-sonnet-4-6. The pricing stays the same compared to its predecessor: one million input tokens cost $3, one million output tokens $15.
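To get a feel for what these rates mean in practice, here is a minimal cost estimate. It assumes the flat per-token rates quoted above and ignores any other pricing factors; the function name and the example token counts are made up for illustration.

```python
INPUT_USD_PER_MILLION = 3.00    # rate quoted in this article
OUTPUT_USD_PER_MILLION = 15.00  # rate quoted in this article


def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    """Estimate the cost of one request in USD at the flat rates above."""
    total = (input_tokens * INPUT_USD_PER_MILLION
             + output_tokens * OUTPUT_USD_PER_MILLION)
    return total / 1_000_000


# Hypothetical request: 200,000 input tokens and 4,000 output tokens.
cost = estimate_cost(200_000, 4_000)
print(f"${cost:.2f}")  # $0.66
```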

For enterprise-scale deployment, Sonnet 4.6 is available across many different cloud platforms, such as AWS Bedrock or Google Vertex AI, each with custom pricing.

Coding tools

Claude Sonnet 4.6 now also powers Claude Code, and is the default model for Pro and Team tier accounts, while higher tiers default to Opus 4.6. If you want to see some examples of what you can build with it, I recommend checking out our tutorials on Claude Code hooks and building plugins for Claude Code.

Sonnet 4.6 can also be used with IDEs and other coding assistants, such as Cursor or Roo Code.

Claude Sonnet 4.6 vs Opus 4.6

In many domains, the difference between Sonnet 4.6 and Opus 4.6 is so marginal that you could call it a tie between the two. This is especially true for many agentic tasks, such as agentic coding, agentic computer use, and agentic tool use. Sonnet 4.6 even outperforms Opus 4.6 in agentic financial analysis, office tasks, and scaled tool use.

As one would expect, it is the tasks involving heavy reasoning or creativity where Opus 4.6 really shines, such as novel problem-solving and multidisciplinary reasoning. In the agentic domain, Opus 4.6 is better at agentic terminal coding and agentic search.

Choosing the right Claude model

For most coding and agentic tasks, and for those where instruction-following is key, Claude Sonnet 4.6 is the better choice because it delivers performance that is basically identical at significantly lower cost. Additionally, it has an edge in terms of speed.

Teams that rely on expert-level reasoning or multi-agent workflows should instead choose Claude Opus 4.6. Especially for research, complex migrations, or high-stakes expert work, Opus 4.6 excels.

Final Thoughts

With Claude Sonnet 4.6, Anthropic continues to emphasize code, agents, and computer use. Besides a huge performance increase over its predecessor, it makes features like connectors and adaptive thinking available to all users, even in the free tier.

The first impressions and benchmark results are really good, and it feels like a game-changer because it offers (near) Opus-level performance without the heavy price tag. For many everyday workflows, it’s even hard to argue why you should use Anthropic’s flagship model instead. That said, for tasks that involve heavy reasoning, Claude Opus 4.6 remains the better choice.

It will be interesting to see how long Claude Sonnet 4.6 can remain at the top of the benchmark leaderboards and how Anthropic’s competitors respond to the release.

We’ve discussed agentic tasks throughout this article. If you want to learn more about using models like Claude Sonnet 4.6 in this kind of workflow, I recommend taking our AI Agent Fundamentals skill track.

Claude Sonnet 4.6 FAQs

What is Claude Sonnet 4.6?

Claude Sonnet 4.6 is Anthropic's latest mid-tier AI model, released on February 15, 2026. It offers upgrades in coding, computer use, long-context reasoning, agent planning, knowledge work, and design. Its main selling point is approaching the performance of Opus 4.6 at a lower cost, which makes it suitable for daily use, production workflows, and complex tasks.

What are the key new features of Claude Sonnet 4.6?

Claude Sonnet 4.6 includes a 1M token context window, adaptive thinking for dynamic reasoning, and context compaction to extend effective context length. It supports enhanced computer use for tasks like navigating spreadsheets or web forms without APIs, and improved tool integration like web search with code execution.

How does Claude Sonnet 4.6 perform in coding and benchmarks?

In Claude Code, users prefer Sonnet 4.6 over Sonnet 4.5 about 70% of the time and over Opus 4.5 about 59% of the time, citing improved instruction following, fewer hallucinations, and consistent multi-step task performance. It excels on many agent-related benchmarks like OSWorld-Verified (72.5%) and SWE-bench Verified (79.6%), and takes first place in agentic office tasks.

How can I access Claude Sonnet 4.6?

Claude Sonnet 4.6 is available now on all Claude plans (free tier default), Claude.ai, Claude Cowork, Claude Code, via Anthropic API (as claude-sonnet-4-6), and on platforms like Amazon Bedrock and GitHub Copilot. Pricing matches Sonnet 4.5 at $3 input/$15 output per million tokens.

When should I use Claude Sonnet 4.6 vs Opus 4.6?

Use Claude Sonnet 4.6 for most everyday coding and automation workflows. It's nearly as capable as Claude Opus 4.6, but faster and much cheaper. Choose Opus 4.6 when you need high-stakes expert reasoning (91.3% GPQA), long-context retrieval, or complex multi-agent work where depth justifies the premium.

Data Science Editor @ DataCamp | Forecasting things and building with APIs is my jam.