
Data management has seen the emergence of new solutions to address the limitations of traditional architectures like data lakes and data warehouses. While both architectures have been instrumental in data storage and analytics, they can present challenges in addressing modern data processing needs.

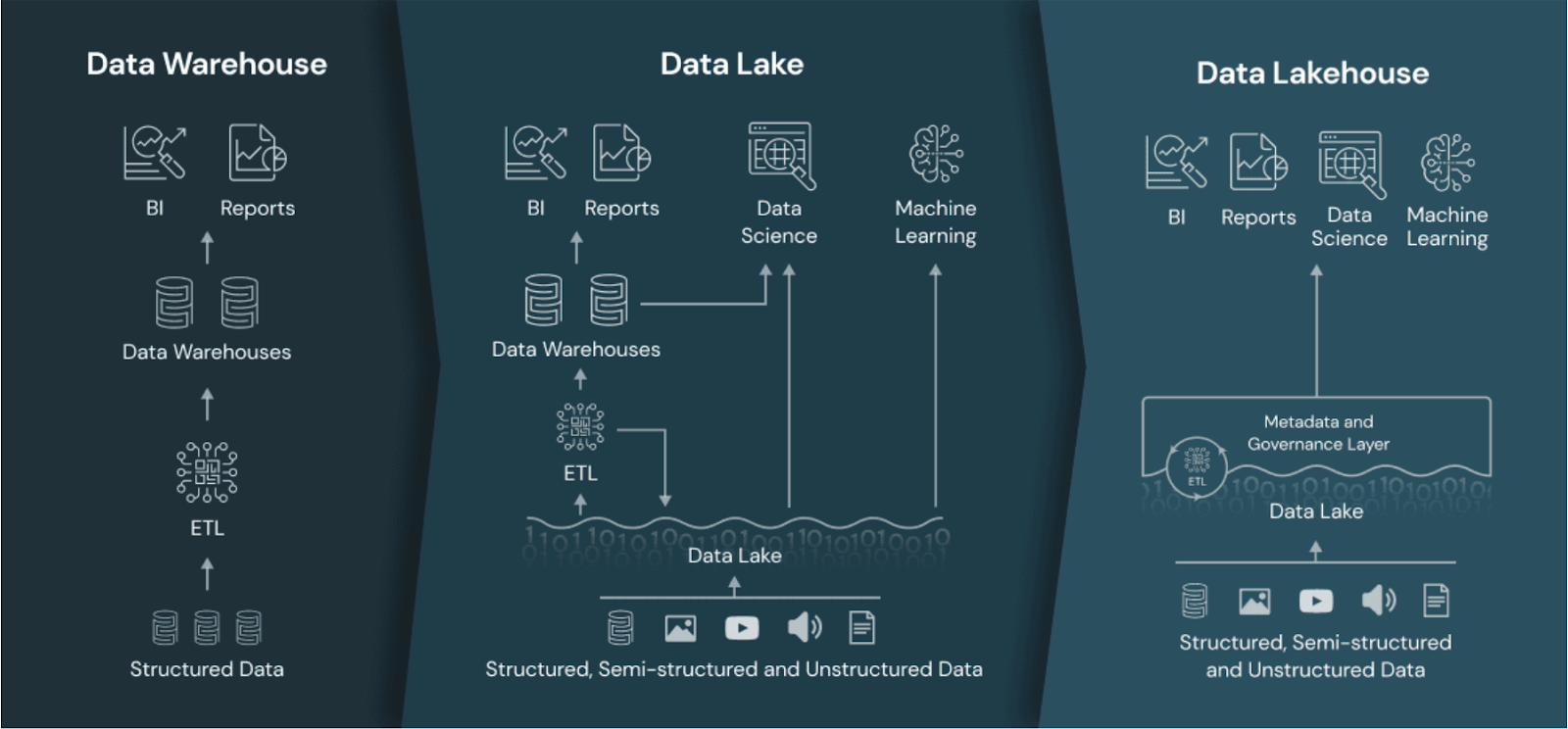

Despite being scalable and flexible, data lakes often face governance and performance issues. On the other hand, data warehouses, although powerful for analytics, are costly and less flexible. The data lakehouse aims to solve these problems by combining the strengths of data lakes and warehouses while minimizing their weaknesses.

In this guide, I’ll walk you through what a data lakehouse is, how it differs from traditional data architectures, and why it is gaining popularity among data professionals looking for a unified data management solution.

What is a Data Lakehouse?

A data lakehouse is an architecture that combines the scalability and low-cost storage of data lakes with the performance, reliability, and governance capabilities of data warehouses.

By integrating the strengths of both architectures, a data lakehouse offers a unified platform for storing, processing, and analyzing all types of data—structured, semi-structured, and unstructured.

The unified approach allows for greater flexibility in data processing and supports a wide range of analytics use cases, from business intelligence to machine learning.

The data lakehouse compared to the data warehouse and data lake. Image source: Databricks.

Data Lakehouse vs. Data Lake vs. Data Warehouse

Before exploring the data lakehouse architecture in more detail, it’s important to understand how it compares to data warehouses and data lakes.

Data warehouse

Data warehouses are built to store structured data and provide high-performance analytics and reporting.

One of their biggest strengths is the ability to execute fast queries, which makes them well-suited for business intelligence and reporting use cases.

Data warehouses use a well-defined schema that supports strong data governance. This ensures that data is accurate, consistent, and easily accessible for analysis.

However, these advantages come at a cost. Data warehouses are typically expensive to maintain because they often rely on specialized hardware or proprietary storage and compute. They also require extensive data transformation before data can be loaded, usually through ETL (extract, transform, load) pipelines.

The rigid structure of data warehouses also makes them less suitable for handling unstructured data or quickly adapting to data format changes. This lack of flexibility can be a significant limitation for organizations aiming to work with rapidly evolving data sources or data that doesn’t conform to a predefined schema, such as text, images, or audio.

To learn more about data warehousing fundamentals, check out the Introduction to Data Warehousing free course.

Data lake

Data lakes are designed to store large amounts of raw data of any type, including semi-structured and unstructured data. Their primary advantage is scalability, which makes them an attractive option for teams dealing with diverse data types and massive data volumes.

The flexibility of data lakes allows you to store raw data in its native format, making them ideal for data exploration and data science projects.

However, data lakes often struggle with data governance and data quality. Since data is stored in its raw form without much structure, ensuring consistent data quality can be challenging.
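To make the data quality problem concrete, here is a minimal Python sketch, assuming raw events land in the lake as JSON lines that nothing validated on write, so each reader has to apply a schema at read time (schema-on-read). The event fields and the `read_with_schema` helper are hypothetical.

```python
import json

# Raw JSON lines as they might land in a data lake: nothing validated them on write.
RAW_EVENTS = [
    '{"user_id": 1, "amount": 19.99}',
    '{"user_id": "2", "amount": "12.50"}',  # numbers written as strings
    '{"user": 3, "amount": 5.0}',           # field renamed by an upstream change
    '{"user_id": 4}',                        # field missing entirely
]

# The schema every reader has to apply at read time (schema-on-read).
SCHEMA = {"user_id": int, "amount": float}


def read_with_schema(lines, schema):
    """Parse raw lines and coerce them to `schema`; return (good_rows, bad_lines)."""
    good, bad = [], []
    for line in lines:
        record = json.loads(line)
        try:
            row = {name: cast(record[name]) for name, cast in schema.items()}
        except (KeyError, TypeError, ValueError):
            bad.append(line)  # the problem only surfaces now, long after the write
            continue
        good.append(row)
    return good, bad


good_rows, bad_lines = read_with_schema(RAW_EVENTS, SCHEMA)
print(len(good_rows), "usable rows,", len(bad_lines), "records need cleanup")
```

Every consumer has to repeat this coercion and decide what to do with rejected records, so different teams can end up with different answers from the same raw data.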

The lack of governance can lead to what is commonly known as a "data swamp," where valuable insights are buried under poorly organized data.

Performance is another critical concern in data lakes, particularly for executing complex analytical queries. Data lakes are not optimized for quick querying or for combining different data types, such as images, text, and audio.

Data lakehouse

A data lakehouse aims to combine the best of data lakes and data warehouses. It offers a more balanced solution by integrating the scalability and low-cost storage capabilities of data lakes with the performance and governance strengths of data warehouses.

In principle, a lakehouse lets you store structured and unstructured data at a lower cost while still providing the capabilities needed for high-performance analytics.

Data lakehouses support ACID transactions, so updates and deletes can be applied reliably, a guarantee that traditional data lakes often lack. This improves data integrity and governance, making data lakehouses more suitable for enterprise-grade applications.

Data lakehouses also support real-time data processing, letting organizations ingest streaming data and derive insights as events arrive, which matters for use cases such as fraud detection and inventory tracking.

Features and Components of a Data Lakehouse

How is it possible to have the best of both worlds in a single platform? In this section, I’ll explain the components that make the lakehouse architecture possible.

1. Separation of storage and compute with unified data management

In a data lakehouse, storage and compute are separate but tightly integrated, allowing flexible, scalable resource use.

Data is stored in a cost-effective cloud object store, while compute resources can be scaled independently based on workload demands. This approach reduces the need to move data between systems, enabling users to process data in place without extensive extraction and loading.

By integrating storage and compute in this way, data lakehouses streamline data management, improve efficiency, and reduce latency, leading to faster insights.

Dynamic resource allocation also enables better cost optimization and ensures that compute resources are used effectively.
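As a rough illustration of storage and compute scaling independently, the sketch below models the object store as an in-memory dictionary of immutable files and the compute layer as a pool of worker threads whose size is chosen per query. `OBJECT_STORE`, `scan_file`, and `run_query` are hypothetical names; a real lakehouse would use an object store such as Amazon S3 and an engine such as Apache Spark.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical stand-in for cloud object storage: immutable files keyed by path.
OBJECT_STORE = {
    f"sales/part-{i:04d}.csv": "\n".join(f"{i},{amount}" for amount in range(1, 101))
    for i in range(8)
}


def scan_file(path):
    """Read one file in place and return a partial aggregate (sum of the amount column)."""
    total = 0
    for line in OBJECT_STORE[path].splitlines():
        _, amount = line.split(",")
        total += int(amount)
    return total


def run_query(num_workers):
    """Sum amounts across all files; compute size is picked per query, storage is untouched."""
    paths = sorted(OBJECT_STORE)
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        return sum(pool.map(scan_file, paths))


# Scaling compute up or down changes how the work is parallelized, not where data lives.
print(run_query(num_workers=1), run_query(num_workers=4))
```

Because the files never move, the same data can serve a small cluster for ad hoc queries and a larger one for a heavy batch job, each sized only for the work it does.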

2. Schema enforcement and governance

Data lakehouses enforce schemas to maintain data quality while offering governance features for data compliance.

Schema enforcement rejects writes whose columns or data types do not match the table's schema, so data stays in a consistent structure that is easy to query and analyze. This helps prevent data inconsistencies and supports better data quality across the organization.
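Here is a minimal Python sketch of schema enforcement on write, assuming a table whose schema is a mapping of column names to Python types. The `Table` class and `SchemaMismatchError` are hypothetical; table formats such as Delta Lake perform this kind of check inside their own write path rather than in user code.

```python
from dataclasses import dataclass, field


class SchemaMismatchError(ValueError):
    """Raised when a write does not match the table schema."""


@dataclass
class Table:
    """Hypothetical in-memory table that enforces its schema on every append."""
    schema: dict  # column name -> Python type
    rows: list = field(default_factory=list)

    def append(self, batch):
        # Validate the whole batch first so a bad row cannot leave a partial write.
        for i, row in enumerate(batch):
            if set(row) != set(self.schema):
                raise SchemaMismatchError(
                    f"row {i}: columns {sorted(row)} != {sorted(self.schema)}"
                )
            for name, expected in self.schema.items():
                if not isinstance(row[name], expected):
                    raise SchemaMismatchError(
                        f"row {i}: {name!r} is {type(row[name]).__name__}, "
                        f"expected {expected.__name__}"
                    )
        self.rows.extend(batch)


orders = Table(schema={"order_id": int, "amount": float})
orders.append([{"order_id": 1, "amount": 19.99}])

try:
    orders.append([{"order_id": 2, "amount": 5.0}, {"order_id": "3", "amount": 7.5}])
except SchemaMismatchError as err:
    print("rejected:", err)

print(len(orders.rows))
```

Validating the whole batch before appending mirrors the all-or-nothing behavior you want from a lakehouse write: the second batch is rejected entirely, so the table still holds a single row.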

Governance features in data lakehouses enable you to manage data access, lineage, and compliance. With built-in governance tools, data lakehouses can support regulatory requirements and protect sensitive data. This is particularly important for industries such as healthcare and finance, where data privacy and compliance are critical.
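The sketch below shows one simple way column-level access control, masking, and audit logging can fit together, assuming a hypothetical policy that maps each role to the columns it may read in clear text. `POLICY`, `read_as`, and the roles are illustrative only and do not reflect the API of any particular governance tool.

```python
# Hypothetical policy: the columns each role may read in clear text.
POLICY = {
    "analyst": {"patient_id", "visit_date", "diagnosis_code"},
    "billing": {"patient_id", "visit_date", "insurance_number"},
}

ROWS = [
    {"patient_id": 101, "visit_date": "2024-03-01",
     "diagnosis_code": "E11.9", "insurance_number": "INS-5521"},
]

MASK = "***"
AUDIT_LOG = []  # one entry per granted read, so access can be reviewed later


def read_as(role, rows):
    """Return rows with every column the role is not granted replaced by MASK."""
    if role not in POLICY:
        raise PermissionError(f"role {role!r} has no access to this table")
    allowed = POLICY[role]
    AUDIT_LOG.append({"role": role, "rows": len(rows)})
    return [
        {col: (val if col in allowed else MASK) for col, val in row.items()}
        for row in rows
    ]


print(read_as("analyst", ROWS))
print(read_as("billing", ROWS))
```

Keeping the policy next to the data, rather than in each application, is what lets one set of rules apply to every engine and user that reads the table.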

3. Support for ACID transactions

ACID (atomicity, consistency, isolation, durability) transactions ensure data reliability, so you can apply updates and deletes with confidence.

In a data lakehouse, ACID transactions provide the same level of data integrity expected from a traditional data warehouse but with the added flexibility of handling unstructured data.

The ability to support ACID transactions means that data lakehouses can be used for mission-critical workloads where data accuracy is essential. This feature makes data lakehouses an appealing choice for enterprises that must maintain data consistency across different data operations.
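Table formats such as Delta Lake implement ACID guarantees on top of plain files with a transaction log: each commit is a numbered log entry, and a commit only succeeds if no other writer has already claimed that version number. The toy Python sketch below imitates that idea on a local filesystem, assuming `os.link` as the atomic "create only if absent" primitive. `TransactionLog` and its methods are hypothetical and far simpler than the real Delta Lake protocol.

```python
import json
import os
import tempfile


class CommitConflict(Exception):
    """Raised when another writer has already committed the version we wanted."""


class TransactionLog:
    """Toy Delta-style log: each commit is a numbered JSON file of added/removed data files."""

    def __init__(self, directory):
        self.directory = directory

    def _path(self, version):
        return os.path.join(self.directory, f"{version:020d}.json")

    def latest_version(self):
        versions = [int(name[:-5]) for name in os.listdir(self.directory)
                    if name.endswith(".json")]
        return max(versions, default=-1)

    def commit(self, read_version, actions):
        """Publish version read_version + 1 atomically, or fail if it already exists."""
        new_version = read_version + 1
        fd, tmp_path = tempfile.mkstemp(dir=self.directory, suffix=".tmp")
        try:
            with os.fdopen(fd, "w") as f:
                json.dump(actions, f)  # write the full entry before publishing it
            # os.link fails with FileExistsError if the target exists, so only one
            # writer can claim a version, and readers never see a half-written entry.
            os.link(tmp_path, self._path(new_version))
        except FileExistsError:
            raise CommitConflict(f"version {new_version} already committed") from None
        finally:
            os.remove(tmp_path)
        return new_version

    def snapshot(self, version=None):
        """Replay commits 0..version to compute the set of live data files."""
        if version is None:
            version = self.latest_version()
        live = set()
        for v in range(version + 1):
            with open(self._path(v)) as f:
                actions = json.load(f)
            live -= set(actions.get("remove", []))
            live |= set(actions.get("add", []))
        return live


log = TransactionLog(tempfile.mkdtemp())
v0 = log.commit(-1, {"add": ["part-000.parquet"]})

# Writers A and B both read version 0, then race to commit version 1.
v1 = log.commit(v0, {"add": ["part-001.parquet"]})  # A wins
try:
    log.commit(v0, {"remove": ["part-000.parquet"], "add": ["part-002.parquet"]})  # B
except CommitConflict as err:
    print("writer B must retry:", err)

print(sorted(log.snapshot()))  # ['part-000.parquet', 'part-001.parquet']
```

Writer B's conflict is detected instead of silently overwriting writer A's change; a real system would have B re-read the latest version, check whether its change still applies, and retry. Readers that replay the log only ever see fully committed versions, which is where isolation comes from.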

4. Real-time data processing

Data lakehouses can process data in real time, enabling use cases like real-time analytics and streaming insights. Real-time data processing allows teams to make data-driven decisions faster, improving responsiveness and adaptability.

For example, real-time data processing can be used to monitor customer interactions, track inventory levels, or detect anomalies in financial transactions. By enabling immediate insights, data lakehouses help you stay ahead of changing market conditions and respond proactively to new opportunities.
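A common pattern behind this is incremental processing: a streaming job remembers the last table version it handled and, on each trigger, reads only the data committed since then. The Python sketch below assumes a hypothetical append-only table and a fixed amount threshold for flagging suspicious financial transactions; production systems would typically use a streaming engine such as Spark Structured Streaming and a real anomaly model.

```python
from dataclasses import dataclass, field


@dataclass
class AppendOnlyTable:
    """Hypothetical table where each appended micro-batch becomes a new version."""
    versions: list = field(default_factory=list)

    def append(self, rows):
        self.versions.append(list(rows))
        return len(self.versions) - 1  # version number of the new batch


@dataclass
class AnomalyDetector:
    """Incremental consumer that only reads versions newer than its checkpoint."""
    threshold: float
    checkpoint: int = -1  # last version already processed

    def poll(self, table):
        alerts = []
        for version in range(self.checkpoint + 1, len(table.versions)):
            for txn in table.versions[version]:
                if txn["amount"] > self.threshold:
                    alerts.append(txn["id"])
            # Record progress; a real job would store this checkpoint durably.
            self.checkpoint = version
        return alerts


payments = AppendOnlyTable()
detector = AnomalyDetector(threshold=1000.0)

payments.append([{"id": "t1", "amount": 40.0}, {"id": "t2", "amount": 2500.0}])
print(detector.poll(payments))  # ['t2']
payments.append([{"id": "t3", "amount": 15.0}, {"id": "t4", "amount": 9000.0}])
print(detector.poll(payments))  # ['t4'], the first batch is not rescanned
print(detector.poll(payments))  # [] because nothing new has arrived
```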

5. Multi-cloud and hybrid architecture support

Data lakehouses can operate in multi-cloud or hybrid environments, offering flexibility and scalability.

Multi-cloud support allows data teams to avoid vendor lock-in and leverage the best services from different cloud providers. Hybrid architecture support ensures that on-premises systems integrate with cloud environments, providing a unified data management experience.

This flexibility particularly benefits teams with complex infrastructure needs or those undergoing cloud migration.

Benefits of Using a Data Lakehouse

In this section, we will discuss the benefits of using a data lakehouse to manage diverse data processing needs.

1. Scalability and flexibility

Data lakehouses inherit the scalability of data lakes, making them capable of handling growing data needs. You can easily add more data without worrying about storage limitations, ensuring your data architecture grows with the business.

For example, open table formats such as Apache Hudi, Delta Lake, and Apache Iceberg can manage tables spanning thousands of files or more in object storage systems like Amazon S3, Azure Blob Storage, or Google Cloud Storage.

Flexibility is another significant benefit, as data lakehouses support structured (e.g., transaction records) and unstructured data (e.g., log files, images, and videos). This means you can store all your data in one place, regardless of the format, and use it for various analytics and machine learning use cases.

2. Cost efficiency

Data lakehouses store data at a lower cost than data warehouses while still supporting advanced analytics.

For instance, data lakehouses built on cloud object storage (like Amazon S3 or Google Cloud Storage) take advantage of its cost-effective storage tiers. Combined with columnar file formats such as Apache Parquet and ORC, which encode and compress data to minimize storage costs, this allows you to achieve substantial savings.

Because a lakehouse serves both storage and analytics from one platform, you don’t need to maintain a separate data lake and data warehouse or pay to store duplicate copies of the same data, which makes data lakehouses an attractive option from a cost perspective.
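Part of the savings comes from how columnar formats encode data before compressing it. Parquet and ORC, for instance, can dictionary-encode a column with few distinct values and then run-length encode the resulting indices. The pure-Python sketch below illustrates the idea only; it is not the Parquet or ORC on-disk format, and the function names are hypothetical.

```python
def dictionary_encode(values):
    """Map each value to an index into a list of the column's distinct values."""
    dictionary, index_of, indices = [], {}, []
    for value in values:
        if value not in index_of:
            index_of[value] = len(dictionary)
            dictionary.append(value)
        indices.append(index_of[value])
    return dictionary, indices


def run_length_encode(indices):
    """Collapse consecutive repeats into [index, run_length] pairs."""
    runs = []
    for i in indices:
        if runs and runs[-1][0] == i:
            runs[-1][1] += 1
        else:
            runs.append([i, 1])
    return runs


def decode(dictionary, runs):
    """Reverse both steps to recover the original column."""
    return [dictionary[i] for i, length in runs for _ in range(length)]


# One column stored contiguously, as a columnar format would lay it out.
country = ["DE", "DE", "DE", "FR", "FR", "US", "US", "US", "US", "US"]
dictionary, indices = dictionary_encode(country)
runs = run_length_encode(indices)
print(dictionary)  # ['DE', 'FR', 'US']
print(runs)        # [[0, 3], [1, 2], [2, 5]]
```

Storing a column's values next to each other is what makes this effective: ten repeated strings shrink to three dictionary entries and three (index, run length) pairs, and a general-purpose compressor can then shrink the result further.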

3. Faster time-to-insights

Unified data storage and processing capabilities reduce the time needed to derive insights. Because analytics engines query the lakehouse’s storage directly, data does not have to be copied from a data lake into a separate warehouse first, which streamlines workflows and minimizes latency.

Technologies like Apache Spark and Databricks, for example, process data directly within the lakehouse rather than moving it to separate analytics environments.

By having all data in one place and processing it efficiently, you can quickly generate insights that drive better decision-making. This faster time-to-insight is particularly valuable for industries where timely information is crucial, such as finance, retail, and healthcare.

4. Data governance and security

Schema enforcement and data governance features help teams meet data quality and compliance standards. Data lakehouses provide tools to manage data access, audit trails, and data lineage, which are essential for maintaining data integrity and meeting regulatory requirements.

Tools like AWS Lake Formation, Microsoft Purview (formerly Azure Purview), and Apache Ranger provide centralized control over data access, permissions, and audit trails, allowing you to manage data securely and prevent unauthorized access.

These governance and security features make data lakehouses suitable for enterprises that need to comply with data privacy regulations, such as GDPR or HIPAA. By ensuring that data is properly managed and protected, data lakehouses help build trust in the data and its insights.

Data Lakehouse Use Cases

In this section, let’s review some of the most common use cases for the data lakehouse.

Modern data analytics

Data teams use data lakehouses for real-time analytics, business intelligence, and machine learning workflows. By providing a unified data platform, data lakehouses enable professionals to analyze historical and real-time data, gaining insights that drive better business outcomes.

For example, retailers can use data lakehouses to analyze sales data in real time, identify trends, and adjust their inventory accordingly. Financial institutions can leverage data lakehouses to perform risk analysis and fraud detection, combining historical and real-time transaction data for a comprehensive view.

Check out the free Understanding Modern Data Architecture course to learn more about how modern data platforms work, from ingestion and serving to governance and orchestration.

AI and machine learning pipelines

Data lakehouses provide the infrastructure for building and scaling AI and ML models, with easy access to structured and unstructured data. Machine learning models often require a variety of data types, and data lakehouses make it possible to bring together all relevant data in one place.

This unified data access simplifies the process of training, testing, and deploying machine learning models. Data scientists can quickly experiment with different datasets, iterate on models, and scale them for production without having to worry about data silos or movement between systems.

Data-driven applications

Data lakehouses power data-driven applications that require real-time insights and decision-making capabilities. These applications often rely on up-to-date information to provide users with personalized experiences or automated recommendations.

For example, an e-commerce site’s recommendation engine can use a data lakehouse to analyze user behavior in real time and provide relevant product suggestions. Similarly, logistics companies can use data lakehouses to optimize delivery routes based on real-time traffic data and historical delivery patterns.

Popular Data Lakehouse Platforms

It’s time to learn about the technologies that make the data lakehouse architecture possible. In this section, I explain the most popular ones.

1. Databricks Lakehouse

Databricks pioneered the data lakehouse architecture, offering Delta Lake, an open-source storage layer, as a core component of its platform. Delta Lake adds reliability and performance improvements to data lakes, enabling ACID transactions and ensuring data quality.

Databricks Lakehouse combines the scalability of data lakes with the data management features of data warehouses, providing a unified platform for analytics and machine learning. Its integration with Apache Spark makes it an attractive choice for organizations leveraging big data processing capabilities.

If you want to learn about the Databricks Lakehouse platform and how it can modernize data architectures and improve data management processes, check out the free Introduction to Databricks course.

2. Google BigLake

Google’s BigLake integrates data lakehouse principles with its existing cloud ecosystem, providing a unified data storage and analytics platform. BigLake allows users to manage structured and unstructured data, making it easier to perform analytics across different data types.

Through its integration with Google Cloud services, BigLake provides a comprehensive solution for data teams looking to use cloud-native tools for their data needs. It supports both batch and real-time processing, making it suitable for a wide range of use cases.

3. AWS Lake Formation

AWS Lake Formation provides tools to set up a data lakehouse using AWS’s cloud services, offering seamless integration and management. With AWS Lake Formation, you can easily ingest, catalog, and secure data, creating a unified data lakehouse.

AWS Lake Formation also integrates with other AWS analytics services, such as Amazon Redshift and AWS Glue, providing a full suite of tools for data processing and analytics. This makes it an attractive option for organizations already using AWS for their infrastructure.

4. Snowflake

Snowflake’s architecture is evolving to support data lakehouse capabilities, allowing users to efficiently manage structured and semi-structured data. Snowflake provides high-performance analytics while also offering flexibility in data storage and management.

Snowflake’s data lakehouse features enable you to combine different data types and perform advanced analytics without needing multiple systems. Its cloud-native design and support for multi-cloud deployments make it a popular choice for teams looking to modernize their data infrastructure.

If you’re interested in Snowflake, check out the Introduction to Snowflake course.

5. Azure Synapse Analytics

Microsoft’s Azure Synapse Analytics combines big data and data warehousing capabilities into a unified data lakehouse solution.

Synapse enables teams to analyze both structured and unstructured data using a variety of tools, including SQL, Apache Spark, and Data Explorer. Its deep integration with other Azure services, such as Azure Data Lake Storage and Power BI, provides a seamless experience for end-to-end data processing, analytics, and visualization, making it an ideal option for organizations within the Azure ecosystem.

6. Apache Hudi

Apache Hudi is an open-source project that brings transactional capabilities to data lakes. It supports ACID transactions, upserts, and data versioning.

Hudi integrates well with cloud storage platforms like Amazon S3 and Google Cloud Storage, making it suitable for real-time analytics and machine learning pipelines. With support for popular big data engines like Apache Spark and Presto, Hudi offers flexibility for building a custom data lakehouse on open-source technology.
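To illustrate what an upsert means, the sketch below merges a batch of change records into a table keyed by a record key, keeping the record with the latest ordering value when the same key appears more than once. Hudi exposes similar ideas through its record key and precombine field settings, but the `upsert` function here is a hypothetical in-memory illustration, not Hudi’s API.

```python
def upsert(table, incoming, key="id", precombine="updated_at"):
    """Merge incoming records into `table` (a dict keyed by record key).

    New keys are inserted; for existing keys, the record with the larger
    precombine value wins, so late-arriving stale updates are ignored.
    """
    for record in incoming:
        current = table.get(record[key])
        if current is None or record[precombine] >= current[precombine]:
            table[record[key]] = record
    return table


customers = {
    1: {"id": 1, "email": "a@example.com", "updated_at": 10},
    2: {"id": 2, "email": "b@example.com", "updated_at": 10},
}
batch = [
    {"id": 2, "email": "b.new@example.com", "updated_at": 12},  # update
    {"id": 3, "email": "c@example.com", "updated_at": 11},      # insert
    {"id": 1, "email": "stale@example.com", "updated_at": 5},   # late, older event
]
upsert(customers, batch)
print({k: v["email"] for k, v in sorted(customers.items())})
# {1: 'a@example.com', 2: 'b.new@example.com', 3: 'c@example.com'}
```

Without a record key, an append-only lake would simply accumulate all three change records, and every reader would have to work out which one is current.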

7. Dremio

Dremio provides an open data lakehouse platform for fast analytics and BI workloads directly on cloud data lakes.

With its SQL-based approach and integration with Apache Arrow, Dremio allows teams to perform high-performance analytics without data movement. It’s beneficial for organizations looking to support SQL-based queries on their data lakes, making it a popular choice for data teams prioritizing speed and efficiency.

Data Lakehouse Platforms Comparison

As you can imagine, not all technologies supporting the data lakehouse architecture are created equal. The following table compares them across several criteria:

| Platform | Primary Storage Type | Supports Structured & Unstructured Data | Compute Engine | ACID Transactions | Real-time Processing | Integration with ML/AI | Ideal Use Case |
|---|---|---|---|---|---|---|---|
| Databricks Lakehouse | Delta Lake (Apache Parquet) | Yes | Apache Spark | Yes | Yes | Strong (MLflow, Spark ML) | Big data analytics, AI/ML |
| Google BigLake | Google Cloud Storage | Yes | Google BigQuery | Yes | Yes | Integrated with Google Cloud AI | Cloud-native analytics |
| AWS Lake Formation | Amazon S3 | Yes | Amazon Redshift / AWS Glue | Yes | Limited | Amazon SageMaker Integration | AWS-centric data lakehouse |
| Snowflake | Proprietary (Cloud-based) | Primarily Structured | Snowflake Compute | Yes | Limited | Limited | Multi-cloud, high-performance analytics |
| Azure Synapse Analytics | Azure Data Lake Storage | Yes | SQL, Apache Spark, Data Explorer | Yes | Yes | Strong (Azure ML, Power BI) | Microsoft ecosystem analytics |
| Apache Hudi | Cloud Object Storage (e.g., S3, GCS) | Yes | Apache Spark, Presto | Yes | Yes | External ML Support | Open-source real-time data lakes |
| Dremio | Cloud Data Lakes | Yes | Dremio (Apache Arrow) | No | Yes | No Native Integration | High-performance SQL on cloud lakes |

Challenges and Considerations for Implementing a Data Lakehouse

Some things are too good to be true, and the data lakehouse is no exception. It seemingly offers it all, but it also has some downsides. Let’s review them in this section.

1. Integration with existing systems

Integrating a data lakehouse with legacy systems can be complex and requires careful planning. Teams may need to migrate data from existing data lakes or warehouses, which can be time-consuming and resource-intensive.

Compatibility issues may also arise when integrating with older systems that are not designed to work with modern data architectures. To successfully implement a data lakehouse, you must evaluate your current infrastructure, plan for data migration, and ensure that all systems can work harmoniously together.

2. Managing data governance and security

Balancing scalability with governance and security can be challenging, especially in regulated industries. Data lakehouses must provide robust governance tools to manage data access, lineage, and compliance while maintaining the scalability needed to handle large datasets.

Another critical consideration is ensuring that sensitive data is properly secured. You need to implement access controls, encryption, and auditing to protect your data and meet regulatory requirements. This can be especially challenging in hybrid and multi-cloud environments, where data is distributed across different locations.

3. Cost vs. performance trade-offs

When implementing a data lakehouse, finding the right balance between cost efficiency and performance can be challenging. While data lakehouses offer cost-effective storage, achieving high-performance analytics may require additional investment in compute resources.

You need to carefully evaluate workload requirements and determine the most cost-effective way to meet your performance needs. This may involve optimizing resource allocation, choosing the right cloud services, or adjusting the configuration of the data lakehouse to ensure that both cost and performance goals are met.

Conclusion

Data lakehouses represent a significant evolution in data architecture, combining the strengths of data lakes and data warehouses into a single, unified platform!

With schema enforcement, support for ACID transactions, scalability, and real-time data processing, data lakehouses offer a powerful solution for modern data needs.

Data lakehouses provide a compelling option for data teams looking to simplify their data landscape and improve analytics capabilities.

Explore the Understanding Data Engineering free course to learn more about data lakehouses and other emerging data engineering technologies.

FAQs

Why are data lakehouses becoming popular?

Data lakehouses combine scalability with robust analytics, making them cost-effective for companies seeking flexibility, high performance, and real-time data insights.

What challenges do data lakehouses face compared to traditional data architectures?

Data lakehouses must balance performance for structured and unstructured data, which can be complex. Implementing governance and security across varied data types and integrating with legacy systems also presents challenges.

How does a data lakehouse handle metadata management?

Data lakehouses use metadata layers (such as Delta Lake’s transaction log) to track which files make up a table, enforce schemas, and optimize queries, for example by skipping files whose column statistics rule them out. This improves data discovery, governance, and performance.
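One concrete way the metadata layer speeds up queries is data skipping: the table’s metadata records statistics such as per-file minimum and maximum column values, and the query engine skips files whose range cannot match the filter. The sketch below assumes a hypothetical list of file statistics for an `order_date` column; real table formats store these statistics in their own metadata structures.

```python
from dataclasses import dataclass


@dataclass
class FileStats:
    """Per-file column statistics, as a table format might record in its metadata."""
    path: str
    min_date: str  # ISO dates compare correctly as strings
    max_date: str


# Hypothetical metadata for a table whose files happen to be clustered by date.
FILES = [
    FileStats("part-0.parquet", "2024-01-01", "2024-01-31"),
    FileStats("part-1.parquet", "2024-02-01", "2024-02-29"),
    FileStats("part-2.parquet", "2024-03-01", "2024-03-31"),
]


def files_to_scan(files, start, end):
    """Keep only files whose [min, max] range can overlap the query's [start, end]."""
    return [f.path for f in files if f.max_date >= start and f.min_date <= end]


# WHERE order_date BETWEEN '2024-02-10' AND '2024-02-20'
print(files_to_scan(FILES, "2024-02-10", "2024-02-20"))  # ['part-1.parquet']
```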

Can data lakehouses replace both data lakes and data warehouses?

While lakehouses can often replace data lakes and warehouses, some specialized use cases might still require dedicated systems. For most needs, lakehouses provide a unified, flexible solution.

How do data lakehouses support data versioning?

Technologies like Delta Lake and Apache Hudi allow data versioning, enabling users to track changes, perform rollbacks, and ensure data consistency over time.
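Conceptually, time travel means every commit produces a new, immutable table version that can still be read later. The Python sketch below is a hypothetical in-memory model that copies the full table on each commit for simplicity; Delta Lake and Hudi instead track versions through their metadata and keep older data files until they are cleaned up. The rollback is written as a new commit that reinstates an old version, so the history itself is preserved.

```python
import copy


class VersionedTable:
    """Hypothetical table that keeps every committed version for time travel."""

    def __init__(self):
        self._snapshots = [{}]  # version 0 is the empty table; key -> row

    @property
    def version(self):
        return len(self._snapshots) - 1

    def commit(self, upserts=(), deletes=()):
        """Apply changes on top of the latest version and record a new version."""
        state = copy.deepcopy(self._snapshots[-1])
        for row in upserts:
            state[row["id"]] = row
        for key in deletes:
            state.pop(key, None)
        self._snapshots.append(state)
        return self.version

    def as_of(self, version):
        """Read the table exactly as it was at `version`."""
        return copy.deepcopy(self._snapshots[version])

    def restore(self, version):
        """Roll back by committing an old version as a new one; nothing is erased."""
        self._snapshots.append(copy.deepcopy(self._snapshots[version]))
        return self.version


t = VersionedTable()
t.commit(upserts=[{"id": 1, "qty": 5}, {"id": 2, "qty": 3}])  # version 1
t.commit(deletes=[2])                                          # version 2: accidental delete
print(t.as_of(1))  # {1: {'id': 1, 'qty': 5}, 2: {'id': 2, 'qty': 3}}
print(t.as_of(2))  # {1: {'id': 1, 'qty': 5}}
t.restore(1)                                                   # version 3
print(t.version, sorted(t.as_of(3)))  # 3 [1, 2]
```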

What role do data lakehouses play in data democratization?

Lakehouses promote data democratization by offering a single platform where all data is accessible and governed, empowering broader teams to access and leverage data securely.