
Transfer learning has become a core technique in machine learning: pre-trained models are adapted to new, related tasks to save time and resources. This strategy is highly effective in fields such as computer vision, natural language processing, and speech recognition, where large-scale pre-training data is abundant.

Despite its popularity, fine-tuning, a common transfer learning approach, often runs into obstacles such as overfitting or a mismatch between the pre-trained model's domain and the target data, a phenomenon known as domain shift. Basic transfer learning can also struggle with complex tasks or very limited target data, leaving the model poorly adapted.

To overcome these challenges, a new wave of advanced transfer learning techniques has emerged, addressing domain discrepancies, data scarcity, and the need for efficient model adaptation. In this article, I’ll explore some of these advanced transfer learning strategies.


Types of Advanced Transfer Learning Techniques

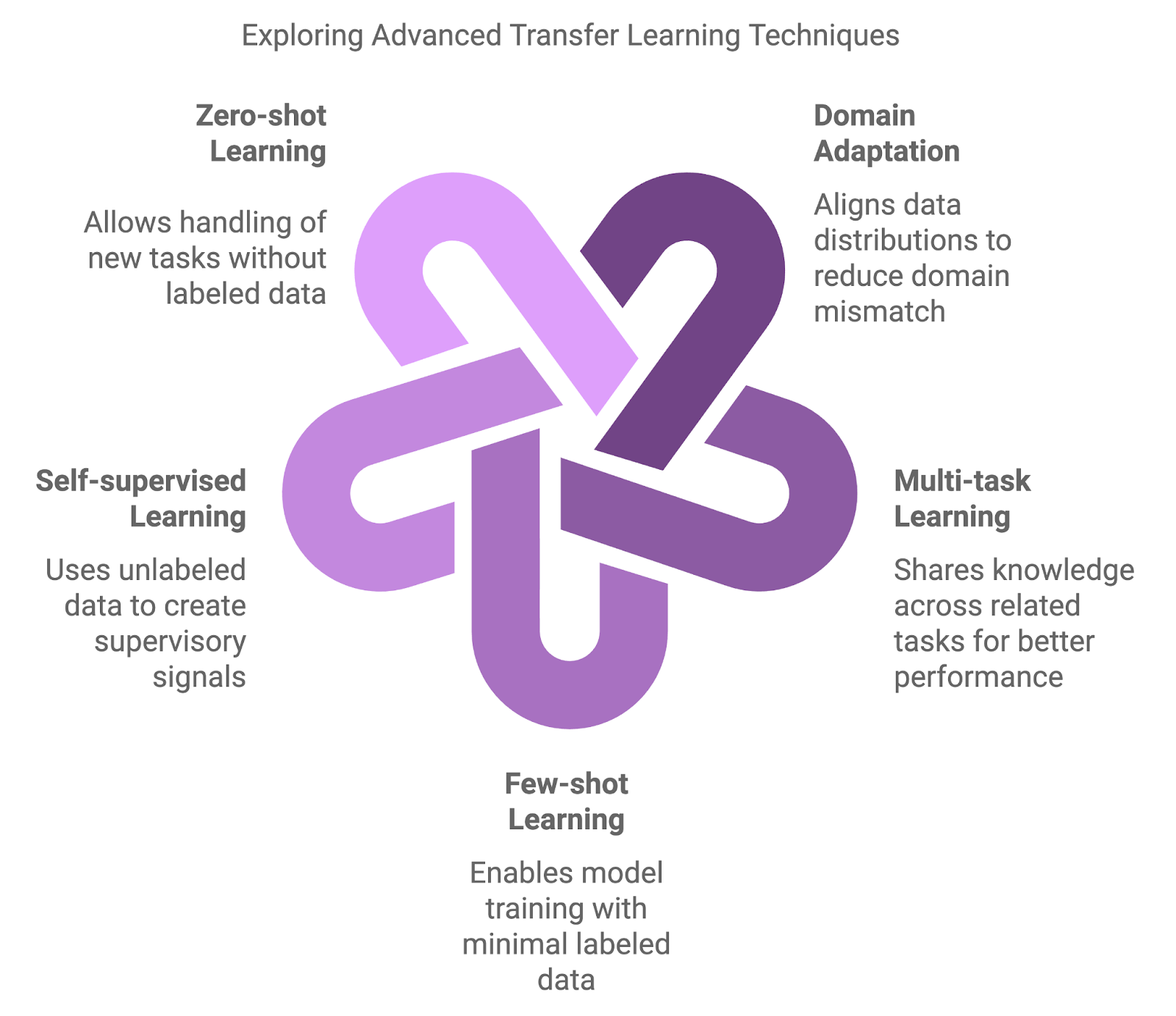

Traditional approaches, such as pre-training on a large dataset and then fine-tuning on a task-specific dataset, are foundational but sometimes insufficient. More advanced methods have emerged to tackle the nuances of domain shifts, data scarcity, and multi-task learning.

Advanced methods introduce additional layers of complexity and flexibility. These techniques aim to improve model performance in scenarios where traditional approaches may not be adequate.

Domain adaptation

Domain adaptation techniques are designed to handle discrepancies between the source (pre-training) and target (fine-tuning) domains. Traditional fine-tuning often yields suboptimal results when a pre-trained model encounters data that diverges significantly from its training data. Domain adaptation techniques reduce this mismatch by aligning the source and target data distributions, which helps the model generalize better in novel settings.

Multi-task learning (MTL)

MTL trains a model on multiple related tasks simultaneously. By sharing knowledge between tasks, the model can exploit their commonalities and improve performance across all of them. This strategy is especially effective when tasks are complementary, such as jointly learning topic classification and sentiment analysis in natural language processing. MTL can improve generalization and reduce the risk of overfitting by guiding the model toward patterns shared across tasks.

Few-shot learning

Another key technique is few-shot learning, which aims to train models with minimal labeled data. While traditional approaches require vast amounts of labeled data, few-shot techniques let models transfer knowledge using only a few examples per class. Methods like meta-learning and prototypical networks enable quick adaptation to new tasks, making them valuable in areas like personalized recommendations or niche image classification tasks.

Self-supervised learning (SSL)

SSL trains models on unlabeled data, which makes it a powerful tool when labeled data is scarce or unavailable. The technique constructs supervisory signals from the data itself by solving pretext tasks, such as predicting missing parts of an image or reconstructing scrambled text. Models pre-trained with SSL have shown high transferability across a range of applications.

Zero-shot learning

Zero-shot learning goes a step further: it allows models to handle new tasks or categories without any labeled examples of them. This is achieved through auxiliary information, such as semantic relationships, attribute descriptions, or task descriptions, which lets the model generalize to novel categories. This capability is highly relevant in dynamic fields where new categories emerge frequently, such as NLP applications that must handle newly coined terms or slang.
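To make the idea concrete, here is a minimal attribute-based sketch in Python. It rests on two assumptions:

- a hypothetical model has already mapped an input image to a vector of predicted attribute scores;
- each class, including ones never seen during training, is described by a hand-written attribute vector (`CLASS_ATTRIBUTES` and its attributes are illustrative).

Prediction picks the class whose description is most similar to the predicted attributes.

```python
import math

# Hypothetical class descriptions as attribute vectors:
# [has_stripes, has_hooves, is_black_and_white]
CLASS_ATTRIBUTES = {
    "zebra": [1.0, 1.0, 1.0],  # assumed to be unseen during training
    "horse": [0.0, 1.0, 0.0],
    "tiger": [1.0, 0.0, 0.0],
}


def cosine(a, b):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def zero_shot_predict(predicted_attributes, class_attributes):
    """Return the class whose attribute description best matches the prediction."""
    return max(class_attributes, key=lambda c: cosine(predicted_attributes, class_attributes[c]))


# Attribute scores a (hypothetical) trained model might output for a zebra photo.
print(zero_shot_predict([0.9, 0.8, 0.95], CLASS_ATTRIBUTES))  # zebra
```

Because the class list is just data, a new category can be supported by writing its attribute vector, without retraining the attribute predictor.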

Diagram generated with napkin.ai

Domain Adaptation Techniques

As briefly discussed earlier, domain adaptation is a subfield of transfer learning focused on handling situations where there is a significant difference, or "domain shift," between the data used to train a model and the data where the model is deployed.

In typical transfer learning, it's assumed that the source and target domains share similar characteristics, but in many real-world scenarios, these domains differ substantially. Ultimately, this can degrade performance, as models trained on one set of data struggle to generalize to new conditions.

Domain adaptation techniques are designed to bridge this gap, enabling a model trained in one domain to perform effectively in another by minimizing the discrepancy between the source and target data distributions. This makes domain adaptation especially valuable when labeled data is abundant in one domain but scarce or unavailable in another.

Several techniques have been developed to tackle domain adaptation; two of the most common are adversarial methods and domain-invariant feature learning.

Adversarial domain adaptation

One widely used technique for domain adaptation involves adversarial learning. In this context, a model is trained to learn features that are useful for the target task while simultaneously ensuring that these features are indistinguishable between the source and target domains.

We can accomplish this using a discriminator, i.e., a neural network tasked with distinguishing between source and target domain data. The feature extractor is trained adversarially so that it learns to "fool" the discriminator, which makes its feature representations domain-invariant. If this sounds familiar, it’s because this technique is inspired by Generative Adversarial Networks (GANs), which use a similar setup to generate realistic synthetic data.
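A common way to implement this is a gradient reversal layer, as in domain-adversarial neural networks (DANN). The discriminator descends its classification loss. The feature extractor receives the negated, scaled discriminator gradient, and therefore ascends that loss.

The pure-Python sketch below performs one such update on a deliberately tiny toy model with three parts:

- a scalar feature `f = w1*x1 + w2*x2`;
- a logistic label head `c`;
- a logistic domain discriminator with weight `a` and bias `b`.

All names and the toy setup are illustrative assumptions; a real implementation would use an autodiff framework.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def dann_step(p, source, target, lr=0.1, lam=1.0):
    """One simultaneous gradient update of a toy DANN (hypothetical helper).

    p: dict with feature-extractor weights w1, w2, label head c,
       and discriminator weight a and bias b.
    source: labeled (x1, x2, y) tuples; target: unlabeled (x1, x2) tuples.
    """
    g = {k: 0.0 for k in p}
    # Label loss (binary cross-entropy) on the labeled source data only.
    for x1, x2, y in source:
        f = p["w1"] * x1 + p["w2"] * x2
        err = sigmoid(p["c"] * f) - y  # dBCE/dlogit
        g["c"] += err * f / len(source)
        g["w1"] += err * p["c"] * x1 / len(source)
        g["w2"] += err * p["c"] * x2 / len(source)
    # Domain loss: the discriminator should output 0 for source, 1 for target.
    domain = [(x1, x2, 0.0) for x1, x2, _ in source] + [(x1, x2, 1.0) for x1, x2 in target]
    dom_w1 = dom_w2 = 0.0
    for x1, x2, t in domain:
        f = p["w1"] * x1 + p["w2"] * x2
        err = sigmoid(p["a"] * f + p["b"]) - t
        g["a"] += err * f / len(domain)
        g["b"] += err / len(domain)
        dom_w1 += err * p["a"] * x1 / len(domain)
        dom_w2 += err * p["a"] * x2 / len(domain)
    # Gradient reversal: the extractor follows the *negated* domain gradient,
    # i.e., it ascends the discriminator's loss (scaled by lam).
    g["w1"] -= lam * dom_w1
    g["w2"] -= lam * dom_w2
    return {k: p[k] - lr * g[k] for k in p}


params = {"w1": 1.0, "w2": 1.0, "c": 1.0, "a": 1.0, "b": 0.0}
source = [(-1.0, 0.0, 0.0), (1.0, 0.0, 1.0)]  # x2 is 0 in the source domain
target = [(-1.0, 2.0), (1.0, 2.0)]            # x2 is shifted to 2 in the target domain
new = dann_step(params, source, target)
print(new["w2"] < params["w2"])  # True
```

In this toy, `x2` carries no label information, so the only force acting on `w2` is the reversed domain gradient. In this step, that gradient pushes the extractor to rely less on the feature that separates the domains.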

Domain-invariant feature learning

Another approach focuses on learning representations that are unaffected by domain-specific variations. The idea is to map input data from both domains into a shared feature space where the source and target distributions align more closely. Techniques such as Maximum Mean Discrepancy (MMD) and CORAL (Correlation Alignment) minimize statistical differences in this space, helping the model generalize more effectively when applied to the target domain.
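Both discrepancy measures are short enough to sketch directly, and in practice either would be added to the task loss as a penalty on the features. The sketch below makes the following choices:

- **MMD** uses the simple biased estimator with an RBF kernel. It compares the two samples through average kernel similarities.
- **CORAL** follows the Deep CORAL formulation: the squared Frobenius distance between the source and target feature covariance matrices, divided by 4d².

The kernel bandwidth `gamma` and the toy points are illustrative assumptions.

```python
import math


def rbf(x, y, gamma=1.0):
    return math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(x, y)))


def mmd2(xs, ys, gamma=1.0):
    """Biased estimate of squared MMD between two samples (lists of feature vectors)."""
    kxx = sum(rbf(a, b, gamma) for a in xs for b in xs) / (len(xs) * len(xs))
    kyy = sum(rbf(a, b, gamma) for a in ys for b in ys) / (len(ys) * len(ys))
    kxy = sum(rbf(a, b, gamma) for a in xs for b in ys) / (len(xs) * len(ys))
    return kxx + kyy - 2 * kxy


def covariance(xs):
    """Sample covariance matrix (n - 1 denominator) of a list of d-dimensional vectors."""
    n, d = len(xs), len(xs[0])
    mean = [sum(x[j] for x in xs) / n for j in range(d)]
    return [[sum((x[i] - mean[i]) * (x[j] - mean[j]) for x in xs) / (n - 1)
             for j in range(d)] for i in range(d)]


def coral(xs, ys):
    """Deep CORAL loss: ||C_source - C_target||_F^2 / (4 d^2)."""
    cs, ct = covariance(xs), covariance(ys)
    d = len(cs)
    return sum((cs[i][j] - ct[i][j]) ** 2 for i in range(d) for j in range(d)) / (4 * d * d)


src = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
near = [[x + 0.1, y + 0.1] for x, y in src]
far = [[x + 3.0, y + 3.0] for x, y in src]
print(mmd2(src, near) < mmd2(src, far))  # True
print(coral(src, far))  # 0.0
```

The last line shows a design difference between the two measures. CORAL only matches second-order statistics, so it ignores a pure mean shift that MMD with an RBF kernel detects.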

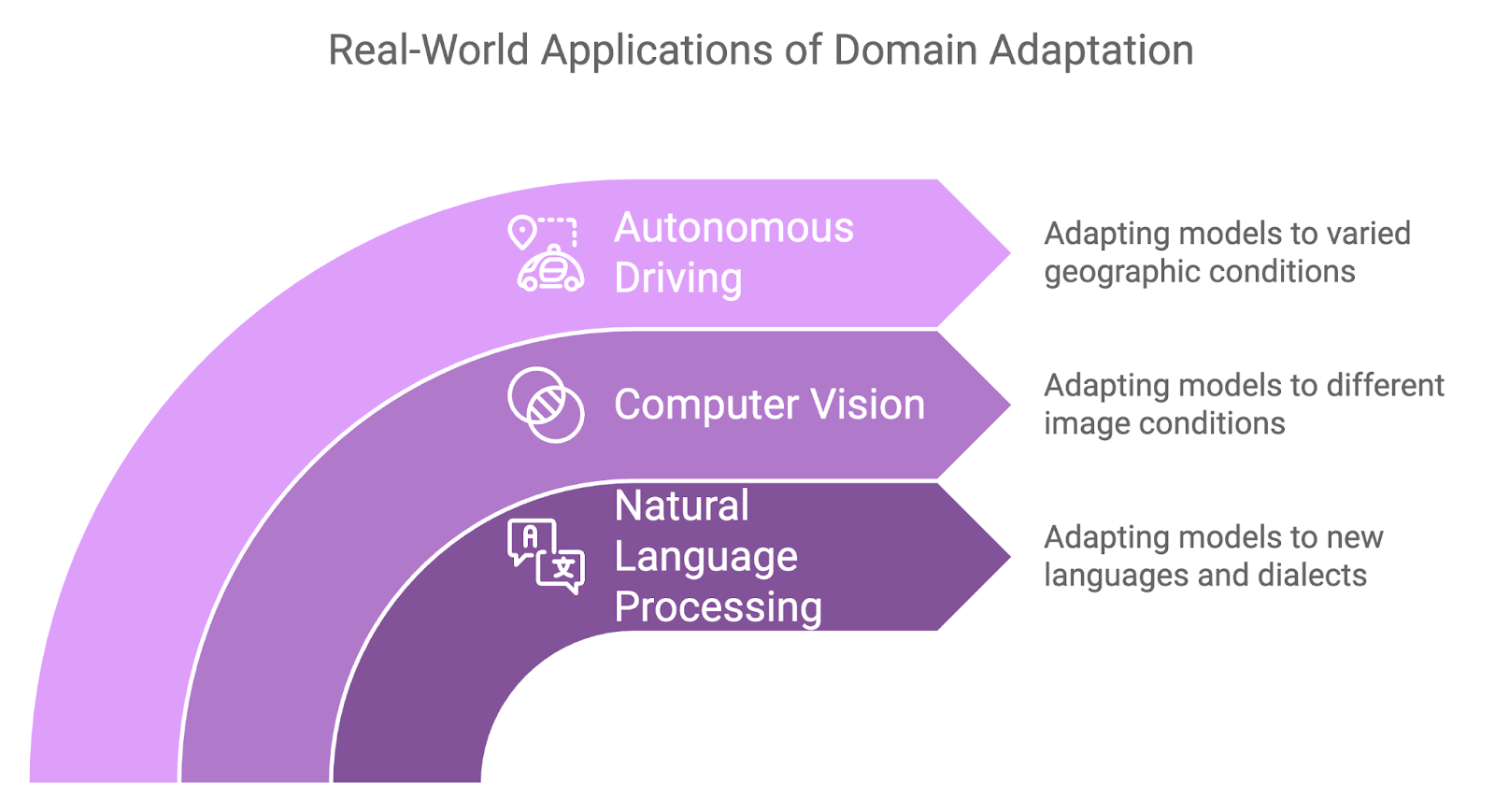

Use cases

Domain adaptation has proven to be useful in a variety of real-world applications where domain shifts are inevitable:

- Domain adaptation is frequently applied in NLP when transferring models trained on one language or dialect to another.

  - For example, a sentiment analysis model trained on English may need to be adapted to a regional dialect of the same language, or a model trained on formal text may need to adapt to informal, colloquial language (e.g., social media posts).

- In computer vision, domain adaptation is often used when image characteristics differ between the training and target data.

  - For instance, a model trained on images of objects in natural lighting may need to be adapted to work in dim or artificial lighting.

- Autonomous driving systems must perform reliably across all the geographic regions they encounter, and this is where domain adaptation proves useful yet again.

  - A model trained to recognize road signs, pedestrians, or vehicles in one country may need to adapt to different road conditions, weather patterns, or even traffic rules in another region.

Multi-task Learning and Transfer Learning Synergy

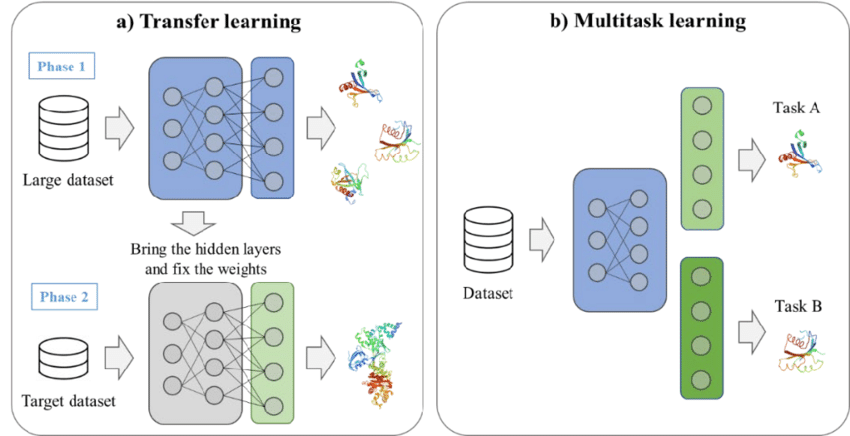

As machine learning tasks grow in complexity, the ability to tackle multiple related problems simultaneously can significantly enhance a model’s performance and efficiency. MTL addresses this by training models to perform multiple tasks at once, rather than focusing on a single task in isolation.

What is multi-task learning?

The strength of MTL lies in its ability to capitalize on relationships and shared structures between tasks, enabling models to learn richer feature representations. Insights gained from one task can improve the model's performance on another, leading to greater overall learning efficiency.

In MTL, two main strategies are commonly used:

- Hard parameter sharing: With this approach, the model shares most of its parameters across tasks, with only task-specific layers being independent. This reduces overfitting by driving the model to learn representations that generalize well across tasks.

- Soft parameter sharing: Here, each task has its own set of parameters, but some form of regularization ensures that these parameters are similar, encouraging shared learning without full parameter overlap.
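The difference between the two strategies can be sketched in plain Python. The sketch has two parts:

- **Hard sharing:** a single shared hidden layer feeds two small task-specific heads.
- **Soft sharing:** a separate helper computes an L2 penalty that pulls two tasks' parameter vectors together. This is one common form of soft sharing.

The weights are arbitrary illustrative numbers, and `task_a` and `task_b` are hypothetical task names. In practice these would be trained layers in a framework such as PyTorch.

```python
def linear(weights, bias, x):
    """Dense layer: weights is a list of rows, one row per output unit."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


def relu(v):
    return [max(0.0, z) for z in v]


# Hard parameter sharing: one shared trunk (3 inputs -> 2 hidden units) ...
shared_w = [[0.5, -0.2, 0.1], [0.3, 0.8, -0.5]]
shared_b = [0.0, 0.1]
# ... and one small, independent head per task.
heads = {
    "task_a": ([[1.0, -1.0]], [0.0]),                      # hidden -> 1 output
    "task_b": ([[0.2, 0.4], [-0.3, 0.6]], [0.0, 0.0]),     # hidden -> 2 logits
}


def forward(x):
    h = relu(linear(shared_w, shared_b, x))  # representation used by every task
    return {name: linear(w, b, h) for name, (w, b) in heads.items()}


def soft_sharing_penalty(params_a, params_b, strength=0.01):
    """Soft parameter sharing: L2 penalty pulling two tasks' separate weights together."""
    return strength * sum((pa - pb) ** 2 for pa, pb in zip(params_a, params_b))


print(forward([1.0, 2.0, 3.0]))
```

During training, the per-task losses would typically be summed, often with per-task weights, and backpropagated. Under hard sharing, the gradients from every task then update the shared trunk. Under soft sharing, the penalty is added to that sum instead.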

An example of transfer learning and multitask learning. Source: Kim et al., 2021

Benefits of multi-task learning

MTL offers several advantages that make it a valuable approach for complex machine-learning scenarios.

Improved generalization

By learning across multiple tasks, the model is exposed to a broader range of data and learns more general representations. This often leads to better performance on each task, especially when those tasks are related.

Data efficiency

With MTL, models make better use of available data, particularly in situations where labeled data is scarce. Since the model learns from multiple tasks, it can use insights from related tasks to improve performance. This cross-task learning accelerates the learning process and reduces the reliance on large datasets for each task.

Model efficiency

Instead of training separate models for each task, MTL consolidates learning into a single model. This reduces the computational cost and memory footprint, making it more efficient for deployment in environments with limited resources.

Applications of multi-task learning

MTL has been successfully applied across various domains, with notable use cases in NLP and computer vision.

Natural language processing

In natural language processing, MTL is commonly used to train models on multiple related tasks. For instance, a language model can be trained to perform both machine translation and text classification simultaneously. By sharing learned representations across these tasks, the model can improve its understanding of language structure and context, benefiting both tasks.

Computer vision

In computer vision, MTL can be applied to tasks like object detection and image segmentation. For example, we can train a model to localize objects within an image while simultaneously learning to classify them. This shared learning process allows the model to recognize both the location and the category of objects, making it more versatile and efficient.

Few-shot and Self-Supervised Learning

In scenarios where labeled data is scarce or unavailable, few-shot learning and SSL have emerged as powerful techniques to address the limitations of traditional supervised methods.

These approaches offer innovative ways to adapt models to new tasks with minimal labeled data or by using large amounts of unlabeled data, making them essential tools in the realm of advanced transfer learning.

Few-shot learning

As we now know, few-shot learning is designed to enable models to quickly adapt to new tasks with only a few labeled examples.

This matters because collecting large labeled datasets is impractical in many applications, such as rare disease diagnosis or niche product identification. Few-shot learning addresses this by transferring knowledge from related tasks and fine-tuning models to perform well with minimal new data.

Below, we discuss two key strategies in few-shot learning.

Meta-learning

Also known as "learning to learn," meta-learning involves training a model to adapt quickly to new tasks with minimal data. The idea is to train the model across a variety of tasks so that it can generalize its learning and rapidly adjust to unseen tasks.

Meta-learning frameworks like Model-Agnostic Meta-Learning (MAML) learn an initial set of parameters from which a few gradient steps on a handful of examples yield good performance on a new task.
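To show the core mechanics, here is a minimal MAML sketch for a deliberately simple family of tasks. Each task is 1-D linear regression `y = slope * x` with its own slope, and the model is a single weight `w`. Because the model is so small, the gradient through the inner adaptation step (the second-order term) can be written by hand. The task setup, learning rates, and function names are illustrative assumptions.

```python
def mse_grad(w, xs, ys):
    """Gradient of mean((w*x - y)^2) with respect to w."""
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


def make_task(slope, support_x=(1.0, 2.0), query_x=(0.5, 1.5)):
    """A task = a small support set for adaptation plus a query set for evaluation."""
    xs_s, xs_q = list(support_x), list(query_x)
    return (xs_s, [slope * x for x in xs_s]), (xs_q, [slope * x for x in xs_q])


def maml_meta_gradient(w, task, inner_lr):
    (xs_s, ys_s), (xs_q, ys_q) = task
    # Inner loop: one SGD step on the support set.
    w_adapted = w - inner_lr * mse_grad(w, xs_s, ys_s)
    # Chain rule through the inner step: d(w_adapted)/dw = 1 - inner_lr * mean(2 * x^2).
    dadapted_dw = 1 - inner_lr * sum(2 * x * x for x in xs_s) / len(xs_s)
    # Outer objective: loss of the *adapted* weight on the query set.
    return mse_grad(w_adapted, xs_q, ys_q) * dadapted_dw


def meta_train(tasks, w=0.0, inner_lr=0.05, outer_lr=0.1, steps=200):
    for _ in range(steps):
        meta_grad = sum(maml_meta_gradient(w, t, inner_lr) for t in tasks) / len(tasks)
        w -= outer_lr * meta_grad
    return w


tasks = [make_task(slope) for slope in (1.0, 2.0, 3.0)]
w0 = meta_train(tasks)
print(round(w0, 4))  # 2.0
```

With these symmetric tasks, the learned initialization settles at the average slope, 2.0. From that point, one inner step moves closest, on average, to every task's optimum.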

Prototypical networks

Another common approach is prototypical networks, which are designed to classify data points by computing distances between them in a learned feature space. In this setup, each class is represented by a prototype (the mean of the examples from that class in the feature space).

New data points are then classified based on their proximity to these prototypes. Prototypical networks can be effective in few-shot learning as they require only a minimal number of examples per class to generate meaningful prototypes for classification.
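Here is a minimal sketch of the classification step. It assumes the support examples have already been passed through a learned embedding network; here they are just hypothetical 2-D vectors. Following the original prototypical networks formulation, it uses squared Euclidean distance and turns negative distances into class probabilities with a softmax.

```python
import math


def prototypes(support):
    """Mean embedding per class; support maps label -> list of embedding vectors."""
    return {
        label: [sum(col) / len(vectors) for col in zip(*vectors)]
        for label, vectors in support.items()
    }


def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def classify(query, protos):
    """Assign the query to the nearest prototype."""
    return min(protos, key=lambda label: squared_distance(query, protos[label]))


def class_probs(query, protos):
    """Softmax over negative squared distances to each prototype."""
    logits = {label: -squared_distance(query, p) for label, p in protos.items()}
    top = max(logits.values())  # subtract the max for numerical stability
    exps = {label: math.exp(z - top) for label, z in logits.items()}
    total = sum(exps.values())
    return {label: e / total for label, e in exps.items()}


support = {"cat": [[0.0, 1.0], [0.2, 0.8]], "dog": [[1.0, 0.0], [0.8, 0.2]]}
protos = prototypes(support)
print(classify([0.15, 0.95], protos))  # cat
```

Adding a new class only requires a few embedded examples to form its prototype; no classifier weights need to be retrained.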

Self-supervised learning

SSL allows models to extract meaningful representations from vast amounts of unlabeled data by generating supervisory signals, bypassing the need for manual labels. Once the model has learned from the unlabeled data, it can then be fine-tuned on a smaller labeled dataset, achieving strong performance on downstream tasks.

One of the most impactful SSL techniques is contrastive learning, which trains models by comparing positive and negative pairs of data points. The objective is to pull similar data points (positive pairs) closer together in the feature space while pushing dissimilar ones (negative pairs) farther apart.
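This objective is commonly written as an InfoNCE-style loss, the form used by methods such as SimCLR. Cosine similarities are divided by a temperature, and the loss is the negative log-softmax probability assigned to the positive pair. The sketch below handles a single anchor with explicit vectors. The vectors and temperature value are illustrative assumptions; real implementations compute the loss over batches of embeddings.

```python
import math


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def info_nce(anchor, positive, negatives, temperature=0.1):
    """-log( exp(s_pos / t) / sum over positive and negatives of exp(s / t) )."""
    logits = [cosine(anchor, positive) / temperature]
    logits += [cosine(anchor, n) / temperature for n in negatives]
    m = max(logits)  # subtract the max for numerical stability (log-sum-exp)
    log_denominator = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_denominator - logits[0]


anchor = [1.0, 0.0]
negatives = [[0.0, 1.0], [-1.0, 0.0]]
aligned = info_nce(anchor, [0.9, 0.1], negatives)      # positive close to the anchor
misaligned = info_nce(anchor, [0.0, -1.0], negatives)  # positive far from the anchor
print(aligned < misaligned)  # True
```

Minimizing this loss increases the anchor's similarity to its positive relative to the negatives, which is exactly the pull-together, push-apart behavior described above.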

In natural language processing, a popular SSL method is masked language modeling, used in models like BERT. Here, parts of the input are masked, and the model is trained to predict the missing elements using the surrounding context. This pre-training strategy helps the model learn robust, general-purpose representations that can be fine-tuned for various NLP tasks.
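The masking step itself is simple to sketch. The function below follows the recipe described in the BERT paper:

- about 15% of token positions are selected for prediction;
- of those, 80% are replaced with a `[MASK]` token, 10% with a random token, and 10% are left unchanged.

The whitespace tokens and tiny vocabulary are illustrative assumptions. Real pipelines operate on subword token IDs; Hugging Face Transformers, for instance, provides `DataCollatorForLanguageModeling` for this.

```python
import random

MASK = "[MASK]"


def mask_tokens(tokens, vocab, mask_prob=0.15, rng=None):
    """BERT-style masking. Returns (model inputs, labels); a None label is not predicted."""
    rng = rng or random.Random(0)
    inputs, labels = [], []
    for tok in tokens:
        if rng.random() < mask_prob:
            labels.append(tok)  # the loss asks the model to recover this token
            r = rng.random()
            if r < 0.8:
                inputs.append(MASK)  # 80%: replace with the mask token
            elif r < 0.9:
                inputs.append(rng.choice(vocab))  # 10%: replace with a random token
            else:
                inputs.append(tok)  # 10%: keep the original token
        else:
            labels.append(None)  # position is ignored by the loss
            inputs.append(tok)
    return inputs, labels


tokens = "the cat sat on the mat".split()
inputs, labels = mask_tokens(tokens, vocab=["the", "cat", "sat", "on", "mat", "dog"], mask_prob=0.5)
print(inputs)
print(labels)
```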

Applications

Few-shot and self-supervised learning are widely used in fields where data limitations are a significant barrier. Here are a few key applications of each.

Few-shot learning in custom object detection

Few-shot learning proves valuable in custom object detection, where identifying rare or specialized objects may require models to learn from only a handful of labeled examples.

In industrial settings, detecting defects in a manufacturing process may involve very few examples of each defect type. With few-shot learning, a model pre-trained on general object detection tasks can be quickly adapted to detect these specific defects with minimal additional training data.

Self-supervised learning in LMs

Self-supervised learning has revolutionized natural language processing through models like GPT and BERT. These models learn general-purpose language representations that can be fine-tuned for specific tasks with relatively small labeled datasets. For example, a language model pre-trained using SSL can be fine-tuned to perform tasks like sentiment analysis, text classification, or summarization, often achieving state-of-the-art results.

Practical Implementation of Advanced Transfer Learning Techniques

Machine learning libraries and frameworks can significantly simplify the implementation of advanced transfer learning methods. Tools like PyTorch, TensorFlow, and Hugging Face Transformers provide the necessary flexibility and pre-built components to accelerate the development process.

Frameworks and tools

For practitioners looking to implement advanced transfer learning techniques, several libraries and frameworks provide extensive support:

- PyTorch: A flexible, user-friendly framework widely used for research and practical machine-learning projects. PyTorch’s dynamic computational graph makes it well suited to techniques like meta-learning and multi-task learning.

- TensorFlow: Another popular deep learning framework, TensorFlow offers robust support for transfer learning and domain adaptation through its high-level API, Keras. TensorFlow's tensorflow_hub also provides pre-trained models that can be fine-tuned for new tasks.

- Hugging Face Transformers: A widely used library for natural language processing tasks that provides a variety of pre-trained models, such as BERT, GPT, and T5, which can be fine-tuned for specific downstream tasks with minimal effort.

Challenges and considerations

Implementing advanced transfer learning techniques is challenging, and there are some critical factors we need to keep in mind.

Catastrophic forgetting

Fine-tuning on new tasks can cause models to "forget" what they’ve learned from earlier tasks. Techniques like Elastic Weight Consolidation (EWC) counter this by penalizing changes to the weights that were most important for earlier tasks, as estimated by the Fisher information, which helps preserve prior knowledge.
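The EWC penalty adds, for each parameter, a quadratic cost for moving away from its value after the earlier task. Each cost is weighted by that parameter's estimated importance: (λ/2) Σᵢ Fᵢ(θᵢ − θ*ᵢ)². The sketch below estimates a diagonal Fisher from per-example gradients, using the common "empirical Fisher" approximation, and then computes the penalty. The gradient values are made-up inputs for illustration.

```python
def diagonal_fisher(per_example_grads):
    """Empirical diagonal Fisher: mean of squared per-example gradients of the old task's loss."""
    n = len(per_example_grads)
    dim = len(per_example_grads[0])
    return [sum(g[i] ** 2 for g in per_example_grads) / n for i in range(dim)]


def ewc_penalty(params, old_params, fisher, lam=1.0):
    """(lam / 2) * sum_i F_i * (theta_i - theta_old_i)^2, added to the new task's loss."""
    return lam / 2 * sum(f * (p - p_old) ** 2 for f, p, p_old in zip(fisher, params, old_params))


# Hypothetical per-example gradients from the old task: parameter 0 matters, parameter 1 does not.
fisher = diagonal_fisher([[1.0, 0.0], [3.0, 0.0]])
old = [1.0, 0.0]
print(fisher)                                 # [5.0, 0.0]
print(ewc_penalty([1.0, 10.0], old, fisher))  # 0.0
print(ewc_penalty([2.0, 0.0], old, fisher))   # 2.5
```

Moving the unimportant weight is free, while moving the important one is penalized. The new task can therefore still reshape parameters that the old task did not rely on.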

Handling computational complexity

Techniques like domain adaptation and multi-task learning can significantly increase computational requirements; adversarial domain adaptation models and multi-task architectures in particular may need more resources because of their additional components. Optimizing the model and using tools like mixed precision training, which runs much of the computation in 16-bit floating point, can help handle large models efficiently.

Ensuring fairness and bias mitigation

Transfer learning models pre-trained on biased data may propagate or even amplify these biases in new tasks, leading to skewed predictions. This risk is critical in sensitive fields like healthcare and finance. To ensure fairness, we should regularly evaluate model outputs for bias and apply mitigation techniques such as reweighting, adversarial de-biasing, or fair representation learning.
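As one concrete example of reweighting, the classic "reweighing" preprocessing scheme of Kamiran and Calders assigns each training example the weight P(group) · P(label) / P(group, label). In the weighted data, group membership and label then become statistically independent. Below is a minimal sketch on a made-up toy dataset, where `groups` is a hypothetical sensitive attribute.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Weight = P(group) * P(label) / P(group, label), estimated from counts."""
    n = len(labels)
    group_counts, label_counts = Counter(groups), Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        (group_counts[g] / n) * (label_counts[y] / n) / (joint_counts[(g, y)] / n)
        for g, y in zip(groups, labels)
    ]


def weighted_positive_rate(groups, labels, weights, group):
    """Share of (weighted) positive labels within one group."""
    total = sum(w for g, w in zip(groups, weights) if g == group)
    positive = sum(w for g, y, w in zip(groups, labels, weights) if g == group and y == 1)
    return positive / total


groups = ["a", "a", "a", "b", "b", "b"]
labels = [1, 1, 0, 1, 0, 0]  # group "a" is labeled positive twice as often as group "b"
weights = reweighing_weights(groups, labels)
print([round(w, 2) for w in weights])  # [0.75, 0.75, 1.5, 1.5, 0.75, 0.75]
```

After reweighting, both groups have a weighted positive rate of 0.5. The weights would then be used as per-sample loss weights during fine-tuning. Note that the scheme cannot help when a group has no examples of some label.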

Industry Examples

Advanced transfer learning methods are being applied across various industries, providing innovative solutions to complex real-world challenges. Below are some key applications and industry examples that highlight how these techniques are driving AI advancements.

OpenAI: GPT models using few-shot learning

OpenAI’s GPT-4o model is a leading example of few-shot learning in action: it can adapt to new tasks given only a few examples. Pre-trained on massive amounts of text data, GPT-4o can perform tasks such as translation, summarization, and question answering without task-specific fine-tuning, a capability often called in-context learning.

Instead, users provide a few examples of the desired task, and the model generalizes from there. This few-shot learning capability has broadened the range of applications for large language models, from creative writing to conversational agents.
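Few-shot prompting involves no weight updates at all: the demonstrations are simply placed in the model's input. The sketch below builds such a prompt as plain text. The `Input:`/`Output:` layout is an illustrative convention rather than a required format. Sending the prompt to a model, whether through OpenAI's API or any other, is deliberately left out.

```python
def few_shot_prompt(instruction, examples, query):
    """Build a plain-text few-shot prompt from (input, output) demonstration pairs."""
    lines = [instruction, ""]
    for source_text, target_text in examples:
        lines += [f"Input: {source_text}", f"Output: {target_text}", ""]
    # The model is expected to continue the text after the final "Output:".
    lines += [f"Input: {query}", "Output:"]
    return "\n".join(lines)


prompt = few_shot_prompt(
    "Translate English to French.",
    [("cheese", "fromage"), ("good morning", "bonjour")],
    "thank you",
)
print(prompt)
```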

DeepMind: Multi-task learning in healthcare AI

DeepMind, a leader in AI research, has utilized multi-task learning to develop healthcare models that can assist in diagnosing multiple conditions from medical imaging. For instance, their AI system for detecting eye diseases from retinal scans is capable of diagnosing a range of conditions, including diabetic retinopathy and age-related macular degeneration, using a single model.

This MTL approach reduces the need for separate models for each condition, which can help healthcare providers streamline their diagnostic processes and deliver faster, more accurate patient care.

Waymo: Domain adaptation in autonomous driving

Waymo (which began as Google’s self-driving car project) uses domain adaptation techniques to improve how its autonomous vehicle models generalize across driving environments. The company trains its models in simulated environments and then adapts them for real-world deployment in different cities and weather conditions.

This process helps self-driving cars handle the nuances of different urban landscapes, from congested streets in San Francisco to wide-open highways in Arizona, without requiring extensive retraining for each location.

Future Trends in Advanced Transfer Learning

Researchers are constantly pushing the boundaries, striving to make models more adaptable, fair, and capable of handling complex real-world tasks while reducing the amount of data and training time required.

Continual learning

Continual learning, also called lifelong learning, focuses on developing models that can continuously learn from new data while retaining knowledge from previously learned tasks. Whereas traditional transfer learning produces a model that stays static once fine-tuned, continual learning allows for ongoing adaptation to new information.

This is vital for dynamic environments, such as robotics and autonomous systems, where new scenarios are bound to emerge regularly. In this context, memory-based strategies help balance the need to preserve past knowledge while incorporating new skills. Future developments aim to extend continual learning to more complex domains, ensuring models can update efficiently without compromising earlier performance.
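One widely used memory-based strategy is experience replay: keep a small buffer of past examples and mix some of them into every new training batch. The sketch below fills the buffer with reservoir sampling, which keeps a uniform random sample of everything seen so far in fixed memory. The class and function names are hypothetical, and this is only one of several continual learning strategies.

```python
import random


class ReservoirReplayBuffer:
    """Fixed-size memory holding a uniform random sample of all items seen so far."""

    def __init__(self, capacity, seed=0):
        self.capacity = capacity
        self.items = []
        self.seen = 0
        self.rng = random.Random(seed)

    def add(self, item):
        self.seen += 1
        if len(self.items) < self.capacity:
            self.items.append(item)
        else:
            # Keep the new item with probability capacity / seen (reservoir sampling).
            j = self.rng.randrange(self.seen)
            if j < self.capacity:
                self.items[j] = item

    def sample(self, k):
        return self.rng.sample(self.items, min(k, len(self.items)))


def mixed_batch(new_examples, buffer, replay_size):
    """Train on new data plus replayed old data, then remember the new data."""
    batch = list(new_examples) + buffer.sample(replay_size)
    for example in new_examples:
        buffer.add(example)
    return batch


buffer = ReservoirReplayBuffer(capacity=50)
for old_example in range(1000):  # stand-in for data from earlier tasks
    buffer.add(("old_task", old_example))
batch = mixed_batch([("new_task", i) for i in range(8)], buffer, replay_size=8)
print(len(batch))  # 16
```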

Fairness and bias mitigation

As transfer learning becomes more prevalent in industries like healthcare, finance, and law enforcement, the need to address fairness and bias is impossible to ignore.

Models pre-trained on large, diverse datasets often carry inherent biases, which can lead to skewed outcomes in new applications. To counteract this, researchers are exploring techniques such as adversarial de-biasing, reweighting, and fair representation learning to detect and mitigate these biases.

Ensuring fairness will remain a central focus in transfer learning research, especially in sensitive domains where biased predictions can have real-world implications.


Hybrid transfer learning

Hybrid transfer learning combines supervised, unsupervised, and self-supervised methods to leverage the strengths of each. Models are typically pre-trained using unsupervised or self-supervised approaches to learn general representations and then fine-tuned with labeled data for specific tasks.

This hybrid strategy enhances transferability and reduces the reliance on large labeled datasets, making it particularly useful in fields with limited annotated data, such as rare disease diagnosis or specialized industrial applications.

Reinforcement learning in transfer learning

Transfer learning is also making strides in reinforcement learning (RL), where agents trained in one environment are adapted for use in related settings. This reduces the time and data needed for agents to master new tasks, improving their efficiency in solving complex, multi-step problems.

For example, an RL agent trained to control one type of robotic system can apply its knowledge to a new but similar setup with minimal retraining, which makes RL systems more versatile and scalable.

Conclusion

In this article, we explored advanced transfer learning techniques. We've examined domain adaptation for handling data distribution differences, multi-task learning for using shared knowledge, and few-shot and self-supervised learning for scenarios with limited labeled data.

These techniques offer effective strategies for tackling domain shift and data scarcity and for improving model performance. As AI continues to advance, familiarity with them will be essential for staying competitive.