
Feature engineering is all about selecting or creating significant features that improve a model’s performance. No matter your ML algorithm, you'll likely rely on feature engineering techniques for data preparation.

In this article, we'll explore feature engineering and its methods and understand how to apply them using a hands-on house price prediction example.

What is Feature Engineering in Machine Learning?

I remember building a model to improve on-time delivery rates for a time-in-transit project at my workplace. Rather than training complex ensemble models, we used a simple regression algorithm with just three additional features derived from existing data.

This approach alone improved our on-time delivery rate from 48% to 56%. It’s a huge improvement, considering 10 million records. That’s how much of a difference feature engineering techniques, like feature extraction, can make!

Simply put, feature engineering means selecting, transforming, or creating the right features from existing data.

Consider a weather dataset with columns for temperature, location, month, year, and date. The date column may not add significant value for capturing seasonality trends since the month column already provides that information. Removing the date column can reduce the dataset’s dimensionality without negatively impacting the accuracy of weather predictions.


Types of Features in Machine Learning

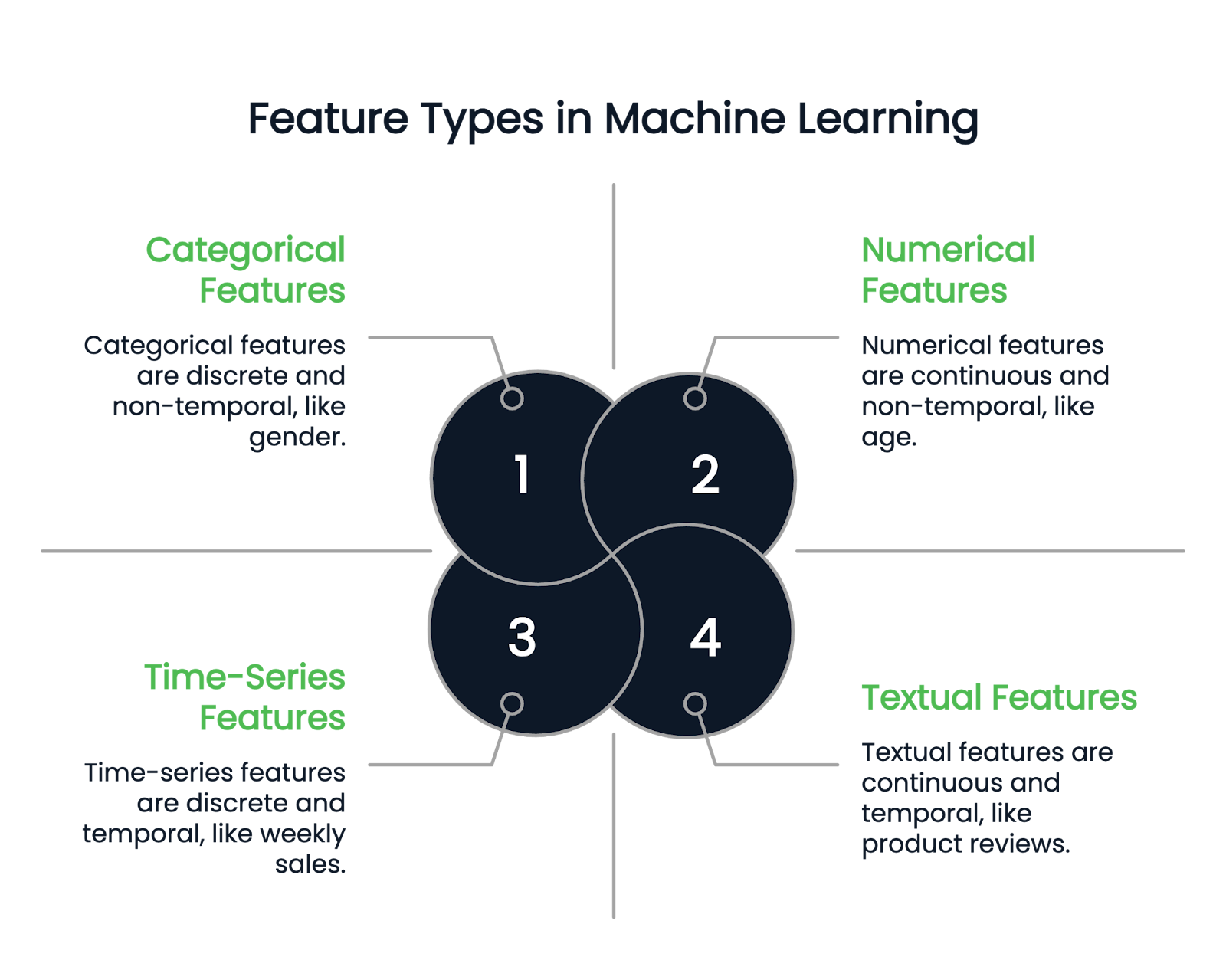

Before diving into different feature engineering techniques, let’s first understand the types of features available.

Numerical features

As the name suggests, numerical features represent data as numbers. They are quantitative variables that can be continuous (such as height or salary) or discrete (such as age in years).

Categorical features

A categorical column contains values drawn from a limited set of discrete categories. For example, a person’s gender is a categorical column because it takes only a few possible values. Birth month is another example because the values must fall between January and December.

Categorical variables are further divided into binary and non-binary types. Binary variables have two possible categories, while non-binary features can have multiple categories.

Textual and time-series features

Textual columns contain only text data. Examples include product reviews or product description columns in a retail dataset.

On the other hand, time-series features represent data recorded over time, such as weekly sales or stock price fluctuations over a year.

Image by Author

Feature Engineering Techniques

Feature engineering offers various powerful techniques for converting raw columns into desirable features. Here, we discuss some prominent ones.

Handling missing values

Missing values can distort model performance, so handling them properly is crucial. There are two main approaches:

- Imputation: Imputation is the process of filling missing values using available information. For instance, you can replace missing values with the column's mean, median, or mode.

- Deletion: This method removes rows with missing values and is most suitable when missing data is less than 10% of the dataset size.
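
The two approaches above can be sketched in pandas as follows. This is a minimal illustration on a hypothetical toy DataFrame (the `age` and `city` columns are made up for the example), not a recipe for every dataset:

```python
import numpy as np
import pandas as pd

# Hypothetical toy data with gaps in a numeric and a categorical column
df = pd.DataFrame({
    "age": [25.0, np.nan, 40.0, 31.0],
    "city": ["Paris", "Lyon", None, "Paris"],
})

# Imputation: median for the numeric column, mode for the categorical one
imputed = df.copy()
imputed["age"] = imputed["age"].fillna(imputed["age"].median())
imputed["city"] = imputed["city"].fillna(imputed["city"].mode()[0])

# Deletion: drop every row that has at least one missing value
dropped = df.dropna()

print(imputed)
print(dropped)
```

Imputation keeps all four rows, while deletion keeps only the two complete ones, which is why deletion is usually reserved for cases where few rows are affected.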

For an in-depth guide on handling missing data, read this Techniques to Handle Missing Data Tutorial or explore this Dealing with Missing Data in Python Course.

Handling outliers

Outliers are abnormal values significantly different from the rest of the data points. For example, if you have a salary dataset with most observations between $90K and $120K, a salary of $400K or $10K is an outlier. Common ways to handle them include:

- Replace: You can cap outliers at a boundary value, such as a chosen percentile or the largest and smallest non-outlier values in the column.

- Transformations: Apply transformations like log or square root to reduce the impact.

- Robust models: Use models that are less sensitive to outliers. Tree-based models such as decision trees and gradient boosting are less affected by outliers in the input features.

- Delete: If none of the methods work, dropping the outliers from the dataset is the final option.
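
As a rough sketch of these options, the snippet below flags outliers with the common 1.5 × IQR rule and then caps, log-transforms, or drops them. The salary values are hypothetical, and the IQR rule is one convention among several, chosen here only for illustration:

```python
import numpy as np
import pandas as pd

# Hypothetical salaries in thousands of dollars; 10 and 400 are the outliers
salary = pd.Series([95, 100, 105, 110, 115, 120, 10, 400], dtype=float)

# Flag outliers with the 1.5 * IQR rule
q1, q3 = salary.quantile(0.25), salary.quantile(0.75)
iqr = q3 - q1
lower, upper = q1 - 1.5 * iqr, q3 + 1.5 * iqr
is_outlier = (salary < lower) | (salary > upper)

# Replace: cap values at the IQR fences
capped = salary.clip(lower=lower, upper=upper)

# Transform: a log compresses the gap between large and typical values
logged = np.log(salary)

# Delete: keep only the rows inside the fences
filtered = salary[~is_outlier]

print(is_outlier.tolist())
print(capped.tolist())
```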

Encoding categorical variables

Machine learning models cannot directly process categorical variables, so they must be converted into numerical representations. Below, we discuss some popular encoding techniques.

- One-hot encoding: Each category in a categorical feature is represented as a separate column, with a value of 1 if the category is present in the sample and 0 for all other columns. The example below explains this.

Consider a dataset with the following categorical feature:

| Name | Gender |
| --- | --- |
| John | Male |
| Rachel | Female |
| Emma | Female |

Using one-hot encoding, we create separate columns for each possible category in the Gender feature:

| Name | Female | Male |
| --- | --- | --- |
| John | 0 | 1 |
| Rachel | 1 | 0 |
| Emma | 1 | 0 |

Since John is Male, the "Male" column gets a 1, while the "Female" column remains 0. Likewise, Rachel and Emma are Female, so the "Female" column is 1 and the "Male" column is 0.
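
One way to reproduce this table is pandas' `get_dummies`. The sketch below assumes the data sits in a DataFrame; the empty `prefix` and `prefix_sep` arguments are only there so the new columns are named "Female" and "Male" rather than "Gender_Female" and "Gender_Male":

```python
import pandas as pd

df = pd.DataFrame({
    "Name": ["John", "Rachel", "Emma"],
    "Gender": ["Male", "Female", "Female"],
})

# One indicator column per category; dtype=int gives 0/1 instead of booleans
encoded = pd.get_dummies(df, columns=["Gender"], prefix="", prefix_sep="", dtype=int)

print(encoded)
```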

For a complete tutorial on one-hot encoding in Python, check out this One-Hot Encoding Tutorial.

- Label encoding: Label encoding assigns a unique numerical value to each category in a categorical feature. This approach is useful for ordinal data (where categories have a meaningful order) but can introduce issues in non-ordinal categorical variables, as the model might misinterpret the numerical values as having an inherent ranking.

Consider a dataset with a Location column containing categorical values:

| Location | Encoded value |
| --- | --- |
| New York | 1 |
| California | 2 |
| Texas | 3 |
| California | 2 |
| Texas | 3 |

Each unique location is assigned a distinct numerical value. However, since California (2) is not inherently "between" New York (1) and Texas (3), using label encoding for non-ordinal data can lead to misleading model assumptions. In such cases, one-hot encoding is often preferred to avoid implying an unintended numerical relationship between categories.
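
The mapping in the table can be sketched with `pandas.factorize`, which numbers categories in order of first appearance. This is just one option; scikit-learn's `LabelEncoder`, for instance, numbers categories in sorted order, so its codes would differ from the table:

```python
import pandas as pd

locations = pd.Series(["New York", "California", "Texas", "California", "Texas"])

# factorize() assigns 0, 1, 2, ... in order of first appearance;
# adding 1 reproduces the codes used in the table above
codes, categories = pd.factorize(locations)
encoded = codes + 1

print(list(categories))
print(encoded.tolist())
```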

- Ordinal encoding: Ordinal encoding is similar to label encoding but is specifically used when categorical values have a meaningful order. Instead of assigning arbitrary numerical values, it maps categories based on their ranking. This ensures that higher values correspond to higher-ranked categories.

Consider an Education level column with the following categories:

| Education level | Encoded value |
| --- | --- |
| UG (Undergraduate) | 1 |
| PG (Postgraduate) | 2 |
| PhD | 3 |

Since a PhD represents a higher level of education than a PG, which in turn is higher than UG, the assigned numerical values reflect this ranking.
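
A minimal way to apply such a ranking is an explicit mapping, so the order is stated by you rather than inferred from the data. The sample values below are hypothetical:

```python
import pandas as pd

education = pd.Series(["PG", "UG", "PhD", "UG"])

# The ranking is written out explicitly: UG < PG < PhD
order = {"UG": 1, "PG": 2, "PhD": 3}
encoded = education.map(order)

print(encoded.tolist())
```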

- Target encoding: Replaces each categorical value with the mean of its corresponding target variable values. The target variable is the dependent variable the model is trying to predict. This technique is particularly useful when dealing with high-cardinality categorical features (i.e., those with many unique values), as it helps reduce dimensionality while retaining relevant information.

Consider a dataset where Location is a categorical feature, and the Target variable represents some numerical outcome:

| Location | Target variable |
| --- | --- |
| New York | 2 |
| California | 3 |
| Texas | 5 |
| California | 1 |
| Texas | 4 |

To encode the Location column, we calculate the mean of the Target variable for each unique category:

- California: (3 + 1) / 2 = 2

- Texas: (5 + 4) / 2 = 4.5

- New York: Only one value (2), so it remains 2

| Location | Encoded value |
| --- | --- |
| New York | 2 |
| California | 2 |
| Texas | 4.5 |
| California | 2 |
| Texas | 4.5 |
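
The same calculation can be sketched in pandas with a group-wise mean. The column name `Location_encoded` is an illustrative choice:

```python
import pandas as pd

df = pd.DataFrame({
    "Location": ["New York", "California", "Texas", "California", "Texas"],
    "Target": [2, 3, 5, 1, 4],
})

# Mean of the target for each category
category_means = df.groupby("Location")["Target"].mean()

# Replace each category with its mean target value
df["Location_encoded"] = df["Location"].map(category_means)

print(df)
```

In practice, the category means are usually computed on the training split only and then applied to validation and test data, so that target values from held-out rows do not leak into the features.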

If you’re looking for a broader guide on handling categorical data, this Categorical Data Handling Tutorial provides additional insights.

Feature scaling

Feature scaling ensures that numerical features lie within a standardized range, preventing some features from dominating the learning process due to their larger values.

Machine learning models that rely on distance calculations or gradient-based optimization (e.g., k-nearest neighbors, regularized linear regression, and neural networks) can be affected when features have vastly different scales.

For example, consider an employee dataset with the following features:

- Age ranges from 20 to 60

- Income ranges from $30,000 to $150,000

Since income has much larger values than age, a model might assign more importance to income simply because of its scale, not because it is actually more relevant.

Here are some common techniques:

- Normalization (min-max scaling): This method scales all feature values to fall within 0 and 1. It subtracts the minimum value of the column from each data point and then divides it by the range of that column, which is the difference between the maximum and minimum values. The formula looks like this:

Scaled value = (datapoint - min(column)) / (max(column) - min(column))

- Standardization (Z-score scaling): This transforms all features to have a mean of 0 and a standard deviation of 1. The mean of a column is subtracted from each data point of that column, and the result is divided by the standard deviation of that column.

Scaled value = (datapoint - mean(column)) / std(column)
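
Both formulas translate directly into pandas. The sketch below uses a hypothetical four-row version of the age and income example; `ddof=0` (population standard deviation) is an assumption chosen to match what scikit-learn's `StandardScaler` computes:

```python
import pandas as pd

df = pd.DataFrame({
    "age": [20, 30, 40, 60],
    "income": [30_000, 60_000, 90_000, 150_000],
})

# Normalization (min-max scaling): every column ends up in [0, 1]
min_max = (df - df.min()) / (df.max() - df.min())

# Standardization (z-score): every column ends up with mean 0 and std 1
z_score = (df - df.mean()) / df.std(ddof=0)

print(min_max)
print(z_score)
```

After either transformation, age and income are on comparable scales, so neither dominates simply because of its units.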

For a detailed comparison of normalization vs. standardization, check out this Normalization vs. Standardization Guide.

Creating new features

Creating new, meaningful features from existing data gives the model more direct signal to learn from.

For instance, in a house price prediction dataset, if you have the length and breadth columns separately, you can derive a new feature: area = length * breadth, which may directly relate to the target variable, price. Feeding this area feature to the model makes the underlying pattern easier to learn.
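
In pandas, a derived feature like this is a single column operation. The values below are hypothetical:

```python
import pandas as pd

houses = pd.DataFrame({
    "length": [10.0, 12.0, 8.0],
    "breadth": [8.0, 9.0, 7.5],
})

# Derive the new feature from the two existing columns
houses["area"] = houses["length"] * houses["breadth"]

print(houses)
```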

Feature selection

Feature selection keeps only relevant features by removing unnecessary columns. Focusing on the most informative data helps prevent overfitting, reduce computational complexity, and improve model performance. Here are some techniques:

- Filter methods: This method selects important features based on their statistical properties. For instance, we can remove features that carry the same information using a correlation heatmap. Other techniques include Chi-square test, ANOVA, and Information Gain methods.

- Wrapper methods: These methods train a predictive model iteratively using different combinations of feature subsets and choose the subset that gives the best model performance. Forward selection, backward elimination, and recursive feature elimination fall under this category.
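
To make the two families concrete, here is a small sketch on synthetic data. The filter step drops one feature from each highly correlated pair, and the wrapper step runs a simple forward selection with a least-squares model scored on a hold-out split. The column names, the 0.95 correlation cutoff, and the 5% improvement threshold are all assumptions made for illustration:

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(0)
n = 200
X = pd.DataFrame({
    "area": rng.normal(100, 20, n),
    "rooms": rng.integers(1, 6, n).astype(float),
    "noise": rng.normal(0, 1, n),
})
X["area_sqft"] = X["area"] * 10.764  # same information as "area", different unit
y = 3.0 * X["area"] + 20.0 * X["rooms"] + rng.normal(0, 5, n)

# Filter method: drop one feature from every pair with |correlation| > 0.95
corr = X.corr().abs()
upper = corr.where(np.triu(np.ones(corr.shape, dtype=bool), k=1))
to_drop = [col for col in upper.columns if (upper[col] > 0.95).any()]
X_filtered = X.drop(columns=to_drop)

def holdout_mse(features):
    """Fit least squares on the first 150 rows, score on the last 50."""
    A = np.column_stack([np.ones(n)] + [X_filtered[f].to_numpy() for f in features])
    coef, *_ = np.linalg.lstsq(A[:150], y.to_numpy()[:150], rcond=None)
    return float(np.mean((A[150:] @ coef - y.to_numpy()[150:]) ** 2))

# Wrapper method: forward selection, adding the most helpful feature each round
selected, remaining = [], list(X_filtered.columns)
best_mse = holdout_mse(selected)  # intercept-only baseline
while remaining:
    scores = {f: holdout_mse(selected + [f]) for f in remaining}
    candidate = min(scores, key=scores.get)
    if scores[candidate] >= best_mse * 0.95:  # stop when the gain is under 5%
        break
    selected.append(candidate)
    remaining.remove(candidate)
    best_mse = scores[candidate]

print("Dropped by filter:", to_drop)
print("Selected by wrapper:", selected)
```

The filter step never trains a model, which makes it cheap, while the wrapper step refits the model for every candidate subset and is therefore slower but tied directly to predictive performance.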

Feature Engineering in Python: A Practical Example

Feature engineering is best understood through hands-on implementation.

The Kaggle house price prediction dataset is a real-world dataset with 81 columns. I have selected it for its diverse range of features, which can help you better understand feature engineering techniques in practice.

Getting started:

- Download the dataset from Kaggle.

- Load it into a Pandas DataFrame for analysis and feature engineering.

Handling categorical missing values

The following code identifies categorical columns in the dataset and replaces their missing values with the most frequent category:

```python
import pandas as pd

# Load dataset (replace 'your_file.csv' with the actual file name)
df = pd.read_csv('your_file.csv')

# Select categorical columns
categorical_cols = df.select_dtypes(include=['object']).columns

# Replace missing values with the most frequent category (mode)
for col in categorical_cols:
    mode = df[col].mode()[0]  # Get the most common value
    # Assign the result back; chained fillna(..., inplace=True) is deprecated in recent pandas
    df[col] = df[col].fillna(mode)
```

Handling numeric missing values

We handle numerical missing values by replacing them with either the mean or the median. The mean is a common choice for roughly symmetric data, while the median works better when the column has outliers. So, we will check for outliers and then decide on the method.

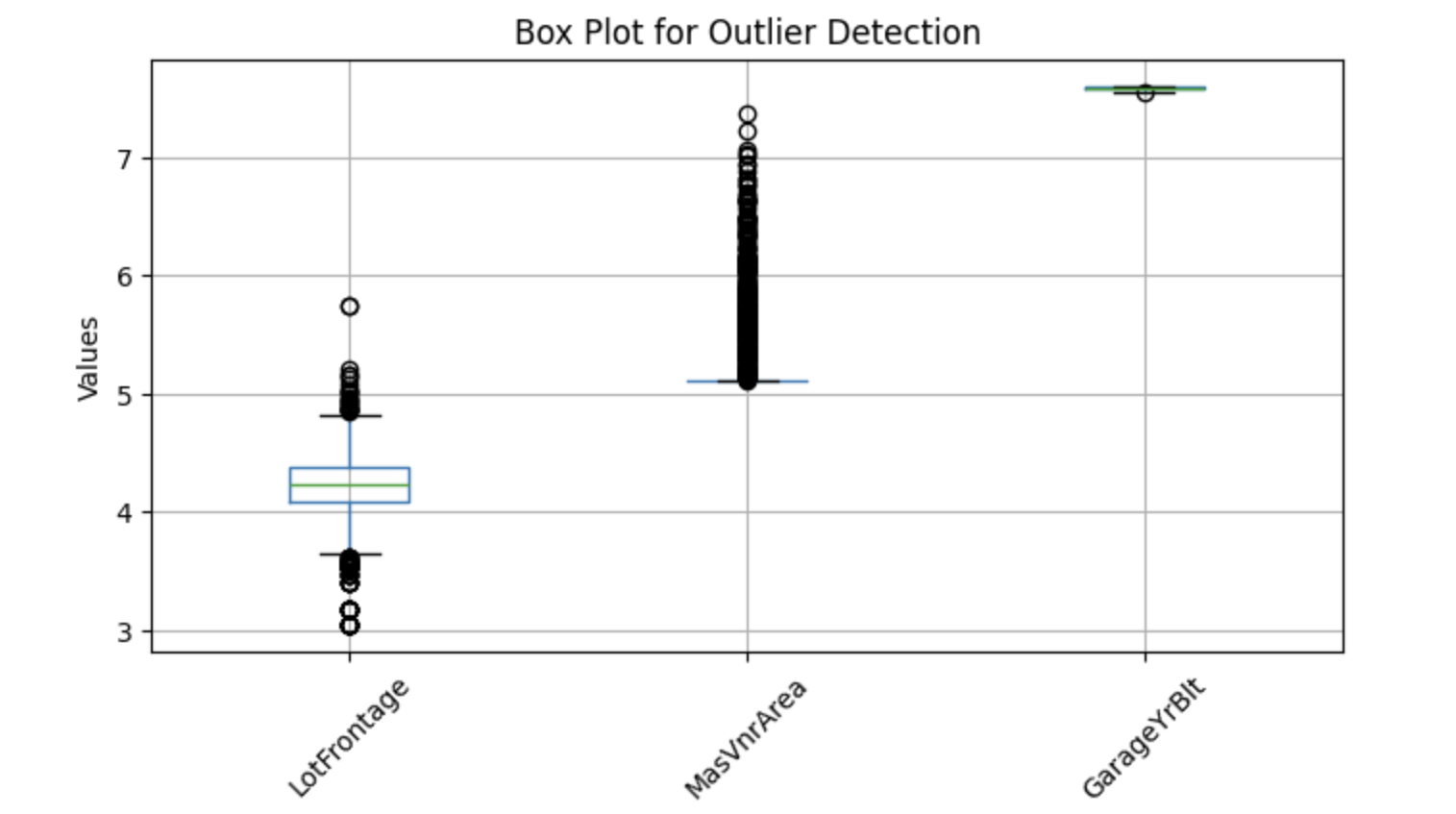

To visualize potential outliers, we can use box plots, which help identify extreme values. Below is a Python implementation for detecting outliers in selected numerical columns:

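```python
import pandas as pd
import matplotlib.pyplot as plt
import numpy as np

features = ['LotFrontage', 'MasVnrArea', 'GarageYrBlt']

# Log-transform a copy for plotting only, so df itself stays unchanged.
# log1p, i.e. log(1 + x), is used so that zero values do not produce -inf.
log_features = np.log1p(df[features])

# Plot box plots
log_features.boxplot(figsize=(8, 4))
plt.title('Box Plot for Outlier Detection')
plt.ylabel('log(1 + value)')
plt.xticks(rotation=45)
plt.show()
```

Output: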

The box plots above show points outside the whiskers; these are outliers. Because the median is less sensitive to outliers than the mean, let's replace missing values with the median.

Code to replace nulls with median values:

```python
import pandas as pd

# Select numerical columns
numerical_columns = df.select_dtypes(include=['number']).columns

for col in numerical_columns:
    median = df[col].median()
    df[col] = df[col].fillna(median)  # Replace nulls with median
```

Creating new features

Columns like YearBuilt, YearRemodAdd, GarageYrBlt, and YrSold contain absolute years (e.g., 2001, 1976). These raw values are not very informative for predicting house prices on their own, but we can derive more useful features by calculating how old the house or renovation is at the time of sale.

For example, instead of using YearBuilt, we can create a new feature: House Age = YrSold - YearBuilt

Code to create these new features:

```python
# Get columns that contain 'Yr' or 'Year', excluding YrSold itself (the reference year)
year_columns = [
    feature for feature in numerical_columns
    if ('Yr' in feature or 'Year' in feature) and feature != 'YrSold'
]

# Convert year values into age-related features
for col in year_columns:
    df[col] = df['YrSold'] - df[col]
```

Feature transformation

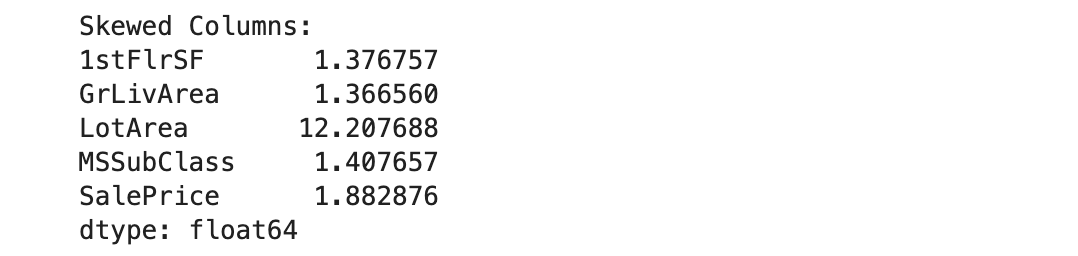

In machine learning, skewed numerical features can negatively impact model performance, especially for linear models such as linear regression, which tend to fit better when features and the target are roughly symmetric. To correct this, we apply a log transformation.

Before applying a log transformation, we must identify skewed features. However, we exclude columns containing zeros since the logarithm of zero is undefined.

Here’s an implementation in Python to identify skewed columns:

```python
import pandas as pd

# Get numerical columns
numerical_columns = df.select_dtypes(include=['number']).columns

# Identify columns containing zeros
numerical_0s = df.loc[:, (df == 0).any()].select_dtypes(include=['number']).columns

# Remove columns that contain zeros from consideration
numerical_columns = numerical_columns.difference(numerical_0s)

# Calculate skewness for the remaining numerical columns
skewness = df[numerical_columns].skew()

# Set threshold for skewness (e.g., absolute value > 1 indicates high skewness)
skewed_columns = skewness[abs(skewness) > 1]

# Display skewed columns
print("Skewed Columns:")
print(skewed_columns)
```

Output:

We will apply a log transformation to bring these five skewed columns closer to a Gaussian distribution:

```python
import numpy as np

# The list of highly skewed features identified earlier
skew_features = ['LotFrontage', 'LotArea', '1stFlrSF', 'GrLivArea', 'SalePrice']

# Apply log transformation to each skewed feature
for col in skew_features:
    df[col] = np.log(df[col])
```

Convert categorical features to numerical values

We previously discussed several encoding techniques; in this example, we will apply a target-guided ordinal encoding, a variant of target encoding that ranks each category by its mean target value.

```python
# Select categorical variables
categorical_columns = df.select_dtypes(include=['object', 'category']).columns

# Encode each categorical column using the target variable
for col in categorical_columns:
    # Order the categories by their mean SalePrice (lowest first)
    labels_ordered = df.groupby(col)['SalePrice'].mean().sort_values().index

    # Assign each category its rank in that ordering (0, 1, 2, ...)
    labels_ordered = {x: i for i, x in enumerate(labels_ordered, 0)}

    # Map the ranks back to the dataframe
    df[col] = df[col].map(labels_ordered)
```

In the code above, the target variable is SalePrice, so we grouped the data by each categorical column and calculated the mean SalePrice for each group. Each category was then replaced by its rank when the categories are sorted by mean SalePrice, so categories associated with higher prices receive larger values.

Our dataset is now ready for machine learning!

If you want to strengthen your understanding of supervised learning concepts and how models utilize engineered features, this Supervised Learning with Scikit-Learn Course is an excellent resource.

Tools and Libraries for Feature Engineering

In this section, we’ll go through the most used Python libraries and automation tools to implement feature engineering.

Pandas

Pandas is the most widely used Python library for handling structured data. It supports many feature engineering steps, such as transformation, data aggregation, and feature extraction. Pandas also makes data cleaning and manipulation easy.

If you're new to pandas, this Data Manipulation with pandas Course is a great starting point.

Scikit-Learn

Scikit-learn is a powerful machine-learning library with various tools for feature engineering. It contains classes like OneHotEncoder and LabelEncoder to convert categorical variables to numerical ones. It also offers feature scaling classes like StandardScaler and MinMaxScaler.
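
As a brief sketch of how these classes are typically used, the snippet below scales a hypothetical age column and one-hot encodes a hypothetical gender column. It calls `.toarray()` on the encoder output because `OneHotEncoder` returns a sparse matrix by default:

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler, OneHotEncoder, StandardScaler

# scikit-learn transformers expect 2-D input: one row per sample
ages = np.array([[20.0], [30.0], [40.0], [60.0]])

standardized = StandardScaler().fit_transform(ages)  # mean 0, std 1
normalized = MinMaxScaler().fit_transform(ages)      # range [0, 1]

genders = np.array([["Male"], ["Female"], ["Female"]])
encoder = OneHotEncoder()
one_hot = encoder.fit_transform(genders).toarray()   # dense 0/1 matrix

print(normalized.ravel())
print(encoder.categories_)
print(one_hot)
```

Each transformer learns its parameters in `fit` and applies them in `transform`, so the same fitted object can be reused on new data.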

Feature-Engine

Feature-engine is an open-source Python library offering a variety of transformers to simplify feature engineering. These transformers are specialized tools for specific tasks, such as missing data imputation, outlier handling, feature selection, and discretization. Fully compatible with scikit-learn, these transformers can be passed as input parameters for hyperparameter tuning.

Automated feature engineering tools

- Featuretools: Featuretools is an open-source library for automating feature engineering. The framework is primarily used to create new features from a relational database. It relies on the DFS (deep feature synthesis) algorithm, which builds new features based on transformation and aggregation operations.

- TSFresh: TSFresh, known as Time Series Feature Extraction based on Scalable Hypothesis Tests, is specially designed to extract meaningful features from time series data. The library conducts hypothesis testing to select statistically significant features for the prediction.

- Autofeat: The Autofeat library automates feature selection, creation, and transformation to improve linear model accuracy. For example, instead of fit(), the library offers a fit_transform() method that simultaneously performs fit and transform operations on the input data. Moreover, FeatureSelector and AutoFeatLight models are available for feature selection and scaling.

Best Practices for Feature Engineering

To effectively implement feature engineering, focus on these best practices.

Know your data

Understanding the meaning and significance of each feature makes it much easier for you to perform techniques like feature selection or extraction. I suggest you research your data and relevant domain knowledge for effective feature engineering.

Conduct exploratory data analysis (EDA)

Utilize Python libraries like Pandas and Matplotlib to conduct comprehensive exploratory data analysis, such as exploring statistical information, visualizations, and correlations for finding patterns and potential relationships within the data.
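
A first EDA pass often needs only a few pandas calls. The sketch below uses a hypothetical housing DataFrame to show summary statistics, a missing-value count, and correlations with the target:

```python
import pandas as pd

df = pd.DataFrame({
    "area": [80.0, 108.0, 60.0, 95.0, 120.0],
    "rooms": [3, 4, 2, 3, 5],
    "price": [200.0, 270.0, 150.0, 240.0, 310.0],
})

summary = df.describe()      # count, mean, std, min, quartiles, max per column
missing = df.isna().sum()    # number of missing values per column

# Correlation of every column with the target, strongest first
corr_with_price = df.corr()["price"].sort_values(ascending=False)

print(summary)
print(missing)
print(corr_with_price)
```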

Create interaction features

Creating interaction features involves identifying relationships between existing features and deriving new ones. For instance, in house price prediction, calculating a house's age by subtracting the year it was built from the current year can highlight trends, such as prices decreasing as a house gets older.

Choose your model ahead of time

Different machine learning models require different steps of feature engineering. For instance, models like linear or multiple regression, SVM, and KNN often benefit from feature standardization, but this technique doesn't help tree-based models.

So, deciding on your model ahead of time can help you build an effective feature engineering pipeline for your use case.

Conclusion

Feature engineering is an integral part of building machine learning solutions, allowing you to leverage features in the most efficient way. The process is carried out by data scientists or ML engineers when dealing with any dataset. If you’re a data professional or aiming to become one, mastering all the techniques mentioned in this article will help you advance your career!

To explore these techniques in more detail, check out the DataCamp courses on feature engineering for machine learning and feature engineering for NLP. There’s also a course on feature engineering for R programmers.


FAQs

How is feature engineering different from feature selection?

Feature engineering involves creating new features or transforming existing ones to improve model performance. Feature selection, on the other hand, is the process of choosing the most relevant features and discarding irrelevant or redundant ones to prevent overfitting and reduce model complexity.

Can feature engineering be automated?

Yes! Automated Feature Engineering tools like FeatureTools, AutoML libraries (e.g., Auto-sklearn, H2O.ai), and Google’s AutoML Tables can automatically create and transform features, saving time and effort. However, domain knowledge is still crucial for interpreting and selecting the best features.

How does feature engineering affect model interpretability?

Feature engineering can improve or reduce interpretability, depending on the techniques used. For example:

- Creating meaningful features (e.g., "House Age" instead of "YearBuilt") improves interpretability.

- Applying transformations like PCA (Principal Component Analysis) can make features less interpretable but improve model performance.

Does feature engineering depend on the type of machine learning model?

Yes! Different models benefit from different feature engineering techniques:

- Linear models (e.g., Linear Regression, Logistic Regression) – Require feature scaling and often benefit from polynomial feature transformations.

- Tree-based models (e.g., Decision Trees, Random Forest, XGBoost) – Handle unscaled data well and often benefit more from feature selection than transformations.

- Deep learning models – Prefer raw features, and transformations like embedding layers help with categorical data.

What is feature crossing, and when should I use it?

Feature crossing is the process of combining two or more features to create a new feature that captures interactions between them. Example:

- Instead of using "Age" and "Income" separately, create "Income-to-Age Ratio" to capture financial stability across age groups.

- Use feature crossing when relationships between variables impact the target variable non-linearly.

How can I evaluate whether a feature improves model performance?

You can evaluate feature importance using:

- Permutation Importance – Measures how shuffling a feature affects model accuracy.

- Feature Importance in Tree-Based Models – Many models like Random Forests provide built-in feature importance scores.

- Cross-validation performance – Compare model accuracy with and without a feature.
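
To show the idea behind permutation importance, here is a hand-rolled sketch using NumPy and a least-squares model on synthetic data. It is not the scikit-learn implementation; the data, the train/test split, and the helper names `fit` and `mse` are assumptions made for the example:

```python
import numpy as np

rng = np.random.default_rng(42)
n = 500
X = rng.normal(size=(n, 2))  # column 0 is informative, column 1 is pure noise
y = 4.0 * X[:, 0] + rng.normal(scale=0.5, size=n)

def fit(X_train, y_train):
    """Ordinary least squares with an intercept term."""
    A = np.column_stack([np.ones(len(X_train)), X_train])
    coef, *_ = np.linalg.lstsq(A, y_train, rcond=None)
    return coef

def mse(coef, X_eval, y_eval):
    """Mean squared error of the fitted model on the given data."""
    A = np.column_stack([np.ones(len(X_eval)), X_eval])
    return float(np.mean((A @ coef - y_eval) ** 2))

X_train, X_test = X[:400], X[400:]
y_train, y_test = y[:400], y[400:]

coef = fit(X_train, y_train)
baseline = mse(coef, X_test, y_test)

# Shuffle one column at a time and record how much the test error grows
importance = {}
for j in range(X.shape[1]):
    X_perm = X_test.copy()
    X_perm[:, j] = rng.permutation(X_perm[:, j])
    importance[j] = mse(coef, X_perm, y_test) - baseline

print(importance)
```

Shuffling the informative column should raise the error sharply, while shuffling the noise column should leave it almost unchanged.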

What is interaction feature engineering?

Interaction feature engineering involves creating new features based on interactions between existing features. This can include:

- Multiplication (Product Features): Combining two features (e.g., "Length × Width" for area).

- Ratios: Dividing one feature by another (e.g., "Price per Square Foot").

- Polynomial Features: Raising features to a power (e.g., "Age²" for non-linear relationships).
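
Each of these interaction types is a one-line column operation in pandas. The column names and values below are hypothetical:

```python
import pandas as pd

df = pd.DataFrame({
    "length": [10.0, 12.0],
    "width": [8.0, 9.0],
    "price": [200_000.0, 270_000.0],
    "age": [30.0, 45.0],
})

df["area"] = df["length"] * df["width"]          # product feature
df["price_per_area"] = df["price"] / df["area"]  # ratio feature
df["age_squared"] = df["age"] ** 2               # polynomial feature

print(df)
```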

How do you handle categorical variables with high cardinality?

For categorical variables with many unique values (e.g., zip codes, user IDs):

- Target Encoding: Replace categories with the mean of the target variable.

- Embedding Layers (for Deep Learning): Learn lower-dimensional representations of categories.

- Hashing Encoding: Assign categories to buckets using a hashing function.
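
Hashing encoding can be sketched with the standard library alone. The helper `hash_bucket` and the choice of 8 buckets are hypothetical; a stable hash such as MD5 is used because Python's built-in `hash()` for strings changes between runs:

```python
import hashlib

def hash_bucket(value: str, n_buckets: int = 8) -> int:
    """Map a category to one of n_buckets using a stable hash."""
    digest = hashlib.md5(value.encode("utf-8")).hexdigest()
    return int(digest, 16) % n_buckets

zip_codes = ["10001", "94105", "73301", "10001"]
buckets = [hash_bucket(z) for z in zip_codes]

print(buckets)
```

The same category always lands in the same bucket, and the number of output values is fixed regardless of how many distinct categories appear. The trade-off is that different categories can collide in one bucket.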

Srujana is a freelance tech writer with a four-year degree in Computer Science. Writing about various topics, including data science, cloud computing, development, programming, security, and many others, comes naturally to her. She has a love for classic literature and exploring new destinations.