Traditionally, data warehouses have been the go-to solution for structured data and business intelligence. However, with the rise of big data, AI, and machine learning, a newer architecture—the data lakehouse—has emerged, combining the strengths of both data warehouses and data lakes.

In this guide, we’ll explore:

- What data warehouses and data lakehouses are, and how they differ.

- Key features, advantages, and challenges of each architecture.

- Real-world use cases where one may be preferable over the other.

- When to use a hybrid approach that leverages the best of both worlds.

Let’s dive in!

What Is a Data Warehouse?

A data warehouse is a centralized system that stores, organizes, and analyzes data for business intelligence (BI), reporting, and analytics. It integrates structured data from multiple sources and follows a highly organized schema, ensuring consistency and reliability. Data warehouses play a central role in helping businesses make data-driven decisions efficiently.

Features

- Schema-on-write: Data is transformed and structured before loading, adhering to a predefined schema for optimized querying.

- High performance: Optimized for complex queries, allowing fast aggregations, joins, and analytics.

- ACID compliance: Ensures reliable, consistent, and accurate data for mission-critical applications.

- Historical data management: Stores years of data for trend analysis, forecasting, and compliance.

- Data integration: Combines data from multiple sources (ERP, CRM, transactional databases) into a unified repository.

- Security and governance: Provides role-based access control (RBAC), data encryption, and compliance features for enterprise security.
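
To make schema-on-write and ACID behavior concrete, here is a minimal sketch that uses Python's built-in `sqlite3` module as a stand-in for a warehouse. The `sales` table, its columns, and the `load_batch` helper are hypothetical names chosen for illustration, and a real warehouse would enforce its schema through its own DDL and loading tools.

```python
import sqlite3

# In-memory SQLite stands in for a warehouse; table and column names are hypothetical.
conn = sqlite3.connect(":memory:")
conn.execute(
    """
    CREATE TABLE sales (
        order_id   INTEGER PRIMARY KEY,
        store      TEXT NOT NULL,
        amount_usd REAL NOT NULL CHECK (amount_usd >= 0)
    )
    """
)


def load_batch(rows):
    """Load all rows in one transaction; reject the whole batch on any violation."""
    try:
        with conn:  # commits on success, rolls back if an exception is raised
            conn.executemany(
                "INSERT INTO sales (order_id, store, amount_usd) VALUES (?, ?, ?)",
                rows,
            )
        return True
    except sqlite3.IntegrityError:
        return False


ok = load_batch([(1, "Berlin", 19.99), (2, "Madrid", 5.00)])
# The second row breaks the NOT NULL rule, so the whole batch is rolled back.
rejected = load_batch([(3, "Paris", 12.50), (4, None, 7.00)])

row_count = conn.execute("SELECT COUNT(*) FROM sales").fetchone()[0]
print(ok, rejected, row_count)
```

The schema is checked at load time, so invalid data never reaches the table, and the transaction ensures a failed batch leaves no partial rows behind.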

Use cases

- Financial reporting and regulatory compliance: Ensures accurate, auditable records for regulatory requirements like SOX, HIPAA, and GDPR.

- Business intelligence dashboards: Powers real-time and historical BI dashboards for data-driven decision-making.

- Operational reporting: Supports predefined, structured queries for day-to-day business operations.

- Customer analytics: Enables customer segmentation, behavior analysis, and churn prediction using structured datasets.

- Supply chain and logistics: Optimizes inventory management, demand forecasting, and operational efficiency with historical trends.

Examples of tools

- Snowflake: A cloud-native data warehouse known for its scalability and ease of use.

- Amazon Redshift: AWS's data warehousing service, offering fast query performance and integration with other AWS tools.

- Google BigQuery: A serverless, highly scalable data warehouse designed for analytics.

What Is a Data Lakehouse?

A data lakehouse is a modern data architecture that combines the scalability and flexibility of a data lake with the structured performance and reliability of a data warehouse. It allows organizations to store, manage, and analyze structured, semi-structured, and unstructured data in a single system.

Features

- Schema-on-read and schema-on-write: Supports raw data ingestion for flexibility and structured datasets for traditional analytics.

- Diverse data types: Handles structured (databases), semi-structured (JSON, XML), and unstructured (images, videos) data.

- Optimized for modern workloads: Built for analytics, AI, machine learning, and streaming data ingestion.

- Unified storage: Combines the scalability of data lakes with the performance of data warehouses.

- Cost-efficiency: Reduces operational costs by consolidating storage and processing.

- Built-in governance and security: Provides fine-grained access control, auditing, and compliance features to ensure data integrity and privacy.
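
As a rough illustration of schema-on-read, the sketch below keeps raw events as untyped JSON lines and applies a schema only when the data is read. The event fields and the `read_purchases` helper are assumptions made for this example. A lakehouse engine would do this at scale over files in object storage.

```python
import json

# Raw events land as JSON lines with no enforced schema (hypothetical data).
RAW_EVENTS = """\
{"user": "a1", "event": "click", "ts": 1700000000, "page": "/home"}
{"user": "b2", "event": "purchase", "ts": 1700000060, "amount": "42.50"}
{"user": "c3", "event": "purchase", "amount": 10}
"""


def read_purchases(raw_text):
    """Apply a schema at read time: filter, select fields, and cast types."""
    for line in raw_text.splitlines():
        record = json.loads(line)
        if record.get("event") != "purchase":
            continue
        yield {
            "user": str(record["user"]),
            "amount": float(record.get("amount", 0.0)),
            "ts": record.get("ts"),  # None when the raw record lacks a timestamp
        }


purchases = list(read_purchases(RAW_EVENTS))
print(purchases)
```

Because nothing is rejected at ingestion, records with missing or inconsistently typed fields are still stored. The reader decides how to interpret them.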

Use cases

- Big data analytics: Stores and processes vast amounts of structured and unstructured data for large-scale analysis.

- AI and machine learning pipelines: Enables feature engineering, model training, and inference with flexible data ingestion.

- Real-time data processing: Supports streaming analytics for fraud detection, recommendation systems, and IoT applications.

- Enterprise data consolidation: Unifies operational and analytical workloads, reducing data duplication and complexity.

Examples of tools

- Databricks: A unified analytics platform known for implementing the lakehouse architecture with Delta Lake as its foundation.

- Delta Lake: An open-source storage layer that provides reliability and performance enhancements to data lakes.

- Apache Iceberg: An open table format designed for very large analytic tables on data lakes, which multiple engines (such as Spark, Trino, and Flink) can read and write.

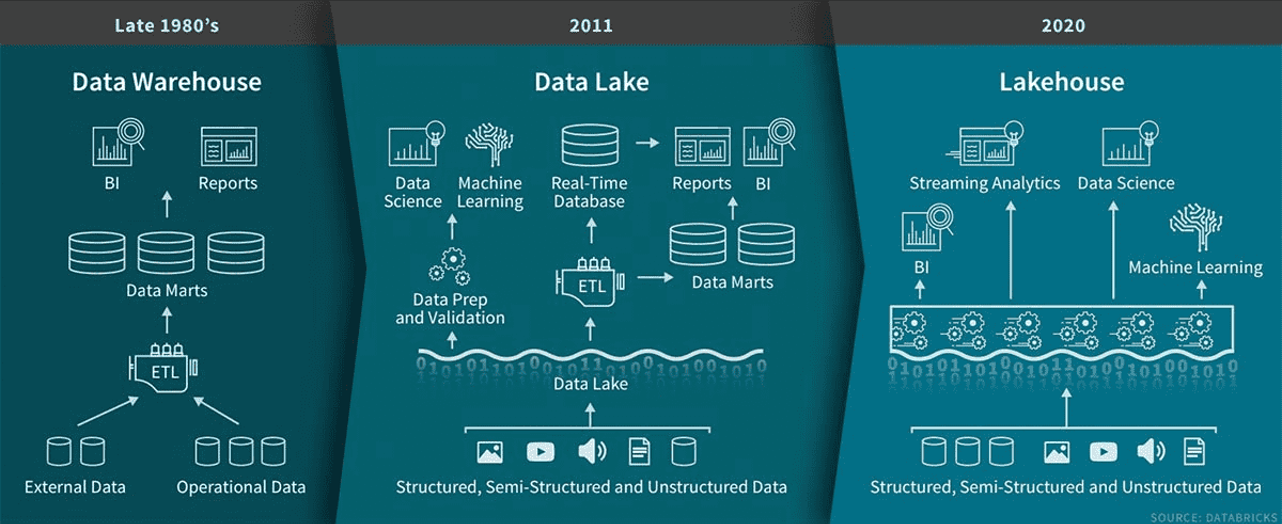

The evolution of data architecture from data warehouses in the late 1980s to data lakes in 2011 and finally to lakehouses in 2020. Image source: Databricks.

Differences Between Data Lakehouses and Data Warehouses

Understanding the key differences between data lakehouses and data warehouses can help determine which solution best fits your needs. Below is a breakdown of their core distinctions.

Data types supported

Data lakehouses handle a wide range of data types, making them suitable for varied workloads. They support:

- Structured data: Sales transactions, relational databases.

- Semi-structured data: JSON user profiles, sensor readings.

- Unstructured data: IoT logs, images, audio files.

Data warehouses primarily store structured and some semi-structured data, making them better suited for traditional business processes like financial reporting and analytics.

Example: A retail company using a data lakehouse can analyze clickstream logs, social media data, and transaction records to assess customer sentiment.

Cost efficiency

Data lakehouses leverage cost-effective cloud storage (e.g., Amazon S3, Azure Data Lake Storage) and support schema-on-read, reducing ETL preprocessing costs.

Data warehouses are typically more expensive due to structured storage, ETL processing, and proprietary formats.

Example: A startup needing low-cost storage for raw and processed data may find a data lakehouse more affordable than a data warehouse.

Performance

Data lakehouses support both real-time and batch processing, making them well suited to big data analytics and machine learning. Their distributed architecture enables parallel processing of large datasets.

Data warehouses excel at SQL-based analytical queries, providing fast, consistent performance for structured data.

Example: A financial institution running real-time fraud detection may benefit from a data lakehouse’s ability to process streaming data.
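
To give a feel for what processing streaming data means in the fraud detection example, here is a simplified sliding-window check in plain Python. The window size, threshold, and `flag_bursts` function are hypothetical. A production system would typically run similar logic in a streaming engine rather than in a single process.

```python
from collections import defaultdict, deque

WINDOW_SECONDS = 60  # hypothetical window length
MAX_TXNS = 3         # hypothetical threshold per card within the window


def flag_bursts(events):
    """Yield (card, ts) whenever a card exceeds MAX_TXNS within the window.

    Assumes events arrive ordered by timestamp.
    """
    recent = defaultdict(deque)
    for card, ts in events:
        window = recent[card]
        window.append(ts)
        # Drop timestamps that have fallen out of the window.
        while window and ts - window[0] >= WINDOW_SECONDS:
            window.popleft()
        if len(window) > MAX_TXNS:
            yield card, ts


stream = [("c1", 0), ("c1", 10), ("c2", 15), ("c1", 20), ("c1", 30), ("c1", 100)]
alerts = list(flag_bursts(stream))
print(alerts)
```

Each event is evaluated as it arrives instead of waiting for a nightly batch, which is the property that matters for time-sensitive use cases.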

Integration with machine learning

Data lakehouses integrate natively with ML tools like TensorFlow, PyTorch, and Databricks ML, allowing direct model training on large datasets.

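Data warehouses have traditionally offered more limited ML support and often require exporting data to external systems for model training, although some now provide in-database ML (for example, BigQuery ML).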

Example: A tech company using Databricks can develop AI-powered recommendation systems directly within a data lakehouse.

Scalability

Data lakehouses scale to petabytes of data while supporting multi-engine processing.

Data warehouses scale well for structured data but struggle with massive unstructured datasets.

Example: A telecom provider can scale a lakehouse to process billions of call records daily, while a traditional warehouse may struggle with IoT logs.

Types of users

Data lakehouses serve data scientists, analysts, and engineers working with real-time analytics, ML pipelines, and exploratory analysis.

Data warehouses cater primarily to business analysts and executives who rely on preprocessed, structured data for reporting and dashboards.

Example: A marketing team might use a data warehouse for BI dashboards, while a data science team prefers a data lakehouse for predictive modeling.

Data Lakehouses vs Data Warehouses: A Summary

Here’s a detailed comparison table for data lakehouses vs. data warehouses, with additional technical details beyond those discussed above:

| Feature | Data Lakehouse | Data Warehouse |
| --- | --- | --- |
| Data Types Supported | Structured, semi-structured, and unstructured (JSON, images, videos, IoT logs) | Primarily structured, with limited semi-structured support (JSON, XML) |
| Storage Format | Open formats (Parquet, ORC, Delta, Iceberg) | Proprietary structured formats |
| Schema Management | Schema-on-read & schema-on-write (flexible) | Schema-on-write (strict) |
| Query Performance | Optimized for both batch and real-time queries | Optimized for structured SQL queries |
| Processing Engine | Supports multiple engines (Spark, Presto, Trino, Dremio) | SQL-based engines (Snowflake, Redshift, BigQuery) |
| Cost Efficiency | Lower cost due to cheap cloud object storage and less preprocessing | Higher costs due to ETL, structured storage, and proprietary formats |
| Scalability | Scales easily with diverse workloads (structured and unstructured) | Scales well for structured data but struggles with massive unstructured datasets |
| Machine Learning (ML) Support | Built-in ML integration with TensorFlow, PyTorch, and Databricks ML | Limited ML integration, often requires data export |
| Real-Time Data Streaming | Supports real-time ingestion and analytics (Kafka, Spark Streaming) | Limited real-time support, mainly batch processing |
| Best For | AI/ML workloads, real-time analytics, big data, IoT | Business intelligence, reporting, structured analytics |
| Security & Governance | Advanced security, access control, and auditing | Strong security and compliance controls for structured data |
| Example Use Cases | Fraud detection, recommendation systems, IoT analytics, AI model training | Financial reporting, operational dashboards, regulatory compliance |
| Popular Tools & Platforms | Databricks, Snowflake (with Iceberg/Delta), Apache Hudi, Google BigLake | Amazon Redshift, Google BigQuery, Snowflake, Microsoft Synapse |

Pros and Cons of Data Warehouses vs. Data Lakehouses

In this section, we break down each architecture's key advantages and disadvantages to provide a balanced view.

Pros and cons of data warehouses

| Pros ✅ | Cons ❌ |
| --- | --- |
| Optimized for structured data – Provides high performance for SQL-based queries and analytics. | Limited support for unstructured data – Struggles with formats like images, videos, IoT logs. |
| Fast query performance – Designed for aggregations, joins, and complex queries with indexing and compression. | High storage and compute costs – Expensive compared to cloud-based object storage solutions. |
| ACID compliance – Ensures data integrity, reliability, and consistency, which is crucial for financial and regulatory applications. | Rigid schema-on-write approach – Data must be cleaned and structured before ingestion, increasing ETL complexity. |
| Great for BI and reporting – Works seamlessly with Power BI, Tableau, Looker, enabling real-time dashboards. | Not ideal for machine learning – ML workflows require data export to external platforms for preprocessing. |
| Highly secure and governed – Strong RBAC, encryption, and compliance controls (e.g., GDPR, HIPAA). | Challenging to scale for big data – Struggles with massive datasets compared to more scalable architectures. |

Pros and cons of data lakehouses

| Pros ✅ | Cons ❌ |
| --- | --- |
| Supports all data types – Can handle structured, semi-structured, and unstructured data in a unified system. | Query performance can be slower – While optimized for large-scale analytics, it may require additional tuning for structured data queries. |
| Flexible schema-on-read and schema-on-write – Supports raw data ingestion for ML workloads while enabling structured storage for BI. | Requires more governance effort – Since data is not always pre-structured, enforcing data quality and access control is more complex. |
| Cost-effective storage – Uses cloud object storage (Amazon S3, Azure Data Lake) for affordable, scalable storage. | Steeper learning curve – Requires familiarity with modern data tools like Apache Iceberg, Delta Lake, and Hudi. |
| Optimized for AI and ML workloads – Seamlessly integrates with TensorFlow, PyTorch, Databricks ML, and real-time streaming frameworks. | Data consistency challenges – Achieving ACID compliance across vast, distributed datasets requires additional configurations. |
| Real-time data processing – Supports streaming data ingestion from IoT devices, logs, and real-time event sources. | Less mature than data warehouses – Traditional warehouses have a longer history of proven reliability for BI and financial reporting. |

When to Use a Data Warehouse

Data warehouses are best suited to structured data, business intelligence, and regulatory compliance. A data warehouse is the right choice if you rely on highly organized, fast, and consistent analytics.

Structured data analytics

- Ideal for clean, structured datasets with well-defined schema requirements.

- Use when consistency and performance are critical for analytics and reporting.

Example: A retailer uses a data warehouse to analyze structured sales data from its network of stores. This helps it track inventory levels, identify best-selling products, and optimize restocking processes.

Business intelligence (BI) reporting

- Best for generating dashboards and reports for decision-makers.

- Supports tools like Power BI and Tableau with optimized query performance.

Example: A financial services firm creating quarterly earnings reports for stakeholders.

Regulatory compliance

- Designed for industries with strict data accuracy and audit requirements.

- Provides reliable storage for financial records, healthcare data, and compliance reporting.

Example: A financial institution uses a data warehouse to store and analyze transactional data, ensuring compliance with regulations like Basel III and GDPR. This centralized approach helps manage audit trails and prevent fraud.

Historical data analysis

- Use for long-term trend analysis and strategic decision-making.

- Ideal for industries such as manufacturing or energy that need multi-year data insights.

Example: An energy company analyzing historical power usage to optimize production.

When to Use a Data Lakehouse

A data lakehouse is ideal when you need a scalable, flexible system that can handle structured, semi-structured, and unstructured data while supporting AI, machine learning, and real-time analytics.

Unified storage for diverse data

- Best for combining structured, semi-structured, and unstructured data into a single platform.

- Reduces silos and supports dynamic data access.

Example: A streaming service storing video content, user activity logs, and metadata.

Machine learning and AI workflows

- Perfect for raw data exploration, model training, and experimentation.

- Provides schema-on-read flexibility for diverse datasets.

Example: A ride-sharing company uses a data lakehouse to process raw trip data, driver ratings, and GPS logs. This data powers machine learning models for route optimization, dynamic pricing, and fraud detection.

Real-time data streaming

- Use for applications requiring near-instant data ingestion and processing.

- Supports dynamic use cases like fraud detection and IoT analytics.

Example: IoT-enabled vehicles stream real-time sensor data to a lakehouse architecture. This allows a company to monitor vehicle performance, detect anomalies, and roll out over-the-air software updates.

Cost-effective big data storage

- Reduces expenses by storing raw data without extensive pre-processing.

- Scales efficiently for organizations generating vast amounts of data.

Example: A social media company uses a data lakehouse to store and process vast amounts of raw user-generated content, such as text, images, and videos. This setup enables it to perform sentiment analysis, detect trending topics, and optimize ad targeting.

Hybrid Solutions: Combining Data Warehouses and Data Lakehouses

While data warehouses and lakehouses serve different purposes, many organizations combine architectures to balance performance, cost, and flexibility.

A hybrid approach enables you to store structured data in a warehouse for fast analytics while leveraging a lakehouse for big data, AI, and machine learning.

A hybrid approach follows a two-tiered strategy:

- Raw and semi-structured data in the data lakehouse (flexible, scalable, cost-effective)

  - Stores diverse data (structured, semi-structured, unstructured) in cloud object storage (Amazon S3, Azure Data Lake, Google Cloud Storage).

  - Uses schema-on-read to provide flexibility for data scientists and AI/ML teams.

  - Supports real-time data ingestion from IoT devices, event logs, and streaming platforms.

- Structured and cleaned data in the data warehouse (optimized for fast analytics and BI)

  - Data is filtered, transformed, and structured before being stored in a warehouse (Snowflake, Redshift, BigQuery, Synapse).

  - Uses schema-on-write to enforce data consistency and optimize query performance.

  - Provides fast access to business intelligence, dashboards, and operational reports.
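
The two-tiered flow above can be sketched end to end with the standard library. In this simplified example, a temporary directory plays the role of cloud object storage and an in-memory SQLite database plays the role of the warehouse. The file name, the `orders` table, and the `curate` helper are all hypothetical.

```python
import json
import sqlite3
import tempfile
from pathlib import Path

# Tier 1: a local temp directory stands in for cloud object storage.
lake_dir = Path(tempfile.mkdtemp())
raw_events = [
    {"order_id": 1, "store": "Berlin", "amount": "20.00", "note": "gift"},
    {"order_id": 2, "store": "Madrid", "amount": "oops"},  # malformed amount
    {"order_id": 3, "store": "Berlin", "amount": "5.50"},
]
raw_file = lake_dir / "orders.jsonl"
raw_file.write_text("\n".join(json.dumps(event) for event in raw_events))

# Tier 2: in-memory SQLite stands in for the warehouse.
warehouse = sqlite3.connect(":memory:")
warehouse.execute(
    "CREATE TABLE orders ("
    "order_id INTEGER PRIMARY KEY, store TEXT NOT NULL, amount REAL NOT NULL)"
)


def curate(path):
    """Yield only rows that fit the warehouse schema; raw data stays in the lake."""
    for line in path.read_text().splitlines():
        rec = json.loads(line)
        try:
            yield (int(rec["order_id"]), str(rec["store"]), float(rec["amount"]))
        except (KeyError, ValueError):
            continue  # skipped here, but still available in the raw file


with warehouse:
    warehouse.executemany("INSERT INTO orders VALUES (?, ?, ?)", curate(raw_file))

totals = warehouse.execute(
    "SELECT store, SUM(amount) FROM orders GROUP BY store ORDER BY store"
).fetchall()
print(totals)
```

All three raw records remain in the lake tier for data science work, while only the two records that pass validation reach the warehouse tier used for reporting.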

A hybrid data architecture is beneficial when:

- You need high-speed BI reporting and flexible data storage for ML/AI workloads.

- Your company handles structured and unstructured data, requiring schema-on-write and schema-on-read capabilities.

- You want to optimize costs, using a warehouse for structured, high-value analytics and a lakehouse for cost-effective raw data storage.

- You need real-time data ingestion and processing while maintaining governed historical records.

Conclusion

This guide explored the key differences between data warehouses and data lakehouses, their strengths, challenges, and use cases, and how organizations often combine both architectures for a hybrid approach.

Understanding these concepts is essential for building efficient, future-proof data systems as data architectures evolve. To dive deeper into these topics, check out these courses:

- Data Warehousing Concepts – A foundational guide to data warehouses, their components, and their role in analytics.

- Databricks Concepts – Learn how Databricks enables data lakehouse architecture for scalable data processing and machine learning.

FAQs

How do I migrate from a data warehouse to a data lakehouse?

Migrating involves:

- Assessing data – Identify structured and unstructured sources.

- Choosing a platform – Platforms like Databricks or Snowflake, and open table formats like Apache Iceberg, support lakehouse architectures.

- Building ETL pipelines – Use Apache Spark for ingestion and transformation, or dbt for SQL-based transformation.

- Optimizing performance – Implement indexing, caching, and partitioning strategies.
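
As one illustration of a partitioning strategy, the sketch below writes records into Hive-style `key=value` directories and then reads back a single partition. The `event_date` partition key, the file name, and both helper functions are assumptions for this example. Table formats such as Delta Lake and Iceberg manage partitioning for you, so treat this only as a picture of the underlying idea.

```python
import json
import tempfile
from pathlib import Path

root = Path(tempfile.mkdtemp())  # stands in for an object-store bucket


def write_partitioned(records):
    """Append each record under a Hive-style event_date=YYYY-MM-DD directory."""
    for rec in records:
        part_dir = root / f"event_date={rec['event_date']}"
        part_dir.mkdir(parents=True, exist_ok=True)
        with open(part_dir / "part-0000.jsonl", "a") as f:
            f.write(json.dumps(rec) + "\n")


def read_partition(event_date):
    """Read only the matching partition directory (partition pruning)."""
    path = root / f"event_date={event_date}" / "part-0000.jsonl"
    if not path.exists():
        return []
    return [json.loads(line) for line in path.read_text().splitlines()]


write_partitioned([
    {"event_date": "2024-01-01", "user": "a1", "amount": 10},
    {"event_date": "2024-01-02", "user": "b2", "amount": 20},
    {"event_date": "2024-01-02", "user": "c3", "amount": 30},
])

jan2 = read_partition("2024-01-02")
partitions = sorted(p.name for p in root.iterdir())
print(len(jan2), partitions)
```

A query that filters on the partition key touches only one directory instead of scanning every file, which is where most of the performance gain comes from.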

How does governance work in a data lakehouse compared to a data warehouse?

Data warehouses have centralized governance, with role-based access control (RBAC) and predefined schemas.

Data lakehouses require:

- Fine-grained access controls (e.g., AWS Lake Formation, Unity Catalog).

- Metadata management to track datasets across storage layers.

- Data quality monitoring for consistency in schema-on-read environments.
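
To show what fine-grained access control means in practice, here is a toy column-level policy in Python. The roles, column names, and `apply_policy` function are hypothetical. Catalog services such as those named above express these rules declaratively and enforce them at query time, so this sketch only mimics the effect.

```python
# Hypothetical column-level policy for a "customers" dataset.
COLUMN_POLICY = {
    "analyst": {"customer_id", "country", "lifetime_value"},
    "support": {"customer_id", "email"},
}


def apply_policy(rows, role):
    """Return rows with only the columns the role may read (none for unknown roles)."""
    allowed = COLUMN_POLICY.get(role, set())
    return [{col: val for col, val in row.items() if col in allowed} for row in rows]


customers = [
    {"customer_id": 1, "email": "ana@example.com", "country": "ES", "lifetime_value": 310.0},
    {"customer_id": 2, "email": "li@example.com", "country": "SG", "lifetime_value": 95.5},
]

analyst_view = apply_policy(customers, "analyst")
support_view = apply_policy(customers, "support")
print(analyst_view)
print(support_view)
```

Defaulting unknown roles to an empty column set is a deliberate deny-by-default choice, which is generally the safer starting point for governance rules.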

What are the biggest challenges when adopting a data lakehouse?

- Query performance tuning – Requires optimization techniques like indexing and caching.

- Data consistency issues – Needs ACID transaction support (e.g., Delta Lake, Apache Iceberg).

- Learning curve – Teams must adopt new tools beyond traditional SQL-based systems.

What role do AI and machine learning play in the lakehouse model?

Lakehouses are ideal for AI/ML because they:

- Store structured, semi-structured, and unstructured data for training models.

- Enable real-time feature engineering with Databricks ML and Spark.

- Support on-demand model training without requiring data exports.

Unlike warehouses, lakehouses let data scientists work with raw data directly.

What’s the future of data lakehouses and warehouses?

The industry is shifting toward hybrid and unified architectures.

- Cloud platforms are integrating lakehouse features into warehouse solutions.

- Serverless data warehousing is improving scalability and cost efficiency.

- Data mesh architectures are decentralizing data ownership across teams.

Understanding these trends will help data professionals stay ahead.

Sai is a software engineer with expertise in Python, Java, cloud platforms, and big data analytics and a Master’s in Software Engineering from UMBC. Experienced in AI models, scalable IoT systems, and data-driven projects across industries.