Track

Retrieval-augmented generation (RAG) combines large language models (LLMs) with retrieval systems to bring in relevant external information during the text generation process.

Over the past few years, RAG has gained significant traction and become a common topic in interview questions for roles such as AI engineer, machine learning engineer, prompt engineer, and data scientist.

This article aims to prepare you for RAG-related interview questions by providing a comprehensive overview of 30 key questions, ranging from foundational concepts to more advanced topics.

Even if you’re not preparing for an interview soon, this article might be a good opportunity to test your RAG knowledge.

Become an ML Scientist

Basic RAG Interview Questions

Let’s begin with a series of fundamental interview questions about RAG.

Explain the main parts of a RAG system and how they work.

A RAG (retrieval-augmented generation) system has two main components: the retriever and the generator.

The retriever searches for and collects relevant information from external sources, like databases, documents, or websites.

The generator, usually an advanced language model, uses this information to create clear and accurate text.

The retriever makes sure the system gets the most up-to-date information, while the generator combines this with its own knowledge to produce better answers.

Together, they provide more accurate responses than the generator could on its own.

What are the main benefits of using RAG instead of just relying on an LLM’s internal knowledge?

If you rely only on an LLM’s built-in knowledge, the system is limited to what it was trained on, which could be outdated or lacking detail.

RAG systems offer a big advantage by pulling in fresh information from external sources, resulting in more accurate and timely responses.

This approach also reduces "hallucinations"—errors where the model makes up facts—because the answers are based on real data. RAG is especially helpful for specific fields like law, medicine, or tech, where up-to-date, specialized knowledge is needed.

What are some common applications of RAG?

RAG is used in a variety of real-world AI applications across different domains:

-

Question-answering and support systems: RAG powers customer support chatbots and knowledge base assistants by retrieving up-to-date documentation or FAQs and generating accurate answers for users. This ensures customer queries are resolved with the latest information (for example, pulling current policy info or product details).

-

Conversational agents: Many chatbots and virtual assistants use RAG to provide factual, context-aware responses. By fetching relevant facts on the fly, a conversational agent (like a healthcare or finance chatbot) can give informed answers grounded in credible sources.

-

Content generation and summarization: RAG helps in generating or summarizing content with factual accuracy. For instance, it can retrieve parts of news articles or research papers and then produce summaries or reports that are both coherent and fact-checked against source data.

-

Domain-specific research: In specialized fields like law or medicine, RAG systems assist by pulling from domain-specific databases (case law, medical journals, etc.) to answer complex queries. This way, the model’s output is grounded in reliable, up-to-date domain knowledge, which is important for professional use cases.

What types of external knowledge sources can RAG use?

RAG systems can gather information from both structured and unstructured external sources:

- Structured sources include databases, APIs, or knowledge graphs, where data is organized and easy to search.

- Unstructured sources consist of large collections of text, such as documents, websites, or archives, where the information needs to be processed using natural language understanding.

This flexibility allows RAG systems to be tailored to different fields, such as legal or medical use, by pulling from case law databases, research journals, or clinical trial data.

Does prompt engineering matter in RAG?

Prompt engineering helps language models provide high-quality responses using the retrieved information. How you design a prompt can affect the relevance and clarity of the output.

- Specific system prompt templates help guide the model. For example, instead of having a simple out-of-the-box system prompt like “Answer the question,” you might have, “Answer the question based only on the context provided.” This gives the model explicit instructions to only use the context provided to answer the question, which can reduce the probability of hallucinations.

- Few-shot prompting involves giving the model a few example responses before asking it to generate its own, so it knows the type of response you're looking for.

- Chain-of-thought prompting helps break down complex questions by encouraging the model to explain its reasoning step-by-step before answering.

How does the retriever work in a RAG system? What are common retrieval methods?

In a RAG system, the retriever gathers relevant information from external sources for the generator to use. There are different ways to retrieve information.

One method is sparse retrieval, which matches keywords (e.g., TF-IDF or BM25). This is simple but may not capture the deeper meaning behind the words.

Another approach is dense retrieval, which uses neural embeddings to understand the meaning of documents and queries. Methods like BERT or Dense Passage Retrieval (DPR) represent documents as vectors in a shared space, making retrieval more accurate.

The choice between these methods can greatly affect how well the RAG system works.

What are the challenges of combining retrieved information with LLM generation?

Combining retrieved information with an LLM’s generation presents some challenges. For instance, the retrieved data must be highly relevant to the query as irrelevant data can confuse the model and reduce the quality of the response.

Additionally, if the retrieved information conflicts with the model’s internal knowledge, it can create confusing or inaccurate answers. As such, resolving these conflicts without confusing the user is crucial.

Finally, the style and format of retrieved data might not always match the model's usual writing or formatting, making it hard for the model to integrate the information smoothly.

What’s the role of a vector database in RAG?

In a RAG system, a vector database helps manage and store dense embeddings of text. These embeddings are numerical representations that capture the meaning of words and phrases, created by models like BERT or OpenAI.

When a query is made, its embedding is compared to the stored ones in the database to find similar documents. This makes it faster and more accurate to retrieve the right information. This process helps the system quickly locate and pull up the most relevant information, improving both the speed and accuracy of retrieval.

What are some common ways to evaluate RAG systems?

To evaluate a RAG system, you need to look at both the retrieval and generation components.

- For the retriever, you assess how accurate and relevant the retrieved documents are. Metrics like precision (how many retrieved documents are relevant) and recall (how many of the total relevant documents were found) can be used here.

- For the generator, metrics like BLEU and ROUGE can be used to compare the generated text to human-written examples to gauge quality.

For downstream tasks like question-answering, metrics like F1 score, precision, and recall can also be used to evaluate the overall RAG system.

How do you handle ambiguous or incomplete queries in a RAG system to ensure relevant results?

Handling ambiguous or incomplete queries in a RAG system requires strategies to ensure that relevant and accurate information is retrieved despite the lack of clarity in the user’s input.

One approach is to implement query refinement techniques, where the system automatically suggests clarifications or reformulates the ambiguous query into a more precise one based on known patterns or previous interactions. This can involve asking follow-up questions or providing the user with multiple options to narrow down their intent.

Another method is to retrieve a diverse set of documents that cover multiple possible interpretations of the query. By retrieving a range of results, the system ensures that even if the query is vague, some relevant information is likely to be included.

Lastly, we can use natural language understanding (NLU) models to infer user intent from incomplete queries and refine the retrieval process.

Intermediate RAG Interview Questions

Now that we’ve covered a few basic questions, it’s time to move on to intermediate RAG interview questions.

How do you choose the right retriever for a RAG application?

Choosing the right retriever depends on the type of data you're working with, the nature of the queries, and how much computing power you have.

For complex queries that need a deep understanding of the meaning behind words, dense retrieval methods like BERT or DPR are better. These methods capture context and are ideal for tasks like customer support or research, where understanding the underlying meanings matter.

If the task is simpler and revolves around keyword matching, or if you have limited computational resources, sparse retrieval methods such as BM25 or TF-IDF might be more suitable. These methods are quicker and easier to set up but might not find documents that don’t match exact keywords.

The main trade-off between dense and sparse retrieval methods is accuracy versus computational cost. Sometimes, combining both approaches in a hybrid retrieval system can help balance accuracy with computational efficiency. This way, you get the benefits of both dense and sparse methods depending on your needs.

Describe what a hybrid search is.

Hybrid search combines the strengths of both dense and sparse retrieval methods.

For instance, you can start with a sparse method like BM25 to quickly find documents based on keywords. Then, a dense method like BERT re-ranks those documents by understanding their context and meaning. This gives you the speed of sparse search with the accuracy of dense methods, which is great for complex queries and large datasets.

Do you need a vector database to implement RAG? If not, what are the alternatives?

A vector database is great for managing dense embeddings, but it’s not always necessary. Alternatives include:

- Traditional databases: If you’re using sparse methods or structured data, regular relational or NoSQL databases can be enough. They work well for keyword searches. Databases like MongoDB or Elasticsearch are good for handling unstructured data and full-text searches, but they lack deep semantic search.

- Inverted indices: These map keywords to documents for fast searches, but they don’t capture the meaning behind the words.

- File systems: For smaller systems, organized documents stored in files might work, but they have limited search capabilities.

The right choice depends on your specific needs, such as the scale of your data and whether you need deep semantic understanding.

How can you ensure that the retrieved information is relevant and accurate?

To make sure the retrieved information is relevant and accurate, you can use several approaches:

- Curate high quality knowledge bases: Make sure the information in your database is reliable and fits the needs of your application.

- Fine-tune retriever: Adjust the retriever model to better match your specific tasks and requirements. This helps improve how relevant the results are.

- Use re-ranking: After retrieving initial results, sort them based on detailed relevance to get the most accurate information. This step involves checking how well the results match the query in more depth.

- Implement feedback loops: Get input from users or models about the usefulness of the results. This feedback can help refine and improve the retriever over time. An example of this is the Corrective RAG (CRAG).

- Regular evaluation: Continuously measure the system’s performance using metrics like precision, recall, or F1 score to keep improving accuracy and relevance.

What are some techniques for handling long documents or large knowledge bases in RAG?

When dealing with long documents or large knowledge bases, here are some useful techniques:

- Chunking: Break long documents into smaller, more manageable sections. This makes it easier to search through and retrieve relevant parts without having to process the entire document.

- Summarization: Create condensed versions of long documents. This allows the system to work with shorter summaries rather than the full text, speeding up retrieval.

- Hierarchical retrieval: Use a two-step approach where you first search for broad categories of information and then narrow down to specific details. This helps to manage large amounts of data more effectively.

- Memory-efficient embeddings: Use compact vector representations to reduce the amount of memory and computational power needed. Optimizing the size of embeddings can make it easier to handle large datasets.

- Indexing and sharding: Split the knowledge base into smaller parts and store them across multiple systems. This enables parallel processing and faster retrieval, especially in large-scale systems.

How can you optimize the performance of a RAG system in terms of both accuracy and efficiency?

To get the best performance from a RAG system in terms of accuracy and efficiency, you can use several strategies:

- Fine-tune models: Adjust the retriever and generator models using data specific to your task. This helps them perform better on specialized queries.

- Efficient indexing: Organize your knowledge base using quick data structures like inverted indices or hashing. This speeds up the process of finding relevant information.

- Use caching: Store frequently accessed data so it doesn’t have to be retrieved repeatedly. This improves efficiency and speeds up responses.

- Reduce retrieval steps: Minimize the number of times you search for information. Improve the retriever’s precision or use re-ranking to ensure only the best results are passed to the generator, cutting down on unnecessary processing.

- Hybrid search: Combine sparse and dense retrieval methods. For example, use sparse retrieval to quickly find a broad set of relevant documents, then apply dense retrieval to refine and rank these results more accurately.

How does a RAG system maintain context in a multi-turn conversation?

In multi-turn conversations (e.g., a chatbot dialog), a RAG system needs to carry over relevant context from earlier turns to answer later questions correctly. To achieve this, RAG can incorporate the conversation history into each new query:

-

Query refinement: The system can automatically rewrite or augment the user’s question using information from previous exchanges. By adding details from prior turns, the retriever gets a more context-rich query, so it can fetch documents relevant to the ongoing discussion.

-

Including conversation history: Another approach is to provide the model with a summary or list of the previous dialogue turns as part of its input context. Many RAG architectures allow passing a sequence of messages (user and assistant history) along with the new question. This way, when the retriever searches for information, it considers the established context, and the generator can use the past context to maintain a coherent conversation.

By using these methods, the RAG system keeps track of “who said what” and what has already been resolved. This prevents it from forgetting details or repeating itself.

Advanced RAG Interview Questions

So far, we’ve covered basic and intermediate RAG interview questions, and now we will tackle more advanced concepts like chunking techniques or contextualization.

What are the different chunking techniques for breaking down documents, and what are their pros and cons?

There are several ways to break down documents for retrieval and processing:

- Fixed-length: Splitting documents into fixed-size chunks. It’s easy to do, but sometimes chunks may not align with logical breaks, so you could split important info or include irrelevant content.

- Sentence-based: Breaking documents into sentences keeps sentences intact, which is great for detailed analysis. However, it may lead to too many chunks or lose context when sentences are too short to capture full ideas.

- Paragraph-based: Dividing by paragraphs helps keep the context intact, but paragraphs may be too long, making retrieval and processing less efficient.

- Semantic chunking: Chunks are created based on meaning, like sections or topics. This keeps the context clear but is harder to implement since it needs advanced text analysis.

- Sliding window: Chunks overlap by sliding over the text. This ensures important info isn't missed but can be computationally expensive and may result in repeated information.

What are the trade-offs between chunking documents into larger versus smaller chunks?

Smaller chunks, like sentences or short paragraphs, help avoid the dilution of important contextual information when compressed into a single vector. However, this can lead to losing long-range dependencies across chunks, making it difficult for models to understand references that span across chunks.

Larger chunks keep more context, which allows for richer contextual information but can be less focused and information might get lost when trying to encode all the information into a single vector.

What is late chunking and how is it different from traditional chunking methods?

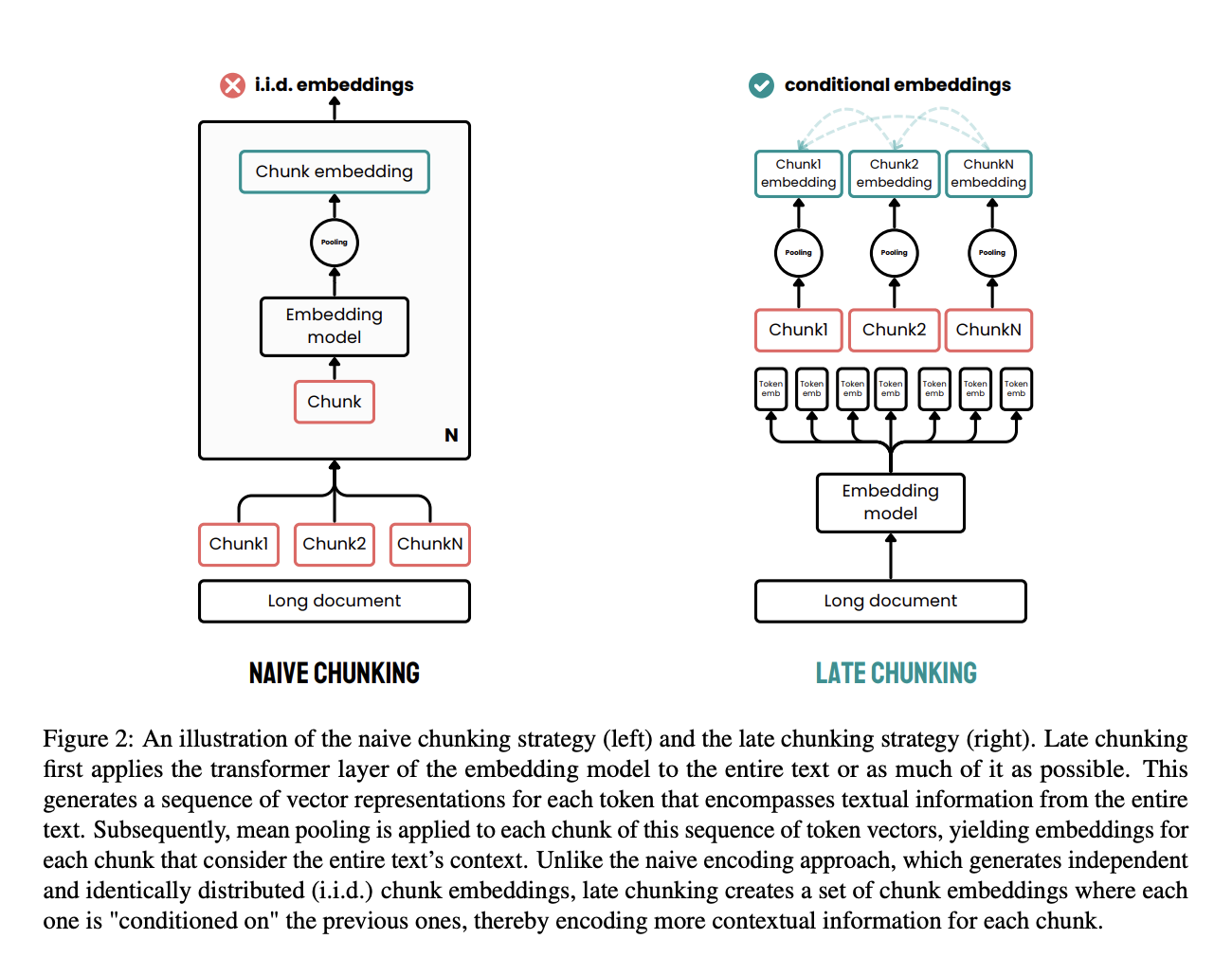

Late chunking is an effective approach designed to address the limitations of traditional chunking methods in document processing.

In traditional methods, documents are first split into chunks, such as sentences or paragraphs, before applying an embedding model. These chunks are then individually encoded into vectors, often using mean pooling to create a single embedding for each chunk. This approach can lead to a loss of long-distance contextual dependencies because the embeddings are generated independently, without considering the full document context.

Late chunking takes a different approach. It first applies the transformer layer of the embedding model to the entire document or as much of it as possible, creating a sequence of vector representations for each token. This method captures the full context of the text in these token-level embeddings.

Afterward, mean pooling is applied to the chunks of this sequence of token vectors, producing embeddings for each chunk that are informed by the entire document's context. Unlike the traditional method, late chunking generates chunk embeddings that are conditioned on each other, preserving more contextual information and resolving long-range dependencies.

By applying chunking later in the process, it ensures that each chunk's embedding benefits from the rich context provided by the entire document, rather than being isolated. This approach addresses the problem of lost context and improves the quality of the embeddings used for retrieval and generation tasks.

Source: Günther et al., 2024

Explain the concept of "contextualization" in RAG and its impact on performance.

Contextualization in RAG means making sure the information retrieved is relevant to the query. By aligning the retrieved data with the query, the system produces better, more relevant answers.

This reduces the chances of incorrect or irrelevant results and ensures the output fits the user's needs. One approach is to use an LLM to check if the retrieved documents are relevant before sending them to the generator model, as demonstrated by Corrective RAG (CRAG).

How can you address potential biases in the retrieved information or in the LLM's generation?

First, it's essential to build the knowledge base in a way that filters out biased content, making sure the information is as objective as possible. You can also retrain the retrieval system to prioritize balanced, unbiased sources.

Another important step could be to adopt an agent specifically to check for potential biases and ensure that the model’s output remains objective.

Discuss the challenges of handling dynamic or evolving knowledge bases in RAG.

One major issue is keeping the indexed data up-to-date with the latest information, which requires a reliable updating mechanism. As such, version control becomes crucial to manage different iterations of information and ensure consistency.

Additionally, the model needs to be able to adapt to new information in real-time without having to retrain frequently, which can be resource-intensive. These challenges require sophisticated solutions to ensure that the system remains accurate and relevant as the knowledge base evolves.

What is CAG, and how does it differ from traditional RAG? When would you prefer CAG over RAG in production?

CAG (Cache-Augmented Generation) is an evolution of RAG where retrieved documents are summarized or compressed before being passed to the LLM. This improves relevance, reduces token usage, and helps fit more information into the model’s context window.

The key difference is that in CAG, the retrieved content goes through an intermediate step, like a summarizer or context refiner, before being fed to the generator. In contrast, traditional RAG passes raw documents directly into the prompt.

CAG is especially useful when:

-

You’re working with static datasets (e.g., product catalogs, academic papers) that can be pre-compressed and cached.

-

Token efficiency is critical (e.g., cost-sensitive APIs or mobile/on-device inference).

-

The retrieved documents are long or noisy and need distillation.

RAG, on the other hand, is preferred when:

-

The underlying data is dynamic or frequently updated (e.g., real-time support tickets, live documentation).

-

You want to incorporate the freshest knowledge at query time, without reprocessing the entire knowledge base.

In short, use CAG for stable domains where you can optimize the context ahead of time, and RAG for dynamic scenarios where freshness and on-demand retrieval matter more.

Check out this article on RAG versus CAG for a more detailed comparison.

What are some advanced RAG systems?

There are many advanced RAG systems.

One such system is the Adaptive RAG, where the system not only retrieves information but also adjusts its approach in real-time based on the query. The adaptive RAG can decide to perform no retrieval, single-shot RAG, or iterative RAG. This dynamic behavior makes the RAG system more robust and relevant to the user's request.

Another advanced RAG system is Agentic RAG, which introduces retrieval agents—tools that decide whether or not to pull information from a source. By giving a language model this capability, it can determine on its own if it needs extra information, making the process smoother.

Corrective RAG (CRAG) is also becoming popular. In this approach, the system reviews the documents it retrieves, checking for relevancy. Only documents that are classified as relevant would be fed to the generator. This self-correction step helps ensure accurate relevant information is used. To learn more, you can read this tutorial on Corrective RAG (CRAG) Implementation With LangGraph.

Self-RAG takes this a step further by evaluating not just the retrieved documents but also the final responses generated, making sure both are aligned with the user’s query. This leads to more reliable and consistent results.

How can you reduce latency in a real-time RAG system without sacrificing accuracy?

One effective approach is pre-fetching relevant and commonly requested information so that it's ready to go when needed. Additionally, refining your indexing and query algorithms can make a big difference in how quickly data is retrieved and processed.

What are some limitations or challenges of RAG systems?

While RAG is powerful, it has several limitations and challenges:

-

Dependency on retrieved data quality: A RAG system is only as good as the information it retrieves. If the retriever pulls in irrelevant or incorrect documents, the generator’s answer will suffer. Ensuring high-quality, trusted data sources (and fine-tuning the retriever) is an ongoing challenge to avoid garbage-in, garbage-out issues.

-

Increased complexity and latency: RAG introduces an extra retrieval step on top of generation, which makes the whole system more complex and computationally heavy than a standalone LLM. Searching a large knowledge base can add latency and require significant compute resources, so RAG systems must balance accuracy with efficiency.

-

Need for maintenance of the knowledge base: Unlike static LLMs, RAG depends on an external knowledge repository that needs regular updating and curation. Organizations must continuously ingest new data, remove outdated info, and manage indices. Without reliable and up-to-date data sources, a RAG system can quickly become less effective or even provide outdated answers.

-

Integration and tuning difficulty: Combining retrieval and generation means more components to tune and monitor (the vector database, retriever model, and the LLM). Troubleshooting errors can be harder because issues might stem from the retrieval side or the generation side. This complexity can increase development and maintenance effort compared to using an LLM alone.

It’s also worth noting that if a domain’s data is largely static and fits in the model’s training, a fine-tuned LLM might be good enough in place of RAG. However, fine-tuning lacks RAG’s ability to incorporate fresh information on the fly and can be costlier to retrain for each knowledge update.

RAG Interview Questions for AI Engineers

Now, let’s address a few specific questions targeted at those interviewing for AI Engineer positions.

Earn a Top AI Certification

How would you evaluate and improve the performance of a RAG system in a production environment?

First, you will need to track user feedback to measure how well the system is performing and whether it’s relevant.

You will also want to monitor latency to ensure responses are timely and evaluate the quality of both the retrieved documents and generated outputs. Key metrics like response accuracy, user satisfaction, and system throughput are important.

To increase performance, you might retrain parts of the system with updated data or tweak parameters. You could also refine retrieval algorithms to improve relevance and efficiency, and regularly update knowledge sources to keep them current.

Continuous performance reviews and A/B testing can provide insights for ongoing improvements.

How do you ensure the reliability and robustness of a RAG system in production, particularly in the face of potential failures or unexpected inputs?

Building a production-ready RAG system requires handling various challenges. Potential solutions might include:

- Redundancy and failover: Implementing redundant components or backup systems to ensure continuous operation in case of failures.

- Error handling and logging: Implementing error handling mechanisms to catch and log errors, allowing for quick diagnosis and troubleshooting.

- Input validation and sanitization: Validating and sanitizing user inputs to prevent potential vulnerabilities and attacks like prompt injections.

- Monitoring and alerting: Setting up monitoring and alerting systems to detect and address performance issues or potential threats.

How would you design a RAG system for a specific task (e.g., question answering, summarization)?

For a question answering system, you can start by picking a retriever that can efficiently find and fetch relevant documents based on the user’s query. This could be something traditional, like keyword searches, or more advanced, such as using dense embeddings for better retrieval. Next, you need to choose or fine-tune a generator that can create accurate and coherent answers using the documents retrieved.

When it comes to summarization, the retriever’s job is to gather comprehensive content related to the document or topic at hand. The generator, on the other hand, should be able to distill this content into concise, meaningful summaries.

Prompt engineering is crucial here. Depending on the downstream task, we need to create prompts that guide the model towards incorporating the retrieved information to produce the relevant output.

Can you explain the technical details of how you would fine-tune an LLM for a RAG task?

It starts with gathering and preparing data specific to the task. This could be annotated examples of question-answer pairs or summarization datasets.

You might then use techniques like retrieval-augmented language modeling (REALM), which helps the model better integrate the documents it retrieves into its responses. This often means tweaking the model’s architecture or training methods to improve its handling of context from retrieved documents.

You could also use Retrieval-Augmented Fine-Tuning (RAFT), which blends the strengths of RAG with fine-tuning, letting the model learn both domain-specific knowledge and how to effectively retrieve and use external information.

How do you handle out-of-date or irrelevant information in a RAG system, especially in fast-changing domains?

One approach is to implement regular updates to the knowledge base or document index, so that new information is incorporated as it becomes available. This can involve setting up automated workflows that periodically scrape or ingest updated content, ensuring that the retriever is always working with the latest data.

Additionally, metadata tagging can be used to flag outdated information, allowing the system to prioritize more recent and relevant documents during retrieval.

In fast-changing domains, it’s also important to integrate mechanisms that filter or re-rank search results based on their timeliness. For example, giving higher weight to more recent articles or documents during retrieval helps ensure that the generated responses are based on up-to-date sources.

Another technique is using feedback loops or human-in-the-loop systems where flagged inaccuracies can be corrected quickly, and the retriever can be adjusted to avoid retrieving obsolete information.

How do you balance retrieval relevance and diversity in a RAG system to ensure comprehensive responses?

Balancing relevance and diversity in a RAG system is all about providing accurate and well-rounded answers. Relevance makes sure the retrieved documents match the query closely, while diversity ensures the system doesn’t focus too narrowly on a single source or viewpoint.

One way to balance these is by using re-ranking strategies that prioritize both relevance and diversity. You can also enhance diversity by pulling documents from a range of sources or sections within the knowledge base.

Clustering similar results and selecting documents from different clusters can help, too.

Fine-tuning the retriever with a focus on both relevance and diversity can also ensure that the system retrieves a comprehensive set of documents.

How do you ensure that the generated output in a RAG system remains consistent with the retrieved information?

One key approach is through tight coupling between retrieval and generation via prompt engineering. Carefully designed prompts that explicitly instruct the language model to base its answers on the retrieved documents help ensure that the generation remains grounded in the data provided by the retriever.

Additionally, techniques like citation generation, where the model is asked to reference or justify its responses with the retrieved sources, can help maintain consistency.

Another approach is to apply post-generation checks or validation, where the output is compared against the retrieved documents to ensure alignment. This can be achieved using similarity metrics or employing smaller verification models that validate the factual consistency between the retrieved data and the generated text.

In some cases, iterative refinement methods can be used where the model first generates an output, then revisits the retrieved documents to check and refine its answer. Feedback loops and user corrections can also be leveraged to improve consistency over time, as the system learns from past inconsistencies and adjusts its retrieval and generation mechanisms accordingly.

How do you ensure data privacy and security in a RAG system, especially when dealing with sensitive information?

Ensuring privacy and security in RAG systems is critical when the retrieved data or user queries contain sensitive or personal information. Key strategies include:

-

Data redaction and anonymization: Before data ever enters the RAG pipeline, remove or mask personally identifiable information (PII) and other sensitive details. For example, documents can be scanned, and any PII can be redacted at ingestion. This zero-trust approach means even if data is retrieved, it won’t expose confidential info because it was sanitized beforehand.

-

Secure data storage and transfer: Use encryption for data at rest (in databases or vector stores) and in transit when the retriever communicates with the storage. Ensuring the vector database and knowledge sources are access-controlled (with proper authentication and authorization) prevents unauthorized access. Only trusted applications or services should be able to read from the knowledge base.

-

Role-based access controls: Implement strict access filters on retrieval results. You can tag chunks of knowledge with metadata about who is allowed to see them (e.g., admin vs. user) and make the RAG system enforce these rules during retrieval. For instance, a healthcare RAG assistant might allow doctors to retrieve patient-identifying info but never show that to other users.

-

Prompt filtering and output monitoring: Incorporate AI guardrails to prevent the LLM from outputting private data. Techniques like prompt injection defense, output scanning, or policies (such as OWASP guidelines for LLMs) can help catch and strip sensitive content from responses. Human-in-the-loop review can also be used for high-stakes domains.

-

Compliance and auditing: Ensure the RAG system complies with data protection regulations (GDPR, HIPAA, etc.) by design. This includes maintaining audit logs of what data was retrieved and when, obtaining proper user consent for using their data, and regularly auditing the knowledge sources for sensitive information.

By combining these measures, a RAG system can securely handle sensitive data. In an interview, you’d be expected to mention that protecting user data isn’t just an afterthought – it’s built into how the RAG pipeline ingests, stores, retrieves, and generates information.

Conclusion

This guide provided you with 30 key interview questions to help you prepare for discussions on RAG, ranging from basic concepts to advanced RAG systems.

If you want to learn more about RAG systems, I recommend these blogs:

Ryan is a lead data scientist specialising in building AI applications using LLMs. He is a PhD candidate in Natural Language Processing and Knowledge Graphs at Imperial College London, where he also completed his Master’s degree in Computer Science. Outside of data science, he writes a weekly Substack newsletter, The Limitless Playbook, where he shares one actionable idea from the world's top thinkers and occasionally writes about core AI concepts.