
As someone who’s supported data teams with MLOps platforms and helped design scalable infrastructures, I’ve seen firsthand how crucial data lakes are in today’s data stack.

Unlike traditional data warehouses, which require data to be clean and structured before storage, data lakes let you store data in its raw form, whether it is structured, semi-structured, or unstructured. This flexibility opens up a wide range of data-driven applications and use cases. A data lake lets you gather and store data from various sources in one place, where it can be analyzed further and used for AI.

In this article, I’ll explain what a data lake is, how it works, when to use it, and how it compares to more traditional solutions.

Understanding the Concept of a Data Lake

Before discussing the technical architecture or use cases, it’s essential to understand what a data lake is and what distinguishes it from more traditional storage systems.

This section defines the concept, breaks down its key characteristics, and compares it to the more structured world of data warehouses.

I recommend the Data Strategy course if you want to learn more about how a well-designed data strategy can help businesses.

Definition of a data lake

A data lake is a centralized repository that stores all your structured, semi-structured, and unstructured data at any scale.

You can ingest raw data from various sources and keep it in its original format until you need to process or analyze it.

Unlike databases or data warehouses, which typically require a predefined schema (schema-on-write), data lakes use a schema-on-read approach. This means the structure is applied only when data is accessed for analysis, giving teams flexibility in interpreting and transforming the data later.
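To make schema-on-read more concrete, here is a minimal Python sketch. It assumes the raw data is stored as JSON lines, and the two schemas (`BILLING_SCHEMA` and `GEO_SCHEMA`) as well as the field names are hypothetical, chosen only for illustration. The same raw records are interpreted in two different ways at read time, and nothing is enforced when the data is written.

```python
import json

# Raw events as they might sit in the lake: one JSON document per line,
# stored as-is with no schema enforced at write time.
RAW_LINES = [
    '{"user_id": "42", "amount": "19.99", "ts": "2024-01-05T10:00:00", "country": "DE"}',
    '{"user_id": "7", "amount": "5.00", "ts": "2024-01-05T10:05:00"}',
]

# Two hypothetical schemas: field name -> conversion applied at read time.
BILLING_SCHEMA = {"user_id": int, "amount": float}
GEO_SCHEMA = {"user_id": str, "country": str}


def read_with_schema(lines, schema):
    """Parse raw lines and apply the given schema only now, at read time."""
    rows = []
    for line in lines:
        record = json.loads(line)
        row = {}
        for field_name, cast in schema.items():
            value = record.get(field_name)
            # Missing fields become None instead of failing the whole read.
            row[field_name] = cast(value) if value is not None else None
        rows.append(row)
    return rows


billing_rows = read_with_schema(RAW_LINES, BILLING_SCHEMA)
geo_rows = read_with_schema(RAW_LINES, GEO_SCHEMA)
```

The billing view gets numeric types, while the geography view keeps IDs as strings and tolerates the missing `country` field. In a real lake, a query engine would typically do this work for you.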

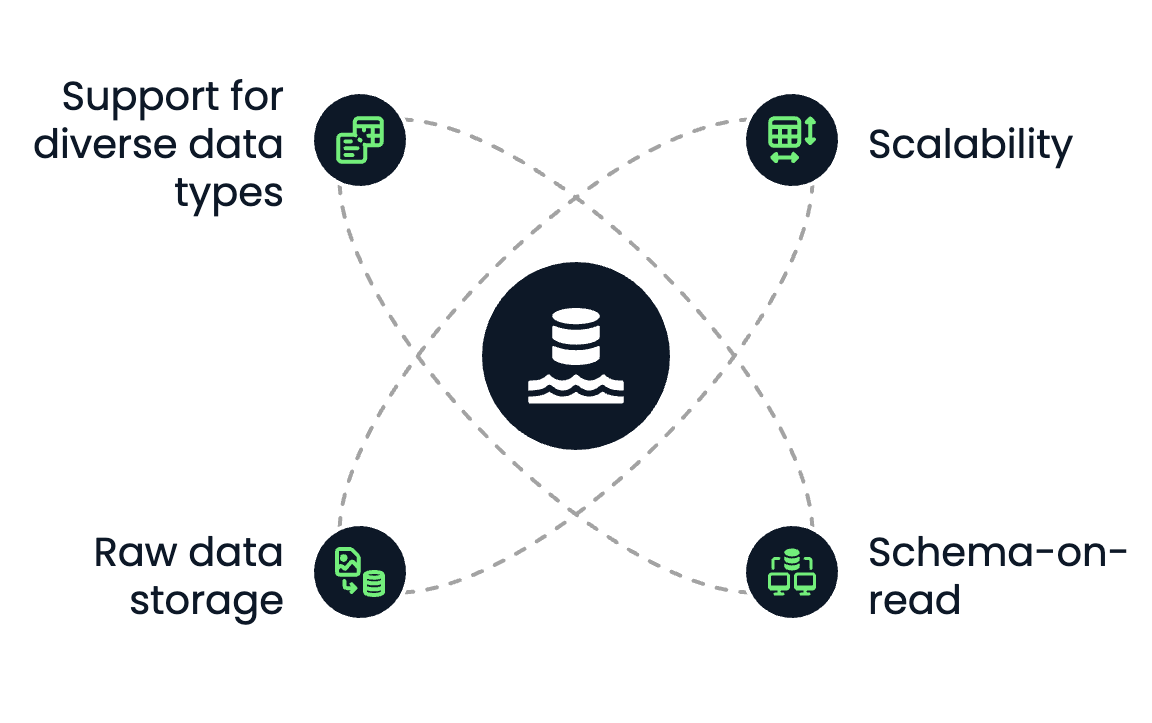

Core characteristics

A data lake comes with the following core characteristics:

- Scalability: Data lakes are built to scale horizontally. Whether you're storing terabytes or petabytes of data, cloud-based object storage solutions like Amazon S3 or Azure Data Lake Storage make it easy to grow without worrying about capacity.

- Schema-on-read: You don’t need to define the schema when storing the data. Instead, data scientists, analysts, or engineers can apply different schemas depending on the use case during retrieval or transformation.

- Raw data storage: Data lakes retain all data types in their native format, whether CSV files, JSON logs, audio files, videos, or sensor data.

- Support for diverse data types: You can store structured (e.g., SQL tables), semi-structured (e.g., XML, JSON), and unstructured data (e.g., images, PDFs, text) in one place.

Core characteristics of data lakes. Image by Author.

Comparison with data warehouses

Both data lakes and data warehouses are used to store and analyze data, yet they differ significantly in structure, performance, and use cases.

If you are new to data warehouses and want to learn more, I recommend the course Data Warehousing Concepts and the articles What Is a Data Warehouse and Data Warehouse Architecture: Trends, Tools, and Techniques.

Let’s see how they differ:

| Feature | Data Lake | Data Warehouse |
| --- | --- | --- |
| Data type | Structured, semi-structured, unstructured | Structured only |
| Storage format | Raw (file objects) | Processed (tables, rows, columns) |
| Schema | Schema-on-read | Schema-on-write |
| Cost | Low-cost storage | More expensive per GB |
| Use case | Machine learning, big data, and raw data storage | Business intelligence, structured analytics |
| Performance | Flexible, depends on the compute engine | Optimized for fast SQL queries |

In practice, many modern data strategies combine both: using data lakes as the foundation for raw data storage and data warehouses for structured reporting and business intelligence.

There is also a hybrid solution called a data lakehouse. The article What is a Data Lakehouse? Architecture, Technology & Use Cases explains it more fully.

Components of a Data Lake Architecture

A well-designed data lake architecture is essential to make raw data usable and accessible to downstream analytics, machine learning, and reporting.

This section breaks down the five key layers of a modern data lake, from ingestion to governance.

Data ingestion layer

The ingestion layer brings data from various sources into the data lake. These sources include internal systems (like databases or CRMs), external APIs, or IoT devices and logs.

There are three main ingestion modes:

- Batch ingestion: Loading data periodically (like nightly) in batches.

- Stream ingestion: Real-time data flows, such as clickstream or sensor data.

- Hybrid ingestion: A combination of both batch and stream, which is common in enterprise environments.

Standard ingestion tools include:

- Apache Kafka and AWS Kinesis for streaming

- Apache NiFi and Apache Flume for data flows and log collection, and AWS Glue for batch ETL

A flexible ingestion layer ensures that data arrives quickly and can handle a variety of formats and velocities.
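The difference between batch and stream ingestion can be sketched in a few lines of plain Python. This is a simplified illustration, not how Kafka or Glue work internally: the `raw/events/dt=.../part-0000.json` key layout, the function names, and the micro-batch size are all assumptions made for the example.

```python
from collections import defaultdict
from itertools import islice


def batch_ingest(records):
    """Batch mode: take a full extract and group it by event date,
    producing one hypothetical raw-zone object key per day."""
    grouped = defaultdict(list)
    for record in records:
        day = record["ts"][:10]  # "YYYY-MM-DD" prefix of an ISO timestamp
        grouped[f"raw/events/dt={day}/part-0000.json"].append(record)
    return dict(grouped)


def stream_ingest(event_iter, max_batch=2):
    """Stream mode: consume events as they arrive and flush small micro-batches."""
    iterator = iter(event_iter)
    while True:
        chunk = list(islice(iterator, max_batch))
        if not chunk:
            return
        yield chunk


events = [
    {"ts": "2024-01-05T23:59:00", "value": 1},
    {"ts": "2024-01-06T00:01:00", "value": 2},
    {"ts": "2024-01-06T00:02:00", "value": 3},
]

batches = batch_ingest(events)
micro_batches = list(stream_ingest(events, max_batch=2))
```

A hybrid setup would run both paths side by side, for example streaming recent events while a nightly batch job backfills or corrects the same partitions.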

Storage layer

When data is ingested, it is stored in the raw zone of the data lake. The storage layer is usually built on cloud object storage, which offers elastic scaling, redundancy, and cost efficiency.

Popular options include:

- Amazon S3

- Azure Data Lake Storage

- Google Cloud Storage

However, there are also options for companies that want to keep their data on-premises, in a private cloud hosted in their own data centers.

For example, you could install MinIO in your own infrastructure. MinIO is open source and offers an Amazon S3-compatible API.

Key features of a storage layer include:

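- Durability and availability: Data is automatically stored redundantly, typically across multiple devices or availability zones. Cross-region replication is usually an opt-in setting.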

- Separation of storage and compute: You can scale compute independently based on demand.

- Data tiering: Allows cold storage for archival and hot storage for frequently accessed data.

Storing data in its original format allows you to keep all its potential for future processing and analysis.

Catalog and metadata management

Without metadata, a data lake becomes a data swamp, as it's impossible to find your data again and work with it efficiently.

The catalog and metadata layer brings structure to the chaos by keeping track of:

- Data schema

- Data location and partitioning

- Lineage and versioning

This layer ensures discoverability and usability by indexing all available datasets and enabling search and access control. Standard tools for this layer include:

- AWS Glue Data Catalog

- Apache Hive Metastore

- Apache Atlas

- DataHub

A sound metadata system is essential for collaboration, governance, and operational efficiency.
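As a rough mental model of what a catalog tracks, here is a toy in-memory version in Python. It is not the API of Glue, Hive Metastore, Atlas, or DataHub. The `DatasetEntry` and `Catalog` classes, the dataset names, and the bucket paths are hypothetical and exist only to show schema, location, partitioning, and lineage living side by side.

```python
from dataclasses import dataclass, field


@dataclass
class DatasetEntry:
    name: str
    location: str                                   # where the files live
    schema: dict                                    # column name -> type name
    partitions: list = field(default_factory=list)  # partition columns
    upstream: list = field(default_factory=list)    # lineage: parent datasets


class Catalog:
    def __init__(self):
        self._entries = {}

    def register(self, entry):
        self._entries[entry.name] = entry

    def search(self, column):
        """Discoverability: find all datasets that expose a given column."""
        return sorted(n for n, e in self._entries.items() if column in e.schema)

    def lineage(self, name):
        """Walk upstream dependencies back to the raw sources."""
        result = []
        for parent in self._entries[name].upstream:
            result.append(parent)
            result.extend(self.lineage(parent))
        return result


catalog = Catalog()
catalog.register(DatasetEntry(
    "raw_clicks", "s3://example-lake/raw/clicks/",
    {"user_id": "string", "url": "string"}, partitions=["dt"]))
catalog.register(DatasetEntry(
    "clean_clicks", "s3://example-lake/clean/clicks/",
    {"user_id": "string", "url": "string"}, partitions=["dt"],
    upstream=["raw_clicks"]))
catalog.register(DatasetEntry(
    "daily_sessions", "s3://example-lake/curated/sessions/",
    {"user_id": "string", "sessions": "int"}, upstream=["clean_clicks"]))

datasets_with_url = catalog.search("url")
sessions_lineage = catalog.lineage("daily_sessions")
```

Even this toy version answers the two questions a data swamp cannot: which datasets contain a given field, and where a curated dataset originally came from.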

Processing and analytics layer

This is the layer where raw data gets processed and turned into insights. The processing layer supports various operations, from fundamental transformations to advanced analytics and machine learning.

Typical workflows include:

- ETL/ELT pipelines for data cleaning and normalization

- SQL querying

- Machine learning pipelines

This layer often includes batch and real-time computing, enabling exploratory data analysis and production workloads.
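A minimal sketch of a cleaning and normalization step might look like the following. The sensor readings, field names, and the rule of dropping unparseable values are assumptions for illustration. A production pipeline would usually run on an engine such as Spark and route bad records to a quarantine location rather than discard them silently.

```python
from statistics import mean

# Raw readings as they might arrive in the lake: inconsistent IDs, strings
# instead of numbers, and one value that cannot be parsed.
raw_readings = [
    {"sensor": " A1 ", "temp_c": "21.5"},
    {"sensor": "a1", "temp_c": "22.5"},
    {"sensor": "B2", "temp_c": "n/a"},
    {"sensor": "B2", "temp_c": "18.0"},
]


def clean(record):
    """Normalize the sensor ID and convert the temperature; return None if unusable."""
    try:
        temperature = float(record["temp_c"])
    except ValueError:
        return None
    return {"sensor": record["sensor"].strip().upper(), "temp_c": temperature}


def run_pipeline(records):
    """Clean every record, drop the unusable ones, and aggregate per sensor."""
    cleaned = [row for row in map(clean, records) if row is not None]
    by_sensor = {}
    for row in cleaned:
        by_sensor.setdefault(row["sensor"], []).append(row["temp_c"])
    return {sensor: mean(values) for sensor, values in by_sensor.items()}


averages = run_pipeline(raw_readings)
```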

Security and governance

A robust data lake architecture must have built-in mechanisms to protect sensitive data and ensure compliance. This part is crucial because data lakes often contain large amounts of sensitive data, and a breach could seriously harm the business that owns it.

Essential features of that layer include:

- Identity and access management (IAM): Restrict who can see or modify data.

- Encryption: In-transit (TLS) and at-rest encryption.

- Data masking and anonymization: For handling personally identifiable information.

- Auditing and monitoring: For traceability and compliance.

Popular tools and services:

- AWS Lake Formation

- Apache Ranger

- Microsoft Purview (formerly Azure Purview)

Security often determines whether a data lake can be used in regulated industries like finance, healthcare, or the military.
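To illustrate the data masking and anonymization point, here is a small sketch using only Python's standard library. It assumes a keyed hash (HMAC-SHA256) for pseudonymizing identifiers and a simple partial mask for email addresses. The key, field names, and masking rules are hypothetical, and a real deployment would load the key from a secrets manager and follow its own compliance requirements.

```python
import hashlib
import hmac

# Hypothetical key for illustration only; never hard-code secrets in practice.
SECRET_KEY = b"replace-with-a-managed-secret"


def pseudonymize(value, key=SECRET_KEY):
    """Replace an identifier with a keyed hash so records stay joinable
    without exposing the original value."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def mask_email(email):
    """Keep the first character and the domain, hide the rest of the local part."""
    local, _, domain = email.partition("@")
    return f"{local[:1]}***@{domain}"


def mask_record(record, email_fields=("email",), id_fields=("customer_id",)):
    """Return a masked copy of the record; the input is left untouched."""
    masked = dict(record)
    for name in email_fields:
        if name in masked:
            masked[name] = mask_email(masked[name])
    for name in id_fields:
        if name in masked:
            masked[name] = pseudonymize(str(masked[name]))
    return masked


original = {"customer_id": 1001, "email": "jane.doe@example.com", "plan": "pro"}
masked = mask_record(original)
```

Because the hash is keyed and deterministic, the same customer maps to the same token across datasets, which keeps joins possible for analytics.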

Common Use Cases for Data Lakes

Data lakes are well-suited for use cases where scale, flexibility, and diverse data types are essential. They serve as the foundation for modern data-driven operations and applications across industries.

In this section, we’ll explore some of the most common and impactful use cases where data lakes play a central role.

Big data analytics

One of the most well-known uses of data lakes is enabling large-scale data analytics.

Because data lakes can store data in its raw form, organizations no longer need to worry about cleaning or structuring every dataset before analysis. This is ideal when collecting logs, sensor data, or clickstreams, which are too large or fast-changing for traditional systems.

Data lakes make it possible to:

- Analyze historical data alongside real-time data

- Run custom queries on petabyte-scale datasets

- Enable cross-departmental analytics with a single source of truth

Tools like Amazon Athena or Google BigQuery can query data directly in the lake without requiring complex transformations or moving the data elsewhere.

Machine learning and AI

Data lakes are a perfect fit for machine learning and AI workflows.

They provide the ideal environment for:

- Storing training datasets in multiple formats (e.g., CSV, Parquet, images, etc.)

- Keeping raw and, therefore, more comprehensive data, allowing for more flexibility in exploring, experimenting, and iterating on ML models

- Powering automated ML pipelines that iterate over massive volumes of data

Instead of limiting models to pre-cleaned data from a warehouse, data scientists can access rich, raw data to experiment more freely and iterate faster. This is especially useful in domains like natural language processing (NLP), computer vision, and time-series forecasting.

If you want an example of how the Databricks Lakehouse solution can be used for AI, I recommend reading A Comprehensive Guide to Databricks Lakehouse AI For Data Scientists.

Centralized data archiving

Many organizations use data lakes for long-term storage and archiving.

Thanks to the low cost of cloud object storage, it’s economically feasible to store years of operational data that may not be used daily but still needs to be accessible for compliance and audit purposes, trend analysis, forecasting, or training future ML models.

Most cloud object storage solutions allow you to tier your storage to archive rarely accessed data while keeping the lake lean and efficient.
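The tiering logic itself is simple and can be sketched as an age-based rule. The thresholds (30 and 365 days), the tier names, and the object keys below are assumptions for illustration. In practice you would express such rules as lifecycle policies in your storage service rather than in application code.

```python
from datetime import date


def choose_tier(last_accessed, today, cold_after_days=30, archive_after_days=365):
    """Pick a storage tier from the number of days since the last access."""
    age_days = (today - last_accessed).days
    if age_days >= archive_after_days:
        return "archive"
    if age_days >= cold_after_days:
        return "cold"
    return "hot"


today = date(2024, 6, 1)
objects = {
    "raw/orders/dt=2024-05-20/part-0000.json": date(2024, 5, 20),
    "raw/orders/dt=2023-12-01/part-0000.json": date(2023, 12, 1),
    "raw/orders/dt=2015-01-01/part-0000.json": date(2015, 1, 1),
}

# Map every object key to the tier it should live in today.
plan = {key: choose_tier(accessed, today) for key, accessed in objects.items()}
```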

Data science experimentation

Data lakes empower data scientists to explore raw data without constraints.

Whether you're building a quick prototype, testing a new hypothesis, or running exploratory data analysis (EDA), the lake gives you flexible access to:

- Raw unstructured data (like PDFs, emails, text files)

- Semi-structured formats (like JSON, XML, or logs)

- Massive datasets that wouldn’t fit into a traditional DB

This experimentation layer is essential for innovation. Data lakes let you explore datasets in new ways without submitting tickets to the data engineering team or waiting for a cleaned version in the warehouse.

Benefits and Challenges of Data Lakes

While data lakes provide powerful capabilities for modern data workflows, they also come with their own challenges. Understanding both sides is essential before adopting a data lake architecture. In this section, I’ll discuss the main benefits, common pitfalls, and how to overcome them.

Benefits of data lakes

The most significant advantage of a data lake is its flexibility. You’re not bound to a specific schema or data model from the beginning, which is excellent when working with fast-changing or evolving datasets.

Here are some key benefits:

- Scalability: Data lakes are built on cloud-native storage that scales effortlessly, whether you’re storing gigabytes or petabytes.

- Support for all data types: Structured (SQL tables), semi-structured (JSON, XML), and unstructured (text, images, video) data can be stored in the same platform.

- Cost-efficiency: Cloud object storage (e.g., Amazon S3) is significantly cheaper than the storage used by traditional databases or warehouses.

- Decoupled architecture: Compute and storage are separated to scale analytics workloads independently of the data storage.

- Enhanced analytics: Data lakes store raw, unprocessed data and enable more comprehensive and flexible data analysis, including machine learning and predictive analytics.

- Improved decision-making: Data lakes can enable deeper and more comprehensive data analysis, leading to better-informed decisions across the organization.

- Elimination of data silos: Data lakes help break down data silos by consolidating data from various sources into a single, centralized repository.

In projects I’ve worked on, this centralization has reduced data silos and improved cross-team collaboration significantly.

Key challenges

Data lakes are powerful, yet some key challenges must be managed. Left unmanaged, they can quickly become disorganized and unusable, a state often called a data swamp.

Some common challenges include:

- Lack of structure: Without predefined schemas, data can be difficult to query, document, or govern.

- Data quality issues: Since data is ingested in raw form, poor-quality or incomplete data may go undetected until downstream.

- Metadata and discoverability gaps: Without a strong cataloging system, users may not know what data exists or how to use it.

- Complex governance: Ensuring access control, auditability, and compliance is harder in a flexible environment.

These issues can erode trust in the data lake and slow its adoption across the business.

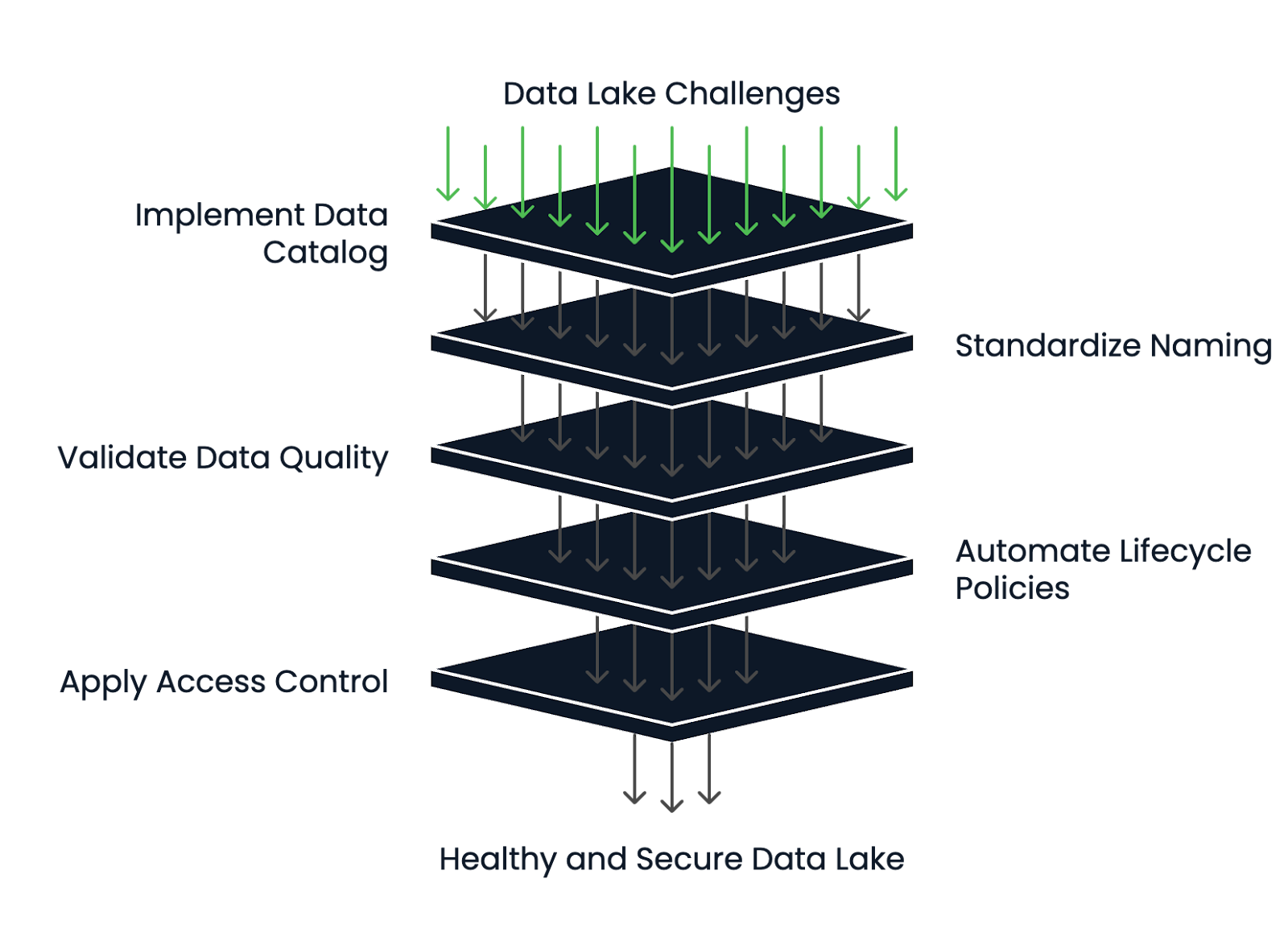

How to mitigate the challenges

The most successful data lakes have strong metadata and lifecycle management practices.

Here’s how to reduce risk and keep your data lake healthy:

- Implement a data catalog: Tools like AWS Glue or DataHub help maintain discoverability, schema definitions, and lineage tracking.

- Use standardized naming and folder structures: This creates consistency and reduces team confusion.

- Adopt data validation and profiling tools: Ensure quality during ingestion and periodically revalidate data.

- Automate lifecycle policies: Automatically move stale data to cold storage or delete it after a defined retention period.

- Apply robust access control and encryption: To protect sensitive data, use IAM policies, role-based access, and encryption at rest and in transit.

By designing a clean architecture from the beginning and establishing solid governance structures, you can make sure your data lake remains an asset and not a liability.
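As one example of these practices, validation and profiling at ingestion time can be sketched as follows. The rules (required `order_id` and `amount`, no negative amounts) and the sample records are hypothetical. Dedicated data quality tools offer far richer checks, but the basic idea of accepting, quarantining, and measuring stays the same.

```python
def validate(record, required=("order_id", "amount")):
    """Return a list of problems found in one record (empty list means valid)."""
    problems = []
    for name in required:
        if record.get(name) in (None, ""):
            problems.append(f"missing {name}")
    amount = record.get("amount")
    if isinstance(amount, (int, float)) and amount < 0:
        problems.append("negative amount")
    return problems


def profile(records, fields):
    """Compute the share of missing values per field as a simple quality metric."""
    total = len(records)
    if total == 0:
        return {name: 0.0 for name in fields}
    return {
        name: sum(1 for r in records if r.get(name) in (None, "")) / total
        for name in fields
    }


incoming = [
    {"order_id": "A1", "amount": 10.0},
    {"order_id": "", "amount": 5.0},
    {"order_id": "A3", "amount": -2.0},
    {"order_id": "A4"},
]

# Split the batch: clean records move on, the rest are kept aside with reasons.
accepted = [r for r in incoming if not validate(r)]
quarantined = [(r, validate(r)) for r in incoming if validate(r)]
missing_rates = profile(incoming, ["order_id", "amount"])
```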

Data lake health management framework. Image by Author.

Popular Data Lake Technologies

With the growing importance of data lakes in modern data architecture, a wide range of technologies has emerged to support them. These tools span cloud-native platforms, open-source ecosystems, and integrations with analytics solutions. In this section, I’ll walk you through the most popular tools.

Cloud-native solutions

Cloud providers offer fully managed services for building and maintaining data lakes. These platforms are often the starting point for teams looking for scale, durability, and tight integration with other cloud services.

Popular cloud-native solutions include:

- Amazon S3 + AWS Lake Formation: S3 provides object storage, while Lake Formation handles permissions, cataloging, and governance.

- Azure Data Lake Storage: Combines blob storage with HDFS-like capabilities.

- Google Cloud Storage (GCS): GCS is highly durable and integrates well with BigQuery and Vertex AI for analytics and ML workloads.

These services are optimized for elasticity, cost-efficiency, and security, making them ideal for enterprise-scale data lakes.

Open-source tools

Open-source technologies add flexibility and extensibility, especially for teams that want to avoid vendor lock-in or operate in hybrid/multi-cloud environments.

Popular solutions include:

- Apache Hadoop: One of the original data lake frameworks. While less common today for new projects, it laid the foundation for distributed data storage and processing.

- Delta Lake: Developed by Databricks, Delta Lake adds ACID transactions, versioning, and schema enforcement to cloud object storage.

- Apache Iceberg: Iceberg offers atomic operations, hidden partitioning, and time travel for querying historical data.

- Presto: A distributed SQL query engine that lets you run fast, interactive queries on data stored in S3, HDFS, or other sources without moving the data.

These tools often integrate into broader data platforms and are favored by engineering teams building custom or modular solutions.
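To build intuition for what table formats add on top of plain object storage, here is a deliberately simplified toy model of snapshot-based versioning and time travel. It is not the API or the on-disk format of Delta Lake or Apache Iceberg. The `VersionedTable` class is hypothetical and only captures the core idea: every commit publishes a new immutable snapshot, and readers can ask for an older one.

```python
class VersionedTable:
    """Toy append-only table that keeps every committed snapshot."""

    def __init__(self):
        self._snapshots = [[]]  # version 0 is the empty table

    def commit(self, new_rows):
        """Publish a new snapshot in one step, so readers never see a partial write.
        Returns the new version number."""
        snapshot = self._snapshots[-1] + list(new_rows)
        self._snapshots.append(snapshot)
        return len(self._snapshots) - 1

    def read(self, version=None):
        """Read the latest snapshot, or an older one for time travel."""
        index = len(self._snapshots) - 1 if version is None else version
        return list(self._snapshots[index])


table = VersionedTable()
v1 = table.commit([{"id": 1, "status": "new"}])
v2 = table.commit([{"id": 2, "status": "new"}])

latest_rows = table.read()
rows_at_v1 = table.read(version=v1)
```

Real table formats achieve the same effect with metadata and transaction logs that point at immutable data files, rather than by copying rows in memory.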

If you want to explore the differences between Apache Iceberg and Delta Lake in more detail, I recommend reading Apache Iceberg vs. Delta Lake: Features, Differences & Use Cases.

Integration with analytics platforms

A data lake becomes valuable when it is integrated with analytics and visualization tools that help extract insights and drive business decisions. Before settling on a data lake solution, check which analytics platforms it needs to integrate with.

Popular analytics platforms include:

- Databricks: Combines data lake storage with a collaborative workspace for data engineering, machine learning, and analytics. It supports Delta Lake out of the box.

- Snowflake: While traditionally a data warehouse, Snowflake can query data in cloud storage through external tables and load it continuously with Snowpipe, which lets it act as a hybrid lakehouse solution.

- Power BI, Tableau: These BI tools can connect to data lakes either directly or through query engines like Athena or BigQuery, enabling dashboarding and reporting on top of raw or semi-structured data.

If you want to learn more about Databricks, I recommend the Introduction to Databricks course.

When choosing technologies, it is essential to align them with your team’s expertise, your data strategy, and the level of flexibility or abstraction you need.

Conclusion

Data lakes empower organizations to build robust machine learning applications and real-time dashboards by offering a flexible, scalable foundation for raw, semi-structured, and unstructured data.

In this article, we explored what a data lake is, its key components, and when to use one. We discussed the benefits like flexibility and scalability, challenges such as data governance and the risk of a "data swamp," and how to avoid them. Finally, we covered popular technologies for building data lakes, including cloud platforms and open-source tools.

From my experience working across MLOps, AI infrastructure, and cloud-native data systems, I’ve seen how a well-architected data lake can transform how teams collaborate and innovate. But I’ve also seen how quickly a poorly governed data lake can become a liability that is hard to navigate.

If your organization handles complex, diverse, or large-scale data and wants to enable faster experimentation, richer analytics, or AI development, a data lake might be precisely what you need.

The Understanding Modern Data Architecture course is a great first stop if you want to learn more.


FAQs

What types of organizations benefit the most from using a data lake?

Organizations dealing with large-scale, diverse, or fast-evolving datasets, such as tech companies, financial institutions, and healthcare providers, benefit the most from data lakes.

How does a data lake support real-time analytics?

A data lake can ingest streaming data from IoT devices or applications in real time, enabling immediate analytics and faster decision-making through tools like Apache Kafka and Amazon Kinesis.

Can data lakes be deployed on-premises, or are they cloud-only?

While cloud-based data lakes are common, it’s possible to deploy on-premises data lakes using solutions like Hadoop Distributed File System (HDFS) or MinIO for S3-compatible object storage.

What is a data swamp, and how can it be avoided?

A data swamp occurs when a data lake becomes disorganized and unusable due to poor metadata management and governance. Strong cataloging, documentation, and governance policies help prevent this.

How do data lakes enable machine learning development?

By storing raw and diverse data types, data lakes allow data scientists to experiment more freely, create richer training datasets, and iterate faster on machine learning models.

Are there specific compliance concerns with data lakes?

Yes, storing unstructured and sensitive data in raw formats requires strict encryption, access control, auditing, and compliance frameworks to meet regulations like GDPR and HIPAA.

How does schema-on-read impact data analysis in a data lake?

Schema-on-read allows users to apply different schemas at query time, offering flexibility but also requiring careful data discovery and interpretation for accurate analysis.

What is the difference between a data lake and a data lakehouse?

A data lakehouse combines the flexibility of a data lake with the management and transactional capabilities of a data warehouse, offering the best of both worlds for analytics and AI.

How much does it typically cost to maintain a cloud-based data lake?

Costs vary depending on storage volume, data transfer, and access frequency, but cloud object storage services (like Amazon S3) are typically cheaper per GB than traditional databases.

What skills are needed to build and manage a data lake successfully?

Key skills include cloud infrastructure management, data engineering, security and compliance expertise, metadata management, and experience with big data tools like Spark or Presto.

I am a Cloud Engineer with a strong Electrical Engineering, machine learning, and programming foundation. My career began in computer vision, focusing on image classification, before transitioning to MLOps and DataOps. I specialize in building MLOps platforms, supporting data scientists, and delivering Kubernetes-based solutions to streamline machine learning workflows.