Training 2 or more people?

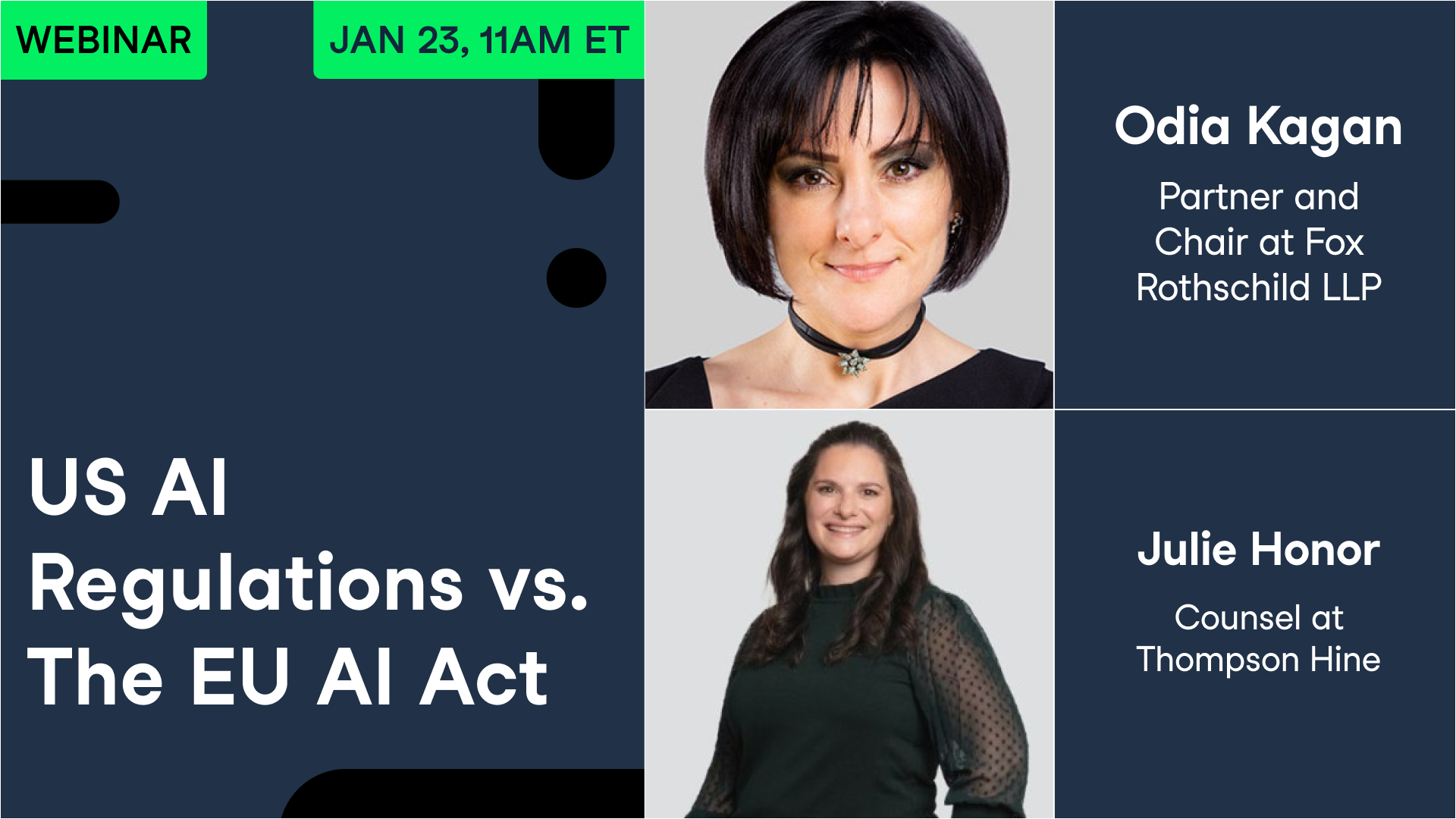

Get your team access to the full DataCamp library, with centralized reporting, assignments, projects and moreUS AI Regulations vs. The EU AI Act

January 2025

Related

webinar

Understanding Regulations for AI in the USA, the EU, and Around the World

In this session, two experts on AI governance explain which AI policies you need to be aware of, how governments are treating AI regulation, and how you need to deal with them.

webinar

The EU AI Act: How Will It Affect Your Business?

Dan Nechita, EU Director for the Transatlantic Policy Network, and Lily Li, Founder and Lawyer at Metaverse Law, explain what the legislation involves, how it will affect your business, and how to comply with the legislation.

webinar

EU AI Act Readiness: Meeting Your Organization's AI Literacy Requirements

Anandhi, CRAIO at Esdha, and Will, a Senior Associate at Ashurst, teach you how you and your organization can comply with the AI literacy clause of the EU AI Act.

webinar

Best Practices for Developing Generative AI Products

In this webinar, you'll learn about the most important business use cases for AI assistants, how to adopt and manage AI assistants, and how to ensure data privacy and security while using AI assistants.

webinar

Scaling AI Adoption in Financial Services

Explore regulatory AI initiatives in financial services and how to overcome them.