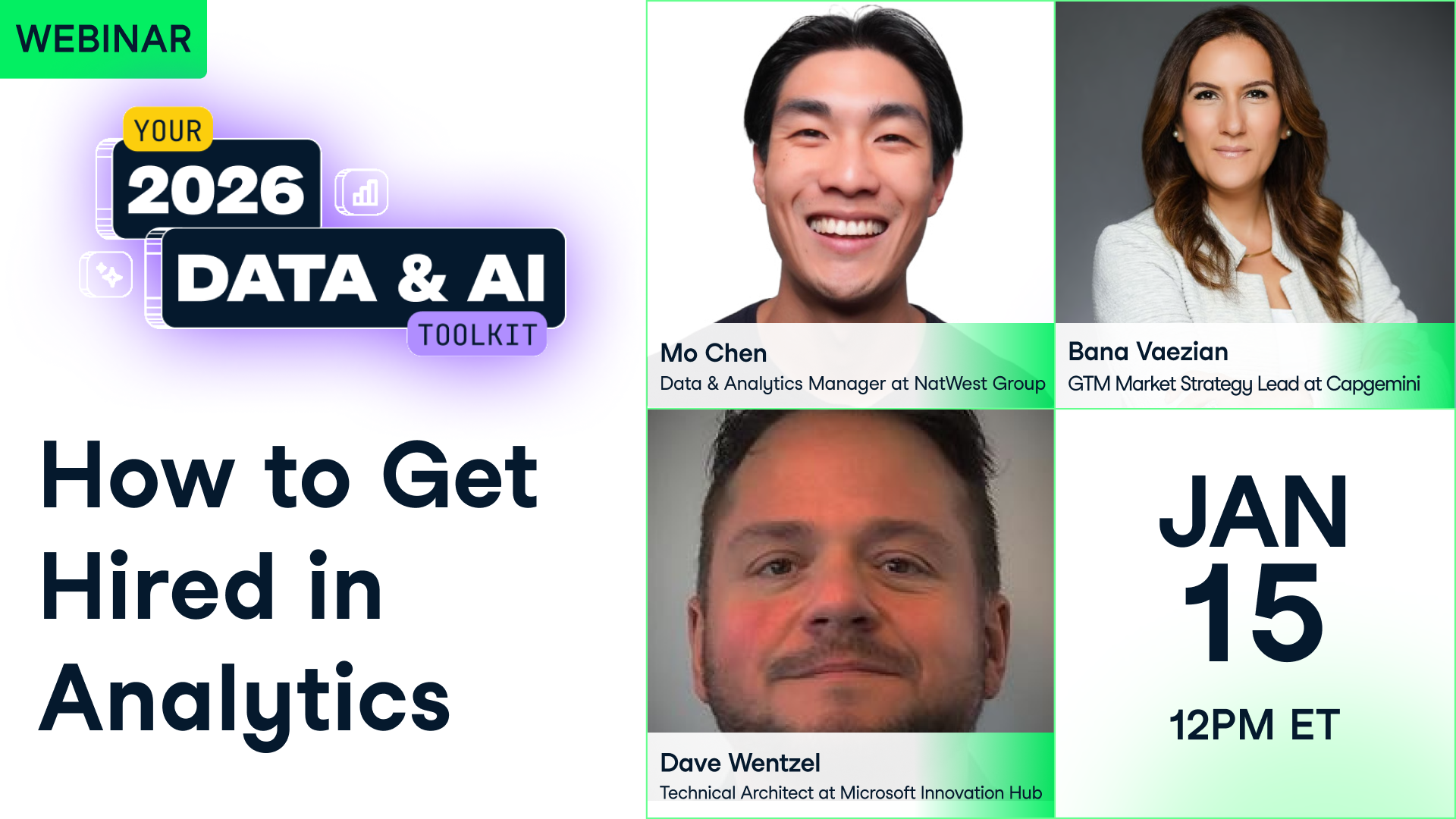

Palestrantes

Treinar 2 ou mais pessoas?

How to Get Hired in Analytics

January 2026

Summary

A practical session for aspiring data analysts and career changers who want a clear, up-to-date guide to what actually gets you hired in analytics—especially for entry-level roles.

Generative AI is automating the mechanics (drafting SQL, charts, even slide decks), so hiring managers are paying closer attention to higher-value work: framing the right business question, checking whether outputs are correct, and turning results into a recommendation someone can act on. Panelists emphasized that many teams already have plenty of dashboards but still struggle to make decisions, which makes business context and communication stand out in interviews. The discussion moved from core skills (Excel, SQL, a BI tool, plus data modeling basics) to a modern view of “experience,” where proof of problem-solving and learning speed can matter as much as years on a résumé. Portfolio guidance focused on a small set of end-to-end projects that read like a story and are easy for recruiters to scan, with clear problem statements, methods, and recommendations. On hiring processes, the panel outlined common stages (recruiter screen, hiring manager conversation, technical assessment, and panel rounds) and debated AI use in interviews, separating AI for prep from AI used to answer in real time. Finally, they shared direct job-search tactics: treat applications as a volume-and-iteration process, adjust your résumé when you’re not getting screens, and use targeted networking to reduce the odds of getting filtered out by applicant tracking systems (ATS).

Key Takeaways:

- AI raises the bar: analysts are increasingly valued as “validators and interpreters,” not only builders of queries and charts.

- Dashboards are secondary to decisions; the core job is turning data into insights and actions tied to real business levers.

- Focus your toolset on fundamentals (Excel, SQL, one BI tool) plus data modeling and strong data-quality instincts.

- A strong portfolio is 2–3 end-to-end projects presented as a narrative—not a pile of code—so recruiters can grasp impact fast.

- Hiring is both process and probability: expect multi-stage interviews, seek feedback, and use networking to get past the ATS.

In-Depth Notes

1) How AI Is Changing the Analyst’s Value—and the Hiring Signal

Generative AI is not only adding convenience; it is changing what employers consider “real” analytical skill. Bana Vaezian described a world where tasks that once took up large parts of an analyst’s day—writing queries, generating charts, summarizing results—are increasingly automated. In practice, that means the advantage is shifting earlier in the work: problem framing and decision support. An analyst who can ask the right question, clarify what the business is trying to decide, and verify AI output will often come across as more advanced than someone who can only produce a dashboard on request.

Mo Chen put the shift in blunt operational terms: “companies are drowning in dashboards. And are starving for insights and actions.” The line resonates because it matches what many teams see day to day: reporting grows, but decisions and follow-through don’t improve. That is why the panel repeatedly returned to context and judgment—understanding how the business makes money, anticipating how stakeholders will interpret results, and explaining trade-offs. The analyst becomes a quality-check layer, not only for data pipelines and metrics but also for AI-generated narratives that can quietly repeat bias, hide uncertainty, or build on flawed data.

Dave Wentzel framed the new expectation as a combination of design thinking and critical thinking—skills that show up when the “answer” is not a report, but a recommendation. He offered an example that hiring managers recognize immediately: a conversation about marketing spend where the real work is evaluating assumptions (what changed, what didn’t, what else could explain the pattern), not simply calculating ROI. In interviews, AI can help with syntax; it cannot replace reasoning about whether a conclusion is justified.

The message for job seekers is straightforward: listing “ChatGPT” or “prompting” as a skill is not enough. What stands out is showing how you use AI to move faster while still checking definitions, data quality, and implications. If you want the full nuance—including how the panel separates “automation” from “authority”—the on-demand session is worth watching closely.

2) The Modern Skills Stack: Fundamentals, Data Modeling, and Learnability

Technical requirements for analyst roles remain surprisingly stable at the foundation. Mo’s shortlist (spreadsheets, SQL, and one BI tool) reflects what many hiring managers still expect on day one, especially at larger companies where Excel remains the default tool for quick analysis. His recommendation leaned toward Power BI as a practical choice given its broad adoption and fewer licensing headaches, though the larger point was to avoid obsessing over tools. “Don’t get too hung up on the delivery type,” Mo said, arguing that dashboards, tables, and one-off reports are simply formats; what matters is whether the output supports a specific decision.

Bana added a skill that is often missing from entry-level prep: data modeling basics. Understanding relationships, cardinality, and how data is structured improves everything downstream—SQL joins, cleaning logic, and even your ability to spot when a metric is being double-counted. In hiring conversations, this can be a quiet differentiator because it signals you understand how the data is built, not only how to click through a BI tool.
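To make the double-counting point concrete, here is a minimal Python sketch, assuming a hypothetical `orders` table (one row per order) joined to a hypothetical `order_items` table (many rows per order). The helper names `inner_join` and `max_matches_per_key` are illustrative, not something discussed in the session. Summing an order-level total after a one-to-many join inflates it, and a simple cardinality check catches the problem before the metric is reported.

```python
# Hypothetical data: one row per order, and many item rows per order.
orders = [
    {"order_id": 1, "order_total": 100.0},
    {"order_id": 2, "order_total": 50.0},
]

order_items = [
    {"order_id": 1, "sku": "A"},
    {"order_id": 1, "sku": "B"},
    {"order_id": 2, "sku": "C"},
]


def inner_join(left, right, key):
    """Naive inner join: one merged row per matching (left, right) pair."""
    return [
        {**left_row, **right_row}
        for left_row in left
        for right_row in right
        if left_row[key] == right_row[key]
    ]


def max_matches_per_key(rows, key):
    """Largest number of rows sharing one key value; >1 means a 'many' side."""
    counts = {}
    for row in rows:
        counts[row[key]] = counts.get(row[key], 0) + 1
    return max(counts.values(), default=0)


joined = inner_join(orders, order_items, "order_id")

true_revenue = sum(o["order_total"] for o in orders)
# Order 1 matches two item rows, so its total is counted twice here.
inflated_revenue = sum(r["order_total"] for r in joined)

# Cardinality check before aggregating: if the item side has several rows per
# order, collapse back to one value per order_id before summing.
if max_matches_per_key(order_items, "order_id") > 1:
    deduped_revenue = sum({r["order_id"]: r["order_total"] for r in joined}.values())
else:
    deduped_revenue = inflated_revenue

print(true_revenue, inflated_revenue, deduped_revenue)
```

The same trap appears in SQL when an order-level column is summed after joining to a line-item table; aggregating before the join, or checking row counts per key, avoids it.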

Just as important, the panel elevated “learnability” from a nice-to-have to a requirement in an AI-assisted workplace. Mo described how the time to become effective has dropped: what once took months can now take weeks or days if you have the right foundation and know how to use AI to learn faster. That does not mean fundamentals are optional; it means memorizing syntax is less valuable than solving problems under changing constraints.

Dave reinforced this by de-emphasizing tool trivia in interviews. The details of left vs. right joins, or where to place a filter clause, are increasingly searchable—or generated—on demand. What persists is the discipline of exploratory analysis: checking duplicates and nulls, looking at distributions, validating edge cases, and challenging assumptions before you present results. In other words, success looks like good judgment early, not polish at the end. The webinar’s concrete examples—advertising ROI, forecasting, executive disagreements over definitions—add context that is hard to capture in a checklist, and they’re worth hearing in full.
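The exploratory checks Dave listed can be sketched in a few lines of standard-library Python. This is a minimal illustration, assuming data already loaded as a list of dictionaries with `None` for missing values; the `profile` function, the column names, and the sample rows are hypothetical. It reports duplicate keys, null counts, a rough distribution summary, and one edge case (negative values) worth questioning before presenting results.

```python
from statistics import mean, median

# Hypothetical rows, e.g. parsed from a CSV export; None marks a missing value.
rows = [
    {"customer_id": 1, "spend": 120.0},
    {"customer_id": 2, "spend": None},
    {"customer_id": 2, "spend": 80.0},
    {"customer_id": 3, "spend": 95.0},
    {"customer_id": 4, "spend": -10.0},
]


def profile(rows, key, numeric_col):
    """Quick pre-analysis checks: duplicate keys, nulls, distribution, edge cases."""
    seen, duplicate_keys = set(), set()
    for row in rows:
        k = row[key]
        if k in seen:
            duplicate_keys.add(k)
        seen.add(k)

    columns = sorted({col for row in rows for col in row})
    null_counts = {col: sum(1 for row in rows if row.get(col) is None) for col in columns}

    values = [row[numeric_col] for row in rows if row.get(numeric_col) is not None]
    return {
        "row_count": len(rows),
        "duplicate_keys": sorted(duplicate_keys),
        "null_counts": null_counts,
        "min": min(values) if values else None,
        "max": max(values) if values else None,
        "mean": mean(values) if values else None,
        "median": median(values) if values else None,
        # Edge case to question: negative spend may be a refund or a data error.
        "negative_values": sum(1 for v in values if v < 0),
    }


report = profile(rows, "customer_id", "spend")
for name, value in report.items():
    print(f"{name}: {value}")
```

Libraries such as pandas offer equivalents (for example `duplicated`, `isna`, and `describe`), but the point the panel made is the habit of running these checks, not the specific tool.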

3) Portfolios That Get Opened: End-to-End Projects, Narrative, and Real Data

The portfolio discussion was less about design and more about audience. Mo’s central warning was that a portfolio built only for other analysts can fail at the first step. Recruiters are unlikely to read “1,000 lines of Python,” so a portfolio that is basically a GitHub list of repos may signal effort to engineers while communicating little to the person deciding whether you move forward. “When you think your GitHub page is a portfolio, you could not be more wrong,” he said, pushing candidates to present work as a story: the question, the approach, the output, and the recommended action.

The panel converged on a simple scope: two or three end-to-end projects. “End-to-end” here means going beyond the analysis itself: finding or collecting data, cleaning it, modeling it, analyzing it, visualizing it (when that helps), and then writing down what the results mean for a decision-maker. Mo suggested formats that reduce friction for nontechnical readers, such as Notion pages, Medium-style writeups, or even a clean Google Doc, so long as the narrative is quick to understand. The goal is not to hide technical ability; it is to show business thinking without forcing the reader to dig for it.

Dave offered a complementary view: GitHub can still help, but only when it captures your thinking, trade-offs, and decisions—not “half baked Python code.” He suggested that candidates earn “additional points” by showing how they handle ambiguity and disagreement: metric definitions that leaders interpret differently, or analyses where there is no single correct answer. That kind of documentation signals maturity because it shows you can work in the messy reality where analytics often lives.

On data sources, the panel noted common options (Kaggle, public datasets, data.gov) but encouraged originality. Mo’s example—building a dashboard from his own paddle-boarding metrics—illustrates a key idea: uniqueness can signal initiative. A familiar dataset can show competence; an uncommon one can show curiosity, ownership, and comfort with imperfect data. The session contains more tactical advice on structuring these projects so they pass a quick recruiter scan and still hold up for technical reviewers—another reason to watch the full recording.

4) Hiring Reality: Interviews, the ATS, Networking, and the “Numbers Game”

On hiring processes, Bana described a familiar funnel: recruiter/HR screening (logistics and baseline fit), hiring-manager interview (communication, collaboration, and how you solve problems), technical assessment (SQL/Python, data modeling, case studies), and then a panel round with cross-functional stakeholders. The emphasis, however, was not on “passing tests” but on showing you can work with people who have different goals and constraints. Early-career candidates often underestimate how much of an entry-level data analyst job is alignment work—defining metrics, clarifying requirements, and building trust in conclusions.

The panel also debated AI use during interviews. Dave’s view was pragmatic: if you will use AI on the job, banning it in interviews risks testing an outdated version of the role. Bana drew a sharper line, warning that using AI to generate real-time verbal answers is usually obvious and can damage trust. Mo focused the debate on technical assessments, arguing that forbidding AI can be as outdated as forbidding search engines—especially when many teams now code with AI-assisted tools by default. The practical takeaway: use AI heavily to prepare (study SQL patterns, practice cases, tighten explanations), be transparent when appropriate, and expect interviewers to probe reasoning that can’t be handed off to a tool.

Experience barriers came up repeatedly, and the panel’s advice was to “create relevance” where you are—especially if you’re applying for entry-level data analyst roles with no formal analytics title. Mo’s résumé example was pointed: if you run a coffee shop, don’t sell cappuccino skills—sell inventory optimization, waste reduction, staffing patterns, and demand trends. Dave echoed the same idea: show an analytical way of thinking applied to real constraints, even if your job title was not “data analyst.”

Finally, the job search itself: “It’s a numbers game,” Mo said, citing a rough 2–3% interview-to-application rate (about two or three interviews per hundred applications). The implication is practical: track your funnel, adjust your résumé if you’re not getting recruiter screens (especially if ATS filtering is likely), and treat rejections as feedback. Bana added a direct workaround to ATS filtering: use LinkedIn to connect with recruiters, hiring managers, and internal employees so your application has a human path in. Dave’s closing advice was to ask for feedback in a way that makes a real response more likely, since that conversation can sometimes change outcomes.
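Treating the search as a funnel is simple arithmetic. The sketch below, assuming hypothetical stage names and counts, computes stage-to-stage conversion rates and how many applications it would take to expect a target number of interviews at the 2–3% rate Mo cited. The functions `conversion_rates` and `applications_needed` are illustrative helpers, not a tool discussed in the session.

```python
import math

# Hypothetical funnel: stage names and counts are illustrative only.
my_funnel = [
    ("applications", 120),
    ("recruiter screens", 6),
    ("hiring-manager interviews", 3),
]


def conversion_rates(funnel):
    """Stage-to-stage conversion rates for an ordered list of (stage, count) pairs."""
    rates = {}
    for (prev_stage, prev_count), (stage, count) in zip(funnel, funnel[1:]):
        rates[f"{prev_stage} -> {stage}"] = count / prev_count if prev_count else 0.0
    return rates


def applications_needed(target_interviews, interview_rate_percent):
    """Applications needed to expect `target_interviews` at a given percent rate."""
    return math.ceil(target_interviews * 100 / interview_rate_percent)


for step, rate in conversion_rates(my_funnel).items():
    print(f"{step}: {rate:.1%}")

# Expected applications for about 5 interviews at the low and high end of 2-3%.
print(applications_needed(5, 2), applications_needed(5, 3))
```

If the first conversion rate (applications to recruiter screens) is the weak one, that is the signal the panel described for revisiting your résumé.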
